Introduction

The rise of endpoint protection and the growing use of mobile operating systems have made it harder to target corporate users with phishing payloads designed to execute code on their endpoint device. Credential capture campaigns offer an alternative: they leverage remote working solutions such as VPNs or Desktop Gateways to gain access to a target network through a legitimate access point.

A credential capture campaign typically starts with a convincing email or SMS that contains a link to a web page that encourages the user to enter their username, password and any other information that may be useful, for example a two-factor authentication token value.

When constructing a credential capture phishing campaign, success depends on passing both the technical controls in place and the user’s own scrutiny. This usually requires:

- A valid SSL certificate

- A categorised domain

- A trusted URL

- Believable content

- A properly terminated user journey

- A place to receive and process captured credentials

Simply registering a new domain and then emailing a link to it is likely to result in a failed phishing campaign. Even if the email reaches the target user, a well-configured corporate proxy is likely to block the newly registered domain because it does not yet have a category associated with it.

Cloud-based application suites such as Office 365 offer the ability to author and share rich content on a trusted domain. This blog post will explain how trusted Microsoft cloud infrastructure can be used to host a credential capture campaign.

Creating the Content

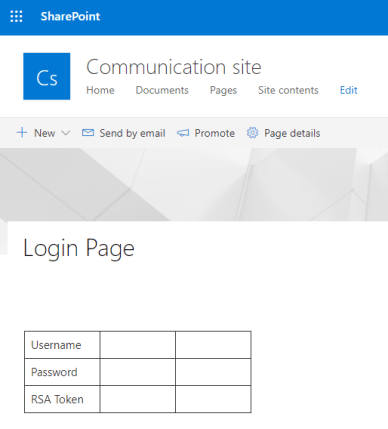

A vanilla SharePoint page looks far from convincing enough to fool a target into entering their credentials, with only the inner page content being customisable:

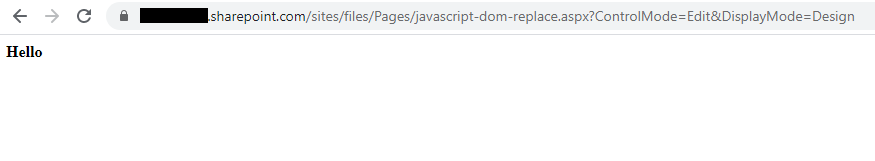

The ability to provide snippets of code to customise the look and feel of a SharePoint page appears to be in the process of being phased out, at least through the standard user interface provided via Office 365. However, experimenting with the different site types shows that JavaScript can still be included in legacy SharePoint Wiki pages, allowing customisation of both appearance and function. Given that JavaScript has control of the entire DOM (Document Object Model), something like the snippet of code below would override any existing page content and print the word ‘Hello’:

document.body.innerHTML=("Hello")

A quick test shows that this works in the sharepoint.com environment:

The page loads all of the original content in the background, but the JavaScript rewrites the content to change the appearance. Because sharepoint.com content is hosted on Microsoft domains, the valid SSL certificate, domain categorisation and trusted URL are all taken care of, which should make the content accessible via the majority of corporate proxies.

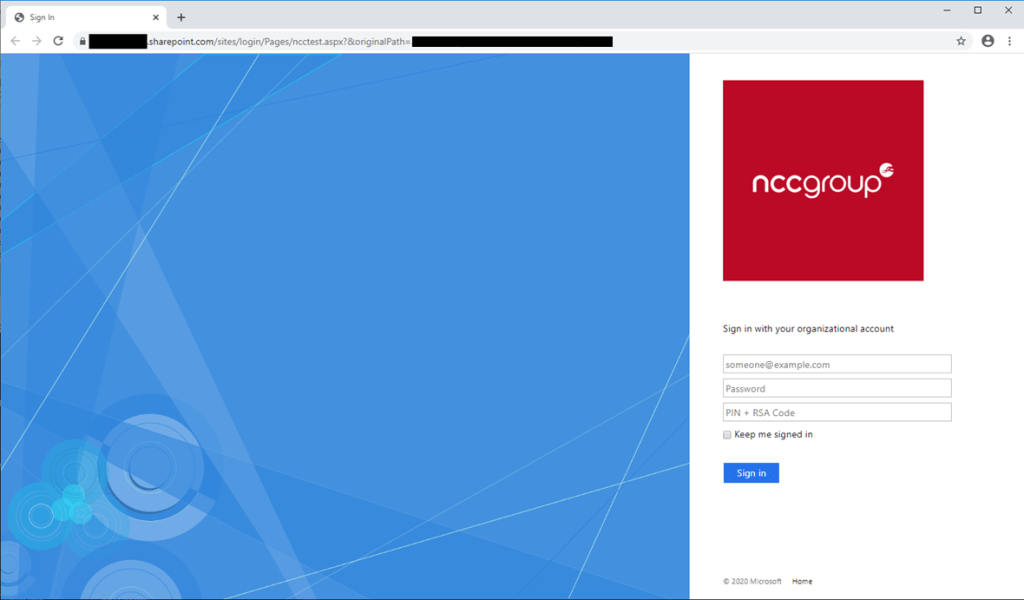

At this point it’s possible to customise the look and feel of the page by creating an HTML page, base64 encoding it and using it to set the innerHTML value in the DOM. Base64 encoding any images ensures that there is no reliance on external resources. An example page can be seen below:

This covers off the believable content requirement and can be extended to create multiple pages, forming a user journey that ultimately ends in a tangible conclusion. The page can be made accessible outside of the Office tenant by creating a shared link, set to ‘accessible by anyone’. An example of an accessible link can be seen below, where ‘phisher’ is the company name used when creating the Office tenant:

https://phisher.sharepoint.com/:u:/s/login/BTBpB3lAbwFIm11hnFO00L4B2VEqJG9ApoJyqmDrPYE0mw?e=1Y4A6G

In order to actually capture passwords, the ‘Sign in’ button must send the user’s input somewhere that can be accessed by the attacker. This is easily achieved by having the form post to any other domain; however, the issues of valid SSL certificates and domain reputation then resurface.

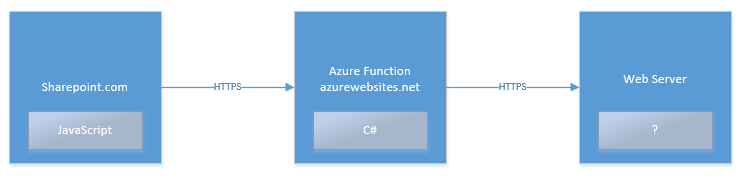

As JavaScript can be used to send the data, the destination domain does not need to be inherently trusted by the end user, because the interactions will not be visible to them. This makes it possible to use Azure Functions, which can be created via the Azure Portal and are hosted on an azurewebsites.net subdomain. A simple C# forwarder can be created and hosted as an Azure Function, allowing the captured credentials to be sent to any server, for example an AWS hosted VPS. With the correct CORS headers set, the Function can accept data from any origin, provided the URL has the correct authentication code. An example of a URL that may be used to POST data to an Azure Function can be seen below, where ‘phisher’ is the Function name and ‘Analytics’ is the name of the HTTP trigger:

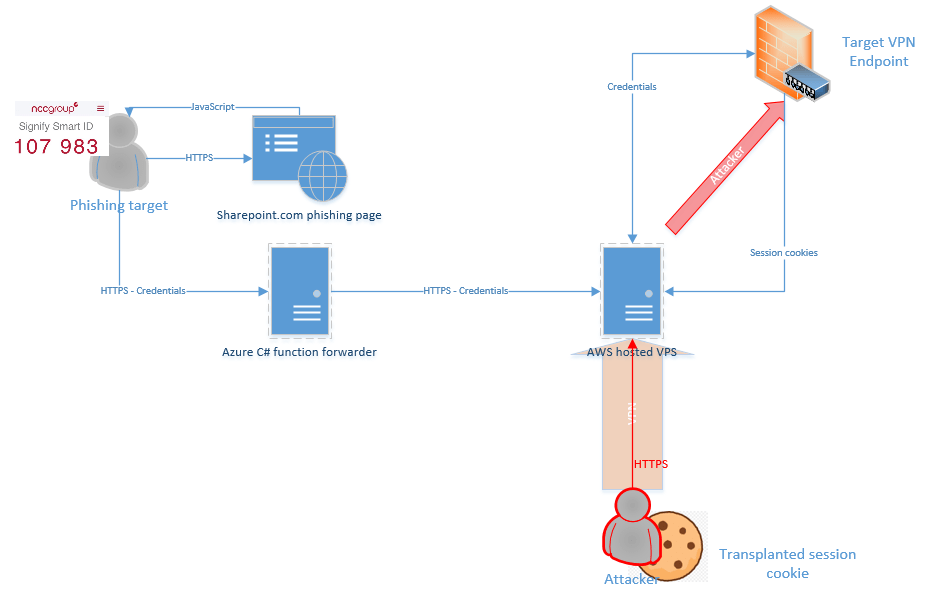

The flow chart below shows the interactions and associated code languages so far. Note that PHP is shown as the language used for the final stage, but any other server-side scripting language could be used instead:

Once the data has been forwarded, the dependency on Azure or Office hosted content ends and the data can be transferred to a server that is more configurable. As the final server in the chain is never visible to the phishing targets, configuring a domain or SSL certificate is not necessarily required. In fact, in some instances, not having a domain associated with an IP address can reduce the chances of attribution. Code on this server can log the credentials to a file, automatically relay them to a target and alert the owner of the server that credentials have been captured.

Whilst capturing credentials is useful, the majority of Internet facing authentication portals require a short-lived two-factor authentication token. As the web server component of the attack chain is fully customisable, it can be used to relay the captured credentials to the target system and gain an authenticated session within the lifespan of the two-factor token value. Logging the web server’s interactions with the target endpoint allows the session to be resumed by transplanting any received session cookies to an appropriate browser. The final attack chain can be seen below:

Note that in the diagram above, the attacker relays session cookies via a VPN session on the virtual private server. This is necessary to avoid the termination of the VPN session due to a change in source IP address.

Given the ability to host JavaScript on a sharepoint.com subdomain, this also presents an opportunity to serve files using HTML smuggling techniques [1], either as part of the user login journey, or as a standalone payload delivery campaign.

The creation of content that is running in several different environments can be time consuming and prone to error. The process was automated internally at NCC Group in order to quickly demonstrate the use of SharePoint in this way.

Detection and Protection

Typical email filtering and proxy categorisation may not detect sharepoint.com subdomains as malicious and users are likely to be familiar with the domain. However, there are some steps that can be taken to reduce the success of this and many other types of credential relaying attacks.

Enable two-factor authentication

Whilst this blog post has shown that access can still be gained to systems protected by two-factor authentication, enabling it on all Internet facing endpoints significantly raises the technical bar to do so. Where U2F is supported, this can defeat credential relay attacks.
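
The reason origin-bound authenticators resist relaying can be illustrated with a minimal sketch of one server-side check. It uses WebAuthn, the successor to U2F, where the browser records the origin that requested the assertion in the signed client data. The function name, the `EXPECTED_ORIGIN` value and the sample hostnames are assumptions made for illustration, and a real relying party would also verify the authenticator's signature and the other fields required by the specification, normally through a maintained library.

```python
import base64
import json

# Hypothetical origin of the genuine remote access portal.
EXPECTED_ORIGIN = "https://vpn.example.com"


def b64url_decode(value: str) -> bytes:
    """Decode base64url text that may have had its padding stripped."""
    return base64.urlsafe_b64decode(value + "=" * (-len(value) % 4))


def client_data_is_acceptable(client_data_json: bytes, issued_challenge: bytes) -> bool:
    """Check the type, challenge and origin fields of WebAuthn client data.

    This covers only the origin-binding part of verification; signature
    checks are deliberately left out of the sketch.
    """
    try:
        data = json.loads(client_data_json)
        challenge = b64url_decode(data["challenge"])
    except (ValueError, KeyError, TypeError):
        # Malformed JSON, a missing field or bad base64 is rejected outright.
        return False
    return (
        data.get("type") == "webauthn.get"
        and challenge == issued_challenge
        and data.get("origin") == EXPECTED_ORIGIN
    )
```

An assertion produced while the user is on a sharepoint.com page carries that page's origin, so a check like this fails even if the username and password were entered correctly.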

Whitelist your own SharePoint subdomain

Blocking access to subdomains of sharepoint.com that are not commonly used by your company may not be a viable option but would certainly be effective. It should be noted though that any domain which allows users to host JavaScript could be leveraged in the same way.
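
As a rough illustration of the allowlisting logic, the sketch below decides whether a requested URL points at a sharepoint.com subdomain other than the organisation's own. The tenant names in `ALLOWED_TENANTS` and the function name are hypothetical; in practice this rule would be expressed in the proxy's own policy language rather than in Python.

```python
from urllib.parse import urlsplit

# Hypothetical tenant hostnames belonging to the organisation.
ALLOWED_TENANTS = {"contoso.sharepoint.com", "contoso-my.sharepoint.com"}


def should_block(url: str) -> bool:
    """Return True for sharepoint.com hosts that are not on the allowlist."""
    host = (urlsplit(url).hostname or "").lower().rstrip(".")
    # Match the bare domain and true subdomains only, so that a host such as
    # notsharepoint.com is not caught by a naive suffix comparison.
    is_sharepoint = host == "sharepoint.com" or host.endswith(".sharepoint.com")
    return is_sharepoint and host not in ALLOWED_TENANTS
```

Matching on the parsed hostname rather than on the raw URL string avoids being misled by sharepoint.com appearing in a path or query string.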

Client certificates for authentication to strategic endpoints

Requiring mutual TLS authentication means that even with captured credentials, authentication is not possible. Care should be taken to implement this consistently across all Internet facing authentication endpoints.
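
A minimal sketch of what requiring a client certificate looks like at the TLS layer, using Python's standard `ssl` module. The function name and file path parameters are assumptions; the paths are optional here only so that the context can be constructed without certificate files, and a real deployment would always supply them (or configure the equivalent setting on the VPN or gateway appliance).

```python
import ssl
from typing import Optional


def build_mtls_server_context(
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
    client_ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Build a server-side TLS context that rejects clients without a certificate."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    # CERT_REQUIRED makes the handshake fail unless the client presents a
    # certificate that chains to one of the loaded CA certificates.
    context.verify_mode = ssl.CERT_REQUIRED
    if cert_file:
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if client_ca_file:
        # Trust only the organisation's own issuing CA for client certificates.
        context.load_verify_locations(cafile=client_ca_file)
    return context
```

Because the handshake fails before any login form is reached, a relayed username, password and token value are of no use to a server that does not hold an issued client certificate.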

Block authentication to endpoints from cloud based sources

Virtual private servers offer a level of anonymity and the ability to quickly switch IP addresses. Blocking authentication attempts from common providers such as AWS, DigitalOcean and Azure may help to prevent credentials from being relayed. Equally, blocking authentication from known Tor nodes or VPN endpoints should reduce exposure without impacting legitimate connections to remote working solutions.
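
The source address check could be sketched as follows, using Python's standard `ipaddress` module. The networks listed are placeholders taken from the address blocks reserved for documentation, not real provider ranges, and the function name is hypothetical. A working deployment would populate the list from the ranges that the cloud providers publish and refresh it regularly.

```python
import ipaddress

# Placeholder networks from the reserved documentation blocks; replace with
# the ranges published by the providers that should be blocked.
BLOCKED_NETWORKS = [
    ipaddress.ip_network(cidr)
    for cidr in ("192.0.2.0/24", "198.51.100.0/24", "2001:db8::/32")
]


def is_blocked_source(address: str) -> bool:
    """Return True if an authentication attempt from this address should be refused."""
    try:
        ip = ipaddress.ip_address(address)
    except ValueError:
        # An unparseable source address is treated as blocked (fail closed).
        return True
    return any(
        ip.version == network.version and ip in network
        for network in BLOCKED_NETWORKS
    )
```

Applying the check at the authentication endpoint, rather than to all traffic, keeps the impact on legitimate users of cloud-hosted services to a minimum.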

Enable host checkers on remote working solutions

Many providers of remote working solutions provide the ability to perform checks on the connecting host via a plugin or agent before allowing the connection. Whilst these are not fool-proof, when configured to require more than just the presence of an anti-virus product, they can limit the use of an unauthorised session.

Footnote

We disclosed this research to MSRC, who were grateful for the opportunity to review and comment. They concluded that there is no obvious mitigation to the techniques described above.

References