NCC Group is offering a new fully Managed Detection and Response (MDR) service for our customers in Azure. This blog post gives a behind-the-scenes view of some of the automated processes involved in setting up new environments and managing custom analytics for each customer, including details about our scripting and our automated build and release pipelines, which are deployed as infrastructure-as-code.

If you are:

- a current or potential customer interested in how we manage our releases

- just starting your journey into onboarding Sentinel for large multi-tenant environments

- looking to enhance existing deployment mechanisms

- interested in a way to manage multi-tenant deployments with varying set-ups

…you have come to the right place!

Background

Sentinel is Microsoft’s new Security Information and Event Management solution hosted entirely on Azure.

NCC Group has been providing MDR services for a number of years using Splunk and we are now adding Sentinel to the list.

The benefit of Sentinel over the Splunk offering is that you can leverage existing Azure licenses, and all data is collected in, and visible from, your own Azure tenants.

The only bits we keep on our side are the custom analytics and data enrichment functions we write for analysing the data.

What you might learn

The solution covered in this article provides a possible way to create an enterprise scale solution that, once implemented, gives the following benefits:

- Management of large numbers of tenants with differing configurations and analytics

- Minimal manual steps for onboarding new tenants

- Development and source control of new custom analytics

- Management of multiple tenants within one Sentinel instance

- Zero downtime / parallel deployments of new analytics

- Custom web portal to manage onboarding, analytics creation, baselining, and the configuration of each individual tenant’s connectors and enabled analytics

The main components

Azure Sentinel

Sentinel is Microsoft’s cloud-native SIEM (Security Information and Event Management) solution. It can gather a large number of log and event sources and interrogate them in real time, using built-in and custom KQL queries to identify and respond to threats automatically.

Lighthouse

“Azure Lighthouse enables multi-tenant management with scalability, higher automation, and enhanced governance across resources.”

In essence, it allows a master tenant direct access to one or many sub or customer tenants without the need to switch directory or create custom solutions.

Lighthouse is used in this solution to connect to Log Analytics workspaces in additional tenants and view them within the same instance of Sentinel in our “master tenant”.

Log Analytics Workspaces

In this scenario, Log Analytics workspaces are where the data from the sources connected to Sentinel ends up. We connect workspaces from multiple tenants via Lighthouse to allow cross-tenant analysis.

Azure DevOps (ADO)

Azure DevOps provides the core functionality for this solution: its CI/CD pipelines (using YAML rather than Classic pipelines) and its built-in Git repos. The approach will, of course, also work if you decide to use a different system.

App Service

To allow easy management of configurations, onboarding and analytics development / baselining, we host a small web application written in Blazor, so that JSON config files don’t have to be changed manually. The app also pulls in MITRE and other additional data to enrich analytics.

Technologies breakdown:

In order to create and manage the required services, we need to make use of Infrastructure as Code (IaC), scripting, and automated build and release pipelines, as well as have a way to develop a management portal.

The technologies we ended up using for this were:

- ARM Templates for general resource deployments and some of the connectors

- PowerShell, using the Microsoft Az and Sentinel modules and Roberto Rodriguez’s Azure-Sentinel2Go

- API calls for baselining and connectors not available through PowerShell modules

- Blazor for configuration portal development

- C# for API backend Azure function development

- YAML Azure DevOps Pipelines

Phase 1: Tenant Onboarding

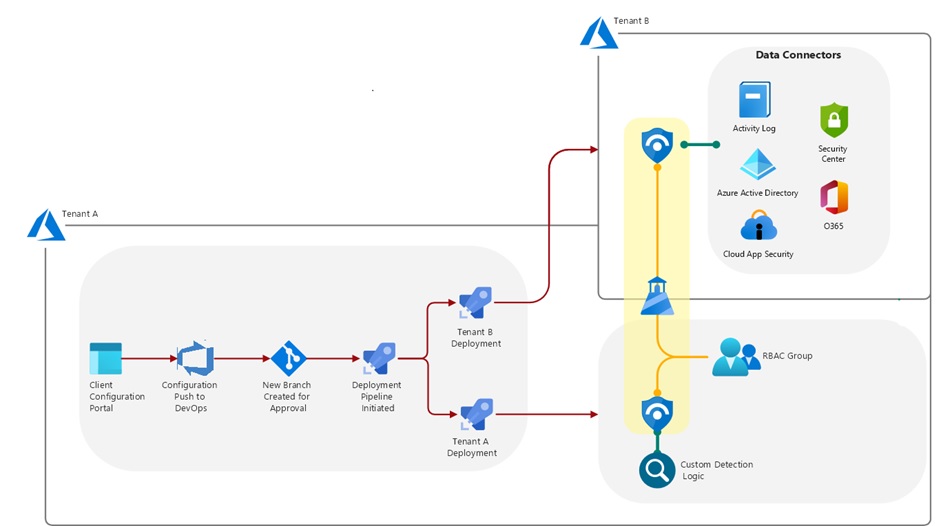

Let’s step through this diagram.

We have a portal that lets us configure a new client and push the configuration into a new branch in source control. This gives us an approval step, ensuring configs are peer reviewed before going into production, after which a deployment pipeline is triggered.

The pipeline triggers two tenant deployments.

Tenant A is our “Master tenant”, which holds the main Sentinel instance, all custom analytics, alerts and playbooks, and connects via Lighthouse to all “Sub tenants” (Tenant B).

For large organisations, we would create a 1:1 mapping for each sub tenant by deploying additional workspaces that then point to the individual tenants. This has the benefit of keeping the analytics centralised, protecting intellectual property. You could, however, deploy them directly into the actual workspaces once Lighthouse is set up, or have one workspace that queries all the others.

Tenant B and all other additional tenants have their own instance of Sentinel with all required connectors enabled, and need to have the Lighthouse connection initiated from their side.

Pre-requisites:

To be able to deploy to either of the tenants we need Service connections for Azure DevOps to be in place.

“Sub tenant” deployment:

Some of the Sentinel connectors need pretty extreme permissions to be enabled. For example, to configure the Active Directory connector properly we need the Global Administrator and Security Administrator roles, as well as root scope Owner permissions on the tenant.

In most cases, once the initial set-up of the “sub tenant” is done, there will rarely be a need to add connectors or deploy to that tenant, as all analytics are created on the “Master tenant”. I would strongly suggest either disabling or completely removing the Tenant B service connection after setup. If you are planning to change connectors regularly and are happy with the risk of leaving this service principal in place, the steps below will allow you to do this.

A sample script for setting up the “Sub tenant” service principal:

```powershell
$rgName = 'resourceGroupName' ## set this to the resource group containing the Sentinel Log Analytics workspace

Connect-AzAccount
$cont = Get-AzContext
Connect-AzureAD -TenantId $cont.Tenant.Id

$sp = New-AzADServicePrincipal -DisplayName ADOServicePrincipalName

# Give the new principal time to replicate through Azure AD before assigning roles
Start-Sleep -Seconds 20

# Root scope Owner: fails silently if the person running the script lacks elevated access at '/'
$assignment = New-AzRoleAssignment -RoleDefinitionName Owner -Scope '/' -ServicePrincipalName $sp.ApplicationId -ErrorAction SilentlyContinue

New-AzRoleAssignment -RoleDefinitionName Owner -Scope "/subscriptions/$($cont.Subscription.Id)/resourceGroups/$($rgName)" -ServicePrincipalName $sp.ApplicationId
New-AzRoleAssignment -RoleDefinitionName Owner -Scope "/subscriptions/$($cont.Subscription.Id)" -ServicePrincipalName $sp.ApplicationId

# Convert the generated secret to plain text for the ADO service connection, then free the unmanaged copy
$BSTR = [System.Runtime.InteropServices.Marshal]::SecureStringToBSTR($sp.Secret)
$UnsecureSecret = [System.Runtime.InteropServices.Marshal]::PtrToStringBSTR($BSTR)
[System.Runtime.InteropServices.Marshal]::ZeroFreeBSTR($BSTR)

$roles = Get-AzureADDirectoryRole | Where-Object {$_.DisplayName -in ("Global Administrator","Security Administrator")}
foreach($role in $roles)
{
    Add-AzureADDirectoryRoleMember -ObjectId $role.ObjectId -RefObjectId $sp.Id
}

# True only if the root scope assignment above succeeded
$activeDirectorySettingsPermissions = ($null -ne $assignment)

$sp | Select-Object @{Name = 'Secret'; Expression = {$UnsecureSecret}}, @{Name = 'tenant'; Expression = {$cont.Tenant.Id}}, @{Name = 'Active Directory Settings Permissions'; Expression = {$activeDirectorySettingsPermissions}}, @{Name = 'sub'; Expression = {$cont.Subscription.Id}}, ApplicationId, Id, DisplayName
```

This script will:

- create a new Service Principal,

- set the Global Administrator and Security Administrator roles for the principal,

- attempt to grant Owner at root scope, and also grant Owner at subscription scope as a fallback, since that is the minimum required to connect Lighthouse if the root scope permissions cannot be set.

The end of the script prints out the details required to set this up as a Service Connection in Azure DevOps. You could, of course, go on to add the Service Connection directly to Azure DevOps if the person running the script has access to both, but that was not feasible for my requirements.

For Tenant A we have two options.
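Converting a SecureString through a BSTR means the unmanaged copy of the secret has to be released with ZeroFreeBSTR afterwards. An alternative is to let NetworkCredential perform the conversion, and on PowerShell 7 ConvertFrom-SecureString -AsPlainText does the same job. This is a minimal sketch, not what our pipeline uses; the function name ConvertFrom-SecureStringToPlainText is our own.

```powershell
# Sketch: recover plain text from a SecureString without manual BSTR handling.
# ConvertFrom-SecureStringToPlainText is a hypothetical helper name.
function ConvertFrom-SecureStringToPlainText {
    param(
        [Parameter(Mandatory)]
        [System.Security.SecureString]$SecureString
    )
    # NetworkCredential(string userName, SecureString password) unmarshals the secret internally;
    # the empty user name is irrelevant, only the Password property is read.
    return [System.Net.NetworkCredential]::new('', $SecureString).Password
}

# Usage with a throwaway value (never log real secrets in a pipeline):
$secure = ConvertTo-SecureString -String 'not-a-real-secret' -AsPlainText -Force
$plain = ConvertFrom-SecureStringToPlainText -SecureString $secure
```

In the service principal script, the three Marshal lines could then become a single call passing $sp.Secret.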

Option 1: One Active Directory group to rule them all

This is something you might do if you are setting things up for your own multi-tenant environments. If you have multiple environments to manage, with different analysts needing access to each, I would strongly suggest using option 2.

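Create an empty Azure Active Directory group, either manually or using the snippet below, and note the tenant ID:

```powershell
# Create the group and output its object ID
(New-AzADGroup -DisplayName 'GroupName' -MailNickname 'none').Id
# Output the ID of the tenant in the current context
(Get-AzContext).Tenant.Id
```

You will need both the group ID and the tenant ID in a moment, so don’t lose them just yet.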

Once the group is created, set up a Service Connection to your Master tenant from Azure DevOps (just use the normal connection wizard), then add its Service Principal to the newly created group, along with the users you want to have access to the Sentinel (Log Analytics) workspaces.

To match the additional steps in Option 2, create a variable group in Azure DevOps (Pipelines -> Library -> + Variable group), either with masked values entered directly or linked to a Key Vault, depending on your preference.

Whenever you create variable groups, make sure you restrict both their Security and Pipeline permissions so they can only be used by the appropriate pipelines and users.

Then add:

- AAdGroupID, set to your new group ID

- TenantID, set to the tenant ID of the master tenant

Then reference the variable group at the top of your YAML pipeline, within the job section:

```yaml
variables:
- group: YourGroupName
```
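Inside a pipeline script, Azure DevOps exposes non-secret variables, including those from a variable group, as environment variables with the name upper-cased and any '.' replaced by '_'. Secret (masked) variables are not exposed this way and have to be mapped explicitly through the task's env: section. The sketch below wraps that lookup; Get-PipelineVariable is a hypothetical helper, not an Azure DevOps cmdlet.

```powershell
# Sketch: read an Azure DevOps pipeline variable from inside a script step.
# Get-PipelineVariable is a hypothetical helper name.
function Get-PipelineVariable {
    param(
        [Parameter(Mandatory)][string]$Name
    )
    # Azure DevOps maps 'AAdGroupID' -> AADGROUPID and 'Build.BuildNumber' -> BUILD_BUILDNUMBER.
    $envName = ($Name -replace '\.', '_').ToUpperInvariant()
    $value = [System.Environment]::GetEnvironmentVariable($envName)
    if ([string]::IsNullOrEmpty($value)) {
        # Either the variable is missing, or it is a secret that was not mapped via env:.
        throw "Pipeline variable '$Name' (env:$envName) is not set."
    }
    return $value
}

# Usage inside a pipeline step that references the variable group:
# $groupId  = Get-PipelineVariable -Name 'AAdGroupID'
# $tenantId = Get-PipelineVariable -Name 'TenantID'
```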

Option 2: One group per “Sub tenant”

If you are managing customers, this is a much better way to handle things. You can assign different users to each group, ensuring only the right people have access to the environments they are meant to see.

However, to be able to do this you need a Service Connection with some additional rights in your “Master Tenant”, so your pipelines can automatically create customer specific AD groups and add the Service principal to them.

Set up a Service Connection from Azure DevOps to your Master Tenant, then find its Service Principal and give it the following permissions:

- Azure Active Directory Graph
  - Directory.ReadWrite.All

- Microsoft Graph
  - Directory.ReadWrite.All
  - PrivilegedAccess.ReadWrite.AzureADGroup

Then include the following script in your pipeline to create the group for each customer and add the service principal to it.

```powershell
param(
    [string]$AADgroupName,
    [string]$ADOServicePrincipalName
)

$group1 = Get-AzADGroup -DisplayName $AADgroupName -ErrorAction SilentlyContinue
if(-not $group1)
{
    # MailNickname is required when creating a group; a GUID keeps it unique
    $guid1 = (New-Guid).Guid
    $group1 = New-AzADGroup -DisplayName $AADgroupName -MailNickname $guid1 -Description $AADgroupName
    $spID = (Get-AzADServicePrincipal -DisplayName $ADOServicePrincipalName).Id
    Add-AzADGroupMember -MemberObjectId $spID -TargetGroupObjectId $group1.Id
}

$id = $group1.Id
# Use the tenant of the current context; Get-AzTenant would list every tenant the principal can see
$tenant = (Get-AzContext).Tenant.Id

# Publish the IDs as output variables for the Lighthouse step
Write-Host "##vso[task.setvariable variable=AAdGroupId;isOutput=true]$($id)"
Write-Host "##vso[task.setvariable variable=TenantId;isOutput=true]$($tenant)"
```

The Write-Host calls at the end of the script output the group and tenant IDs back to the pipeline, to be used when setting up Lighthouse later.
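Those lines are Azure DevOps logging commands of the form ##vso[task.setvariable variable=NAME;isOutput=true]VALUE, and the same pattern recurs in the naming convention script later on. A small formatter, sketched below under our own hypothetical name Format-VsoSetVariable, keeps the syntax in one place and refuses values containing line breaks, since a logging command must fit on a single output line. Output variables set this way are read in the same job as $(stepName.AAdGroupId), where stepName is the step's name: property.

```powershell
# Sketch: build an Azure DevOps task.setvariable logging command.
# Format-VsoSetVariable is a hypothetical helper name.
function Format-VsoSetVariable {
    param(
        [Parameter(Mandatory)][string]$Name,
        [Parameter(Mandatory)][AllowEmptyString()][string]$Value,
        [switch]$IsOutput,
        [switch]$IsSecret
    )
    # A line break would split the command across two output lines.
    if ($Value -match "[\r\n]") {
        throw "Value for '$Name' contains a line break."
    }
    $props = @("variable=$Name")
    if ($IsOutput) { $props += 'isOutput=true' }
    if ($IsSecret) { $props += 'isSecret=true' }
    return "##vso[task.setvariable $($props -join ';')]$Value"
}

# Usage:
# Write-Host (Format-VsoSetVariable -Name 'AAdGroupId' -Value $id -IsOutput)
```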

With the boring bits out of the way, let’s get into the core of things.

Client configurations

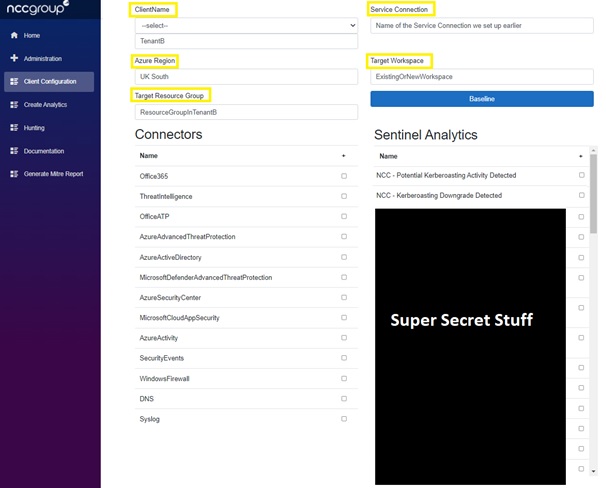

First, we need to create configuration files to be able to manage the connectors and analytics that get deployed for each “Sub tenant”.

You can of course do this manually, but I opted to go for a slightly more user-friendly approach with the Blazor App.

The main bits we need, highlighted in yellow above are:

- A name for the config

- The Azure DevOps Service Connection name for the target Sub tenant,

- Azure Region (if you are planning to deploy to multiple regions),

- Target Resource Group and Target Workspace: where the Log Analytics workspace of your target environment already exists or should be created.

Client config Schema:

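```csharp
using System.Collections.Generic;

// AlertReference is a hypothetical element type matching the {"Name": ...} entries in the sample output.
public class AlertReference
{
    public string Name { set; get; } = "";
}

public class ClientConfig
{
    public string ClientName { set; get; } = "";
    public string ServiceConnection { set; get; } = "";
    public List<AlertReference> SentinelAlerts { set; get; } = new List<AlertReference>();
    public List<AlertReference> SavedSearches { set; get; } = new List<AlertReference>();
    public string TargetWorkspaceName { set; get; } = "";
    public string TargetResourceGroup { set; get; } = "";
    public string Location { get; set; } = "UK South";
    // Comma-separated list of Sentinel connector kinds
    public string connectors { get; set; } = "";
}
```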

Sample Config output:

```json
{
  "ClientName": "TenantB",
  "ServiceConnection": "ServiceConnectionName",
  "SentinelAlerts": [
    { "Name": "ncc_t1558.003_kerberoasting" },
    { "Name": "ncc_t1558.003_kerberoasting_downgrade_v1a" }
  ],
  "SavedSearches": [],
  "TargetWorkspaceName": "targetworkspace",
  "TargetResourceGroup": "targetresourcegroup",
  "Location": "UK South",
  "connectors": "Office365,ThreatIntelligence,OfficeATP,AzureAdvancedThreatProtection,AzureActiveDirectory,MicrosoftDefenderAdvancedThreatProtection,AzureSecurityCenter,MicrosoftCloudAppSecurity,AzureActivity,SecurityEvents,WindowsFirewall,DNS,Syslog"
}
```
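Because configs can also be edited by hand, a quick sanity check before they are committed or deployed can catch mistakes early. The sketch below assumes the field names of the ClientConfig schema above; the specific rules (required fields, no blank or duplicate connectors, no duplicate alert names) are our own assumptions, and Test-ClientConfig is a hypothetical name.

```powershell
# Sketch: lightweight checks on a client config JSON string.
# Test-ClientConfig is a hypothetical helper; the rules are assumptions.
function Test-ClientConfig {
    param([Parameter(Mandatory)][string]$Json)

    $config = $Json | ConvertFrom-Json
    $problems = @()

    # Every deployment step needs these values.
    foreach ($field in 'ClientName', 'ServiceConnection', 'TargetWorkspaceName', 'TargetResourceGroup', 'Location') {
        if ([string]::IsNullOrWhiteSpace($config.$field)) { $problems += "Missing value for '$field'" }
    }

    # connectors is one comma-separated string: look for blank entries and duplicates.
    if (-not [string]::IsNullOrWhiteSpace($config.connectors)) {
        $connectors = @($config.connectors -split ',' | ForEach-Object { $_.Trim() })
        if ($connectors -contains '') { $problems += 'Blank entry in connectors' }
        foreach ($dupe in ($connectors | Where-Object { $_ } | Group-Object | Where-Object Count -gt 1)) {
            $problems += "Duplicate connector '$($dupe.Name)'"
        }
    }

    # Two entries with the same alert name would target the same analytic twice.
    $alertNames = @($config.SentinelAlerts | ForEach-Object { $_.Name })
    foreach ($dupe in ($alertNames | Group-Object | Where-Object Count -gt 1)) {
        $problems += "Duplicate alert '$($dupe.Name)'"
    }

    # The leading comma stops PowerShell unrolling an empty or single-item array.
    return ,$problems
}
```

An empty result means no problems were found; a pipeline step could throw if the count is non-zero.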

This data can then be pushed onto a storage account and imported into Git from a pipeline triggered through the app.

```yaml
pool:
  vmImage: 'windows-latest'

steps:
- checkout: self
  persistCredentials: true
  clean: true

- task: AzurePowerShell@5
  displayName: 'Export Configs'
  inputs:
    azureSubscription: 'Tenant A Service Connection name'
    ScriptType: 'FilePath'
    ScriptPath: '$(Build.SourcesDirectory)/Scripts/Exports/ConfigExport.ps1'
    ScriptArguments: '-resourceGroupName "StorageAccountResourceGroupName" -storageName "storageaccountname" -outPath "SentinelClientConfigs" -container "configs"'
    azurePowerShellVersion: 'LatestVersion'

- task: PowerShell@2
  displayName: 'Commit Changes to new Git Branch'
  inputs:
    filePath: '$(Build.SourcesDirectory)/Scripts/PipelineLogic/PushToGit.ps1'
    arguments: '-branchName conf$(Build.BuildNumber)'
```

Where ConfigExport.ps1 is:

```powershell
param(
    [string]$ResourceGroupName,
    [string]$storageName,
    [string]$outPath,
    [string]$container
)

$ctx = (Get-AzStorageAccount -ResourceGroupName $ResourceGroupName -Name $storageName).Context
$blobs = Get-AzStorageBlob -Container $container -Context $ctx

if(-not (Test-Path -Path $outPath))
{
    New-Item -ItemType Directory -Path $outPath -Force
}

# Empty the output folder so configs deleted from storage are also removed from Git
Get-ChildItem -Path $outPath -Recurse | Remove-Item -Force -Recurse

foreach($file in $blobs)
{
    Get-AzStorageBlobContent -Context $ctx -Container $container -Blob $file.Name -Destination "$($outPath)/$($file.Name)" -Force
}
```

And PushToGit.ps1:

```powershell
param(
    [string] $branchName
)

git --version
git switch -c $branchName
git add -A
git config user.email WhateverEmailYouWantToUse@Somewhere.com
git config user.name "Automated Build"
git commit -a -m "Committing rules to git"
git push --set-upstream -f origin $branchName
```

This will download the config files, create a new Branch in the Azure DevOps Git Repo and upload the files.

The branch can then be reviewed and merged. You could bypass this and check directly into the main branch if you want less manual intervention, but the manual review adds an extra layer of security to ensure no configs are accidentally / maliciously changed.
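The branch name is built from conf plus the build number. The default build number format gives values such as 20240101.1, which git accepts, but a custom build number format could contain characters that git rejects in ref names (spaces, colons and so on). The sketch below, under the hypothetical name ConvertTo-SafeBranchName, applies a simplified subset of the git check-ref-format rules before calling git switch -c.

```powershell
# Sketch: turn an arbitrary string into a branch name git accepts.
# ConvertTo-SafeBranchName is a hypothetical helper; it covers a simplified subset of git check-ref-format.
function ConvertTo-SafeBranchName {
    param([Parameter(Mandatory)][string]$Name)

    # Whitelist letters, digits, '.', '_', '/', '-'; anything else (spaces, ':', '~', '^', ...) becomes '-'.
    $safe = $Name -replace '[^A-Za-z0-9._/-]', '-'
    # git rejects '..' anywhere in a ref name.
    $safe = $safe -replace '\.{2,}', '.'
    # No path component may start with '.'.
    $safe = $safe -replace '/\.+', '/'
    # No empty path components ('//').
    $safe = $safe -replace '/{2,}', '/'
    # The name may not start or end with '/' or '.', nor end with '.lock'.
    $safe = $safe.Trim([char[]]'/.')
    while ($safe.EndsWith('.lock')) {
        $safe = $safe.Substring(0, $safe.Length - 5).TrimEnd([char[]]'/.')
    }
    if ([string]::IsNullOrEmpty($safe)) {
        throw "'$Name' does not contain any usable branch name characters."
    }
    return $safe
}

# Usage at the top of PushToGit.ps1:
# $branchName = ConvertTo-SafeBranchName -Name $branchName
```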

Creating Analytics

For analytics creation we have two options.

- Create Analytics in a Sentinel Test environment and export them to git. This will allow you to source control analytics as well as review any changes before allowing them into the main branch.

```powershell
param(
    [string]$resourceGroupName,
    [string]$workspaceName,
    [string]$outPath,
    [string]$module = 'Scripts/Modules/Remove-InvalidFileNameChars.ps1'
)

Import-Module $module
Install-Module -Name Az.Accounts -AllowClobber -Force
Install-Module -Name Az.SecurityInsights -AllowClobber -Force

$results = Get-AzSentinelAlertRule -WorkspaceName $workspaceName -ResourceGroupName $resourceGroupName
$results

$myExtension = ".json"
$output = @()

foreach($temp in $results){
    if($temp.DisplayName)
    {
        $resultName = $temp.DisplayName
        # Only rules whose query references the test workspace are exported
        if($temp.Query -and $temp.Query.Contains($workspaceName))
        {
            # Swap the test workspace name for a placeholder that is filled in at deployment time
            $temp.Query = $temp.Query -replace [regex]::Escape($workspaceName), '{{targetWorkspace}}'

            $myExportPath = Join-Path $outPath 'Alerts'
            if($temp.productFilter) { $myExportPath = Join-Path $myExportPath $temp.productFilter }
            if(-not (Test-Path -Path $myExportPath))
            {
                New-Item -ItemType Directory -Force -Path $myExportPath
            }

            # -Depth stops nested rule settings being truncated (the default depth is 2)
            $rule = $temp | ConvertTo-Json -Depth 10
            $resultName = Remove-InvalidFileNameChars -Name $resultName
            $filePath = Join-Path $myExportPath "$($resultName)$($myExtension)"
            $properties = [pscustomobject]@{DisplayName=($temp.DisplayName);RuleName=$resultName;Category=($temp.productFilter);Path=$myExportPath}
            $output += $properties
            $rule | Out-File $filePath -Force
        }
    }
}

# Summary of the exported rules
$output
```

Note the replacement of $workspaceName with '{{targetWorkspace}}'. This swaps the actual workspace used in our test environment, referenced as workspace('testworkspaceName'), for workspace('{{targetWorkspace}}'), so that we can substitute the actual “Sub tenant” workspace name when the analytic is deployed.

- Create your own analytics in a custom portal. For the Blazor portal, I found the Microsoft.Azure.Kusto.Language package very useful for validating the KQL queries.
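The export script imports Scripts/Modules/Remove-InvalidFileNameChars.ps1, which isn't shown in this post. A minimal stand-in is sketched below; the implementation is our assumption, only the function name and its -Name parameter come from the script. Note that [System.IO.Path]::GetInvalidFileNameChars() is OS-specific (Windows rejects many more characters than Linux), so '/' and '\' are added explicitly to keep exported file names portable across build agents.

```powershell
# Hypothetical stand-in for Scripts/Modules/Remove-InvalidFileNameChars.ps1.
function Remove-InvalidFileNameChars {
    param([Parameter(Mandatory)][string]$Name)

    # OS-specific invalid characters, plus both path separators for portability.
    $invalid = @([System.IO.Path]::GetInvalidFileNameChars()) + [char]'/' + [char]'\'
    # Keep only the characters that are valid in a file name.
    $kept = foreach ($c in $Name.ToCharArray()) {
        if ($invalid -notcontains $c) { $c }
    }
    return (-join $kept)
}
```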

The benefit of creating your own is the ability to enrich what goes into them.

You might, for example, want to add full MITRE details into the analytics to be able to easily generate a MITRE coverage report for the created analytics.

If you are this way inclined, when deploying the analytics, use a generic template from Sentinel and replace the required values before submitting it back to your target Sentinel.

```json
{
  "AlertRuleTemplateName": null,
  "DisplayName": "{DisplayPrefix}{DisplayName}",
  "Description": "{Description}",
  "Enabled": true,
  "LastModifiedUtc": "/Date(1619707105808)/",
  "Query": "{query}",
  "QueryFrequency": {
    "Ticks": 9000000000, "Days": 0, "Hours": 0, "Milliseconds": 0, "Minutes": 15, "Seconds": 0,
    "TotalDays": 0.010416666666666666, "TotalHours": 0.25, "TotalMilliseconds": 900000,
    "TotalMinutes": 15, "TotalSeconds": 900
  },
  "QueryPeriod": {
    "Ticks": 9000000000, "Days": 0, "Hours": 0, "Milliseconds": 0, "Minutes": 15, "Seconds": 0,
    "TotalDays": 0.010416666666666666, "TotalHours": 0.25, "TotalMilliseconds": 900000,
    "TotalMinutes": 15, "TotalSeconds": 900
  },
  "Severity": "{Severity}",
  "SuppressionDuration": {
    "Ticks": 180000000000, "Days": 0, "Hours": 5, "Milliseconds": 0, "Minutes": 0, "Seconds": 0,
    "TotalDays": 0.20833333333333331, "TotalHours": 5, "TotalMilliseconds": 18000000,
    "TotalMinutes": 300, "TotalSeconds": 18000
  },
  "SuppressionEnabled": false,
  "TriggerOperator": 0,
  "TriggerThreshold": 0,
  "Tactics": [
    "DefenseEvasion"
  ],
  "Id": "{alertRuleID}",
  "Name": "{alertRuleguid}",
  "Type": "Microsoft.SecurityInsights/alertRules",
  "Kind": "Scheduled"
}
```
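Replacing placeholders such as {query} with plain string substitution breaks the JSON as soon as a KQL query contains double quotes or line breaks. One way around this, sketched below, is to parse the template, set the properties, and let ConvertTo-Json handle the escaping. The helper names are hypothetical. If you send rules through the REST API rather than a cmdlet, durations such as queryFrequency are ISO 8601 strings (PT15M for the 15-minute TimeSpan above), which [System.Xml.XmlConvert]::ToString produces from a TimeSpan.

```powershell
# Sketch: fill the generic analytic template by editing the parsed JSON.
# New-AnalyticFromTemplate and ConvertTo-IsoDuration are hypothetical helper names.
function New-AnalyticFromTemplate {
    param(
        [Parameter(Mandatory)][string]$TemplateJson,
        # Property name -> value, e.g. @{ DisplayName = '...'; Query = '...' }
        [Parameter(Mandatory)][hashtable]$Values
    )
    $rule = $TemplateJson | ConvertFrom-Json
    foreach ($key in $Values.Keys) {
        # Refuse unknown keys so a typo doesn't silently leave a placeholder in place.
        if ($rule.PSObject.Properties.Name -notcontains $key) {
            throw "Template has no property '$key'"
        }
        $rule.$key = $Values[$key]
    }
    return $rule
}

# Convert a TimeSpan to an ISO 8601 duration string such as PT15M.
function ConvertTo-IsoDuration {
    param([Parameter(Mandatory)][TimeSpan]$Duration)
    return [System.Xml.XmlConvert]::ToString($Duration)
}
```

Usage: pass the template file's raw text and a hashtable of values, then serialise the result with ConvertTo-Json -Depth 10 before submitting it.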

Let’s Lighthouse this up!

Now that we have the service connections and initial client config in place, we can start building the onboarding pipeline for Tenant B.

As part of this we will cover:

- Generating consistent naming conventions

- Creating the Log Analytics workspace specified in the config and enabling Sentinel

- Setting up the Lighthouse connection to Tenant A

- Enabling the required Connectors in Sentinel

Consistent naming (optional)

There are a lot of different ways for getting naming conventions done.

I quite like to push a few parameters into a PowerShell script, then write the naming conventions back to the pipeline, so the convention script can be used in multiple pipelines to provide consistency and easy maintainability.

The below script generates variables for a select number of resources, then pushes them out to the pipeline.

```powershell
param(
    [string]$location,
    [string]$environment,
    [string]$customerIdentifier,
    [string]$instance
)

$location = $location.ToLower()
$environment = $environment.ToLower()
$customerIdentifier = $customerIdentifier.ToLower()
$instance = $instance.ToLower()

$conventions = New-Object -TypeName psobject
$conventions | Add-Member -MemberType NoteProperty -Name CustomerResourceGroup -Value "rg-$($location)-$($environment)-$($customerIdentifier)$($instance)"
$conventions | Add-Member -MemberType NoteProperty -Name LogAnalyticsWorkspace -Value "la-$($location)-$($environment)-$($customerIdentifier)$($instance)"
$conventions | Add-Member -MemberType NoteProperty -Name CustLogicAppPrefix -Value "wf-$($location)-$($environment)-$($customerIdentifier)-"
$conventions | Add-Member -MemberType NoteProperty -Name CustFunctionAppPrefix -Value "fa-$($location)-$($environment)-$($customerIdentifier)-"

# Publish each convention as an output variable, e.g. $(naming.LogAnalyticsWorkspace)
foreach($convention in $conventions.psobject.Properties | Select-Object -Property Name, Value)
{
    Write-Host "##vso[task.setvariable variable=$($convention.Name);isOutput=true]$($convention.Value)"
}
```

Sample usage:

```yaml
- task: PowerShell@2
  name: "naming"
  inputs:
    filePath: '$(Build.SourcesDirectory)/Scripts/PipelineLogic/GenerateNamingConvention.ps1'
    arguments: '-location "some Location" -environment "some environment" -customerIdentifier "some identifier" -instance "some instance"'

- task: AzurePowerShell@5
  displayName: 'Sample task using variables from naming task'
  inputs:
    azureSubscription: '$(ServiceConnection)'
    ScriptType: 'FilePath'
    ScriptPath: 'some script path'
    ScriptArguments: '-resourceGroup "$(naming.CustomerResourceGroup)" -workspaceName "$(naming.LogAnalyticsWorkspace)"'
    azurePowerShellVersion: 'LatestVersion'
    pwsh: true
```
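The naming script lowercases its inputs but does not strip spaces, so an argument such as "UK South" would produce names like la-uk south-..., which Azure rejects. A check before deployment catches this early. The sketch below encodes the documented rules as we understand them (Log Analytics workspace: 4 to 63 letters, digits or hyphens, starting and ending with a letter or digit; resource group: up to 90 letters, digits, '_', '(', ')', '-' or '.', not ending with '.'). Both function names are hypothetical.

```powershell
# Sketch: validate generated names against Azure naming rules.
# Test-LogAnalyticsWorkspaceName and Test-ResourceGroupName are hypothetical helper names.
function Test-LogAnalyticsWorkspaceName {
    param([Parameter(Mandatory)][string]$Name)
    # First and last characters alphanumeric, 2-61 letters/digits/hyphens in between => 4-63 total.
    return ($Name -match '^[A-Za-z0-9][A-Za-z0-9-]{2,61}[A-Za-z0-9]$')
}

function Test-ResourceGroupName {
    param([Parameter(Mandatory)][string]$Name)
    # Unicode letters and digits are allowed, plus '_', '(', ')', '-', '.'; no trailing '.'.
    return (($Name.Length -le 90) -and ($Name -match '^[\p{L}\p{Nd}_().-]+$') -and (-not $Name.EndsWith('.')))
}

# Usage in a later step:
# if (-not (Test-LogAnalyticsWorkspaceName -Name $workspaceName)) { throw "Invalid workspace name '$workspaceName'" }
```

Passing region short names such as uksouth to the naming script, or stripping whitespace inside it, avoids the problem at source.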

Configure the Log Analytics workspace in the sub tenant / enable Sentinel

You could do the following using ARM; however, in the actual solution I am doing a lot of additional tasks with the workspace that were much easier to achieve in script, hence my choice to script it instead.

Create new workspace if it doesn’t exist:

```powershell
param(
    [string]$resourceGroupName,
    [string]$workspaceName,
    [string]$Location,
    [string]$sku
)

# Create the resource group if it doesn't exist yet
$rg = Get-AzResourceGroup -Name $resourceGroupName -ErrorAction SilentlyContinue
if(-not $rg)
{
    New-AzResourceGroup -Name $resourceGroupName -Location $Location
}

# Create the workspace if it doesn't exist yet
$ws = Get-AzOperationalInsightsWorkspace -ResourceGroupName $resourceGroupName -Name $workspaceName -ErrorAction SilentlyContinue
if(-not $ws){
    $ws = New-AzOperationalInsightsWorkspace -Location $Location -Name $workspaceName -Sku $sku -ResourceGroupName $resourceGroupName -RetentionInDays 90
}
```

Check if Sentinel is already enabled for the Workspace and enable it if not:

```powershell
param(
    [string]$resourceGroupName,
    [string]$workspaceName
)

Install-Module AzSentinel -Scope CurrentUser -Force -AllowClobber
Import-Module AzSentinel

# Sentinel appears on the workspace as the SecurityInsights solution
$solutions = Get-AzOperationalInsightsIntelligencePack -ResourceGroupName $resourceGroupName -WorkspaceName $workspaceName -ErrorAction SilentlyContinue
if (($solutions | Where-Object Name -eq 'SecurityInsights').Enabled) {
    Write-Host "SecurityInsights solution is already enabled for workspace $($workspaceName)"
}
else {
    Set-AzSentinel -WorkspaceName $workspaceName -Confirm:$false
}
```

Setting up Lighthouse to the Master tenant

```powershell
param(
    [string]$customerConfig = '',
    [string]$templateFile = '',
    [string]$templateParameterFile = '',
    [string]$AadGroupID = '',
    [string]$tenantId = ''
)

$config = Get-Content -Path $customerConfig -Raw | ConvertFrom-Json

# Swap the {principalId} placeholder in the parameter file for the master tenant's Azure AD group ID
$parameters = (Get-Content -Path $templateParameterFile -Raw) -replace '{principalId}', $AadGroupID
Set-Content -Value $parameters -Path $templateParameterFile -Force

# Subscription-scope deployment; -rgName and -managedByTenantId override the values in the parameter file
New-AzDeployment -Name "Lighthouse" -Location "$($config.Location)" -TemplateFile $templateFile -TemplateParameterFile $templateParameterFile -rgName "$($config.TargetResourceGroup)" -managedByTenantId "$tenantId"
```

This script reads the config file we generated earlier to get the client (sub tenant) location and resource group, replaces the {principalId} placeholder in the parameter file with the Azure AD group ID we set up earlier, and then sets up Lighthouse with the ARM template and parameter file below.

Note that in the Lighthouse ARM template and parameter file, we are keeping the permissions to an absolute minimum, so users in the Azure AD group in the master tenant will only be able to interact with the permissions granted using the role definition IDs specified.

If you are setting up Lighthouse to manage additional parts of the sub tenant, you can add and change the role definition IDs to suit your needs.

Lighthouse.parameters.json

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentParameters.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "mspOfferName": {
      "value": "Enter Offering Name"
    },
    "mspOfferDescription": {
      "value": "Enter Description"
    },
    "managedByTenantId": {
      "value": "ID OF YOUR MASTER TENANT"
    },
    "authorizations": {
      "value": [
        {
          "principalId": "{principalId}",
          "roleDefinitionId": "ab8e14d6-4a74-4a29-9ba8-549422addade",
          "principalIdDisplayName": "Sentinel Contributor"
        },
        {
          "principalId": "{principalId}",
          "roleDefinitionId": "3e150937-b8fe-4cfb-8069-0eaf05ecd056",
          "principalIdDisplayName": "Sentinel Responder"
        },
        {
          "principalId": "{principalId}",
          "roleDefinitionId": "8d289c81-5878-46d4-8554-54e1e3d8b5cb",
          "principalIdDisplayName": "Sentinel Reader"
        },
        {
          "principalId": "{principalId}",
          "roleDefinitionId": "f4c81013-99ee-4d62-a7ee-b3f1f648599a",
          "principalIdDisplayName": "Sentinel Automation Contributor"
        }
      ]
    },
    "rgName": {
      "value": "RG-Sentinel"
    }
  }
}
```

Lighthouse.json

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2018-05-01/subscriptionDeploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "mspOfferName": { "type": "string" },
    "mspOfferDescription": { "type": "string" },
    "managedByTenantId": { "type": "string" },
    "authorizations": { "type": "array" },
    "rgName": { "type": "string" }
  },
  "variables": {
    "mspRegistrationName": "[guid(parameters('mspOfferName'))]",
    "mspAssignmentName": "[guid(parameters('rgName'))]"
  },
  "resources": [
    {
      "type": "Microsoft.ManagedServices/registrationDefinitions",
      "apiVersion": "2019-06-01",
      "name": "[variables('mspRegistrationName')]",
      "properties": {
        "registrationDefinitionName": "[parameters('mspOfferName')]",
        "description": "[parameters('mspOfferDescription')]",
        "managedByTenantId": "[parameters('managedByTenantId')]",
        "authorizations": "[parameters('authorizations')]"
      }
    },
    {
      "type": "Microsoft.Resources/deployments",
      "apiVersion": "2018-05-01",
      "name": "rgAssignment",
      "resourceGroup": "[parameters('rgName')]",
      "dependsOn": [
        "[resourceId('Microsoft.ManagedServices/registrationDefinitions/', variables('mspRegistrationName'))]"
      ],
      "properties": {
        "mode": "Incremental",
        "template": {
          "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
          "contentVersion": "1.0.0.0",
          "parameters": {},
          "resources": [
            {
              "type": "Microsoft.ManagedServices/registrationAssignments",
              "apiVersion": "2019-06-01",
              "name": "[variables('mspAssignmentName')]",
              "properties": {
                "registrationDefinitionId": "[resourceId('Microsoft.ManagedServices/registrationDefinitions/', variables('mspRegistrationName'))]"
              }
            }
          ]
        }
      }
    }
  ],
  "outputs": {
    "mspOfferName": {
      "type": "string",
      "value": "[concat('Managed by', ' ', parameters('mspOfferName'))]"
    },
    "authorizations": {
      "type": "array",
      "value": "[parameters('authorizations')]"
    }
  }
}
```
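The parameter file repeats the same principal for every role, and the deployment script rewrites it in place with a string replace. If you change roles often, generating the authorizations array from a role map and writing the result to a separate file keeps the committed template untouched. A sketch follows; both function names are hypothetical, while the role IDs are the four Sentinel built-in roles from the parameter file above.

```powershell
# Sketch: build the Lighthouse authorizations array from a role map.
# New-LighthouseAuthorization and Set-LighthouseAuthorizationParameter are hypothetical helper names.
function New-LighthouseAuthorization {
    param(
        [Parameter(Mandatory)][string]$PrincipalId,
        # Ordered map of display name -> built-in role definition ID
        [Parameter(Mandatory)][System.Collections.IDictionary]$Roles
    )
    foreach ($roleName in $Roles.Keys) {
        [pscustomobject]@{
            principalId            = $PrincipalId
            roleDefinitionId       = $Roles[$roleName]
            principalIdDisplayName = $roleName
        }
    }
}

function Set-LighthouseAuthorizationParameter {
    param(
        [Parameter(Mandatory)][string]$ParameterJson,
        [Parameter(Mandatory)][object[]]$Authorizations
    )
    $doc = $ParameterJson | ConvertFrom-Json
    $doc.parameters.authorizations.value = @($Authorizations)
    # The default -Depth of 2 would flatten the nested authorization objects into strings.
    return ($doc | ConvertTo-Json -Depth 10)
}

# The Sentinel roles from Lighthouse.parameters.json; add or remove entries to change access.
$sentinelRoles = [ordered]@{
    'Sentinel Contributor'            = 'ab8e14d6-4a74-4a29-9ba8-549422addade'
    'Sentinel Responder'              = '3e150937-b8fe-4cfb-8069-0eaf05ecd056'
    'Sentinel Reader'                 = '8d289c81-5878-46d4-8554-54e1e3d8b5cb'
    'Sentinel Automation Contributor' = 'f4c81013-99ee-4d62-a7ee-b3f1f648599a'
}

# Usage ($generatedParameterFile is a hypothetical output path):
# $auth = New-LighthouseAuthorization -PrincipalId $AadGroupID -Roles $sentinelRoles
# $json = Set-LighthouseAuthorizationParameter -ParameterJson (Get-Content $templateParameterFile -Raw) -Authorizations $auth
# Set-Content -Path $generatedParameterFile -Value $json
```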

Enable Connectors

At the time of creating this solution, a few connectors could still not be enabled via ARM, and I imagine this will remain the case for new connectors as they are added to Sentinel. So I will cover how to handle both deployment types, and how to find out what body to include in the POST requests to the API.

For most connectors you can use existing solutions such as:

Roberto Rodriguez’s Azure-Sentinel2Go

Or

GitHub – javiersoriano/sentinel-all-in-one

First, we install the required modules, get the list of connectors to enable for our client / sub tenant, and compare them to the list of connectors that are already enabled:

```powershell
param(
    [string]$templateLocation = '',
    [string]$customerConfig
)

$config = Get-Content -Path $customerConfig -Raw | ConvertFrom-Json
$connectors = $config.connectors
$resourceGroupName = $config.TargetResourceGroup
$WorkspaceName = $config.TargetWorkspaceName
Write-Host "Using connector template: $templateLocation"

Install-Module AzSentinel -Scope CurrentUser -Force

# Work out which configured connectors are not already enabled
$connectorList = $connectors -split ','
$rg = Get-AzResourceGroup -Name $resourceGroupName
$check = Get-AzSentinelDataConnector -WorkspaceName $WorkspaceName | Select-Object -ExpandProperty kind
$outputArray = $connectorList | Where-Object {$_ -notin $check}

$tenantId = (Get-AzContext).Tenant.Id
# Use the subscription in the current context; Get-AzSubscription returns every subscription the principal can see
$subscriptionId = (Get-AzContext).Subscription.Id
$Resource = "https://management.azure.com/"
```
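The comparison above splits the config string as-is, so a config written as "Office365, AzureActivity" (with a space after the comma) would produce " AzureActivity", which never matches an enabled connector kind and would be redeployed on every run. A slightly more defensive version is sketched below; Get-ConnectorsToEnable is a hypothetical helper that trims entries, drops blanks and removes duplicates case-insensitively.

```powershell
# Sketch: work out which configured connectors still need enabling.
# Get-ConnectorsToEnable is a hypothetical helper name.
function Get-ConnectorsToEnable {
    param(
        [Parameter(Mandatory)][AllowEmptyString()][string]$Configured,
        [string[]]$AlreadyEnabled = @()
    )
    # Case-insensitive set so 'office365' and 'Office365' count as the same connector.
    $seen = [System.Collections.Generic.HashSet[string]]::new([System.StringComparer]::OrdinalIgnoreCase)
    $wanted = foreach ($entry in ($Configured -split ',')) {
        $name = $entry.Trim()
        # Skip blanks and repeats; HashSet.Add returns $false for a duplicate.
        if ($name -and $seen.Add($name)) { $name }
    }
    if (-not $wanted) { return @() }
    # -notin is case-insensitive as well.
    return @($wanted | Where-Object { $_ -notin $AlreadyEnabled })
}

# Usage in place of the split/Where-Object lines:
# $outputArray = Get-ConnectorsToEnable -Configured $config.connectors -AlreadyEnabled $check
```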

Next, we attempt to deploy the connectors using the ARM templates from one of the above solutions.

```powershell
foreach($con in $outputArray)
{
    Write-Host "deploying $($con)"
    $out = $null
    $out = New-AzResourceGroupDeployment -ResourceGroupName "$($resourceGroupName)" -TemplateFile $templateLocation -dataConnectorsKind "$($con)" -workspaceName "$($WorkspaceName)" -securityCollectionTier "ALL" -tenantId "$($tenantId)" -subscriptionId "$($subscriptionId)" -mcasDiscoveryLogs $true -Location $rg.Location -ErrorAction SilentlyContinue

    # Because errors are silenced, $out can be $null if the deployment failed before it started
    if(-not $out -or $out.ProvisioningState -eq "Failed")
    {
        Write-Host '------------------------'
        Write-Host "Unable to deploy $($con)"
        $out | Format-Table
        Write-Host '------------------------'
        Write-Host "##vso[task.LogIssue type=warning;]Unable to deploy $($con) CorrelationId: $($out.CorrelationId)"
    }
    else{
        Write-Host "deployed $($con)"
    }
}
```

If the connector fails to deploy, we write a warning message to the pipeline.

This will work for most connectors and might be all you need for your requirements.

If a connector is not available this way (as was the case for the Active Directory Diagnostics connector when I created this), we need to use a different approach. Thanks to Roberto Rodriguez for the suggestion.

- Using your favourite Browser, go to an existing Sentinel instance that allows you to enable the connector you need.

- Go through the steps of enabling the connector, then, on the last step, open your browser's developer tools before submitting and look through the POST requests on the Network tab.

- There should be one containing the payload for the connector you just enabled with the correct settings and URL to post to.

- Now we can replicate this payload in PowerShell and enable them automatically.
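The captured request URL is absolute (https://management.azure.com/...), while Invoke-AzRestMethod -Path expects the path and query relative to the management endpoint. The sketch below, under the hypothetical name ConvertTo-ArmRequestPath, strips the host and swaps environment-specific values such as the workspace name using literal (non-regex) replacement. It assumes the request you captured is a direct call rather than a batched one.

```powershell
# Sketch: turn a URL captured from the browser's Network tab into a path for Invoke-AzRestMethod -Path.
# ConvertTo-ArmRequestPath is a hypothetical helper name.
function ConvertTo-ArmRequestPath {
    param(
        [Parameter(Mandatory)][string]$CapturedUrl,
        # Old value -> new value, e.g. @{ 'testworkspace' = 'la-uksouth-prod-tenantb01' }
        [hashtable]$Replace = @{}
    )
    $uri = [System.Uri]$CapturedUrl
    # Keep only the path and query string, e.g. /providers/...?api-version=...
    $path = $uri.PathAndQuery
    foreach ($old in $Replace.Keys) {
        # String.Replace is literal and case-sensitive, so '.' or '-' in names need no escaping.
        $path = $path.Replace([string]$old, [string]$Replace[$old])
    }
    return $path
}
```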

When enabling the connector, note the required permissions needed and ensure your ADO service connection meets the requirements!

Create the URI using the URL from the POST request, replacing required values such as the workspace name with the ones for your target environment.

Example:

```powershell
$uri = "/providers/microsoft.aadiam/diagnosticSettings/AzureSentinel_$($WorkspaceName)?api-version=2017-04-01"
```

Build up the JSON POST body, starting with the static parts:

```powershell
$payload = '{
  "kind": "AzureActiveDirectoryDiagnostics",
  "properties": {
    "logs": [
      { "category": "SignInLogs", "enabled": true },
      { "category": "AuditLogs", "enabled": true },
      { "category": "NonInteractiveUserSignInLogs", "enabled": true },
      { "category": "ServicePrincipalSignInLogs", "enabled": true },
      { "category": "ManagedIdentitySignInLogs", "enabled": true },
      { "category": "ProvisioningLogs", "enabled": true }
    ]
  }
}' | ConvertFrom-Json
```

Add additional required properties that were not included in the static part:

```powershell
$connectorProperties = $payload.properties

# The diagnostic setting must send the logs to the Log Analytics workspace used by Sentinel
$connectorProperties | Add-Member -NotePropertyName workspaceId -NotePropertyValue "/subscriptions/$($subscriptionId)/resourceGroups/$($resourceGroupName)/providers/Microsoft.OperationalInsights/workspaces/$($WorkspaceName)"

# Request body: the diagnostic setting name plus its properties
$connectorBody = @{
    name       = "AzureSentinel_$($WorkspaceName)"
    properties = $connectorProperties
}
```
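As an alternative to a static JSON string plus `Add-Member`, the same request body can be generated from a list of log categories. A minimal sketch, assuming the body shape shown above; the function `New-AadDiagnosticsBody` and the subscription, resource group and workspace values are hypothetical placeholders.

```powershell
function New-AadDiagnosticsBody {
    # Hypothetical helper: builds the Azure AD diagnostic settings body shown above
    # from a list of log categories instead of a hard-coded JSON string.
    param(
        [Parameter(Mandatory)] [string] $SubscriptionId,
        [Parameter(Mandatory)] [string] $ResourceGroupName,
        [Parameter(Mandatory)] [string] $WorkspaceName,
        [string[]] $Categories = @(
            'SignInLogs', 'AuditLogs', 'NonInteractiveUserSignInLogs',
            'ServicePrincipalSignInLogs', 'ManagedIdentitySignInLogs', 'ProvisioningLogs'
        )
    )

    # One { category, enabled } entry per log category
    $logs = foreach ($category in $Categories) {
        [ordered]@{ category = $category; enabled = $true }
    }

    $body = [ordered]@{
        name       = "AzureSentinel_$WorkspaceName"
        properties = [ordered]@{
            logs        = @($logs)
            workspaceId = "/subscriptions/$SubscriptionId/resourceGroups/$ResourceGroupName/providers/Microsoft.OperationalInsights/workspaces/$WorkspaceName"
        }
    }

    # The default depth (2) would turn each log entry into a string; 4 leaves headroom
    return ConvertTo-Json -InputObject $body -Depth 4
}

$json = New-AadDiagnosticsBody -SubscriptionId '00000000-0000-0000-0000-000000000000' -ResourceGroupName 'rg-sentinel' -WorkspaceName 'customer-ws'
$json
```

Keeping the categories in a parameter makes it easy to enable a different set per tenant without editing JSON by hand.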

And finally, try firing the request at Azure with a bit of error handling to write a warning to the pipeline if something goes wrong.

```powershell
try {
    $result = Invoke-AzRestMethod -Path $uri -Method PUT -Payload ($connectorBody | ConvertTo-Json -Depth 3)

    if ($result.StatusCode -eq 200) {
        Write-Host "Successfully enabled data connector: $($payload.kind)"
    }
    else {
        # HTTP errors come back in the response, so report them as a pipeline warning
        Write-Host "##vso[task.logissue type=warning;]Unable to deploy $($payload.kind) with error: $($result.Content)"
    }
}
catch {
    # Exceptions (e.g. authentication or network failures) are reported the same way
    $errorReturn = $_
    Write-Verbose $_
    Write-Host "##vso[task.logissue type=warning;]Unable to deploy $($payload.kind) with error: $errorReturn"
}
```
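Error bodies such as `$result.Content` often span several lines, and a logging command must fit on one line. A minimal sketch of escaping the message first, assuming the escaping rules from the Azure DevOps logging-command documentation (`%` becomes `%AZP25`, CR becomes `%0D`, LF becomes `%0A`); the function `ConvertTo-PipelineWarning` is hypothetical.

```powershell
function ConvertTo-PipelineWarning {
    # Hypothetical helper: returns an Azure DevOps "task.logissue" warning line.
    # '%' is escaped first so the %0D/%0A sequences added afterwards are not re-escaped.
    param([Parameter(Mandatory)] [string] $Message)

    $escaped = $Message.Replace('%', '%AZP25').Replace("`r", '%0D').Replace("`n", '%0A')
    return "##vso[task.logissue type=warning;]$escaped"
}

# Example: a multi-line error body becomes a single logging command
Write-Host (ConvertTo-PipelineWarning -Message "Unable to deploy connector with error:`n{""error"":""Forbidden""}")
```

In the script above, the two `Write-Host "##vso[...]"` calls could pass their message through this helper instead.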

Phase 2: Deploy Sentinel analytics to Master tenant pointed at Sub tenant logs

If you followed all the above steps, onboarded a sub tenant and are a member of the Azure AD group, you should now be able to see the sub tenant's Log Analytics workspace in your Master tenant's Sentinel. At this stage you can also remove the service principal from the sub tenant.

If you cannot see it:

It sometimes takes 30+ minutes for Lighthouse-connected tenants to show up in the Master tenant.

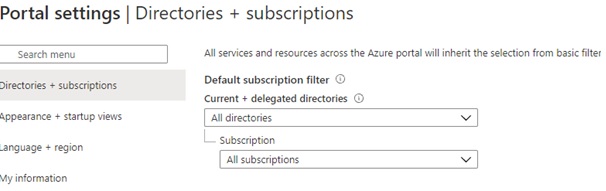

Ensure you have the sub tenant selected in “Directories + Subscriptions”.

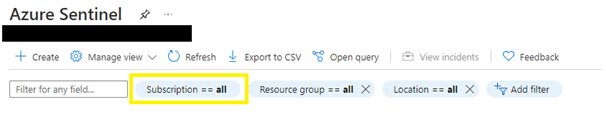

In Sentinel, make sure the subscription is selected in your filter.

Deploying a new analytic

First, load a template analytic.

If you exported the analytics from an existing Sentinel instance, you only need to make sure the workspace referenced inside the query is set to the correct one.

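```powershell
$alert = Get-Content -Path $file.FullName -Raw | ConvertFrom-Json

# Point the query at the target workspace by filling in the {{targetWorkspace}} placeholder
$analyticsTemplate = $alert.detection_query -replace '{{targetWorkspace}}', $config.TargetWorkspaceName
```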
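`-replace` treats its pattern as a regular expression and its replacement as a substitution pattern. A minimal sketch of a stricter alternative, assuming placeholders always look like `{{name}}`: it uses a literal `String.Replace` and fails the pipeline if any placeholder is left unfilled. The function `Resolve-TargetWorkspace` and the sample query are hypothetical.

```powershell
function Resolve-TargetWorkspace {
    # Hypothetical helper: fills in {{targetWorkspace}} with a literal (non-regex) replace
    # and throws if any other {{...}} placeholder is still present afterwards.
    param(
        [Parameter(Mandatory)] [string] $Query,
        [Parameter(Mandatory)] [string] $TargetWorkspaceName
    )

    $result = $Query.Replace('{{targetWorkspace}}', $TargetWorkspaceName)
    if ($result -match '\{\{\w+\}\}') {
        throw "Unresolved placeholder '$($Matches[0])' in query"
    }
    return $result
}

Resolve-TargetWorkspace -Query 'workspace("{{targetWorkspace}}").DeviceEvents | take 10' -TargetWorkspaceName 'customer-ws'
```

Failing early here is cheaper than deploying an analytic whose query cannot run.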

In my case, I load a custom analytics file and combine it with my template file, so a few more values need to be set.

```powershell
$alert = Get-Content -Path $file.FullName -Raw | ConvertFrom-Json

# Azure DevOps substitutes the $(naming.*) macros before the script runs
$workspaceName = '$(naming.LogAnalyticsWorkspace)'
$resourceGroupName = '$(naming.CustomerResourceGroup)'
$id = New-Guid

# Fill in the template placeholders, then parse the result
$templateText = Get-Content -Path $detectionPath -Raw
$templateText = $templateText -replace '{DisplayPrefix}', 'Sentinel-' -replace '{DisplayName}', $alert.display_name
$templateText = $templateText -replace '{DetectionName}', $alert.detection_name -replace '{{targetWorkspace}}', $config.TargetWorkspaceName
$analyticsTemplate = $templateText | ConvertFrom-Json

# Scheduling values from the custom analytic (Interval controls how often the rule runs)
$QueryPeriod = [timespan]$alert.Interval
$lookbackPeriod = [timespan]$alert.LookupPeriod
$SuppressionDuration = [timespan]$alert.LookupPeriod

$alert.detection_query = $alert.detection_query -replace '{{targetWorkspace}}', $config.TargetWorkspaceName
# Echo the final query to the pipeline log
$alert.detection_query

$tempDescription = $alert.description
$analyticsTemplate.Severity = $alert.severity
```
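The cmdlets take `[timespan]` values, while the REST API used in the final step expresses durations as ISO 8601 strings (the request body there hard-codes `"lookbackDuration":"PT5H"`). If you need to write these values into a REST body yourself, .NET's `XmlConvert` can do the conversion. A small sketch; the function name `ConvertTo-IsoDuration` is hypothetical.

```powershell
function ConvertTo-IsoDuration {
    # Hypothetical helper: converts a [timespan] (such as $QueryPeriod above) into an
    # ISO 8601 duration string using System.Xml.XmlConvert.
    param([Parameter(Mandatory)] [timespan] $Duration)
    return [System.Xml.XmlConvert]::ToString($Duration)
}

ConvertTo-IsoDuration -Duration ([timespan]'01:00:00')    # PT1H
ConvertTo-IsoDuration -Duration ([timespan]'1.00:00:00')  # P1D
```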

Create the analytic in Sentinel

When creating the analytics, you can either

- put them directly into the Analytics workspace that is now visible in the Master tenant through Lighthouse

- create a new workspace in the Master tenant and point the analytics at the sub tenant workspace by prefixing every table in your analytics with workspace("targetworkspace"), for example workspace("targetworkspace").DeviceEvents.

The latter solution keeps all the analytics in the Master tenant, so all queries can be stored there and do not go into the sub tenant (useful for protecting intellectual property, or simply for having all analytics in a single workspace pointing at all the others).
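With the second option, one analytic can also read the same table from several sub tenant workspaces through a KQL `union`. A minimal sketch of generating such a query; the function `New-CrossWorkspaceQuery`, the workspace names and the filter are hypothetical.

```powershell
function New-CrossWorkspaceQuery {
    # Hypothetical helper: builds a KQL query that reads one table from several
    # workspaces via workspace("name").Table, optionally followed by a filter.
    param(
        [Parameter(Mandatory)] [string] $Table,
        [Parameter(Mandatory)] [string[]] $WorkspaceNames,
        [string] $Filter = ''
    )

    $sources = foreach ($name in $WorkspaceNames) { "workspace(""$name"").$Table" }
    $query = 'union ' + ($sources -join ', ')
    if ($Filter) { $query += " | $Filter" }
    return $query
}

New-CrossWorkspaceQuery -Table 'SigninLogs' -WorkspaceNames 'customer-a-ws', 'customer-b-ws' -Filter 'where ResultType != "0"'
```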

When I created this automated solution, I ran into a few issues with the various ways of deploying analytics, which have hopefully been resolved since. Below is my workaround, which is still fully functional.

- The Az.SecurityInsights version of New-AzSentinelAlertRule did not allow me to set tactics on the analytic.

- The azsentinel version did have a working way to add tactics, but failed to create the analytic properly.

- Neither of them supported adding entities.

So, as a rather unpleasant workaround, I:

- Create the initial analytic using Az.SecurityInsights\New-AzSentinelAlertRule

- Add the tactics using azsentinel\New-AzSentinelAlertRule

- Add the entities and final settings using the REST API

```powershell
# 1. Create the initial rule with the Az.SecurityInsights module
Az.SecurityInsights\New-AzSentinelAlertRule -WorkspaceName $workspaceName -QueryPeriod $lookbackPeriod -Query $analyticsTemplate.detection_query -QueryFrequency $QueryPeriod -ResourceGroupName $resourceGroupName -AlertRuleId $id -Scheduled -Enabled -DisplayName $analyticsTemplate.DisplayName -Description $tempDescription -SuppressionDuration $SuppressionDuration -Severity $analyticsTemplate.Severity -TriggerOperator $analyticsTemplate.TriggerOperator -TriggerThreshold $analyticsTemplate.TriggerThreshold

# 2. Read the rule back and re-submit it with the AzSentinel module to add the tactics
$newAlert = azsentinel\Get-AzSentinelAlertRule -WorkspaceName $workspaceName | Where-Object { $_.name -eq $id }
$newAlert.Query = $alert.detection_query
$newAlert.enabled = $analyticsTemplate.Enabled

azsentinel\New-AzSentinelAlertRule -WorkspaceName $workspaceName -Kind $newAlert.kind -SuppressionEnabled $newAlert.suppressionEnabled -Query $newAlert.query -DisplayName $newAlert.displayName -Description $newAlert.description -Tactics $newAlert.tactics -QueryFrequency $newAlert.queryFrequency -Severity $newAlert.severity -Enabled $newAlert.enabled -QueryPeriod $newAlert.queryPeriod -TriggerOperator $newAlert.triggerOperator -TriggerThreshold $newAlert.triggerThreshold -SuppressionDuration $newAlert.suppressionDuration

# 3. Fetch the rule through the REST API and PUT it back with tactics, entities and grouping
$additionUri = $newAlert.id + '?api-version=2021-03-01-preview'
$additionUri

$result = Invoke-AzRestMethod -Path $additionUri -Method Get
$content = $result.Content | ConvertFrom-Json

# -InputObject with @() keeps single-item lists as JSON arrays (piping would unroll them)
$entities = ConvertTo-Json -InputObject @($alert.analyticsEntities.entityMappings) -Depth 4
$tactics = ConvertTo-Json -InputObject @($alert.tactic.Split(',').Trim())

# Alert grouping defaults to SingleAlert unless the analytic asks for AlertPerResult
$aggregation = if ($alert.Grouping -eq 'AlertPerResult') { 'AlertPerResult' } else { 'SingleAlert' }

# displayName, description and query go through ConvertTo-Json so quotes and newlines are escaped
$body = '{"id":"'+$content.id+'","name":"'+$content.name+'","type":"Microsoft.SecurityInsights/alertRules","kind":"Scheduled","properties":{"displayName":'+($content.properties.displayName | ConvertTo-Json)+',"description":'+($content.properties.description | ConvertTo-Json)+',"severity":"'+$content.properties.severity+'","enabled":true,"query":'+($content.properties.query | ConvertTo-Json)+',"queryFrequency":"'+$content.properties.queryFrequency+'","queryPeriod":"'+$content.properties.queryPeriod+'","triggerOperator":"'+$content.properties.triggerOperator+'","triggerThreshold":'+$content.properties.triggerThreshold+',"suppressionDuration":"'+$content.properties.suppressionDuration+'","suppressionEnabled":false,"tactics":'+$tactics+',"alertRuleTemplateName":null,"incidentConfiguration":{"createIncident":true,"groupingConfiguration":{"enabled":false,"reopenClosedIncident":false,"lookbackDuration":"PT5H","matchingMethod":"AllEntities","groupByEntities":[],"groupByAlertDetails":[],"groupByCustomDetails":[]}},"eventGroupingSettings":{"aggregationKind":"'+$aggregation+'"},"alertDetailsOverride":null,"customDetails":null,"entityMappings":'+$entities+'}}'

$res = Invoke-AzRestMethod -Path $additionUri -Method Put -Payload $body
```
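Building the PUT body by string concatenation works, but every field has to be escaped by hand. A minimal sketch of the same body built from ordered hashtables and serialised once with `ConvertTo-Json`, assuming the field set used above; the function `New-ScheduledRuleBody` is hypothetical and expects `$Rule` to be the parsed GET response (`$content`).

```powershell
function New-ScheduledRuleBody {
    # Hypothetical helper: rebuilds the alert rule PUT body from the rule returned by the
    # GET request, letting ConvertTo-Json handle all quoting and escaping.
    param(
        [Parameter(Mandatory)] $Rule,
        [Parameter(Mandatory)] [string] $Tactics,   # comma-separated, e.g. 'InitialAccess,Execution'
        [object[]] $EntityMappings = @(),
        [ValidateSet('SingleAlert', 'AlertPerResult')] [string] $Aggregation = 'SingleAlert'
    )

    $p = $Rule.properties
    $body = [ordered]@{
        id         = $Rule.id
        name       = $Rule.name
        type       = 'Microsoft.SecurityInsights/alertRules'
        kind       = 'Scheduled'
        properties = [ordered]@{
            displayName           = $p.displayName
            description           = $p.description
            severity              = $p.severity
            enabled               = $true
            query                 = $p.query
            queryFrequency        = $p.queryFrequency
            queryPeriod           = $p.queryPeriod
            triggerOperator       = $p.triggerOperator
            triggerThreshold      = $p.triggerThreshold
            suppressionDuration   = $p.suppressionDuration
            suppressionEnabled    = $false
            # Stays a JSON array even for a single tactic
            tactics               = @($Tactics.Split(',').Trim())
            alertRuleTemplateName = $null
            incidentConfiguration = [ordered]@{
                createIncident        = $true
                groupingConfiguration = [ordered]@{
                    enabled = $false; reopenClosedIncident = $false; lookbackDuration = 'PT5H'
                    matchingMethod = 'AllEntities'; groupByEntities = @(); groupByAlertDetails = @(); groupByCustomDetails = @()
                }
            }
            eventGroupingSettings = [ordered]@{ aggregationKind = $Aggregation }
            alertDetailsOverride  = $null
            customDetails         = $null
            entityMappings        = @($EntityMappings)
        }
    }

    # Entity mappings nest several levels deep, so raise the depth well above the default
    return ConvertTo-Json -InputObject $body -Depth 10
}

# Usage in place of the string-built $body:
# $body = New-ScheduledRuleBody -Rule $content -Tactics $alert.tactic -EntityMappings $alert.analyticsEntities.entityMappings -Aggregation $aggregation
```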

You should now have all the components needed to create your own multi-Tenant, enterprise-scale pipelines.

The value from this solution comes from:

- Being able to quickly push custom made analytics to a multitude of workspaces

- Being able to source control analytics and code review them before deploying

- Having the ability to manage all sub tenants from the same master tenant without having to create accounts in each tenant

- Consistent RBAC for all tenants

- Plenty of room to extend the solution.

As mentioned at the start, this blog post covers only a small part of NCC Group's overall deployment strategy, illustrating the deployment workflow we have taken for Azure Sentinel. Upon this foundation, we’ve built a substantial ecosystem of custom analytics to support advanced detection and response.