Bots, AI, deepfakes, and disinformation in 2024

2024 is widely hailed as the biggest election year in history, with “national elections in more than 60 countries worldwide—around 2 billion voters.”

Last year was the year of generative AI, following the launch of ChatGPT at the end of 2022 and the rise in popularity of AI image-generation tools such as DALL-E and Midjourney.

Amateur deepfakes have been a problem for celebrities for over five years. More recently, politicians have come into the sights of those seeking to sway public perception of them or their parties by generating and sharing fake images, audio, and video.

Social media platforms have been waging war against bots used to promote false narratives. These bots have often been reasonably easy to identify: their usernames look randomly generated, and separate accounts publish messages with the same or almost identical content. However, as the quality of disinformation improves thanks to advances in generative AI, its spread relies less on bots, and ordinary members of the public and even the press get fooled. People are especially at risk of believing disinformation if it aligns with and amplifies their existing worldview.

Moreover, the effect on those unsure of who they are going to vote for (swing voters) is of significant concern to democratic societies as these are the voters who typically decide the outcome of elections.

The timing of a deepfake release can also amplify its impact if it leaves less time to evaluate and report the content as fake, or if it is shared during a mandatory reporting moratorium, as was the case during the recent Slovakian election. More broadly, eroding trust in the information voters consume online might decrease engagement in the democratic process.

Disinformation

"Deliberately biased or misleading information, as opposed to misinformation - false information that is spread, regardless of its intent to mislead."

Deepfake

"Synthetic media which contains the likeness of a real person. Originally applied to deep learning techniques used to replace one person’s likeness with another. More recently, the term has been broadened to include generating entirely new media with generative AI."

Concerningly, the press might propagate disinformation, either in a rush to be seen as staying on top of breaking news or because a story aligns with the political views of its target audience. News organizations have a responsibility to society to ensure that the stories they report come from reputable, or at the very least real, sources.

To clarify, this article does not explore how to determine whether information shared by an individual is factually accurate, misrepresented, or an opinion presented as fact. Rather, it addresses deliberately false information that is generated using technology and presented as if it were real or the opinion of a real person, such as a deepfake of a real person's likeness or voice.

Potential solutions

Several controls are available to reduce the risk of spreading disinformation from deepfakes. If we break the problem down, as in the figure below, we can explore which technologies can help.

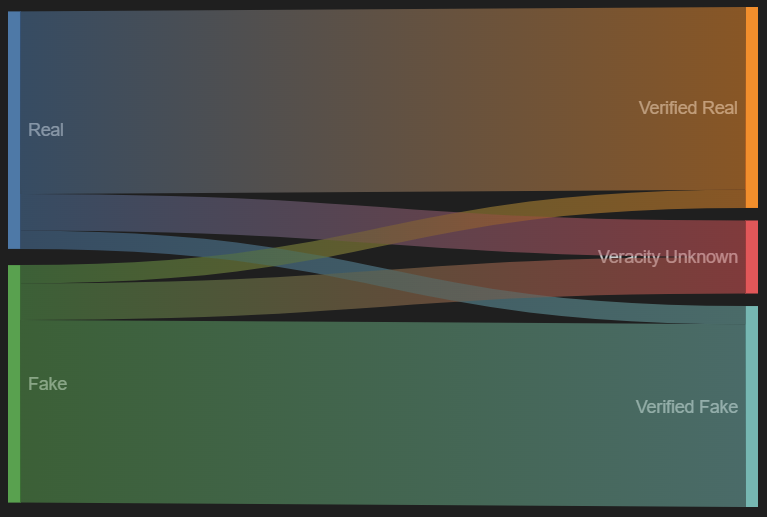

As a consumer of media, you want to be able to differentiate between real and fake content. Content therefore falls into one of these categories.

For content likely to influence how people vote, we want to maximize the amount of real content verified as real and fake content verified as fake. We want to minimize all other combinations and educate users to differentiate between verified and unverified content.

In reality, there is a series of steps between the content being captured or generated and the public consuming it. If we focus on each verified information flow, we can identify the controls applicable to each stage.

Verified real content:

Real-world content consists of pictures, videos, and audio recordings of authentic materials, and text content published by people under their true identity. In the case of audio and visual media, the content is captured on a digital device and encoded into a media file. The devices often record metadata about the device used for capture, which can be used as evidence of authenticity. Indeed, a lack of relevant metadata should be treated as an indicator that an image or recording may be less trustworthy.
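To make the metadata point concrete, here is a minimal Python sketch that checks whether a JPEG file carries an Exif block at all. It is an illustration under stated assumptions rather than a forensic tool: the function name `has_exif` is ours, it only looks for an APP1 segment that begins with the `Exif` identifier, and it does not parse or validate the fields inside. Metadata is also easy to strip or forge, so the result is one weak signal among several.

```python
import struct


def has_exif(jpeg_bytes: bytes) -> bool:
    """Return True if a JPEG byte string contains an Exif APP1 segment.

    Hypothetical helper for illustration: it walks the segment headers
    that precede the image data and stops at the start-of-scan marker.
    """
    if not jpeg_bytes.startswith(b"\xff\xd8"):  # SOI marker
        raise ValueError("not a JPEG file")

    pos = 2
    while pos + 4 <= len(jpeg_bytes):
        if jpeg_bytes[pos] != 0xFF:
            break  # not a marker, so stop rather than guess
        marker = jpeg_bytes[pos + 1]
        if marker == 0xDA:  # start of scan: compressed image data follows
            break
        # The 2-byte big-endian length counts itself but not the marker.
        (length,) = struct.unpack(">H", jpeg_bytes[pos + 2:pos + 4])
        payload = jpeg_bytes[pos + 4:pos + 2 + length]
        if marker == 0xE1 and payload.startswith(b"Exif\x00\x00"):
            return True
        pos += 2 + length
    return False
```

A newsroom script might run a check like this over incoming files and route those without any capture metadata for closer manual inspection.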

Some cameras can digitally sign the photos and videos they take. Digital signatures use cryptography to prove that the holder of a private signing key signed the file and that the file has not been altered since. Digital signatures are easy to remove but incredibly difficult to fake so long as the signing key is kept secret. A digitally signed photo is a strong indicator of trustworthiness so long as you can trust the security controls implemented by the camera manufacturer.
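The "easy to remove, hard to fake" property can be illustrated with a deliberately simplified Python sketch of textbook RSA signing. Everything in it is an assumption made for illustration: the two primes are well-known Mersenne primes chosen so that the example needs no third-party library, there is no padding scheme, and the key is far too small to be secure. A real camera or newsroom would rely on a vetted cryptographic library and a hardware-protected key. The sketch needs Python 3.8 or later for the modular inverse.

```python
import hashlib

# Toy key material (NOT secure): two known Mersenne primes.
P = 2**127 - 1
Q = 2**89 - 1
N = P * Q                          # public modulus
E = 65537                          # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent, known only to the signer


def _digest_as_int(data: bytes) -> int:
    """Hash the file and reduce it into the range the toy key can sign."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % N


def sign(data: bytes) -> int:
    """Only the holder of the private exponent D can compute this value."""
    return pow(_digest_as_int(data), D, N)


def verify(data: bytes, signature: int) -> bool:
    """Anyone with the public values (N, E) can check a signature."""
    return pow(signature, E, N) == _digest_as_int(data)


photo = b"raw bytes of a photo"
signature = sign(photo)
print(verify(photo, signature))               # untouched file with its signature
print(verify(photo + b" edited", signature))  # altered file with the old signature
```

The first check succeeds and the second fails, because the old signature no longer matches the altered bytes. Note what the sketch does not protect against: anyone can simply discard `signature` and share `photo` on its own, which is why a missing signature proves nothing either way.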

When considering whether to publish media, press organizations can use these techniques alongside other methods of investigative journalism to form an opinion on whether a piece of content is real or fake. They can then publish content via approved channels and add watermarks or logos to media they have inspected. One of the problems with logos and watermarks is that they are easily forged, allowing nefarious actors to abuse the trust placed in them and trick people into believing their content is verified.

Digital signatures also help here because they are so difficult to fake, but they have a usability problem. Most laypeople don't understand digital signatures or how to verify them. The best-known user-facing implementation of digital signatures to achieve mainstream success is the padlock in a browser, which indicates that a website has a valid certificate for its domain. The web Public Key Infrastructure (PKI) and the Certificate Authorities (CAs), the organizations that issue digital certificates, have greatly improved internet users' security.

But the ecosystem has experienced its fair share of technical and political challenges. Establishing a similar consortium for the much more politically charged environment of the press requires broad industry support, possibly regulation, and the equivalent of the web padlock for signed media files.

Thankfully, such an effort is underway. The Coalition for Content Provenance and Authenticity (C2PA), consisting of news media, technology, and human rights organizations, is developing technical standards, known as content credentials, which will be used to cryptographically prove the provenance of a piece of media. This includes who created it, who edited it, and even how it has been edited. The standards include an equivalent of the padlock, the content credentials icon, which is designed to be recognizable and verifiable by media consumers. The British Broadcasting Corporation (BBC) has recently started using content credentials to support its BBC Verify service.
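The idea of recording who created and edited a file can be sketched as a simple hash-linked history. To be clear, this is not the C2PA manifest format; the record fields and the function names `append_record` and `chain_is_consistent` are hypothetical, chosen only to show how each step can be bound to the one before it and to the resulting file.

```python
import hashlib
import json


def _digest(record: dict) -> str:
    """Stable SHA-256 fingerprint of a history record."""
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()


def append_record(chain: list, actor: str, action: str, content: bytes) -> list:
    """Return a new history with one more step describing `content`."""
    record = {
        "actor": actor,
        "action": action,
        "content_sha256": hashlib.sha256(content).hexdigest(),
        "previous": _digest(chain[-1]) if chain else None,
    }
    return chain + [record]


def chain_is_consistent(chain: list, final_content: bytes) -> bool:
    """Check every link and that the last step describes the file in hand."""
    for index, record in enumerate(chain):
        expected = _digest(chain[index - 1]) if index else None
        if record["previous"] != expected:
            return False
    final_hash = hashlib.sha256(final_content).hexdigest()
    return bool(chain) and chain[-1]["content_sha256"] == final_hash


history = append_record([], "photographer", "captured", b"raw image bytes")
history = append_record(history, "picture desk", "cropped", b"cropped image bytes")
print(chain_is_consistent(history, b"cropped image bytes"))
```

On its own, a chain like this only detects accidental or clumsy tampering, since anyone could rebuild it from scratch. The cryptographic guarantee comes from digitally signing the history, which is the part a standard such as content credentials has to specify carefully.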

Sharing platforms are responsible for promoting genuine content and avoiding amplifying fake media. Under the EU's Digital Services Act, tech platforms will be required to label manipulated content. Exactly what this looks like in practice will be set out in further guidance from the European Commission, expected later this month.

X (formerly Twitter), TikTok, and Meta have introduced verified accounts to assure users that the accounts they are interacting with are genuine. These verifications provide confidence that content shared by these accounts, whether text, images, voice, or video recordings, is from the account owner. Unfortunately, however, the algorithms that drive user engagement are also likely to promote fake content if it provokes a strong response from users.

Outside of technical controls, users can perform their own verification. Common advice includes:

- Be wary of believing content online, especially on social media and forums.

- Check if it has been created by an account you know and trust; even better if it is verified.

- Don't blindly trust it just because it has been reposted or liked by an account you do trust, as they may not have verified the content or their account may have been compromised.

Verified fake content:

Deepfake and generative AI models begin life as a set of training data. They cannot generate a realistic likeness of someone's appearance or voice without sufficient examples to train on. Unfortunately, many people in the public eye cannot control who has access to the many examples of their face and voice online.

For the past five years or so, it has been challenging, but possible, for hobbyists to create deepfakes of varying quality using freely available tools and a set of training data. It is certainly achievable for a politically motivated activist to create realistic voice generations, as seen recently in the cases of UK Labour Party Leader Keir Starmer, Mayor of London Sadiq Khan, and US President Joe Biden. However, with deepfakes there are usually clues that the media is fake; unusual audio and visual artifacts are often detectable.

More recently, many generative AI companies have trained their models on images from the internet of celebrities and politicians, significantly lowering the barrier to entry for creating a reasonably realistic synthetic image for anyone with a credit card. The ship has sailed for now on whether it was ethical for these companies to train their models on such data in the first place.

At the time of generation, AI model operators can implement controls to prevent the generation of potentially politically sensitive content. These "guardrails" have proved inconsistent in their effectiveness so far, with research being published about various techniques to "persuade" a model to generate outputs breaching the intent of the guardrails.

Another control being actively researched is embedding watermarks into generated content so that AI usage can be easily determined. Content credentials and digital signing also have applications here; for example, images generated by DALL-E in the Azure OpenAI service automatically carry content credentials that specify the image is AI-generated, with details of its provenance. However, a determined and moderately skilled attacker could separate the media from its digitally signed manifest and share it without the content credentials and associated metadata about its origin.

The press has a vital role as a trusted institution in correcting the record when a convincing deepfake has been widely shared. They can use the same techniques used to verify real images to detect fake ones: examining what the metadata says, how trustworthy the sources are, and whether the media has any unusual artifacts.

Equally, social media companies and other platform providers can limit the spread of disinformation. User reporting of disinformation to content moderation teams, as well as automated duplicate content detection, can prevent deepfakes from spreading further. Social media companies may be uniquely qualified to implement automated detection of synthetic media through technologies such as machine learning, given their size and the technical capabilities of their staff and systems. Models to classify media as fake or authentic have been shown to be effective in research; the question now is whether platforms can translate these promising results into ongoing effective automated detection and labelling systems.
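As a rough illustration of automated duplicate detection, the Python sketch below uses an "average hash", a simple perceptual hashing technique, to compare two images. It is a sketch under assumptions, not a description of what any platform actually runs: the images are assumed to have already been downscaled to small grayscale grids, and the function names and the `max_distance` threshold of 5 bits are ours.

```python
def average_hash(pixels: list) -> int:
    """Fingerprint a small grayscale grid: one bit per pixel, set to 1
    where the pixel is brighter than the image's mean brightness."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for value in flat:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which two fingerprints differ."""
    return bin(a ^ b).count("1")


def looks_like_duplicate(pixels_a: list, pixels_b: list, max_distance: int = 5) -> bool:
    """Treat two images as the same content if their fingerprints are close."""
    distance = hamming_distance(average_hash(pixels_a), average_hash(pixels_b))
    return distance <= max_distance


# An 8x8 horizontal gradient, a slightly brightened copy, and a vertical gradient.
original = [[x * 32 for x in range(8)] for _ in range(8)]
brightened = [[min(255, value + 10) for value in row] for row in original]
different = [[y * 32 for _ in range(8)] for y in range(8)]

print(looks_like_duplicate(original, brightened))
print(looks_like_duplicate(original, different))
```

Unlike a cryptographic hash, this fingerprint is meant to survive small changes such as re-compression or a brightness tweak, so a known deepfake that is re-uploaded with minor edits can still be matched against the copy that moderators have already reviewed.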

Users can also play their part in limiting the spread and impact of disinformation. We recommend performing fundamental checks, such as:

- Being wary of:

  - New accounts.

  - Accounts that have recently changed their username.

  - Unverified accounts with misspelled usernames similar to other usernames.

  - Accounts with parody in the biography.

  - Accounts with fewer than expected followers.

- If an image, video, or recording seems a bit odd, has unexpected artifacts, or is of low quality, then it may be generated or modified.

- If you are reasonably sure that the content is fake, report it to the platform content moderation teams.
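The account checks above are judgment calls rather than rules, but they can be written down as a simple checklist. The Python sketch below is one hypothetical way to do so: the `Account` fields, the 90-day and 0.8 similarity thresholds, and the example usernames are all assumptions made for illustration, and no platform's real API is involved.

```python
from dataclasses import dataclass
from datetime import date
from difflib import SequenceMatcher


@dataclass
class Account:
    username: str
    created: date
    verified: bool
    biography: str
    followers: int
    username_changed_recently: bool = False


def red_flags(account: Account, today: date, known_username: str,
              expected_followers: int, new_account_days: int = 90) -> list:
    """List the warning signs an account shows when it appears to speak
    for the person behind `known_username`."""
    flags = []
    if (today - account.created).days < new_account_days:
        flags.append("new account")
    if account.username_changed_recently:
        flags.append("recently changed username")
    name, known = account.username.lower(), known_username.lower()
    similarity = SequenceMatcher(None, name, known).ratio()
    if not account.verified and name != known and similarity >= 0.8:
        flags.append("unverified lookalike username")
    if "parody" in account.biography.lower():
        flags.append("parody in biography")
    if account.followers < expected_followers:
        flags.append("fewer followers than expected")
    return flags


suspect = Account("jane_d0e_mp", date(2024, 1, 10), False, "Parody account", 120)
print(red_flags(suspect, today=date(2024, 2, 1),
                known_username="jane_doe_mp", expected_followers=100_000))
```

None of these flags proves that an account is fake, and a careful impersonator can avoid all of them. The more flags an account raises, though, the more reason there is to look for the same content from a source you already trust before sharing it.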

There is also a question about what role governments should play beyond using their regulatory and other levers to encourage platforms and media outlets to implement technical solutions. Public awareness campaigns should (at the very least) continue, with frequent reminders that in these times, people shouldn't assume what they're seeing or reading is necessarily genuine. Meanwhile, authorities should also place responsibility on political candidates to ensure they're taking steps to avoid amplifying disinformation.

Conclusion

Deepfakes and generative AI used for disinformation undoubtedly pose a risk to fair democratic processes globally. Mitigating that risk will take a concerted effort from stakeholders, including AI developers and operators, the press, governments, political candidates, social media platforms, and voters themselves.

It remains to be seen whether a deepfake incident will influence the voting public decisively enough to alter the headline result of an election. However, with many dozens of local constituency results in the UK decided by fewer than a few thousand votes, it is certainly not beyond the realm of possibility that one could influence the results in a few marginal seats.