Introduction

The use of pairings in cryptography began in 1993, when an algorithm developed by Menezes, Okamoto and Vanstone, now known as the MOV attack, described a sub-exponential algorithm for solving the discrete logarithm problem for supersingular elliptic curves.1 It wasn’t until the following decade that efficient pairing-based algorithms were used constructively to build cryptographic protocols for identity-based encryption, short signature schemes and three-party key exchange.

These protocols (and many more) are now being adopted and implemented “in the wild”:

- Ethereum uses BLS Signatures to cryptographically verify transactions in ETH2

- ZCash uses pairings in zk-SNARKs to verify encrypted transactions stored on the blockchain

- The TPM 2.0 specification includes the Elliptic Curve Direct Anonymous Attestation (ECDAA) protocol

- KZG commitment schemes have been suggested as a better accumulator of block data than Merkle trees for ETH2

Pairing-based protocols present an interesting challenge. Unlike many cryptographic protocols, which perform operations in a single cryptographic group, pairing-based protocols take elements from two groups and “pair them together” to create an element of a third group. This means that when considering the security of a pairing-based protocol, cryptographers must ensure that any security assumptions (e.g. the hardness of the discrete logarithm problem) hold in all three groups.

The growing adoption of pairing-based protocols has inspired a surge of research interest in pairing-friendly curves: a special class of elliptic curves suitable for pairing-based cryptography. In fact, currently all practical implementations of pairing-based protocols use these curves. As a result, understanding the security of pairing-friendly curves (and their associated groups) is at the core of the security of pairing-based protocols.

In this blogpost, we give a friendly introduction to the foundations of pairing-based protocols. We will show how elliptic curves offer a perfect space for implementing these algorithms and describe at a high level the cryptographic groups that appear during the process.

After a brief review of how we estimate the bit security of protocols and the computational complexity of algorithms, we will focus on reviewing the bit-security of pairing-friendly curves using BLS signatures as an example. In particular, we collect recent results on state-of-the-art algorithms designed to solve the discrete logarithm problem in finite fields and explain how these reduce the originally reported bit-security of some of the curves in use today.

We wrap up the blogpost by discussing the real-world ramifications of these new attacks and the current understanding of what lies ahead. For those who wish to hold true to (at least) 128 bit-security, we offer references to new pairing-friendly curves which have been carefully generated with respect to the most recent research.

This blogpost was inspired by some previous work that NCC Group carried out for ZCash, which in part discussed the bit-security of the BLS12-381 curve. This work was published as a public report.

Maths Note: throughout this blog post, we will assume that the reader is fairly comfortable with the notion of a mathematical group, finite fields and elliptic curves. For those who want a refresher, we offer a few lines in an Appendix: mathematical notation.

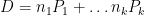

Throughout this blog post, we will use $p$ to denote a prime and $q = p^k$ to denote a prime power. It follows that $\mathbb{F}_p$ is a prime field, and $\mathbb{F}_q$ is a prime-power field. We allow $k = 1$ so we can use $\mathbb{F}_q$ when we wish to be generic. When talking about pairing elements, we will use the notation $\mathbb{F}_{p^k}$ such that the embedding degree $k$ is explicit.

Acknowledgements

Many thanks to Paul Bottinelli, Elena Bakos Lang and Phillip Langlois from NCC Group, and Robin Jadoul in personal correspondence for carefully reading drafts of this blog post and offering their expertise during the editing process.

Bilinear Pairings and Elliptic Curves

Pairing groups together

At a high level, a pairing is a special function which takes elements from two groups and combines them together to return an element of a third group. In this section, we will explore this concept, its applications to cryptography and the realisation of an efficient pairing using points on elliptic curves.

Let $G_1$, $G_2$ and $G_T$ be cyclic groups of order $r$. For this blog post, we will assume an additive structure for $G_1$ and $G_2$ with elements $P, Q$ and a multiplicative structure for the target group $G_T$. A pairing is a map $e: G_1 \times G_2 \to G_T$ which is:

- Bilinear: $e([a]P, [b]Q) = e(P,Q)^{ab}$.

- Non-degenerate: If $P \in G_1$ is non-zero, then there is a $Q \in G_2$ such that $e(P, Q) \neq 1$ (and vice-versa).

In the special case when the input groups are equal, $G_1 = G_2$, we say that the pairing is symmetric, and asymmetric otherwise.

For a practical perspective, let’s look at an example where we can use pairings cryptographically. Given a group with an efficient pairing $e: G \times G \to G_T$, we can use bilinearity to easily solve the decision Diffie-Hellman problem.2 Using a symmetric pairing, we can write

![e([a]P, [b]P) = e(P,P)^{ab}, \qquad e(P, [c]P)= e(P,P)^c.](/media/mrgblewi/_e-a-p-b-p-e-p-p-ab-qquad-e-p-c-p-e-p-p-c.png)

Therefore, given the DDH triple $([a]P, [b]P, [c]P)$, we can solve DDH by checking whether

$$e([a]P, [b]P) \stackrel{?}{=} e(P, [c]P),$$

which will be true if and only if $c \equiv ab \pmod r$.
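To make the DDH check concrete, here is a minimal Python sketch over a toy bilinear group. The group is an assumption made purely for illustration, not a pairing anyone would deploy: $G_1 = G_2 = (\mathbb{Z}_r, +)$ with generator $P = 1$, $G_T$ the order-$r$ subgroup of $(\mathbb{Z}/p\mathbb{Z})^*$, and $e(A, B) = g_T^{AB \bmod r}$. Discrete logarithms in $(\mathbb{Z}_r, +)$ are trivial, so this map has no security; it only exercises the bilinearity algebra. The function names and the parameters $p = 1019$, $r = 509$, $g_T = 4$ are hypothetical.

```python
# Toy bilinear map for illustration only: it has NO cryptographic security.
# G1 = G2 = (Z_R, +) with generator P = 1, so the "point" [a]P is just a mod R,
# and G_T is the subgroup of order R in (Z/P_MOD Z)^*, generated by G_T_GEN.
P_MOD = 1019    # prime modulus, chosen so that R divides P_MOD - 1 = 2 * 509
R = 509         # prime order shared by G1, G2 and G_T
G_T_GEN = 4     # 4 = 2^2 has order 509 in (Z/1019Z)^*


def scalar_mul(a: int, point: int) -> int:
    """[a]point in the additive toy group Z_R."""
    return a * point % R


def pair(A: int, B: int) -> int:
    """Toy pairing e(A, B) = G_T_GEN^(A*B mod R); bilinear by construction."""
    return pow(G_T_GEN, A * B % R, P_MOD)


def solve_ddh(aP: int, bP: int, cP: int, P: int = 1) -> bool:
    """Return True iff e([a]P, [b]P) == e(P, [c]P), i.e. iff c = ab mod R."""
    return pair(aP, bP) == pair(P, cP)


P = 1
a, b = 123, 456
# Bilinearity: e([a]P, [b]P) == e(P, P)^(ab)
assert pair(scalar_mul(a, P), scalar_mul(b, P)) == pow(pair(P, P), a * b, P_MOD)
print(solve_ddh(scalar_mul(a, P), scalar_mul(b, P), scalar_mul(a * b, P)))      # True
print(solve_ddh(scalar_mul(a, P), scalar_mul(b, P), scalar_mul(a * b + 1, P)))  # False
```

Because $g_T$ has order exactly $r$, two exponents give the same pairing value only when they agree modulo $r$, which is what makes the equality test decide DDH.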

Pairing Points

🤓 The next few sections have more maths than most of the post. If you’re more keen to learn about security estimates and are happy to just trust that we have efficient pairings which take points on elliptic curves and return elements of $\mathbb{F}^*_{p^k}$, you can skip ahead to What Makes Curves Friendly? 🤓

Weil Beginnings

As the notation above may have hinted, one practical example of a bilinear pairing is when the groups $G_1$ and $G_2$ are generated by points on an elliptic curve. In particular, we will consider elliptic curves defined over a finite field: $E(\mathbb{F}_q)$. We have a handful of practical bilinear pairings that we could use, but all of them take points on curves as an input and return an element of the group $\mathbb{F}^*_{p^k}$.

The first bilinear pairing for elliptic curve groups was proposed in 1940 by André Weil, and it is now known as the Weil pairing. Given an elliptic curve $E(\mathbb{F}_p)$ with a cyclic subgroup $G$ of order $r$, the Weil pairing takes points in the $r$-torsion $E(\mathbb{F}_{p^k})[r]$ and returns an $r$th root of unity in $\mathbb{F}^*_{p^k}$ for some integer $k$.3

The $r$-torsion of the curve is the group $E(\mathbb{F}_q)[r]$, which consists of all the points on the curve whose order divides $r$: $E(\mathbb{F}_q)[r] = \{P \in E(\mathbb{F}_q) \mid [r]P = \mathcal{O}\}$. The integer $k$ appearing in the target group is called the (Weil) embedding degree and is the smallest integer $k$ such that $E(\mathbb{F}_{p^k})[r]$ has $r^2$ elements.4

To compute the value of the Weil pairing, you first need to be able to compute rational functions $f_P$ and $f_Q$ with a prescribed divisor.5 The Weil pairing is the quotient of these two functions:

![e: E(\mathbb{F}_{p^k})[r] \times E(\mathbb{F}_{p^k})[r] \to \mathbb{F}^*_{p^k}, \qquad e(P,Q) = \frac{f_P(\mathcal{D}_Q)}{f_Q(\mathcal{D}_P)},](/media/eikhwjxs/_e-e-mathbb-f-_-p-k-r-times-e-mathbb-f-_-p-k-r-to-mathbb-f-_-p-k-qquad-e-p-q-frac-f_p-mathcal-d-_q-f_q-mathcal-d-_p.png)

where, for a point $S \in E(\mathbb{F}_{p^k})[r]$ on the curve, the rational function $f_S$ has divisor $r(S) - r(\mathcal{O})$ and $\mathcal{D}_S$ is a divisor equivalent to $(S) - (\mathcal{O})$.

When Weil first proposed his pairing, algorithms to compute these special functions ran in exponential time, so computing the pairing for a cryptographically sized curve was infeasible.

It wasn’t until 1986, when Miller introduced his algorithm to compute functions on algebraic curves with given divisors in time linear in the bit length of $r$, that we could think of pairings in a cryptographic context. Miller’s speed-up can be thought of in analogy to how the “square and multiply” algorithm speeds up exponentiation and takes $O(\log r)$ steps. Similar to “square and multiply”, the lower the Hamming weight of the order $r$, the faster Miller’s algorithm runs. For a fantastic overview of working with Miller’s algorithm in practice, we recommend Ben Lynn’s thesis.
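The “square and multiply” analogy can be seen in a short Python sketch of left-to-right binary exponentiation. This is plain exponentiation, not Miller’s algorithm itself: every bit costs one squaring and every set bit costs one extra multiplication, so two exponents of the same length can differ a lot in cost depending on their Hamming weight. Miller’s loop walks the bits in the same way, with a doubling step per bit and an addition step per set bit. The function name is hypothetical.

```python
def square_and_multiply(base: int, exponent: int, modulus: int) -> tuple[int, int, int]:
    """Left-to-right binary exponentiation.

    Returns (base**exponent % modulus, number of squarings, number of multiplications).
    """
    result = 1
    squarings = multiplications = 0
    for bit in bin(exponent)[2:]:           # scan bits from most significant to least
        result = result * result % modulus  # one squaring per bit
        squarings += 1
        if bit == "1":                      # one extra multiplication per set bit
            result = result * base % modulus
            multiplications += 1
    return result, squarings, multiplications


modulus = 2**127 - 1
for e in (2**64 + 1, 2**65 - 1):            # same bit length, very different Hamming weight
    value, sq, mul = square_and_multiply(3, e, modulus)
    assert value == pow(3, e, modulus)
    print(f"bits={e.bit_length()} weight={bin(e).count('1')} squarings={sq} multiplications={mul}")
```

Both exponents have 65 bits, but the first needs only 2 multiplications while the second needs 65.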

Tate and Optimised Ate Pairings

The Weil pairing is a natural way to introduce elliptic curve pairings (both historically and conceptually), but as Miller’s algorithm must be run twice to compute both $f_P$ and $f_Q$, using the Weil pairing can hurt the performance of a pairing-based protocol.

In contrast, Tate’s pairing $t$ requires only a single call to Miller’s algorithm and can be computed from

$$t(P, Q) = f_P(\mathcal{D}_Q)^{(p^k - 1)/r},$$

leading to more efficient pairing computations. We can relate the Weil and Tate pairings by the relation:

$$e(P, Q)^{(p^k - 1)/r} = \frac{t(P, Q)}{t(Q, P)}, \qquad P, Q \in E(\mathbb{F}_{p^k})[r].$$

The structure of the input and output for the Tate pairing is a little more complicated than the Weil pairing. Roughly, the first input point $P$ should still be in the $r$-torsion, but now the second point $Q$ need not have order $r$. The output of the Tate pairing is an element of the quotient group $\mathbb{F}^*_{p^k} / (\mathbb{F}^*_{p^k})^r$.

For the Weil pairing, the embedding degree was picked to ensure we had all $r^2$ of our torsion points in $E(\mathbb{F}_{p^k})[r]$. However, for the Tate pairing, we instead only require that $\mathbb{F}^*_{p^k}$ contains the $r$th roots of unity, which is satisfied when the order of the curve point $r$ divides $p^k - 1$.6

Due to the Balasubramanian-Koblitz Theorem, for all cases other than $k = 1$, the Weil and Tate embedding degrees are the same. Because of this, for the rest of this blog post we will just refer to $k$ as the embedding degree and think of it in Tate’s context, as checking that one number divides another is simpler than extending a field to ensure that the $r$-torsion has been totally filled!
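In Tate’s context the embedding degree is simply the multiplicative order of $p$ modulo $r$. The Python sketch below (a hypothetical helper, assuming $r$ is prime and does not divide $p$) finds the smallest $k$ with $r \mid p^k - 1$ by repeated multiplication, giving up after a caller-chosen bound.

```python
from typing import Optional


def embedding_degree(p: int, r: int, max_k: int = 64) -> Optional[int]:
    """Smallest k >= 1 with r | p^k - 1, i.e. the multiplicative order of p mod r.

    Assumes r is prime and does not divide p. Returns None if no k <= max_k works,
    which is the expected outcome for a randomly chosen curve.
    """
    power = 1
    for k in range(1, max_k + 1):
        power = power * p % r          # power == p^k mod r
        if power == 1:
            return k
    return None


print(embedding_degree(7, 3))    # 7 % 3 == 1, so k = 1
print(embedding_degree(11, 3))   # 11 % 3 == 2 == -1 mod 3, so k = 2
print(embedding_degree(13, 5))   # 13 % 5 == 3, which has order 4 mod 5, so k = 4
```

For cryptographically sized $r$ this loop is only useful when $k$ is known to be small; in general $k$ divides $r - 1$ and is usually about as large as $r$.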

The exponentiation present in the Tate pairing can be costly, but it can be further optimised, and for most implementations of pairing protocols, a third pairing, called the Ate pairing, is picked for best performance. As with the Weil pairing, the Ate pairing can be written in terms of the Tate pairing. The optimised Ate pairing has the shortest Miller loop and is the pairing of choice for modern implementations of pairing protocols.

What Makes Curves Friendly?

When picking parameters for cryptographic protocols, we’re concerned with two problems:

- The protocol must be computationally efficient.

- The best known attacks against the protocol must be computationally expensive.

Specifically for elliptic curve pairings, which map points on curves to elements of $\mathbb{F}^*_{p^k}$, we need to consider the security of the discrete logarithm problem for all groups, while also ensuring that computation of the chosen pairing is efficient.

For the elliptic curve groups, it is sufficient to ensure that the points used generate a prime order subgroup which is cryptographically sized. More subtle is how to pick curves with the “right” embedding degree.

- If the embedding degree is too large, computing the pairing is computationally very expensive.

- If the embedding degree is too small, sub-exponential algorithms can solve the discrete log problem in $\mathbb{F}^*_{p^k}$ efficiently.

Given a prime $p$ and picking a random elliptic curve $E(\mathbb{F}_p)$, the expected embedding degree is distributed (fairly) evenly between $1$ and $r - 1$. As we are working with cryptographically sized primes $p$, the embedding degree is then also expected to be cryptographically sized, and the probability of finding a small $k$ becomes astronomically small.7 Aside from the complexity of computing pairings when the degree is so large, we would also very quickly run out of memory even trying to represent an element of $\mathbb{F}_{p^k}$.

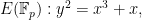

One trick to ensure we find curves with small embedding degree is to consider supersingular curves. For example, picking a suitable prime $p$ and defining the curve

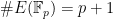

we always find that the number of points on the curve:

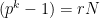

Picking $k = 2$, we can factor $p^2 - 1 = (p + 1)(p - 1)$. As the order of the curve $\#E(\mathbb{F}_p) = p + 1$ divides $p^2 - 1$, so does the order $r$ of any subgroup, and since an odd prime $r$ dividing $p + 1$ cannot also divide $p - 1$, we see that the embedding degree is exactly $k = 2$.
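We can sanity-check this numerically. The sketch below assumes one classic supersingular family, $y^2 = x^3 + x$ over $\mathbb{F}_p$ with $p \equiv 3 \pmod 4$, which may not be the exact curve pictured above. It counts points by brute force using Euler’s criterion, checks $\#E(\mathbb{F}_p) = p + 1$, and confirms that the largest prime $r$ dividing the group order gives $k = 2$. The helper names are hypothetical, and brute-force counting is only feasible for tiny primes.

```python
def count_points(p: int) -> int:
    """Count the points of E: y^2 = x^3 + x over F_p, including the point at infinity.

    Uses Euler's criterion: for odd p, a nonzero a is a square mod p iff a^((p-1)/2) = 1.
    """
    total = 1                                  # the point at infinity O
    for x in range(p):
        rhs = (x**3 + x) % p
        if rhs == 0:
            total += 1                         # exactly one point (x, 0)
        elif pow(rhs, (p - 1) // 2, p) == 1:
            total += 2                         # two points (x, y) and (x, -y)
    return total


def largest_prime_factor(n: int) -> int:
    """Trial division; fine for the tiny numbers used here."""
    largest, d = 1, 2
    while d * d <= n:
        while n % d == 0:
            largest, n = d, n // d
        d += 1
    return n if n > 1 else largest


for p in (11, 19, 23, 43, 59, 67, 83):         # primes with p % 4 == 3
    n = count_points(p)
    r = largest_prime_factor(n)                # a prime subgroup order r dividing #E
    k = next(d for d in range(1, 7) if pow(p, d, r) == 1)
    print(f"p={p}: #E={n}, p + 1 = {p + 1}, r={r}, k={k}")
```

Primes such as 7 and 31 are skipped because there $p + 1$ is a power of two and has no odd prime factor to play the role of $r$.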

The problem with this curve is that ensuring the discrete log problem in $\mathbb{F}^*_{p^2}$ is cryptographically hard would require a $p$ so large that working with the curves themselves would be too cumbersome. Similar arguments with primes of different forms allow the construction of other supersingular curves, but whatever method is used, all supersingular curves have $k \leq 6$, which is usually considered too small for pairing-based protocols.

If we’re not finding these curves randomly and we can’t use supersingular curves, then we have to sit down and find friendly curves carefully using mathematics. In particular, we use complex multiplication to construct our special elliptic curves. This has been a point of research for the past twenty years, and cryptographers have been carefully constructing families of curves which:

- Have large, prime-order subgroups.

- Have small (but not too small!) embedding degree.

Additionally, some “nice-to-haves” are:

- A carefully picked field characteristic $p$ to allow for fast modular arithmetic.

- A curve group order $r$ with low Hamming weight to speed up Miller’s loop.

Curves with these properties are perfect for pairing-based protocols and so we call them pairing-friendly curves!

An invaluable repository of pairing-friendly curves and links to corresponding references is Aurore Guillevic’s Pairing Friendly Curves. Guillevic is a leading researcher in estimating the security of pairing-friendly curves and has worked to tabulate many popular curves and their best use cases.

A familiar face: BLS12-381

In 2002, Barreto, Lynn and Scott published a paper, Constructing Elliptic Curves with Prescribed Embedding Degrees, and curves generated with this method are in the family of “BLS curves”.

The specific curve BLS12-381 was designed by Sean Bowe for ZCash after research by Menezes, Sarkar and Singh showed that the Barreto–Naehrig curve, which ZCash had previously been using, had lower security than anticipated.

This is a popular curve used in many protocols that we see while auditing code here at NCC, so it’s a perfect choice as an example of a pairing-friendly curve.

Let’s begin by writing down the curve equations and mention some nice properties of the parameters. The two groups $G_1$ and $G_2$ have a 255-bit prime order $r$ and are generated by points from the respective curves:

$$E_1(\mathbb{F}_p) : y^2 = x^3 + 4, \qquad E_2(\mathbb{F}_{p^2}) : y^2 = x^3 + 4(1 + u), \qquad \mathbb{F}_{p^2} = \mathbb{F}_p[u] / (u^2 + 1).$$

The embedding degree is $k = 12$, and the characteristic of the field $p$ has 381 bits (hopefully now the name BLS12-381 makes sense!).

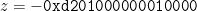

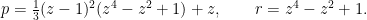

The parameters $p$ and $r$ have some extra features baked into them too. There is in fact a single parameter $x = -\mathtt{0xd201000000010000}$ which generates the value of both the characteristic $p$ and the subgroup order $r$:

$$p = \frac{(x - 1)^2}{3}\left(x^4 - x^2 + 1\right) + x, \qquad r = x^4 - x^2 + 1.$$

The low Hamming weight of $x$ reduces the cost of the Miller loop when computing pairings and, to aid with zk-SNARK schemes, $r - 1$ is chosen such that it is divisible by a large power of two (precisely, $2^{32} \mid r - 1$).
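These relations are easy to check with Python’s arbitrary-precision integers. The sketch below takes $x = -\mathtt{0xd201000000010000}$, derives $r = x^4 - x^2 + 1$ and $p = (x - 1)^2 r / 3 + x$, and then verifies the bit sizes, the embedding degree, the Hamming weight of $x$ and the power of two dividing $r - 1$. It does not test primality of $p$ or $r$.

```python
x = -0xD201000000010000               # BLS12-381 seed parameter (note the sign)

r = x**4 - x**2 + 1                   # subgroup order
assert (x - 1) ** 2 % 3 == 0          # so the division below is exact
p = (x - 1) ** 2 * r // 3 + x         # field characteristic

print(p.bit_length(), r.bit_length())                    # 381 255
k = next(d for d in range(1, 13) if pow(p, d, r) == 1)   # smallest k with r | p^k - 1
print(k)                                                 # 12
print(bin(-x).count("1"))                                # Hamming weight of |x|: 6
two_adicity = ((r - 1) & -(r - 1)).bit_length() - 1      # exponent of the largest 2^s | r - 1
print(two_adicity)                                       # 32
```

The embedding degree comes out as 12 because $p \equiv x \pmod r$ and $r = x^4 - x^2 + 1$ divides $x^{12} - 1$.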

Ben Edgington has a fantastic blog post that carefully discusses BLS12-381, and is a perfect resource for anyone wanting to know more about this particular curve. For a programming-first look at this curve, NCC’s Eric Schorn has written a set of blog posts implementing pairing on BLS12-381 using Haskell and goes on to look at optimisations of pairing with BLS12-381 by implementing Montgomery arithmetic in Rust and then further improves performance using Assembly.

Something Practical: BLS Signatures

Let’s finish this section with an example, looking at how a bilinear pairing gives an incredibly simple short signature scheme proposed by Boneh, Lynn and Shacham.9 A “short” signature scheme is a signing protocol which returns small signatures (in bits) relative to their cryptographic security.

As above, we work with groups $G_1, G_2, G_T$ of prime order $r$. For all practical implementations of BLS signatures, $G_1$ and $G_2$ are prime order groups generated by points on elliptic curves and $G_T \subset \mathbb{F}^*_{p^k}$, where $k$ is the embedding degree. We could, for example, work with the above BLS12-381 curve and have $G_T \subset \mathbb{F}^*_{p^{12}}$.

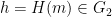

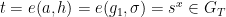

Key generation is very simple: we pick a random integer $x$ within the range $0 < x < r$. The corresponding public key is $a = [x]g \in G_1$, where $g$ is a generator of $G_1$.

To sign a message $m$, we represent the hash of this message $H(m)$ as a point $h \in G_2$ on the curve.8 The signature is found by performing scalar multiplication of the point: $\sigma = [x]h$.

Pairing comes into play when we verify the signature $\sigma$. The verifier uses the broadcast points $(a, \sigma)$ and the message $m$ to check whether

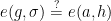

where the scheme uses the bilinearity of the pairing:

![e(g, \sigma) = e(g, [x]h) = e(g, h)^x = e([x]g, h) = e(a, h)](/media/1wsnr33z/_e-g-sigma-e-g-x-h-e-g-h-x-e-x-g-h-e-a-h.png) .
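Key generation, signing and verification can be traced end to end with a toy Python sketch. The group is an assumption for illustration only: $G_1 = G_2 = (\mathbb{Z}_r, +)$ with generator $g = 1$ and $e(A, B) = g_T^{AB \bmod r}$ in the order-$r$ subgroup of $(\mathbb{Z}/p\mathbb{Z})^*$. With $g = 1$ the public key equals the private key, so this has no security at all. `hash_to_g2` is a hypothetical stand-in (SHA-256 reduced mod $r$), not a real hash-to-curve map such as the one standardised in RFC 9380.

```python
import hashlib
import secrets

# Toy bilinear group, for illustration only (NO security): G1 = G2 = (Z_R, +),
# G_T = subgroup of order R in (Z/P_MOD Z)^*, e(A, B) = G_T_GEN^(A*B mod R).
P_MOD, R, G_T_GEN = 1019, 509, 4
g = 1                                   # generator of the toy G1


def pair(A: int, B: int) -> int:
    return pow(G_T_GEN, A * B % R, P_MOD)


def hash_to_g2(message: bytes) -> int:
    """Hypothetical toy hash-to-group (NOT a real hash-to-curve map): SHA-256 mod R."""
    h = int.from_bytes(hashlib.sha256(message).digest(), "big") % R
    return h if h != 0 else 1           # avoid the identity element


def keygen() -> tuple[int, int]:
    x = secrets.randbelow(R - 1) + 1    # private key, 0 < x < R
    return x, x * g % R                 # public key a = [x]g


def sign(x: int, message: bytes) -> int:
    return x * hash_to_g2(message) % R  # sigma = [x]h


def verify(a: int, message: bytes, sigma: int) -> bool:
    return pair(g, sigma) == pair(a, hash_to_g2(message))  # e(g, sigma) == e(a, h)


x, a = keygen()
sigma = sign(x, b"hello")
print(verify(a, b"hello", sigma))             # True
print(verify(a, b"hello", (sigma + 1) % R))   # False
```

The verification line is exactly the chain of equalities above: both sides reduce to $g_T^{x h}$ for a valid signature.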

Picking groups

For pairing-friendly curves such as BLS or Barreto-Naehrig (BN) curves, $G_1$ has points with coordinates in $\mathbb{F}_p$ while $G_2$ has points with coordinates in $\mathbb{F}_{p^2}$. This means that the compressed points of $G_2$ are twice as large as those from $G_1$, and operations with points from $G_2$ are slower than those from $G_1$.

When designing a signature system, protocols can choose for either public keys or signatures to be elements of $G_1$, which speeds up key generation or signing respectively. For example, ZCash pick $G_1$ for their signatures, while Ethereum have picked $G_1$ for their public keys in ETH2. Note that neither choice affects the benchmarks for verification; this is instead limited by the size (and Hamming weight) of the integers $p$ and $r$.

A Bit on Bit Security

Infeasible vs. Impossible

Cryptography is built on a set of very hard, but not impossible, problems.

For authenticated encryption, such as AES-GCM or ChaCha20-Poly1305, correctly guessing the key allows you to decrypt encrypted data. For asymmetric protocols, whether it’s computing a factor of an RSA modulus or the private key for an elliptic curve key-pair, you’re usually one integer away from breaking the protocol. Using the above BLS signature example, an attacker who knows Alice’s private key $x$ could impersonate Alice and sign any chosen message.

Cryptography is certainly not broken though. To ensure our protocols are secure, we instead design problems which we believe are infeasible to solve in a reasonable amount of time. We often describe the complexity of breaking a cryptographic problem by estimating the number of operations we would need to perform to correctly recover the secret. If a certain problem requires performing $2^n$ operations, then we call this an $n$-bit strength problem.10

As an example, let’s consider AES-256, where the key is some random 256-bit sequence.11 Currently, the best known attack to decrypt a given AES ciphertext, encrypted with an unknown key, is to simply guess a random key and attempt to decrypt the message. This is known as a brute-force attack. Defining our operation as “guess a key, attempt to decrypt”, we’ll need at most $2^{256}$ operations to stumble upon the secret key. We therefore say that AES-256 has 256-bit security. This same argument carries over exactly to AES-128 and AES-192, which have 128- and 192-bit security respectively, or the ChaCha/Salsa ciphers, which have either 128- or 256-bit keys.

How big is big?

Humans can have a tough time really appreciating how large big numbers are,12 but even for the “lower” bound of 128-bit security, estimates end up comparing computation time with a galaxy of computers working for the age of the universe before terminating with the correct key! For those who are interested in a visual picture of quite how big this number is, there’s a fantastic video by 3blue1brown, How Secure is 256 bit security, and if you’re skimming this blogpost and would rather do some reading, this StackExchange question has some entertaining financial costs for performing such a large number of operations.
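A back-of-the-envelope calculation gives a feel for the 128-bit case. The throughput of $10^{18}$ guesses per second is an assumed round number for a single very large machine, not a measured figure, and the age of the universe is approximate.

```python
OPERATIONS = 2**128
OPS_PER_SECOND = 10**18               # assumption: one machine making 1e18 guesses per second
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60
AGE_OF_UNIVERSE_YEARS = 1.38e10       # approximate

years = OPERATIONS / OPS_PER_SECOND / SECONDS_PER_YEAR
print(f"{years:.2e} years")           # about 1.08e+13 years
print(f"{years / AGE_OF_UNIVERSE_YEARS:.0f} times the age of the universe")
```

Even with these generous assumptions, one such machine would need several hundred lifetimes of the universe, which is why a large number of machines has to enter the comparison.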

Asymmetric Bit-Security

For a well-designed symmetric cipher, the bit-strength is set by the cost of a brute force search across the key-space. Similarly for cryptographic hash functions, the bit-strength for preimage resistance is the bit-length of the hash output (collision resistance is always half of this due to the Birthday Paradox).

For asymmetric protocols, estimating the precise bit-strength is more delicate. The security of these protocols relies on mathematical problems which are believed to be computationally hard. For RSA, security is assured by the assumed hardness of integer factorisation. For Diffie-Hellman key exchanges, security comes from the assumed hardness of the computational Diffie-Hellman problem (and by reduction, the discrete logarithm problem).

Although we can still think of breaking these protocols by “guessing” the secret values (a prime factor of a modulus, the private key of some elliptic curve key-pair), our best attempts are significantly better than a brute force approach; we can take the mathematical structure which allows the construction of these protocols and build algorithms to recover secret values from shared, public ones.

To have an accurate security estimate for an asymmetric protocol, we must then have a firm grasp of the computational complexity of the state-of-the-art attacks against the specific hard problem we consider. As a result, parameters of asymmetric protocols must be fine-tuned as new algorithms are discovered to maintain assurance of a certain bit-strength.

To attempt to standardise asymmetric ciphers, cryptographers generate their parameters (e.g. picking the size of the prime factors of an RSA modulus, or the group order for a Diffie-Hellman key-exchange) such that the current best known attacks require (at least) $2^{128}$, $2^{192}$ or $2^{256}$ operations.

RSA Estimates: Cat and Mouse

As a concrete example, we can look at the history of estimates for RSA. When first presented in 1977, Rivest, Shamir and Adleman proposed conservative security estimates for 664-bit moduli, estimating 4 billion years (corresponding to roughly $2^{77}$ operations) to successfully factor. Their largest proposed modulus had 1600 bits, with an estimated 130-bit strength based on the state-of-the-art factoring algorithm at the time (due to Schroeppel).

The RSA algorithm gave a new motivation for the design of faster factoring algorithms, each of which would push the necessary bit-size of the modulus higher to ensure that RSA could remain safe. The current record for integer factorisation is an 829-bit modulus, set on February 28th, 2020, taking “only” 2700 core-years. Modern estimates require a 3072-bit modulus to achieve 128-bit security for RSA implementations.

Estimating computational complexity

Let’s finish this section with a discussion of how we denote computational complexity. The aim is to try and give some intuition for “L-notation” used mainly by researchers in computational number theory for estimating the asymptotic complexity of their algorithms.

Big-O Notation

A potentially more familiar notation for our readers is “Big-$O$” notation, which estimates the number of operations as an upper bound based on the input to the algorithm. For an input of length $n$, a linear time algorithm runs in $O(n)$, which means if we double the input length $n$, we would expect the running time to also double. A naive implementation of multiplication of numbers with $n$ digits runs in $O(n^2)$, or quadratic time. Doubling the length of the input, we would expect a four-times increase in computation time. Other common algorithms run in logarithmic: $O(\log n)$, polylogarithmic: $O((\log n)^c)$ or polynomial time: $O(n^c)$, where $c$ is a fixed constant. An algorithm which takes the same amount of time regardless of input length is known as constant time, denoted by $O(1)$.

In order to protect against side-channel attacks, constant time algorithms are particularly important when implementing cryptographic protocols. If a secret value is supplied to a variable-time algorithm, an attacker can carefully measure the computation time and learn about private information. By ensuring algorithms run in constant time, an attacker is unable to learn about private values through timing when they are supplied as arguments. Note that constant does not (necessarily) mean fast! Looking up values in a hashmap is (on average) $O(1)$ and fast. Computing the scalar multiplication $[n]P$ of a point on a fixed elliptic curve $E$ should, for cryptographic use, take the same time whatever the secret scalar $n$, but this isn’t a particularly fast thing to do! In fact, converting operations to be constant time in cryptography will often slow down the protocol, but it’s a small price to pay to protect against timing attacks.
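A common way to keep scalar multiplication independent of the secret scalar is a Montgomery ladder, which performs the same pair of group operations for every bit. The sketch below is a minimal illustration under stated assumptions. It uses the multiplicative analogue (modular exponentiation, where $g^x$ plays the role of $[x]P$) to stay short. The helper names `cswap` and `ladder_pow` are our own, and the fixed bit length `nbits` stands in for the bit length of the group order. CPython big-integer arithmetic gives no timing guarantees, so treat this as a picture of the operation pattern, not a side-channel-safe implementation.

```python
# Illustrative sketch only: names are our own and Python is not constant time.
from typing import Tuple


def cswap(bit: int, a: int, b: int) -> Tuple[int, int]:
    """Swap a and b when bit == 1, using masking instead of an if-statement."""
    mask = -bit  # 0 -> 0, 1 -> -1 (all ones in two's complement)
    t = mask & (a ^ b)
    return a ^ t, b ^ t


def ladder_pow(g: int, x: int, p: int, nbits: int) -> int:
    """Compute g^x mod p with a Montgomery ladder over a fixed nbits-bit scalar.

    Every iteration performs one multiplication and one squaring, whatever
    the value of the current bit of x.
    """
    if not 0 <= x < (1 << nbits):
        raise ValueError("scalar must fit in nbits bits")
    r0, r1 = 1, g % p  # invariant: r1 == r0 * g (mod p)
    for i in reversed(range(nbits)):
        bit = (x >> i) & 1
        r0, r1 = cswap(bit, r0, r1)
        r1 = r0 * r1 % p
        r0 = r0 * r0 % p
        r0, r1 = cswap(bit, r0, r1)
    return r0


print(ladder_pow(5, 117, 1009, 16) == pow(5, 117, 1009))
```

The ladder agrees with Python’s built-in `pow`; the difference is that it always runs `nbits` iterations with one multiplication and one squaring each, regardless of the bits of the exponent.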

As a final example, we mention an algorithm developed by Shanks, a meet-in-the-middle algorithm for solving the discrete logarithm problem in a generic Abelian group. It is sufficient to consider prime order groups, as the Pohlig-Hellman algorithm reduces the problem for a composite order group to subproblems in subgroups of prime order, reconstructing the solution using the Chinese remainder theorem.
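To see why prime order groups suffice, here is a minimal Pohlig-Hellman sketch in $(\mathbb{Z}/p\mathbb{Z})^*$, written multiplicatively so that $g^x$ stands in for $[x]P$. It assumes the order $n$ of $g$ is known and small enough to factor by trial division, and it solves each prime order subproblem by brute force, where a real implementation would use BSGS or Pollard’s rho. All function names are our own.

```python
# Illustrative sketch: toy sizes only, names are our own.
from math import prod
from typing import Dict, List


def factorise(n: int) -> Dict[int, int]:
    """Factor n by trial division (only suitable for toy group orders)."""
    factors: Dict[int, int] = {}
    d = 2
    while d * d <= n:
        while n % d == 0:
            factors[d] = factors.get(d, 0) + 1
            n //= d
        d += 1
    if n > 1:
        factors[n] = factors.get(n, 0) + 1
    return factors


def brute_force_dlog(g: int, h: int, order: int, p: int) -> int:
    """Exhaustively find x in [0, order) with g^x == h (mod p)."""
    acc = 1
    for x in range(order):
        if acc == h:
            return x
        acc = acc * g % p
    raise ValueError("no discrete logarithm found")


def crt(residues: List[int], moduli: List[int]) -> int:
    """Chinese remainder theorem for pairwise coprime moduli."""
    M = prod(moduli)
    x = 0
    for r, m in zip(residues, moduli):
        Mi = M // m
        x += r * Mi * pow(Mi, -1, m)
    return x % M


def pohlig_hellman(g: int, h: int, n: int, p: int) -> int:
    """Solve g^x == h (mod p), where g has (composite) order n in (Z/pZ)^*."""
    residues, moduli = [], []
    for q, e in factorise(n).items():
        gamma = pow(g, n // q, p)  # generator of the subgroup of order q
        x_q = 0
        for k in range(e):
            # Remove the base-q digits already found, then project into the
            # order-q subgroup so that only the k-th digit remains.
            h_k = pow(pow(g, -x_q, p) * h % p, n // q ** (k + 1), p)
            x_q += brute_force_dlog(gamma, h_k, q, p) * q ** k
        residues.append(x_q)
        moduli.append(q ** e)
    return crt(residues, moduli)


p = 8101  # prime, with p - 1 = 8100 = 2^2 * 3^4 * 5^2
g = 6
n = next(k for k in range(1, p) if pow(g, k, p) == 1)  # order of g, by brute force
x = 1234 % n
print(pohlig_hellman(g, pow(g, x, p), n, p) == x)
```

Every subproblem lives in a subgroup of order 2, 3 or 5, so the work is governed by the largest prime factor of the order rather than by the order itself.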

For a group of prime order $r$, a naive brute-force attack on the discrete logarithm problem would take at most $r$ operations. Shanks’s Baby-Step Giant-Step (BSGS) algorithm runs in two stages and, like all meet-in-the-middle algorithms, reduces the time complexity at the cost of extra memory: a time/space trade-off.

The BSGS algorithm takes in group elements $P, Q$ and looks for an integer $n$ such that $Q = [n]P$. First we perform the baby steps, where $\lceil \sqrt{r}\, \rceil$ multiples $R_i = [x_i]P$ are computed and stored in a hashmap. Next come the giant steps, where up to $\lceil \sqrt{r}\, \rceil$ group elements $S_j = Q + [y_j]P$ are computed. At each giant step, a check for $R_i = S_j$ is performed. When a match is found, $[x_i]P = Q + [y_j]P$, so the algorithm terminates and returns $n = x_i - y_j$. In total, BSGS takes $O(\sqrt{r})$ operations to solve the discrete logarithm problem and requires $O(\sqrt{r})$ space for the hashmap. Pollard’s rho algorithm achieves the same $O(\sqrt{r})$ expected running time without the need to build the large hashmap, which can be prohibitively expensive as the group’s order grows.
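A minimal BSGS sketch, written for a prime order subgroup of $(\mathbb{Z}/p\mathbb{Z})^*$ so that it runs with plain integers: $g^x \bmod p$ plays the role of $[x]P$, the baby steps use $x_i = i$, and the giant steps use $y_j = -jm$ with $m = \lceil \sqrt{r}\, \rceil$, so a match gives $n = x_i - y_j = i + jm$. The function name and the toy parameters are our own choices for illustration.

```python
# Illustrative sketch: the name bsgs and the toy parameters are our own.
from math import isqrt
from typing import Optional


def bsgs(g: int, h: int, r: int, p: int) -> Optional[int]:
    """Find n in [0, r) with g^n == h (mod p), where g has order r mod p."""
    m = isqrt(r - 1) + 1  # m = ceil(sqrt(r)), so every n < r is i + j*m with i, j < m
    # Baby steps: R_i = g^(x_i) with x_i = i, stored in a hashmap R_i -> x_i.
    baby = {}
    acc = 1
    for i in range(m):
        baby.setdefault(acc, i)
        acc = acc * g % p
    # Giant steps: S_j = h * g^(y_j) with y_j = -j*m.
    step = pow(g, -m, p)
    s = h % p
    for j in range(m):
        if s in baby:
            return (baby[s] + j * m) % r  # n = x_i - y_j
        s = s * step % p
    return None  # h is not in the subgroup generated by g


p, g, r = 1019, 4, 509  # 4 = 2^2 generates the subgroup of prime order 509
print(bsgs(g, pow(g, 321, p), r, p))  # 321
```

Here the hashmap holds only 23 entries, against up to 509 exponentiations for brute force; the memory for that table is exactly what Pollard’s rho avoids.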

L-notation

L-notation is a specialised notation used to express the complexity of many of the best known algorithms for cryptographically hard problems, such as factoring or solving the discrete logarithm problem in finite fields.

L-notation gives the expected time complexity of an algorithm with input $n$ (for example, the integer to be factored) as $n$ becomes arbitrarily large:

$$L_n[\alpha, c] = \exp \left\{ (c + o(1))(\ln n)^\alpha (\ln \ln n)^{1-\alpha} \right\}$$

Despite the intimidating expression, we can get a rough picture by understanding how the sizes of $\alpha$ and $c$ affect the expected computational complexity.

- When $0 < \alpha < 1$, we say an algorithm is sub-exponential

- When $\alpha = 0$, the complexity reduces to polylogarithmic time, $L_n[0, c] = (\ln n)^{c + o(1)}$, which is polynomial in the bit length of $n$

- When $\alpha = 1$, the complexity is equivalent to polynomial time, $L_n[1, c] = n^{c + o(1)}$, which is exponential in the bit length of $n$

When it comes to improvements, small reductions in $c$ marginally improve performance, while reductions in $\alpha$ are usually linked with significant improvements in an algorithm’s performance. Efforts to reduce the effect of the $o(1)$ term are known as “practical improvements” and allow for minor speed-ups in computation time, without changing the complexity estimate.
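To get a feel for these regimes, the short sketch below evaluates $\log_2 L_n[\alpha, c]$ with the $o(1)$ term simply dropped, a common back-of-the-envelope simplification rather than an accurate cost model. The function name `log2_L` is our own; the constant 1.923 is $\sqrt[3]{64/9}$, which appears later in this section.

```python
# Illustrative sketch: o(1) is dropped, and the name log2_L is our own.
import math


def log2_L(bits: float, alpha: float, c: float) -> float:
    """Return log2 of L_n[alpha, c] for an n of the given bit length, dropping o(1).

    Working from the bit length keeps everything in floating point, so n can
    be thousands of bits long without ever being constructed.
    """
    ln_n = bits * math.log(2)
    ln_L = c * ln_n ** alpha * math.log(ln_n) ** (1 - alpha)
    return ln_L / math.log(2)


n_bits = 2048
print(log2_L(n_bits, 0, 2))          # alpha = 0: (ln n)^2, polylogarithmic
print(log2_L(n_bits, 1, 1))          # alpha = 1: n itself, about 2048 bits of work
print(log2_L(n_bits, 1 / 2, 1))      # alpha = 1/2
print(log2_L(n_bits, 1 / 3, 1.923))  # alpha = 1/3: cheaper despite the larger c
```

Comparing the last two lines shows why lowering $\alpha$ matters far more than nudging $c$.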

For cryptographers, L-notation appears mostly when considering the complexity of integer factorisation, or solving the discrete logarithm problem in a finite field. In fact, these two problems are intimately related and many sub-exponential algorithms suitable for one of these problems have been adapted to the other.13

The first sub-exponential algorithm for integer factorisation was the index-calculus attack, developed by Adleman in 1979, which runs in $L_n[\frac{1}{2}, \sqrt{2}]$. Two years later, Pomerance (who also popularised L-notation itself) showed that his quadratic sieve ran asymptotically faster, in $L_n[\frac{1}{2}, 1]$ time. Lenstra’s elliptic curve factoring algorithm runs in $L_p[\frac{1}{2}, \sqrt{2}]$, with the interesting property that the running time is bounded by the smallest prime factor $p$ of $n$, rather than by the number $n$ we wish to factor.

The next big step was due to Pollard, Lenstra, Lenstra and Manasse, who developed the number field sieve: an algorithm designed for integer factorisation which would be adapted to also solve the discrete logarithm problem in $\mathbb{F}_p$. Their improved algorithm reduced $\alpha$ in the L-complexity, with an asymptotic running time of $L_n[\frac{1}{3}, c]$.

Initially, the algorithm was designed for a specific case where the input integer was required to have a special form, $n = r^e \pm s$, where both $r$ and $s$ are small. Because of this requirement, this version is known as the special number field sieve (SNFS) and has $c = \sqrt[3]{\frac{32}{9}}$. This is great for numbers such as the Mersenne numbers $2^m - 1$, but fails for general integers.

Efforts to generalise this algorithm resulted in the general number field sieve (GNFS), with only a small increase to $c = \sqrt[3]{\frac{64}{9}} \simeq 1.9$ in the complexity. Very quickly, the GNFS was adapted to solving discrete logarithm problems in $\mathbb{F}_p$, but it took another ten years before this was further generalised to finite fields $\mathbb{F}_{p^k}$ and renamed the tower number field sieve (TNFS), which has the same complexity as the GNFS.

As a “hand-wavey” approximation, problems are cryptographically hard when the input has a few hundred bits for $O(\sqrt{n})$ complexity and a few thousand bits for sub-exponential complexity. For example, if the best known attack runs in $O(\sqrt{n})$ time, we would need $n$ to have 256 bits to ensure 128-bit strength. For RSA, where a modulus $n$ can be factored in $\sim L_n[\frac{1}{3}, 1.9]$ time, we need a modulus of 3072 bits to reach 128-bit security.
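A back-of-the-envelope version of this comparison, assuming the $o(1)$ term can simply be dropped (a rough simplification, not an accurate cost model): an $O(\sqrt{n})$ attack costs half the bit length of $n$, while the GNFS cost is evaluated from $L_n[\frac{1}{3}, \sqrt[3]{64/9}]$. The function names are our own.

```python
# Illustrative sketch: o(1) is dropped, and the function names are our own.
import math

GNFS_C = (64 / 9) ** (1 / 3)  # ~1.923


def generic_bits(group_bits: int) -> float:
    """Security against an O(sqrt(n)) attack: half the bit length."""
    return group_bits / 2


def gnfs_bits(modulus_bits: int, c: float = GNFS_C) -> float:
    """log2 of L_n[1/3, c] for a modulus of the given size, with o(1) dropped."""
    ln_n = modulus_bits * math.log(2)
    return c * ln_n ** (1 / 3) * math.log(ln_n) ** (2 / 3) / math.log(2)


for bits in (1024, 2048, 3072, 4096):
    print(bits, round(gnfs_bits(bits)))
print(generic_bits(256))  # 128.0
```

Dropping $o(1)$ puts a 3072-bit modulus at roughly $2^{139}$ rather than the commonly quoted $2^{128}$, a reminder that formulas like this are only a rough guide.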

Estimating the bit security of pairing protocols

With an overview of pairings and complexity estimation for asymmetric protocols, let’s combine these two areas and look at how we can estimate the security of pairing-friendly curves.

As a concrete example, let’s return to the BLS signature scheme explained at the beginning of the post, and study the assumed hard problems of the protocol together with the asymptotic complexity of their best-known attacks. This offers a great way to look at the security assumptions of a pairing protocol in a practical way. We can take what we study here and apply the same reasoning to any pairing-based protocol which relies on the hardness of the computational Diffie-Hellman problem for its security proofs.

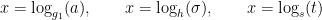

In a BLS signature protocol, the only private value is the private key $x$, which is bounded within the interval $[1, r - 1]$. If this value can be recovered from any public data, the protocol is broken and arbitrary data can be signed by an attacker. We can therefore estimate the bit-security of a pairing-friendly curve in this context by estimating the complexity of recovering $x$ from the protocol.

Note: for the following discussion we assume the protocol is implemented as intended and attacks can only come from the solution of problems assumed to be cryptographically hard. Implementation issues such as side-channel attacks, small subgroup attacks or bad randomness are assumed to not be present.

We assume a scenario where Alice has signed a message $m$ with her private key $x$. Using Alice’s public key $a = [x]g_1$, Bob will attempt to verify her signature $\sigma = [x]h$ before trusting the message. Eve, our attacker, wants to learn the value of $x$ so that she can sign arbitrary messages while impersonating Alice.

In a standard set up, Eve has access to the following data:

- Protocol parameters: the pairing-friendly curves $E(\mathbb{F}_p)$, $E(\mathbb{F}_{p^k})$, the characteristic $p$ and the embedding degree $k$ (and hence $\mathbb{F}_{p^k}$)

- Pairing groups: the prime order subgroups $G_1$, $G_2$ and their generators $g_1$, $g_2$

- Alice’s public key: $a = [x]g_1 \in G_1$

- Alice’s signature of the message: $\sigma = [x]h \in G_2$, as well as the message $m$

Additionally, using these known values Eve can efficiently compute:

- The message as a curve point $h = H(m) \in G_2$, using the protocol’s chosen hash_to_curve algorithm

- Elements of the target group: $e(g_1, h)$ and $e(a, h) = e(g_1, \sigma) = e(g_1, h)^x$

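To recover the private key, Eve has the option to attempt to solve the discrete log problem in any of the three groups $G_1$, $G_2$ and $G_T$:

- Recover $x$ from $g_1$ and $a = [x]g_1 \in G_1$

- Recover $x$ from $h$ and $\sigma = [x]h \in G_2$

- Recover $x$ from $e(g_1, h)$ and $e(a, h) = e(g_1, h)^x \in G_T$

The bit-security for the BLS signature is therefore the minimum number of operations needed to solve any of these three problems.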
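Putting the three routes together, here is a rough sketch of the “minimum over three problems” estimate. It assumes the $o(1)$ term in the finite-field estimate can be dropped and uses the classical $\sqrt[3]{64/9}$ constant from the GNFS discussion above. The function names, and the BLS12-381 sizes plugged in (a 381-bit $p$, a 255-bit $r$ and $k = 12$), are our own inputs rather than part of the protocol description.

```python
# Illustrative sketch: o(1) is dropped, and the function names are our own.
import math

GNFS_C = (64 / 9) ** (1 / 3)  # constant for the classical (G/T)NFS estimate


def nfs_bits(field_bits: float, c: float) -> float:
    """log2 of L_q[1/3, c] for a field with field_bits bits, with o(1) dropped."""
    ln_q = field_bits * math.log(2)
    return c * ln_q ** (1 / 3) * math.log(ln_q) ** (2 / 3) / math.log(2)


def bls_bit_security(r_bits: int, p_bits: int, k: int, c: float = GNFS_C) -> dict:
    """Rough cost (in bits) of each of Eve's three routes to x, plus the minimum."""
    generic = r_bits / 2  # Pollard's rho / BSGS in a group of prime order r
    costs = {
        "G1": generic,
        "G2": generic,
        # In G_T Eve can run NFS on all of F_{p^k}^* or a generic attack in
        # the order-r subgroup, whichever is cheaper.
        "GT": min(nfs_bits(k * p_bits, c), generic),
    }
    costs["overall"] = min(costs.values())
    return costs


# BLS12-381: p has 381 bits, r has 255 bits and k = 12.
print(bls_bit_security(r_bits=255, p_bits=381, k=12))
```

With this classical constant the finite-field route (around $2^{164}$ for NFS on the 4572-bit field, under this crude model) is not the cheapest: all three routes bottom out at the generic $\sqrt{r}$ bound of about $2^{127.5}$.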

Elliptic Curve Discrete Logarithm Problem

Given a point and its scalar multiple, $P, Q = [n]P \in E(\mathbb{F}_q)$, the elliptic curve discrete logarithm problem (ECDLP) is to recover the value of $n$. Since Miller (1986) and Koblitz (1987) independently suggested using this problem for cryptographic protocols, no attacks have been developed for generic curves which are any faster than those we have for a generic abelian group.14

Therefore, to solve either of the first two problems, recovering $x$ from $a = [x]g_1 \in G_1$ or from $\sigma = [x]h \in G_2$, Eve has no choice but to perform $O(\sqrt{r})$ curve operations to recover the value of $x$. This makes estimating the bit security of these two problems very easy. To enjoy $\kappa$-bit security for these problems, we only need to ensure that the prime order $r$ has at least $2\kappa$ bits. Remember that this is only true when the subgroup has prime order. If $r$ were composite, then the number of operations would be $O(\sqrt{\ell})$, where $\ell$ is the largest prime factor of $r$, reducing the security of the curve.
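A quick way to see the effect of a composite order: once Pohlig-Hellman has split the problem, the generic cost is governed by the largest prime factor $\ell$ alone. The helper names below are our own, trial division limits them to toy sizes, and “bits of security” here simply means $\log_2 \sqrt{\ell}$.

```python
# Illustrative sketch: toy sizes only, names are our own.
import math


def largest_prime_factor(n: int) -> int:
    """Largest prime factor of n > 1, by trial division."""
    largest, d = 1, 2
    while d * d <= n:
        while n % d == 0:
            largest, n = d, n // d
        d += 1
    return max(largest, n) if n > 1 else largest


def generic_security_bits(order: int) -> float:
    """log2(sqrt(l)) for the largest prime factor l of the group order."""
    return math.log2(largest_prime_factor(order)) / 2


prime_order = 7919                 # a prime
composite_order = 2**10 * 7919     # a larger, but composite, group order
print(generic_security_bits(prime_order))
print(generic_security_bits(composite_order))  # no better than 7919 alone
print(math.log2(composite_order) / 2)          # what the size alone would suggest
```

The extra factor of $2^{10}$ makes the group larger but adds nothing to its generic security.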

Note: As $G_1$ is usually generated from points on the curve $E(\mathbb{F}_p)$ while $G_2$ is defined over an extension field, the CPU time to solve problem one will be shorter than for problem two, as an individual operation in $G_1$ will be more efficient than one in $G_2$.

Finite Field Discrete Logarithm Problem

In contrast to the group of points on an elliptic curve, mathematicians have been able to use the structure of the finite fields $\mathbb{F}_{p^k}$ to derive sub-exponential algorithms to solve the discrete logarithm problem.

Until fairly recently, the best known attack for solving the discrete logarithm problem in $\mathbb{F}_{p^k}$ was the tower number field sieve, which runs in $L_q[\frac{1}{3}, \sqrt[3]{\frac{64}{9}}]$ time for $q = p^k$. To reach 128-bit security, we would need a 3072-bit modulus $q = p^k$; for pairing-friendly curves with a target group inside $\mathbb{F}_{p^k}^*$, we would then need the characteristic $p$ to have at least $3072/k$ bits (256 bits for $k = 12$). Additionally, if we work within a subgroup of $\mathbb{F}_{p^k}^*$, this subgroup must have an order whose largest prime factor has at least 256 bits to protect against generic attacks such as Pollard’s rho or BSGS.

Just as with the original SNFS, where factoring numbers of a special form had a lower asymptotic complexity, recent research on solving the discrete logarithm problem in fields $\mathbb{F}_{p^k}$ shows that when either $p$ or $k$ has certain properties, the complexity is lowered:

- The Special Number Field Sieve for $\mathbb{F}_{p^k}$ showed that when $p$ has a sparse representation, such as the Solinas primes used in cryptography for efficient modular arithmetic, the complexity is reduced to $L_{p^k}[\frac{1}{3}, c]$ for $c = \lambda \sqrt[3]{\frac{32}{9}}$, where $\lambda$ is some small multiplicative constant. This factor reduces to one when the primes are large enough.

- The Extended Tower Number Field Sieve (exTNFS) shows that when we can non-trivially factor the embedding degree $k$ (for example $k = 12 = 3 \cdot 4$), the complexity can reduce to $L_{p^k}[\frac{1}{3}, \sqrt[3]{\frac{48}{9}}]$. Additionally, this can be combined with the progress from the SNFS, allowing a complexity of $L_{p^k}[\frac{1}{3}, \sqrt[3]{\frac{32}{9}}]$, even for medium15 sized primes. The combination of these advances is referred to as the SexTNFS.

In pairing-friendly curves, the characteristic of the field is chosen to be sparse for performance, and the embedding degree is often factorable, e.g. $k = 12$ for both BLS12 and BN curves. This means the bit-security of many pairing-friendly curves should be estimated by the complexity of the special case SexTNFS, rather than the GNFS.
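To see what these smaller constants mean for a curve like BLS12-381, whose target field $\mathbb{F}_{p^{12}}$ has $12 \cdot 381 = 4572$ bits, the sketch below evaluates $L_{p^k}[\frac{1}{3}, c]$ for the three constants above with the $o(1)$ term dropped. Only the trend is meaningful: the absolute numbers differ from the published estimates discussed below, because the neglected $o(1)$ term is exactly what those papers argue must be analysed precisely. The names are our own.

```python
# Illustrative sketch: o(1) is dropped, and the names are our own.
import math


def nfs_bits(field_bits: float, c: float) -> float:
    """log2 of L_q[1/3, c] for a field with field_bits bits, with o(1) dropped."""
    ln_q = field_bits * math.log(2)
    return c * ln_q ** (1 / 3) * math.log(ln_q) ** (2 / 3) / math.log(2)


CONSTANTS = {
    "GNFS / TNFS": (64 / 9) ** (1 / 3),
    "exTNFS": (48 / 9) ** (1 / 3),
    "SexTNFS": (32 / 9) ** (1 / 3),
}

# BLS12-381: p has 381 bits and k = 12, so F_{p^12} has 12 * 381 = 4572 bits.
q_bits = 12 * 381
for name, c in CONSTANTS.items():
    print(f"{name:<12} c = {c:.3f}  cost ~ 2^{nfs_bits(q_bits, c):.0f}")
```

Under this crude model the estimate falls from roughly $2^{164}$ to roughly $2^{130}$ as $c$ shrinks from $\sqrt[3]{64/9}$ to $\sqrt[3]{32/9}$.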

New Estimates for pairing-friendly curves

Discovery of the SexTNFS meant almost all pairing-friendly curves which had been generated prior had to be re-evaluated to estimate the new bit-security of the discrete logarithm problem.

This was first addressed in Challenges with Assessing the Impact of NFS Advances on the Security of Pairing-based Cryptography (2016), where, among other estimates, they studied the complexity of attacking a 256-bit BN curve, which previously had an estimated security of 128 bits. With the advances of the SexTNFS, they estimated a new security level of between 110 and 150 bits, depending on the values of the constants appearing in the $o(1)$ term in $L_n[\alpha, c]$.

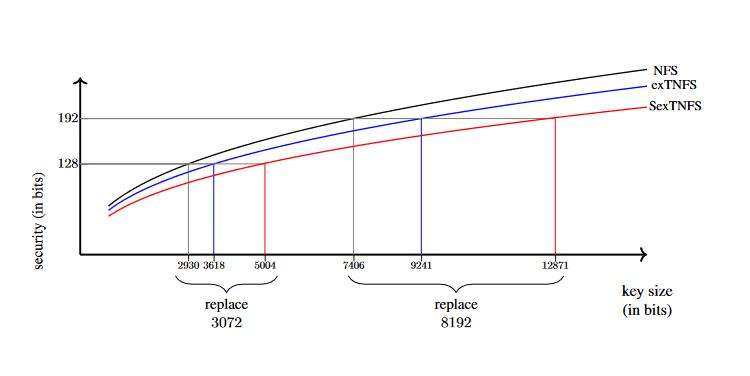

A simplification of this complexity was graphed in Updating key size estimations for pairings (2019; eprint 2017) and allows an estimate of expected key sizes for pairing-friendly curves. With the advances of the SexTNFS, we must increase the bit length of $p^k$ to approximately 5004 bits for 128-bit security and 12871 bits for 192-bit security.

In the same paper, they argue that the $o(1)$ constant term must be precisely understood, especially for the newer exTNFS and SexTNFS. By using careful polynomial selection in the sieving process, they estimate that the 256-bit BN curve has only 100-bit security, and other pairing-friendly curves will be similarly affected. They conclude that for 128-bit security, we should be considering pairing-friendly curves with larger primes, such as:

- a BLS-12 curve over a 461-bit field (previously 381-bit)

- a BN curve over a 462-bit field (previously 256-bit)

Guillevic released A short-list of pairing-friendly curves resistant to Special TNFS at the 128-bit security level (2019) looking again at the estimated bit security of curves and published a list of the best curves for each case with (at least) 128 bit security. Additionally, there is an open-source repository of SageMath code useful for estimating the security of a given curve https://gitlab.inria.fr/tnfs-alpha/alpha.

In short, Guillevic recommends

For efficient non-conservative pairings, choose BLS12-381 (or any other BLS12 curve or Fotiadis–Martindale curve of roughly 384 bits), for conservative but still efficient, choose a BLS12 or a Fotiadis–Martindale curve of 440 to 448 bits.

with the motivation that

The security between BLS12-446 and BLS12-461 is not worth paying an extra machine word, and is close to the error margin of the security estimate.

Despite the recent changes in security estimates, there is a wealth of new curves which promise 128-bit security, many of which can be dropped into place in current pairing protocols. For those who already have a working implementation using BLS12-381 in a more rigid space, experiments with Guillevic’s Sage code seemed to suggest that application of the SexTNFS would only marginally reduce the security to ~120 bits, which is still a totally infeasible problem to solve.

Conclusions

TL;DR

Algorithmic improvements have recently reduced the bit-security of pairing-friendly curves used in pairing-based cryptography, but not by enough to warrant serious concern about any current implementations.

Recommendations

The reductions put forward by improvements in the asymptotic complexity of the SexTNFS do not weaken current pairing-based protocols significantly enough to cause a mass panic of protocol changes. The drop from 128-bit security to ~120-bit security leaves the protocol secure from all reasonable attacks, but it does change how we talk about the bit-security of specific pairing-friendly curves.

Before a pairing-based protocol with “only” 120 or even 100-bit security is broken, chances are, some other security vulnerability will be exposed which requires less than a universe’s computation power to break. Perhaps a more looming threat is the arrival of quantum computers which break pairing-based protocols just like all cryptographic protocols which depend on the hardness of the discrete logarithm problem for some Abelian group, but that’s for another blog post.

For the most part, the state-of-the-art improvements which affect pairing-based protocols should be understood to ensure our protocols behave as they are described. Popular curves, such as BLS12-381, can still be described as safe, pairing-friendly curves, just not 128-bit secure pairing curves. The newly developed curves become important if you wish to advertise security to a specific NIST level, or simply state 128-bit security of a protocol implementation. In these scenarios, we must keep up to date and use the new, fine-tuned curves such as the Fotiadis–Martindale curve FM17 or the modified BLS curve BLS12-440.

Computational Records

As a final comment, it’s interesting to look at where the current computation records stand for cryptographically hard problems and compare them to standard security recommendations:

- The RSA factoring record is for an 829-bit modulus. The current recommendations are 2048–4096-bit moduli, with 128-bit security for 3072-bit moduli.

- The NFS Diffie-Hellman record for $\mathbb{F}_p$ is for a 795-bit prime modulus. Recommended groups lie in the range of 1536–4096-bit prime moduli.

- One ECDLP record was completed on the bitcoin curve secp256k1, with a 114-bit private key. The current recommendation is to use 256–512-bit keys.

- The current pairing-friendly ECDLP record was against a BN curve of 114-bit group order. Pairing-friendly curves are usually generated with ~256-bit prime order.

- The current standing TNFS record is for a 512-bit field $\mathbb{F}_{p^k}$. In comparison, BLS12-381 has $\mathbb{F}_{p^{12}}$ with 4572 bits.

We see that of all these computationally hard problems, the biggest gap between computational records and security estimates is for solving the discrete log problem using the TNFS. Partially, this is historical; solving the discrete log in $\mathbb{F}_{p^k}$ has only really gained interest *because* of pairing-based protocols and this area is relatively new. However, even in the context of pairing-friendly curves, the most significant attack used the familiar Pollard’s rho algorithm to solve the discrete logarithm problem on the elliptic curve, rather than the (Sex)TNFS on the output of the pairing.

The margins we give cryptographically hard problems have a certain level of tolerance. Bit strengths of 128 and 256 are targets aimed for during protocol design, and when these advertised margins are attacked and research shows there are novel algorithms which have lower complexity, cryptographers respond by modifying the protocols. However, after such an attack, earlier protocols often remain robust, with the associated cryptographic problems still infeasible to solve.

This is the case for the pairing-friendly curves that we use today. Modern advances have pushed cryptographers to design new 128 bit secure curves, but older curves such as the ones found in BLS12-381 are still great choices for cryptographic protocols and thanks to the slightly smaller primes used, will be that much faster than their 440-bit contemporaries.

Summary

- Pairing-based cryptography introduces a relatively new set of interesting protocols which have been developed over the past twenty years.

- A pairing is a map which takes elements from two groups and returns an element in a third group.

- For all practical implementations, we use elliptic curve pairings, which take points as input and return elements of $\mathbb{F}_{p^k}$.

- Pairing-based protocols offer a unique challenge to cryptographers, requiring the generation of special pairing-friendly elliptic curves which allow for efficient pairing, while still maintaining the hardness of the discrete logarithm problem for all three groups.

- Asymmetric protocols are only as secure as the corresponding best known attacks are slow. If researchers improve an algorithm, parameters for the associated cryptographic problem must be increased, resulting in the protocol becoming less efficient.

- Increased attention on pairings in a cryptographic context has inspired more research on improving algorithms which can solve the discrete logarithm problem in $\mathbb{F}_{p^k}$.

- The newly discovered exTNFS and SNFS allow a reduction in the complexity of solving the discrete logarithm problem in $\mathbb{F}_{p^k}$ using the SexTNFS.

- For pairing-friendly curves generated without knowledge of these algorithms (or before their discovery), the actual bit-strength of a curve is lower than advertised.

- Although research on TNFS and related algorithms is still ongoing, new improvements are only expected to happen in the $o(1)$ contribution of $L_n[\alpha, c]$, which could only cause small modifications to the bit complexity. Any substantial change in complexity would be as surprising as a change in the security of the ECDLP.

- These modifications should only really concern implementations which promise a specific bit-security. From the point of view of real-world security, the improvements offered by the SexTNFS and related algorithms are more psychological than practical.

Appendix: Mathematical Notation

The below review will not be sufficient for a reader not familiar with these topics, but should act as a gentle reminder for a rusty reader.

Groups, Fields and Curves

Groups

For a set $G$ to be considered as a group we require a binary operator $\circ$ such that the following properties hold:

- Closure: for all $a, b \in G$, the composition $a \circ b \in G$

- Associativity: $(a \circ b) \circ c = a \circ (b \circ c)$ for all $a, b, c \in G$

- Identity: there exists an element $e \in G$ such that $e \circ a = a \circ e = a$ for all $a \in G$

- Inverse: for every element $a \in G$ there is an element $b \in G$ such that $a \circ b = b \circ a = e$

An Abelian group has the additional property that the group law is commutative:

- Commutativity: $a \circ b = b \circ a$ for all elements $a, b \in G$.
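For small finite sets, the axioms above can be checked by brute force. The sketch below (function names are our own, and the cost is cubic in the set size, so it is only for toy examples) checks addition modulo 7, multiplication modulo 7 including 0, and the permutations of three objects under composition, which form a group that is not Abelian.

```python
# Illustrative sketch: brute-force checks for toy sets, names are our own.
from itertools import permutations, product


def is_group(elements, op) -> bool:
    """Brute-force check of the four group axioms for a small finite set."""
    elems = list(elements)
    members = set(elems)
    # Closure
    if any(op(a, b) not in members for a, b in product(elems, repeat=2)):
        return False
    # Associativity
    if any(op(op(a, b), c) != op(a, op(b, c)) for a, b, c in product(elems, repeat=3)):
        return False
    # Identity
    identities = [e for e in elems if all(op(e, a) == a == op(a, e) for a in elems)]
    if not identities:
        return False
    e = identities[0]
    # Inverses
    return all(any(op(a, b) == e == op(b, a) for b in elems) for a in elems)


def is_abelian(elements, op) -> bool:
    """A group whose operation is also commutative."""
    elems = list(elements)
    return is_group(elems, op) and all(
        op(a, b) == op(b, a) for a, b in product(elems, repeat=2)
    )


def compose(s, t):
    """Compose permutations stored as tuples: (s o t)(i) = s[t[i]]."""
    return tuple(s[i] for i in t)


S3 = list(permutations(range(3)))  # all 6 permutations of {0, 1, 2}

print(is_abelian(range(7), lambda a, b: (a + b) % 7))  # addition mod 7
print(is_group(range(7), lambda a, b: a * b % 7))      # 0 has no inverse
print(is_group(S3, compose), is_abelian(S3, compose))  # a non-Abelian group
```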

Fields and Rings

A field is a set of elements which has a group structure for two operations: addition and multiplication. When considering the group multiplicatively, we remove the identity of the additive group from the set to ensure that every element has a multiplicative inverse. Examples of fields are the set of real numbers $\mathbb{R}$ and the set of integers modulo a prime $p$ (see below).

We denote the multiplicative group of a field $\mathbb{F}$ as $\mathbb{F}^*$.

A ring is very similar, but we relax the criterion that every non-zero element has a multiplicative inverse. An example of a ring is the set of integers $\mathbb{Z}$, where every integer $a$ has an additive inverse, $a + (-a) = 0$, but for $a \in \mathbb{Z}$ we do not in general have $a^{-1} \in \mathbb{Z}$ (unless $a = 1$ or $a = -1$).

Finite Fields

Finite fields, also known as Galois fields, are denoted in this blog post as $\mathbb{F}_q$ for $q = p^k$, for some prime $p$ and positive integer $k$. When $k = 1$, we can understand $\mathbb{F}_p$ as the set of elements $\{0, 1, \ldots, p - 1\}$ with arithmetic modulo $p$, which is closed under addition and, excluding 0, is also closed under multiplication. The multiplicative group is $\mathbb{F}_p^* = \{1, 2, \ldots, p - 1\}$.
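For $k = 1$, $\mathbb{F}_p$ is just integer arithmetic modulo $p$. This small sketch (the helper name is our own) checks that every non-zero element of $\mathbb{F}_{11}$ has a multiplicative inverse, computed via Fermat’s little theorem, and contrasts it with the ring $\mathbb{Z}/12\mathbb{Z}$, where $2$ has no inverse.

```python
# Illustrative sketch: the helper name inverse_mod_p is our own.
def inverse_mod_p(a: int, p: int) -> int:
    """Inverse in F_p via Fermat's little theorem: a^(p-1) = 1, so a^(p-2) = a^(-1)."""
    if a % p == 0:
        raise ZeroDivisionError("0 has no multiplicative inverse in F_p")
    return pow(a, p - 2, p)


p = 11
for a in range(1, p):
    inv = inverse_mod_p(a, p)
    assert a * inv % p == 1
    assert inv == pow(a, -1, p)  # Python 3.8+ computes modular inverses directly
print(f"every non-zero element of F_{p} is invertible")

# In the ring Z/12Z (12 is not prime), 2 has no multiplicative inverse.
try:
    pow(2, -1, 12)
except ValueError:
    print("2 has no inverse modulo 12")
```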

When $k > 1$, the finite field $\mathbb{F}_q$ has elements which are polynomials in $\mathbb{F}_p[x]$ of degree less than $k$. The field is generated by first picking an irreducible polynomial $f \in \mathbb{F}_p[x]$ of degree $k$ and considering the field $\mathbb{F}_q = \mathbb{F}_p[x] / (f)$. Here the quotient by $f$ can be thought of as using $f$ as the modulus when composing elements of $\mathbb{F}_q$.
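A minimal sketch of multiplication in $\mathbb{F}_p[x]/(f)$, with polynomials stored as coefficient lists (lowest degree first) and $f$ assumed monic and irreducible. The example uses $p = 7$ and $f = x^2 + 1$, which is irreducible because $-1$ is not a square modulo 7, giving $\mathbb{F}_{49}$; the helper name is our own.

```python
# Illustrative sketch: the helper name poly_mulmod is our own.
from typing import List


def poly_mulmod(a: List[int], b: List[int], f: List[int], p: int) -> List[int]:
    """Multiply a and b in F_p[x]/(f).

    Polynomials are coefficient lists, lowest degree first; f must be monic
    (leading coefficient 1) of degree k, and the result has length k.
    """
    out = [0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] = (out[i + j] + ai * bj) % p
    k = len(f) - 1
    # Reduce from the top: x^k = -(f_0 + f_1 x + ... + f_{k-1} x^{k-1}) mod f.
    for d in range(len(out) - 1, k - 1, -1):
        c = out[d]
        if c:
            for i in range(k):
                out[d - k + i] = (out[d - k + i] - c * f[i]) % p
            out[d] = 0
    return out[:k] + [0] * max(0, k - len(out))


# F_49 = F_7[x]/(x^2 + 1): the class of x squares to -1 = 6.
f, p = [1, 0, 1], 7
print(poly_mulmod([0, 1], [0, 1], f, p))  # [6, 0]
```

The same function works for any degree: over $\mathbb{F}_2$ with $f = x^3 + x + 1$, multiplying $x$ by $x^2$ reduces $x^3$ to $x + 1$.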

Elliptic Curves

For our purposes, we consider elliptic curves over finite fields, of the form

$$E : y^2 = x^3 + ax + b,$$

where the coefficients $a, b \in \mathbb{F}_q$, and a point on the curve $(x, y)$ is a solution to the above equation where $x, y \in \mathbb{F}_q$, together with an additional point $\mathcal{O}$ which is called the point at infinity. The field $\mathbb{F}_q$, where $q = p^k$, has characteristic $p$, and to use the form of the curve equation above, we must additionally impose $p > 3$. For the curve to be non-singular, we also require $4a^3 + 27b^2 \neq 0$.

The set of points on an elliptic curve forms a group, and the point at infinity acts as the identity element: $P + \mathcal{O} = \mathcal{O} + P = P$, and every point has an inverse, with $P + (-P) = \mathcal{O}$. The group law on an elliptic curve is efficient to implement. Scalar multiplication is realised by repeated addition: $[3]P = P + P + P$.
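The group law and scalar multiplication can be sketched in Python on the toy curve $E : y^2 = x^3 + x$ over $\mathbb{F}_{11}$, an illustrative choice. The chord-and-tangent formulas below are the standard affine ones. For scalar multiplication the sketch swaps in double-and-add, which computes the same $[n]P$ as repeated addition using $O(\log n)$ group operations instead of $n - 1$. The names `add`, `neg` and `scalar_mul`, and the use of `None` for $\mathcal{O}$, are assumptions of this sketch. It needs Python 3.8+ for `pow(x, -1, p)`.

```python
# A minimal sketch of the affine group law and double-and-add scalar
# multiplication on the toy curve E: y^2 = x^3 + x over F_11.
p, a, b = 11, 1, 0
O = None  # the point at infinity, the identity element

def neg(P):
    """Return -P (reflect in the x-axis); -O = O."""
    return O if P is O else (P[0], (-P[1]) % p)

def add(P, Q):
    """Chord-and-tangent addition of two points on E."""
    if P is O:
        return Q
    if Q is O:
        return P
    if P == neg(Q):
        return O  # P + (-P) = O, which also covers doubling a point with y = 0
    if P == Q:
        lam = (3 * P[0] ** 2 + a) * pow(2 * P[1], -1, p) % p  # tangent slope
    else:
        lam = (Q[1] - P[1]) * pow(Q[0] - P[0], -1, p) % p  # chord slope
    x3 = (lam * lam - P[0] - Q[0]) % p
    y3 = (lam * (P[0] - x3) - P[1]) % p
    return (x3, y3)

def scalar_mul(n, P):
    """Compute [n]P by double-and-add over the bits of n."""
    result, addend = O, P
    while n > 0:
        if n & 1:
            result = add(result, addend)
        addend = add(addend, addend)
        n >>= 1
    return result

G = (9, 1)  # on E: 1^2 = 1 and 9^3 + 9 = 738 = 1 (mod 11)
assert add(G, O) == G and add(G, neg(G)) is O
assert scalar_mul(3, G) == add(add(G, G), G)  # [3]G = G + G + G
print(scalar_mul(3, G), scalar_mul(6, G))  # (0, 0) None
```

Here $G = (9, 1)$ has order 6. $[3]G = (0, 0)$ is a point of order 2, and $[6]G = \mathcal{O}$. This naive version is not constant-time, so it is not suitable for secret scalars.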

Footnotes

- One year later, this attack was generalised by Frey and Rück to ordinary elliptic curves, and it is efficient when the embedding degree of the curve is “low enough”. We will make this statement more precise later in the blogpost. ⤶

- As a recap, the decision Diffie-Hellman (DDH) problem is about the assumed hardness to differentiate between the triple

and

and  where

where  are chosen randomly. Note that if the discrete log problem is easy, so is DDH, but being able to solve DDH does not necessarily allow us to solve the discrete log problem. ⤶

are chosen randomly. Note that if the discrete log problem is easy, so is DDH, but being able to solve DDH does not necessarily allow us to solve the discrete log problem. ⤶ - The roots of unity are the elements

such that

such that  . As a set, the

. As a set, the  th roots of unity form a multiplicative cyclic group. ⤶

th roots of unity form a multiplicative cyclic group. ⤶ - It can be proved that the Weil embedding degree

always exists, and that the

always exists, and that the  -torsion group

-torsion group ![E(\mathbb{F}_{p^k})[r] \subset E(\mathbb{F}_{p^k})](/media/szlbuxha/_e-mathbb-f-_-p-k-r-subset-e-mathbb-f-_-p-k.png) decomposes into

decomposes into  Once

Once  has been found, further extending to

has been found, further extending to  will not increase the size of the

will not increase the size of the  -torsion. ⤶

-torsion. ⤶ - In an attempt to not go too far off topic, a divisor can be understood as a formal sum of points

. Given a rational function

. Given a rational function  , the divisor of a function

, the divisor of a function  is a measure of how the curve related to

is a measure of how the curve related to  intersects with the elliptic curve

intersects with the elliptic curve  and is computed as

and is computed as  . For a very good and comprehensive discussion of the mathematics (algebraic geometry) needed to appreciate the computations of pairings, we recommend Craig Costello’s Pairing for Beginners. ⤶

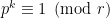

. For a very good and comprehensive discussion of the mathematics (algebraic geometry) needed to appreciate the computations of pairings, we recommend Craig Costello’s Pairing for Beginners. ⤶ - As another way to think about this, we have that

for some integer

for some integer  . We can rephrase this as

. We can rephrase this as  and so the Tate embedding degree

and so the Tate embedding degree  is the order of

is the order of  ⤶

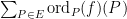

⤶ - The distribution of small

was formally studied in the context of the expectation that a random curve could be attacked by the MOV algorithm. R. Balasubramanian N. Koblitz showed in The Improbability That an Elliptic Curve Has Subexponential Discrete Log Problem under the Menezes—Okamoto—Vanstone Algorithm that the probability that we find a small embedding degree:

was formally studied in the context of the expectation that a random curve could be attacked by the MOV algorithm. R. Balasubramanian N. Koblitz showed in The Improbability That an Elliptic Curve Has Subexponential Discrete Log Problem under the Menezes—Okamoto—Vanstone Algorithm that the probability that we find a small embedding degree:  is vanishingly small. ⤶

is vanishingly small. ⤶ - Hashing bytes to a point on a curve can be efficiently performed, as described in Hashing to Elliptic Curves ⤶

- This BLS triple (Boneh, Lynn and Shacham) is distinct from the BLS of BLS curves (Barreto, Lynn and Scott), although Ben Lynn is common to both of them. ⤶

- The term “operations” here can hide computational complexity. If your operation is the addition of elliptic curve points, it carries an additional hidden cost compared to an operation such as bit shifting or multiplication modulo some prime. Some authors mitigate this by using a clock cycle as the unit of operation. ⤶

- Although here we think of this key as a 256-bit-long stream of 1s and 0s, we’re much more familiar with seeing this 32-byte key as a hexadecimal or base64 encoded string. ⤶

- For fun, we include a tongue-in-cheek estimate. Equipping every one of the world’s 8 billion humans with their very own “world’s fastest supercomputer”, the burning hot computer-planet would be guessing  keys per second. At this rate, it would take 17 million years to break AES-128. For AES-256, you’ll need a billion copies of these supercomputer Earths working for more than 2 billion times longer than the age of the universe. 🥵 ⤶

- In fact, this relationship between factoring and the discrete log problem even shows up in a quantum context. Shor’s algorithm was initially developed as a method to solve the discrete log problem and only then was modified (with knowledge of this close relationship) to produce a quantum factoring algorithm. Shor tells this story in a great video. ⤶

- When an elliptic curve has additional structure, we can sometimes solve the ECDLP using sub-exponential algorithms by mapping points on the curve to some “easier” group. When the order of the elliptic curve is equal to its characteristic ($\#E(\mathbb{F}_p) = p$), Smart’s anomalous curve attack maps points on the elliptic curve to the additive group of integers mod $p$. This means the discrete logarithm problem is as hard as inversion mod $p$ (which is not hard at all!). When the embedding degree $k$ is small enough, we can use pairings to map points on the elliptic curve to the multiplicative group $\mathbb{F}_{p^k}^*$. This is exactly the worry we have here, so let’s go back to the main text! ⤶

- In this context, medium refers to a number theorist’s scale, which we can interpret as “cryptographically sized”. Although 5000-bit numbers seem large to us, number theorists are interested in all the numbers, and the numbers you can store in a computer are still among the smallest of the integers! ⤶
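The footnote characterising the Tate embedding degree as the order of $p$ modulo $r$ translates directly into code. Below is a minimal Python sketch, assuming $r$ is prime. The function name `embedding_degree` and the search cap `k_max` are illustrative choices. The cap matters because for a random curve $k$ is typically enormous, and a brute-force search would never finish.

```python
# A minimal sketch: the embedding degree k is the multiplicative order of p
# modulo r, i.e. the smallest k >= 1 with p^k = 1 (mod r), so r | p^k - 1.
def embedding_degree(p: int, r: int, k_max: int = 64):
    """Return the smallest k <= k_max with r | p^k - 1, or None if none is found."""
    if p % r == 0:
        return None  # p is not invertible mod r, so no such k exists
    acc = 1
    for k in range(1, k_max + 1):
        acc = (acc * p) % r  # acc = p^k mod r
        if acc == 1:
            return k
    return None  # no k up to the (illustrative) cap

print(embedding_degree(11, 3), embedding_degree(7, 5))  # 2 4
```

Consider the toy curve $E : y^2 = x^3 + x$ over $\mathbb{F}_{11}$, which has 12 points. The prime $r = 3$ divides the group order, and `embedding_degree(11, 3)` returns 2. More generally, if a curve over $\mathbb{F}_p$ has $p + 1$ points, any prime $r > 2$ dividing $p + 1$ satisfies $p \equiv -1 \pmod{r}$, so $k = 2$. This is exactly the kind of small embedding degree that makes the MOV attack practical.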