After almost 400 person-days of research, the Project Ava team round up their conclusions on whether machine learning could be harnessed to complement current pentesting capabilities. Read on for the team’s verdict on whether this is likely to be possible in the near future…

Overview

Having spent almost 400 person-days of research effort on Project Ava, we were in a position to assess the efficacy of the approaches we had investigated and to understand which might warrant further research and development.

In this final blog post of the series we answer some key questions:

- What worked well?

- What didn’t work well?

- What further work is required?

What worked well?

Having tried a few different approaches and proofs of concept, we confirmed potential in the application of ML to web application security testing. Of all the approaches followed, the greatest success appeared likely to come from ML approaches focused on detecting specific classes of web application vulnerability.

Analysing semantic relationships within HTTP data revealed potential as an approach. However, using such an approach as a holistic method of identifying multiple vulnerability classes was not as accurate or relevant as one of our approaches specifically developed to find SQLi, and even more specifically, to find SQLi in applications using the MySQL DBMS.

It therefore appears that training ML models to detect specific classes of vulnerability is a promising way forward for further research. On a related note, such an approach might lend itself well to routine re-training on specific applications. For example, if we trained models on distinct applications, perhaps as part of a DevOps process, then changes in application behaviour resulting from code changes could be flagged as potential issues when compared against those models.

An approach using anomaly detection also showed promise – i.e. rather than training models on known-vulnerable data, we showed the potential for training on known-good or ‘secure’ data, and using anomaly detection to identify web responses that might contain a vulnerability because of their outlier properties. This particular approach also led us to a more refined feature engineering process in which we were able to discard any sensitive data within the HTTP data being used for our models, thus addressing the various data protection issues we identified early on when wanting to amass real-world data for model training.
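As a minimal sketch of the idea, and not the Project Ava implementation, the following trains a simple statistical outlier detector on known-good responses only. The feature set, the `ZScoreDetector` class, the threshold of 3 and the sample responses are all assumptions made for illustration. Only structural numbers are kept from each response, so no body text, cookie or parameter value needs to be stored.

```python
import statistics
from typing import Dict, List


def extract_features(status: int, headers: Dict[str, str], body: str) -> List[float]:
    """Reduce a response to structural numbers; no raw content is retained."""
    length = len(body)
    specials = sum(body.count(ch) for ch in "<>'\"")
    return [
        float(status),                         # HTTP status code
        float(length),                         # body length in characters
        float(len(headers)),                   # number of response headers
        specials / length if length else 0.0,  # density of markup/quote characters
    ]


class ZScoreDetector:
    """Flags feature vectors that lie far from a known-good baseline."""

    def __init__(self, threshold: float = 3.0) -> None:
        self.threshold = threshold
        self.means: List[float] = []
        self.stdevs: List[float] = []

    def fit(self, rows: List[List[float]]) -> None:
        if not rows:
            raise ValueError("need at least one known-good sample")
        columns = list(zip(*rows))
        self.means = [statistics.fmean(col) for col in columns]
        # A constant column has zero deviation; the small floor avoids division
        # by zero and means any change in that feature scores as an outlier.
        self.stdevs = [max(statistics.pstdev(col), 1e-6) for col in columns]

    def score(self, row: List[float]) -> float:
        """Largest per-feature z-score of the row against the baseline."""
        return max(abs(x - m) / s for x, m, s in zip(row, self.means, self.stdevs))

    def is_anomalous(self, row: List[float]) -> bool:
        return self.score(row) > self.threshold


# Hypothetical known-good traffic: same page template, varying content length.
headers = {"Content-Type": "text/html", "Server": "example"}
known_good = [
    extract_features(200, headers, "<html><body>" + "x" * n + "</body></html>")
    for n in range(100, 200, 5)
]

detector = ZScoreDetector()
detector.fit(known_good)

normal = extract_features(200, headers, "<html><body>" + "x" * 152 + "</body></html>")
error_page = extract_features(500, headers, "You have an error in your SQL syntax")
```

With this toy data, `detector.is_anomalous(normal)` is false and `detector.is_anomalous(error_page)` is true, because the status code never varied in the baseline. A per-feature z-score is about the simplest outlier test available; a real detector would likely need richer features and a more robust model.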

We did demonstrate, albeit in a fairly limited environment, the potential for success with reinforcement learning techniques combined with genetic algorithms for learning how to uncover specific classes of vulnerability (XSS in this case). Further research is required here, with a need for more rounds of training and adjustment of our evaluation criteria.

We also showed real potential using Expert System-based approaches, again to find specific classes of vulnerability (XSS in our example), albeit with the associated complexities in clearly defining our human-based approaches as a sequence of rules and processes, and the steep learning curve involved in using Expert System frameworks.

While we looked at supervised, unsupervised and reinforcement learning techniques in isolation, the fact that each approach showed at least some promise might indicate that future capabilities in this space will benefit from a combined learning approach.

What didn’t work well?

Overall, it appeared that any approach in this domain that seeks to yield an ‘all singing all dancing’ web application security testing capability using ML would be inordinately complicated. The best approach might be to use multiple distinct ML techniques in conjunction with each other, provided, of course, that the accuracy of those techniques exceeds that of simpler, regular expression-based vulnerability checking approaches. In addition, the approaches that we developed, in their current form, require human operation and observation – we have not developed fully autonomous proofs of concept, but rather human-driven work aids that employ ML techniques. While this was our original intention, we reiterate the outcome because we are likely a long way off a fully automated capability, if one is ever achievable.

Machines lack contextual awareness

As has been identified by others researching in this field, adding automation through intelligent crawling is very difficult. A core difference between machine and human web application testing is that machines lack contextual awareness of the underlying application. While additional ML approaches could be developed to improve contextual awareness, this is unlikely to rival human-based awareness and the subsequent potential for inferring likely vulnerabilities in specific parts of a web application, particularly when assessing the function of specific parts of an application such as searching (potential SQLi) or displaying user-supplied text (potential XSS). Machines may be able to detect such functions, but will still lack an understanding of exactly what it means ‘to search’ or ‘to reflect some user-supplied text’, and what the security implications of those actions really are.

Real-world data

Real-world data is key to training accurate models. Potential issues around data protection relating to real-world HTTP data meant that we instead focused on synthetic data, or data generated from deliberately vulnerable (for training purposes) web applications, with neither approach quite delivering on our ‘real-world’ data needs. We reiterate that the data protection issues are not a barrier, but rather a good reminder to engineer privacy by design into systems. Indeed, this helped us explore the anomaly detection approach, which leveraged feature engineering to avoid having to store any personal or sensitive information as part of model generation within that specific proof of concept.

Accuracy

Related to the need for real-world data is the need to understand the true error rates of the models and approaches that we realised through proof of concept. Despite early signs of potential success with some of our approaches, without access to large, real-world datasets for training and testing we are currently unable to provide certainty around the true error rates of our outputs. In addition, further work will be required to understand how frequently any final models would need to be re-trained. As web application technologies (servers, frameworks, and development languages) continue to evolve, so will the need for any trained models to evolve in order to avoid issues of concept drift and thus the dwindling effectiveness of pre-trained models.
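To make the notion of error rates and re-training triggers concrete, here is a small sketch under stated assumptions. The `error_rates` and `needs_retraining` helpers, the 0.05 tolerance and the labelled results are hypothetical and are not taken from Project Ava. The sketch computes false positive and false negative rates from labelled test results, then compares a later evaluation against the baseline as a crude signal of concept drift.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class ErrorRates:
    false_positive_rate: float
    false_negative_rate: float


def error_rates(pairs: Iterable[Tuple[bool, bool]]) -> ErrorRates:
    """Each pair is (model flagged vulnerable, actually vulnerable)."""
    tp = fp = tn = fn = 0
    for predicted, actual in pairs:
        if predicted and actual:
            tp += 1
        elif predicted and not actual:
            fp += 1
        elif not predicted and actual:
            fn += 1
        else:
            tn += 1
    # Guard against empty classes so the sketch never divides by zero.
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    fnr = fn / (fn + tp) if (fn + tp) else 0.0
    return ErrorRates(fpr, fnr)


def needs_retraining(baseline: ErrorRates, current: ErrorRates,
                     tolerance: float = 0.05) -> bool:
    """True if either error rate has worsened by more than `tolerance`."""
    return (
        current.false_positive_rate - baseline.false_positive_rate > tolerance
        or current.false_negative_rate - baseline.false_negative_rate > tolerance
    )


# Hypothetical labelled evaluations at two points in time.
at_release = [(True, True)] * 9 + [(False, True)] * 1 \
    + [(False, False)] * 18 + [(True, False)] * 2
months_later = [(True, True)] * 6 + [(False, True)] * 4 \
    + [(False, False)] * 18 + [(True, False)] * 2

baseline = error_rates(at_release)
current = error_rates(months_later)
retrain = needs_retraining(baseline, current)
```

In this made-up data the false negative rate rises from 1 in 10 to 4 in 10 while the false positive rate stays the same, so `retrain` is true. Both measurements depend on having trustworthy labelled data, which is exactly the gap described above.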

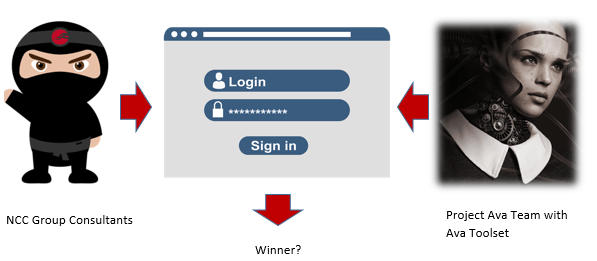

On the topic of accuracy, one of our goals (partly as a matter of fun) is to run an internal web application vulnerability-hunting competition between the outputs of Project Ava and our human security consultants. We hold an annual internal conference (NCC Con), and at a future conference we plan to run a session in which our web application security consultants will compete with Project Ava team members using proofs of concept from Project Ava. Both sides will be presented with a previously unseen web application and given a time limit to find as many vulnerabilities as possible. It will be interesting to see whether Project Ava outputs, operated by Project Ava team members, find more confirmed vulnerabilities than consultants using only their existing toolkits, experience and testing intuition.

What further work is required?

Much further work is required in this space in order to understand the viability of our approaches; we’re also acutely aware that there are many other approaches that we’re yet to experiment with in this domain. Thus, what we’ve done in Project Ava is by no means exhaustive.

For us, obtaining large volumes of real-world data for training and testing without exposing any personal information is a key requirement for next steps – this is of course a general data protection and engineering problem, rather than an issue with ML itself. We shall continue to explore methods of feature engineering to remove dependence on contextual information within HTTP data, in addition to anonymization, homomorphic encryption and differential privacy techniques.
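Of the techniques listed, differential privacy is the easiest to illustrate briefly. The sketch below shows the standard Laplace mechanism applied to a counting query, for example releasing how many responses in a dataset returned an error status. The function names, the choice of query and the epsilon value are assumptions for illustration, not anything built during Project Ava.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise.

    The difference of two independent Exponential(1) variates follows a
    Laplace(0, 1) distribution, so scaling it gives Laplace(0, scale).
    """
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy.

    Adding or removing one record changes a count by at most 1
    (sensitivity 1), so the Laplace mechanism uses scale 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical usage: a fixed seed is used here only to make the sketch
# repeatable; a real release would need a secure source of randomness.
rng = random.Random(0)
noisy_error_count = private_count(100, epsilon=1.0, rng=rng)
```

A smaller epsilon gives stronger privacy but noisier answers, so the value has to be chosen against how much accuracy the model training can afford to lose.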

As part of ongoing research we shall also continue to monitor developments in AI/ML, which is an ever-expanding, rapidly developing field of research in itself and may present new techniques that are applicable to our problem domain.

Unrelated to Project Ava, we shall also continue to research the tangential route we took into looking at NLP combined with sentiment and personality analysis, specifically applied to scenarios of social engineering, as this appears to present a fascinating research domain combining cyber security, psychology and philosophy.

- Part 1 – Understanding the basics and what platforms and frameworks are available

- Part 2 – Going off on a tangent: ML applications in a social engineering capacity

- Part 3 – Understanding existing approaches and attempts

- Part 4 – Architecture, design and challenges

- Part 5 – Development of prototype #1: Exploring semantic relationships in HTTP

- Part 6 – Development of prototype #2: SQLi detection

- Part 7 – Development of prototype #3: Anomaly detection

- Part 8 – Development of prototype #4: Reinforcement learning to find XSS

- Part 9 – An expert system-based approach

- Part 10 – Efficacy demonstration, project conclusion and next steps

Summary

On the matter of using Machine Learning for web application security testing, we have determined that this is by no means an easy feat but there are signs of promise. We hope that this blog series has been interesting to the reader and that more broadly, it serves to help stimulate thought, discussion and further research in the cyber security community on the topic of ML applied to web application (and more general penetration) security testing. We welcome comment, feedback and new ideas in this space.

We have learned a lot in the process but feel we have barely scratched the surface in terms of the potential in this domain. Our research continues and we aim to share as much as possible as we continue on this research adventure.

It is increasingly likely that ML techniques will at some point form part of a web application tester’s standard toolkit. As for those who work in web application security testing, rest easy – your jobs are safe… for now…

“AI might be a powerful technology, but things won’t get better simply by adding AI”

Vivienne Ming

Published by NCC Group

First published on 27/06/19