Mathew Vermeer is a doctoral candidate at the Organisation Governance department of the faculty of Technology, Policy and Management of Delft University of Technology. At the same university, he received both a BSc degree in Computer Science and Engineering and an MSc degree in Computer Science with a specialization in cyber security. His master’s thesis examined (machine learning-based) network intrusion detection systems (NIDSs), their effectiveness in practice, and methods for their proper evaluation in real-world settings. In 2019 he joined the university as a PhD researcher. Mathew’s current research similarly covers NIDS performance and management processes within organizations, as well as external network asset discovery and security incident prediction.

Introduction

The following is a short summary of a study conducted as part of my PhD research at TU Delft in collaboration with Fox-IT. We’re interested in studying the different processes and technologies that determine or impact the security posture of organizations. In this case, we set out to better understand the signature-based network intrusion detection system (NIDS). It has been part of the bedrock of network security for over two decades, and industry reports have been predicting its demise for almost as long [1].

Both industry and academia [2, 3] seem to be pushing for a gradual phasing out of the supposedly “less-capable” [2] signature-based NIDS in favour of machine-learning (ML) methods. A signature-based NIDS uses sets of signatures (or rules) that tell it what to look for in network traffic and flag as potentially malicious, while an ML-based NIDS uses statistical techniques to find potentially malicious anomalies within network traffic. The underlying motivation is that conventional rule- and signature-based methods are deemed unable to keep up with fast-evolving threats and will, therefore, become increasingly obsolete. While some argue for complementary use, others imply that outright replacement is the more effective solution, comparing their own ML system with an improperly configured signature-based NIDS (i.e., one with every single rule from the Emerging Threats community ruleset enabled) to try to drive home the point [4].

On the other hand, walk into any security operations center (SOC) and what you’ll see is analysts triaging alerts generated by NIDSs that still rely heavily on rulesets. So how much of this push is simply hype and how much is backed up by actual data? Do traditional signature-based NIDSs truly no longer add to an organization’s security?

To answer this, we analyzed alert and incident data from Fox-IT, along with the many proprietary and commercial rulesets employed there, spanning mid-2009 to mid-2018. We used this data to examine how Fox-IT manages its own signature-based NIDS to provide security for its clients. The most interesting results are described below.

NIDS environment

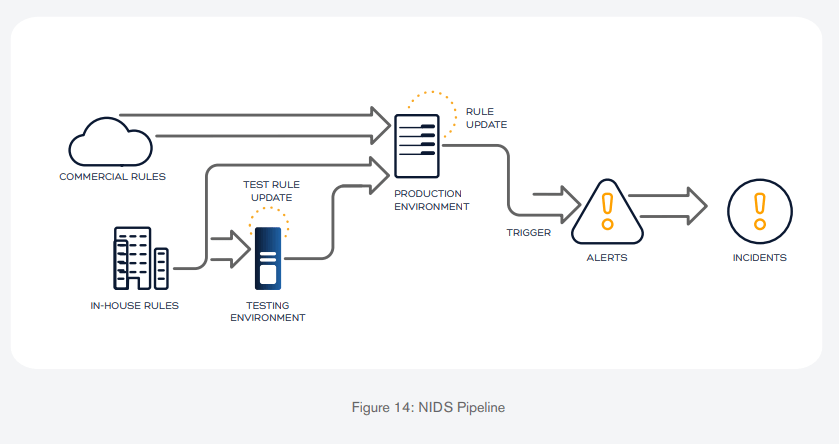

First, it’s helpful to get acquainted with the environment in place at Fox-IT. The figure above roughly illustrates the NIDS pipeline in use at Fox-IT, starting from the NIDS rules on the left and ending with the incidents all the way on the right. Rules are either purchased from a threat intel vendor or created in-house. Of note is that in-house rules are usually tested for a period of time, during which they are tweaked until their performance is deemed acceptable. What counts as acceptable can vary depending on the novelty, severity, etc., of the threat a rule is trying to detect. Once that condition is reached, the rules are added to the production environment, where they can again be modified based on their performance in a real-world setting.

Modelling the workflows in this way allows us to find relationships between alerts, incidents, and rules, as well as the effects that security events have on the manner in which rules are managed.

Custom ruleset important for proper functioning of NIDS

One of the go-to metrics for measuring the effectiveness of security systems is their precision [5]. This is because, as opposed to simple accuracy, precision penalizes false positives. Since false positive detections are something rule developers and SOC analysts often strive to minimize, it stands to reason that such occurrences are taken into account when measuring the performance of an NIDS. We found that the custom rulesets Fox-IT creates in-house are critical for the proper functioning of its NIDS. The precision of Fox-IT’s proprietary ruleset is higher than that of the commercial sets employed: an average of 0.74, in contrast to 0.68 and 0.65 for the two commercial rulesets. Important to note here is that the commercial sets achieve such precision scores only because of extensive tuning by the Fox-IT team prior to introducing the rules into the sensors. Had this not occurred, their measured precision would be much lower (assuming the sensors had not burst into flames beforehand). The Fox-IT ruleset is much smaller than the commercial rulesets: around 2,000 rules versus the tens of thousands of commercial rules from ET and Talos. Nevertheless, rules from Fox-IT’s own ruleset are present in 27% of all true positive incidents. This is surprising, given the massive difference in ruleset size (2,000 Fox-IT rules vs. 50,000+ commercial rules) and, therefore, threat coverage. Both findings demonstrate the higher utility of Fox-IT’s proprietary rules. Still, they play a complementary role to the commercial rules, which is something we explore in a different study.
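To make the difference between precision and accuracy concrete, here is a minimal Python sketch. The counts are hypothetical and chosen only so that precision comes out at 0.74; they are not taken from the Fox-IT data, and the function names are our own.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of flagged incidents that turned out to be truly malicious."""
    if tp + fp == 0:
        raise ValueError("precision is undefined without positive detections")
    return tp / (tp + fp)


def accuracy(tp: int, fp: int, tn: int, fn: int) -> float:
    """Fraction of all decisions (flagged or not) that were correct."""
    return (tp + tn) / (tp + fp + tn + fn)


# Hypothetical counts (assumption, for illustration only):
# benign traffic vastly outnumbers malicious traffic.
tp, fp, tn, fn = 74, 26, 9_890, 10

print(round(precision(tp, fp), 2))         # 0.74
print(round(accuracy(tp, fp, tn, fn), 4))  # 0.9964
```

Because true negatives dominate, accuracy stays close to 1 even though roughly a quarter of the flagged incidents are false positives. Precision exposes that cost directly, which is why it is the more informative metric here.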

Newest rules produce most incidents

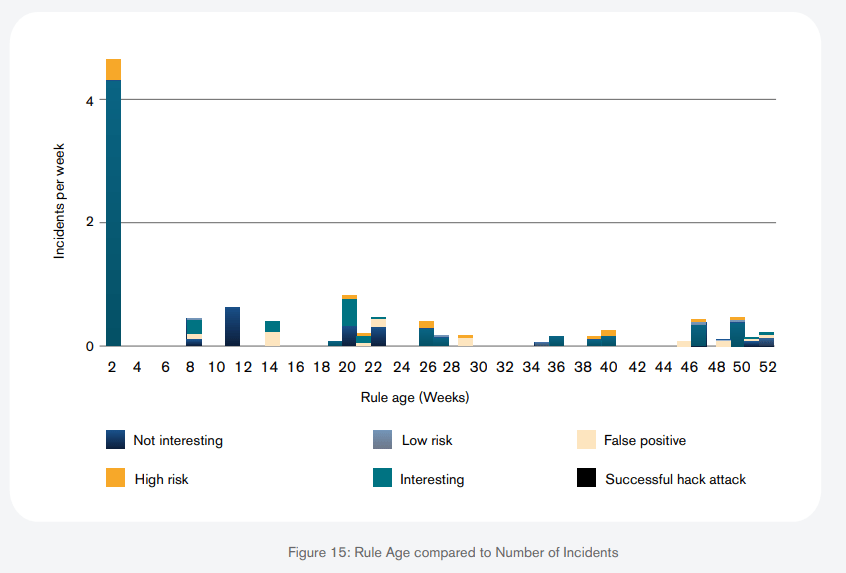

The figure above plots the age of rules against the number of incidents that a rule of that age triggers on average per week. For instance, the spike on the left represents rules that are a week old. Such a week-old rule would, on average, produce around four incidents per week. This means that it’s the newest rules that produce the most incidents. The implications of this are twofold. Firstly, it emphasizes the importance of staying up to date with the global threat landscape. It is insufficient to rely on rules and rulesets that perfectly protected your organization once upon a time. SOC teams need to continuously scour for new threats and perform their own research to keep their organization and their clients secure. Secondly, rules seem to lose their relevance and effectiveness as time goes by. Probably obvious, yes, but it hints at another type of NIDS optimization: pruning rules to address performance issues. While disabling any and all rules that pass a certain age threshold might not be the wisest of decisions, SOC teams can examine old rules to determine which ones produce results that are less than satisfactory. Such rules can then potentially be disabled, depending, of course, on the type of rule, the severity of the threat it is designed to detect, its precision (or any other metric), etc.
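A curve like this could be computed roughly as follows. This is a sketch under our own assumptions, not the procedure used in the study: rule creation dates and incident dates are available per rule, rules stay active until the end of the observation window, and age 0 denotes a rule’s first week. The function and variable names are hypothetical.

```python
from collections import Counter
from datetime import date


def incidents_per_week_by_age(rule_created, incidents, observed_until):
    """Average number of incidents per rule per week, keyed by rule age in weeks.

    rule_created:   dict mapping rule id -> creation date
    incidents:      list of (rule id, incident date) pairs
    observed_until: last day of the observation window
    """
    # Count incidents by the age (in whole weeks) of the triggering rule.
    incident_counts = Counter()
    for rule_id, day in incidents:
        incident_counts[(day - rule_created[rule_id]).days // 7] += 1

    # Count how many rules were observed at each age.
    rules_at_age = Counter()
    for created in rule_created.values():
        max_age = (observed_until - created).days // 7
        for age in range(max_age + 1):
            rules_at_age[age] += 1

    return {age: incident_counts[age] / n for age, n in sorted(rules_at_age.items())}


# Toy data (assumption, for illustration only).
rule_created = {"r1": date(2018, 1, 1), "r2": date(2018, 1, 15)}
incidents = [
    ("r1", date(2018, 1, 2)),
    ("r1", date(2018, 1, 3)),
    ("r2", date(2018, 1, 16)),
    ("r1", date(2018, 1, 20)),
]
curve = incidents_per_week_by_age(rule_created, incidents, date(2018, 1, 28))
print(curve)  # {0: 1.5, 1: 0.0, 2: 1.0, 3: 0.0}
```

Dividing by the number of rules observed at each age matters: without that normalization, old ages would look quiet simply because fewer rules have existed long enough to reach them.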

99.8% of (detected) true positive incidents caught before becoming successful attacks

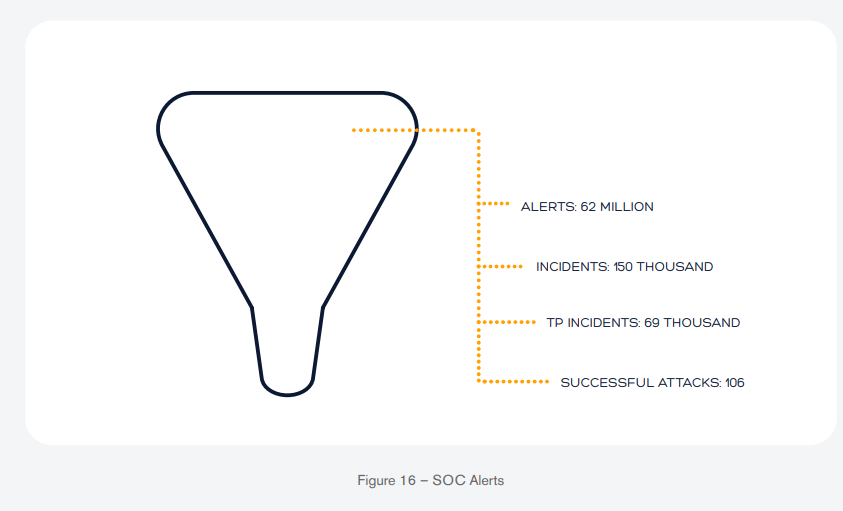

Finally, the image above is a visual representation of all the alerts we analyzed and how they are condensed into incidents, true positive incidents, and successful attacks. For the period covered by the data made available for this analysis (mid-2009 to mid-2018), we counted 62 million alerts that our SOC analysts processed. They were able to condense these 62 million alerts into 150,000 incidents. These 150,000 incidents were in turn narrowed down to 69,000 true positive incidents. And finally, out of the 69,000, only 106 incidents turned out to be successful attacks. With some quick math we can deduce that 99.8% of all true positive incidents detected by the SOC were discovered before they were able to cause any serious damage to the organizations that the SOC aims to protect on a daily basis. I’ll point out, though, that this number ignores the potential false negatives that were able to evade detection. This is, naturally, a number that we can’t easily measure accurately. However, we’re certain it doesn’t run high enough to significantly alter the result, and so we’re confident in the accuracy of the computed percentage.
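The quick math can be spelled out in a few lines of Python. The counts are the rounded figures quoted in the text, so the derived ratios are approximate; the variable names are our own.

```python
# Rounded counts as quoted in the text.
alerts = 62_000_000
incidents = 150_000
true_positives = 69_000
successful_attacks = 106

# Share of true positive incidents that did not become successful attacks.
caught_in_time = 1 - successful_attacks / true_positives
print(f"{caught_in_time:.1%}")  # 99.8%

# Two further ratios that follow from the same funnel.
alerts_per_incident = alerts / incidents
true_positive_share = true_positives / incidents
print(round(alerts_per_incident))    # 413
print(f"{true_positive_share:.0%}")  # 46%
```

Note that the denominator is the number of detected true positive incidents. Attacks that evaded detection altogether appear nowhere in this funnel, which is the false-negative caveat raised above.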

Conclusion

With these results, we demonstrate that signature-based systems are still effective, provided that they are managed properly, for example by keeping them up to date with the newest threat intelligence. Of course, future work is still needed to compare the signature-based approach to other types of intrusion detection, whether network-based, host-based or application-based. Only once that comparison is done will we be able to determine whether these signature-based systems really do need to be phased out as archaic and obsolete pieces of technology or if they remain an indispensable part of our network security. As it currently stands, however, the fact that they continue to provide value and security to the organizations that use them is indisputable. This was a quick overview of a few findings from our study. If you’re curious for more, you’re welcome to take a look at the full paper (https://dl.acm.org/doi/abs/10.1145/3488932.3517412).

References

[1] http://web.archive.org/web/20201209162847/https://bricata.com/blog/ids-is-dead/

[2] Shone, N., Ngoc, T.N., Phai, V.D. and Shi, Q., 2018. A deep learning approach to network intrusion detection. IEEE transactions on emerging topics in computational intelligence, 2(1), pp.41-50.

[3] Vigna, G., 2010, December. Network intrusion detection: dead or alive?. In Proceedings of the 26th Annual Computer Security Applications Conference (pp. 117-126).

[4] Mirsky, Y., Doitshman, T., Elovici, Y. and Shabtai, A., 2018. Kitsune: an ensemble of autoencoders for online network intrusion detection. arXiv preprint arXiv:1802.09089.

[5] He, H. and Garcia, E.A., 2009. Learning from imbalanced data. IEEE Transactions on knowledge and data engineering, 21(9), pp.1263-1284.